Imbalanced datasets pose significant challenges in data science by skewing model performance toward the majority class, often resulting in poor predictive accuracy for minority classes. Balanced datasets, achieved through techniques like oversampling or undersampling, enable models to learn patterns evenly across all classes, improving overall classification metrics. Selecting the appropriate balancing method enhances model robustness and ensures reliable decision-making in real-world applications.

Table of Comparison

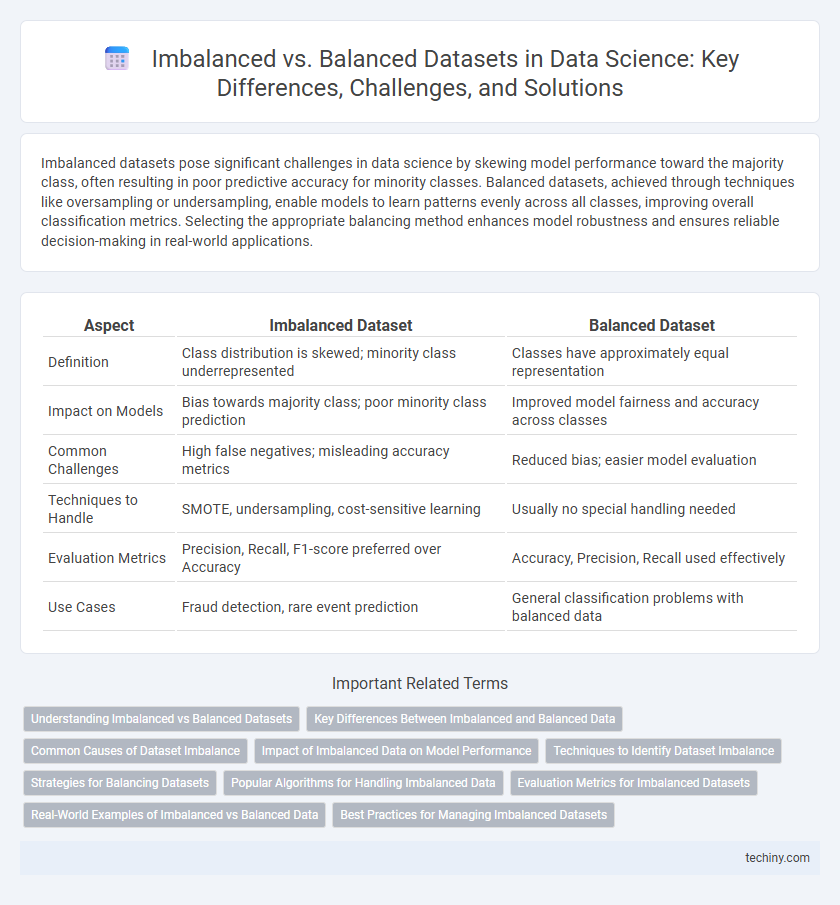

| Aspect | Imbalanced Dataset | Balanced Dataset |

|---|---|---|

| Definition | Class distribution is skewed; minority class underrepresented | Classes have approximately equal representation |

| Impact on Models | Bias towards majority class; poor minority class prediction | Improved model fairness and accuracy across classes |

| Common Challenges | High false negatives; misleading accuracy metrics | Reduced bias; easier model evaluation |

| Techniques to Handle | SMOTE, undersampling, cost-sensitive learning | Usually no special handling needed |

| Evaluation Metrics | Precision, Recall, F1-score preferred over Accuracy | Accuracy, Precision, Recall used effectively |

| Use Cases | Fraud detection, rare event prediction | General classification problems with balanced data |

Understanding Imbalanced vs Balanced Datasets

Imbalanced datasets contain a disproportionate distribution of classes, often leading to biased model predictions that favor the majority class. Balanced datasets feature approximately equal representation of all classes, enabling algorithms like logistic regression and decision trees to learn more effectively and improve classification accuracy. Addressing class imbalance with techniques such as SMOTE, undersampling, or cost-sensitive learning enhances model performance and generalization in data science projects.

Key Differences Between Imbalanced and Balanced Data

Imbalanced datasets contain significantly unequal class distributions, which can lead to biased model predictions favoring the majority class, whereas balanced datasets maintain roughly equal representation across classes, promoting fairer model training. Key differences include the risk of overfitting in imbalanced data and reduced generalization compared to balanced data that supports more robust and reliable performance metrics. Handling imbalanced data often requires techniques such as resampling, synthetic data generation, or cost-sensitive algorithms, unlike balanced datasets where standard training methods typically suffice.

Common Causes of Dataset Imbalance

Imbalanced datasets in data science often arise from naturally occurring disparities in class distribution, such as rare event occurrences or skewed population samples. Data collection methods introducing bias, incomplete data gathering, and labeling errors further exacerbate imbalance by overrepresenting certain classes while underrepresenting others. This imbalance challenges model training, reducing predictive accuracy and necessitating specialized techniques like resampling or synthetic data generation to address the skewed class representation.

Impact of Imbalanced Data on Model Performance

Imbalanced datasets cause biased model predictions by overrepresenting the majority class, leading to poor recall and precision for minority classes. This imbalance skews accuracy metrics, often masking the model's inability to detect critical rare events. Addressing class imbalance through techniques like resampling or synthetic data generation improves model robustness and predictive reliability.

Techniques to Identify Dataset Imbalance

Techniques to identify dataset imbalance in data science include calculating class distribution percentages, using visualization tools such as histograms or bar charts to reveal skewed data, and applying metrics like the imbalance ratio or Gini index. Confusion matrix analysis during model evaluation can also highlight performance disparities caused by imbalance. Employing these methods enables data scientists to detect and address imbalanced datasets effectively for improved model accuracy.

Strategies for Balancing Datasets

Strategies for balancing datasets in data science focus on techniques like oversampling the minority class and undersampling the majority class to address imbalanced datasets. Synthetic data generation methods such as SMOTE (Synthetic Minority Over-sampling Technique) enhance model performance by creating realistic minority samples. Ensemble methods and cost-sensitive learning also improve classification accuracy by emphasizing the importance of minority classes.

Popular Algorithms for Handling Imbalanced Data

Popular algorithms for handling imbalanced datasets in data science include SMOTE (Synthetic Minority Over-sampling Technique), which generates synthetic samples to balance class distribution, and ADASYN, an adaptive variant focusing on harder-to-learn minority examples. Cost-sensitive learning modifies algorithm penalties to prioritize minority classes, while ensemble methods like Random Forest and XGBoost integrate resampling techniques to improve minority class representation. Techniques such as balanced bagging and threshold moving further enhance model performance on imbalanced data by addressing bias and altering decision boundaries.

Evaluation Metrics for Imbalanced Datasets

Evaluation metrics for imbalanced datasets prioritize measures like Precision, Recall, F1-score, and the Area Under the Receiver Operating Characteristic Curve (AUROC) to better capture model performance across minority classes. Unlike accuracy, which can be misleading in skewed datasets, metrics such as Matthews Correlation Coefficient (MCC) and Cohen's Kappa provide more reliable insights by accounting for true positives, false positives, false negatives, and true negatives. Emphasizing these metrics ensures a more nuanced evaluation, enabling data scientists to optimize models for rare event detection and reduce bias in predictions.

Real-World Examples of Imbalanced vs Balanced Data

Imbalanced datasets frequently appear in fraud detection, where fraudulent transactions represent a tiny fraction of total records, complicating model accuracy. Balanced datasets, like customer reviews equally split between positive and negative sentiments, enable more straightforward classification and evaluation. Addressing imbalance through techniques such as SMOTE or class weighting improves predictive performance in real-world scenarios like medical diagnosis or credit scoring.

Best Practices for Managing Imbalanced Datasets

Addressing imbalanced datasets in data science requires techniques such as oversampling minority classes using SMOTE or ADASYN and undersampling majority classes to prevent model bias. Employing evaluation metrics like F1-score, precision-recall curves, and ROC-AUC provides more insightful model performance analysis than accuracy alone. Integrating ensemble methods like Random Forest or Gradient Boosting further enhances predictive power when managing skewed class distributions.

imbalanced dataset vs balanced dataset Infographic

techiny.com

techiny.com