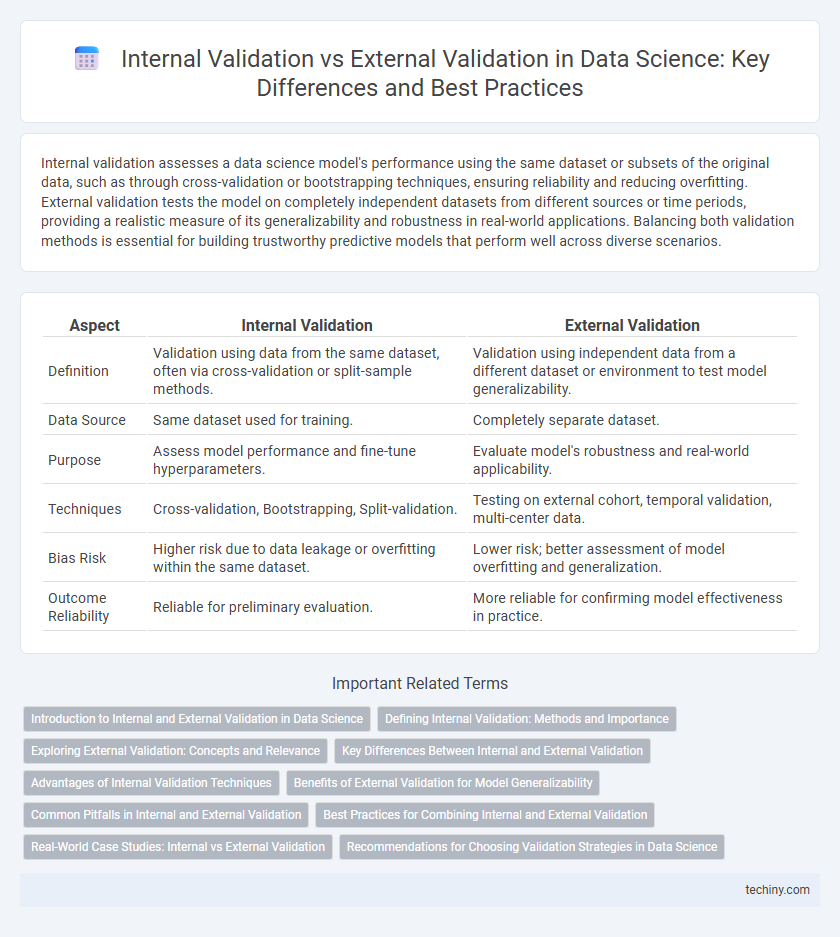

Internal validation assesses a data science model's performance using the same dataset or subsets of the original data, such as through cross-validation or bootstrapping techniques, ensuring reliability and reducing overfitting. External validation tests the model on completely independent datasets from different sources or time periods, providing a realistic measure of its generalizability and robustness in real-world applications. Balancing both validation methods is essential for building trustworthy predictive models that perform well across diverse scenarios.

Table of Comparison

| Aspect | Internal Validation | External Validation |

|---|---|---|

| Definition | Validation using data from the same dataset, often via cross-validation or split-sample methods. | Validation using independent data from a different dataset or environment to test model generalizability. |

| Data Source | Same dataset used for training. | Completely separate dataset. |

| Purpose | Assess model performance and fine-tune hyperparameters. | Evaluate model's robustness and real-world applicability. |

| Techniques | Cross-validation, Bootstrapping, Split-validation. | Testing on external cohort, temporal validation, multi-center data. |

| Bias Risk | Higher risk due to data leakage or overfitting within the same dataset. | Lower risk; better assessment of model overfitting and generalization. |

| Outcome Reliability | Reliable for preliminary evaluation. | More reliable for confirming model effectiveness in practice. |

Introduction to Internal and External Validation in Data Science

Internal validation in data science measures a model's performance using the same dataset or a subset thereof through techniques like cross-validation or bootstrapping, ensuring robustness within the training data. External validation assesses the model's generalizability by testing it on entirely independent datasets collected from different sources or time periods, which helps identify overfitting and real-world applicability. Both validation types are crucial for developing reliable predictive models and ensuring consistent performance across varying conditions and populations.

Defining Internal Validation: Methods and Importance

Internal validation in data science involves techniques such as cross-validation, bootstrapping, and split-sample testing to assess model performance using the original dataset. These methods help detect overfitting, estimate model generalizability, and optimize hyperparameters before external deployment. Ensuring robust internal validation is crucial for building reliable predictive models that perform well on unseen data.

Exploring External Validation: Concepts and Relevance

External validation assesses a data science model's performance on independent datasets distinct from those used during training, ensuring generalizability across diverse real-world scenarios. This process helps identify overfitting by evaluating model accuracy, recall, precision, and AUC metrics on external data sources. Implementing external validation is crucial for deploying robust predictive analytics in domains like healthcare, finance, and marketing where model reliability and unbiased inference are paramount.

Key Differences Between Internal and External Validation

Internal validation uses techniques like cross-validation and bootstrapping within the original dataset to assess model performance and prevent overfitting. External validation involves testing the model on independent, unseen datasets to evaluate generalizability and real-world applicability. Key differences include data source origin, with internal validation relying on the training data and external validation using separate external data, impacting the reliability of performance metrics.

Advantages of Internal Validation Techniques

Internal validation techniques in data science offer the advantage of efficiently assessing model performance using the original dataset through methods like cross-validation and bootstrapping. These techniques provide robust estimates of model accuracy and help prevent overfitting by ensuring the model generalizes well within the data it was trained on. Furthermore, internal validation reduces the need for additional external datasets, saving time and resources during the model development process.

Benefits of External Validation for Model Generalizability

External validation assesses a data science model using independent datasets, ensuring its performance extends beyond the original training environment. This process quantifies model generalizability by identifying overfitting and uncovering biases that internal validation cannot detect. Improved external validation directly correlates with more reliable predictive analytics in real-world applications across diverse populations and scenarios.

Common Pitfalls in Internal and External Validation

Common pitfalls in internal and external validation include data leakage, overfitting, and selection bias. Internal validation often suffers from optimistic performance estimates due to using the same dataset for training and testing, whereas external validation faces challenges in generalizability when the external dataset differs significantly from the training set. Ensuring proper sampling, representative datasets, and robust evaluation metrics are crucial to mitigate these validation issues in data science models.

Best Practices for Combining Internal and External Validation

Combining internal and external validation in data science ensures model robustness and generalizability by leveraging cross-validation methods within the training dataset alongside testing on independent external datasets. Best practices include maintaining consistent preprocessing steps across datasets, using stratified sampling to preserve class distribution, and employing performance metrics that reflect real-world applicability. This hybrid approach minimizes overfitting risks and enhances the credibility of predictive models in diverse operational conditions.

Real-World Case Studies: Internal vs External Validation

Real-world case studies in data science highlight the crucial differences between internal and external validation, where internal validation uses a subset of the original dataset, such as holdout or cross-validation, to assess model performance and prevent overfitting. External validation involves testing the model on entirely independent data from different sources or populations, ensuring robustness and generalizability in practical applications. Effective model deployment relies on combining both validation methods to achieve reliable and unbiased predictive insights.

Recommendations for Choosing Validation Strategies in Data Science

Choosing a validation strategy in data science depends on the dataset size, model complexity, and deployment goals. Internal validation methods such as k-fold cross-validation are recommended for smaller datasets to ensure robust model evaluation without overfitting. External validation using independent datasets is crucial for assessing model generalizability and real-world performance, especially when deployment in varied environments is anticipated.

internal validation vs external validation Infographic

techiny.com

techiny.com