The kernel trick enables support vector machines to implicitly map input features into high-dimensional spaces without explicit computation, enhancing computational efficiency in non-linear classification tasks. Polynomial features explicitly generate new features by raising original features to specified powers, increasing dimensionality and enabling linear models to capture non-linear relationships. While polynomial features increase the feature space size directly, the kernel trick achieves similar effects implicitly, often resulting in reduced computational complexity and improved model performance.

Table of Comparison

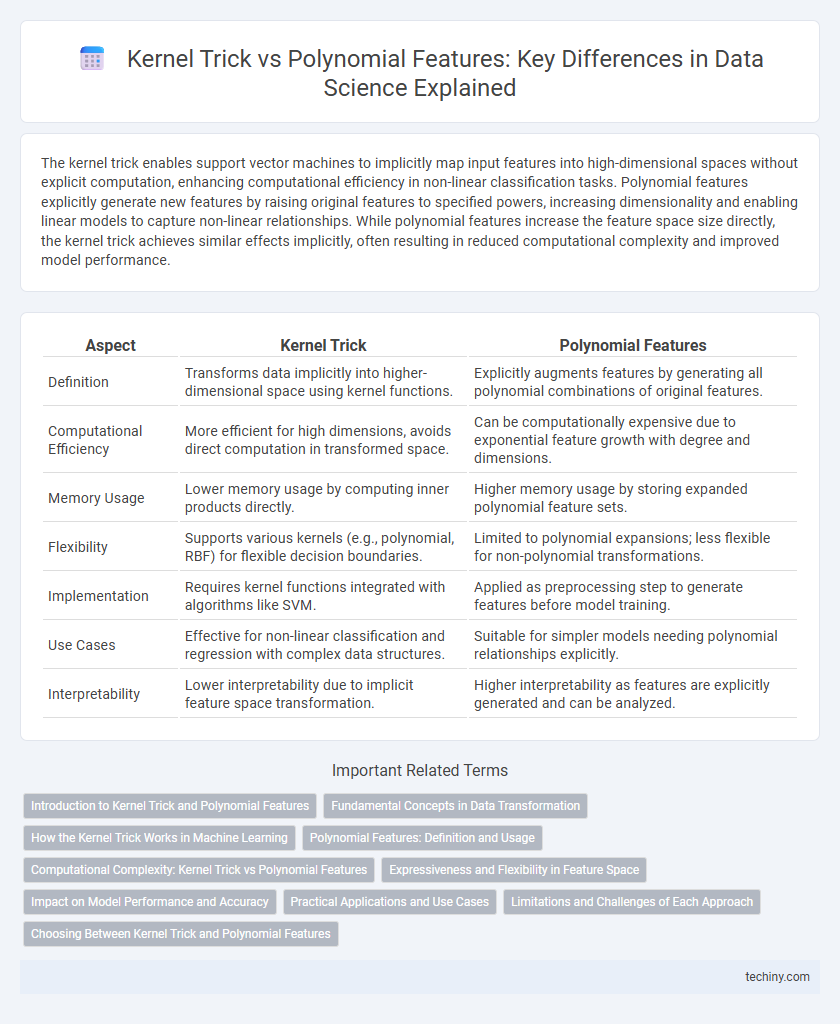

| Aspect | Kernel Trick | Polynomial Features |

|---|---|---|

| Definition | Transforms data implicitly into higher-dimensional space using kernel functions. | Explicitly augments features by generating all polynomial combinations of original features. |

| Computational Efficiency | More efficient for high dimensions, avoids direct computation in transformed space. | Can be computationally expensive due to exponential feature growth with degree and dimensions. |

| Memory Usage | Lower memory usage by computing inner products directly. | Higher memory usage by storing expanded polynomial feature sets. |

| Flexibility | Supports various kernels (e.g., polynomial, RBF) for flexible decision boundaries. | Limited to polynomial expansions; less flexible for non-polynomial transformations. |

| Implementation | Requires kernel functions integrated with algorithms like SVM. | Applied as preprocessing step to generate features before model training. |

| Use Cases | Effective for non-linear classification and regression with complex data structures. | Suitable for simpler models needing polynomial relationships explicitly. |

| Interpretability | Lower interpretability due to implicit feature space transformation. | Higher interpretability as features are explicitly generated and can be analyzed. |

Introduction to Kernel Trick and Polynomial Features

The kernel trick enables algorithms like Support Vector Machines to operate in high-dimensional spaces without explicitly computing the coordinates, improving efficiency in nonlinear classification tasks. Polynomial features transform input data by creating new features from polynomial combinations of existing ones, allowing linear models to capture nonlinear relationships. Both methods enhance model flexibility, with kernel trick leveraging implicit mappings and polynomial features relying on explicit feature expansion.

Fundamental Concepts in Data Transformation

Kernel trick leverages implicit mapping of input data into high-dimensional feature spaces without explicit computation, enabling efficient handling of complex patterns in algorithms like support vector machines. Polynomial features explicitly transform input variables into higher-degree polynomial terms, increasing dimensionality and capturing nonlinear relationships but often at the cost of computational complexity. Understanding these fundamental concepts in data transformation is crucial for selecting appropriate methods to improve model performance and to balance computational efficiency with representation power.

How the Kernel Trick Works in Machine Learning

The kernel trick enables algorithms to operate in high-dimensional feature spaces without explicitly computing the coordinates, allowing non-linear patterns to be captured efficiently. By implicitly mapping input data into a higher-dimensional space using kernel functions such as the Gaussian or polynomial kernel, it avoids the computational cost of polynomial feature expansion. This technique is fundamental in support vector machines and other kernel-based methods, enhancing model flexibility and performance on complex datasets.

Polynomial Features: Definition and Usage

Polynomial features transform input data by creating new features that are powers and interaction terms of the original variables, enabling linear models to capture nonlinear relationships. This technique is commonly used in regression and classification tasks to enhance model complexity without explicitly changing the algorithm. Polynomial feature expansion, often implemented via tools like scikit-learn's PolynomialFeatures, improves model accuracy by increasing the feature space dimensionality, allowing linear models to fit curves and complex patterns in data.

Computational Complexity: Kernel Trick vs Polynomial Features

The kernel trick significantly reduces computational complexity by implicitly mapping data into higher-dimensional spaces without explicitly calculating polynomial features, which can grow exponentially with feature degree and sample size. Polynomial feature expansion increases input dimensions directly, causing increased memory usage and slower model training, especially for large datasets or high-degree polynomials. Support Vector Machines (SVMs) using kernel functions maintain efficiency by operating in the original feature space while capturing nonlinear relationships, making them preferable for scalable machine learning tasks.

Expressiveness and Flexibility in Feature Space

The kernel trick enables implicit mapping of input data into high-dimensional feature spaces without explicit computation, allowing complex decision boundaries with efficient computational cost. Polynomial features explicitly transform input variables into polynomial combinations, increasing expressiveness but often leading to higher dimensionality and potential overfitting. Kernel methods provide greater flexibility by adapting the feature space implicitly, whereas polynomial features require manual selection of the degree and interaction terms, limiting adaptability.

Impact on Model Performance and Accuracy

The kernel trick enhances model performance by implicitly mapping inputs into high-dimensional feature spaces without explicit computation, enabling efficient handling of complex, non-linear relationships and improving accuracy with fewer computational resources. Polynomial features explicitly create new features by raising input variables to specified degrees, potentially increasing model complexity and risk of overfitting, which can negatively impact accuracy on unseen data. Kernel methods, such as the radial basis function (RBF) kernel, often outperform polynomial expansion in terms of balancing bias and variance, resulting in more robust generalization and higher predictive accuracy.

Practical Applications and Use Cases

Kernel trick is widely used in Support Vector Machines for efficiently handling high-dimensional, non-linear data without explicitly computing polynomial features, making it ideal for complex pattern recognition in image and speech recognition. Polynomial features, on the other hand, enhance linear models by explicitly adding higher-degree terms, improving performance in scenarios like regression analysis and feature engineering when interpretability and model simplicity are prioritized. Practical applications favor kernel methods in large-scale classification tasks, while polynomial features excel in smaller datasets requiring clear insight into feature interactions.

Limitations and Challenges of Each Approach

Kernel trick methods excel in handling high-dimensional feature spaces without explicitly computing polynomial features but face computational intensity and scalability issues with large datasets. Polynomial features transform data explicitly, increasing model interpretability but often lead to overfitting and a significant rise in dimensionality, causing increased computational costs. Both approaches encounter limitations in balancing model complexity and computational efficiency, requiring careful selection based on dataset size and feature behavior.

Choosing Between Kernel Trick and Polynomial Features

Choosing between the kernel trick and polynomial features depends on dataset size, complexity, and computational resources. Kernel trick enables efficient handling of high-dimensional feature spaces without explicitly computing polynomial features, ideal for large datasets with nonlinear relationships. Polynomial features explicitly generate interaction terms and degrees, offering interpretability but increasing dimensionality and risk of overfitting on small datasets.

kernel trick vs polynomial features Infographic

techiny.com

techiny.com