K-Nearest Neighbors (KNN) excels in simplicity and effectiveness for small datasets by classifying data points based on the closest training samples in feature space. Support Vector Machines (SVM) provide robust performance in high-dimensional spaces using hyperplanes to maximize the margin between classes, handling non-linear boundaries with kernel functions. Choosing between KNN and SVM depends on dataset size, dimensionality, and the need for interpretability versus computational efficiency.

Table of Comparison

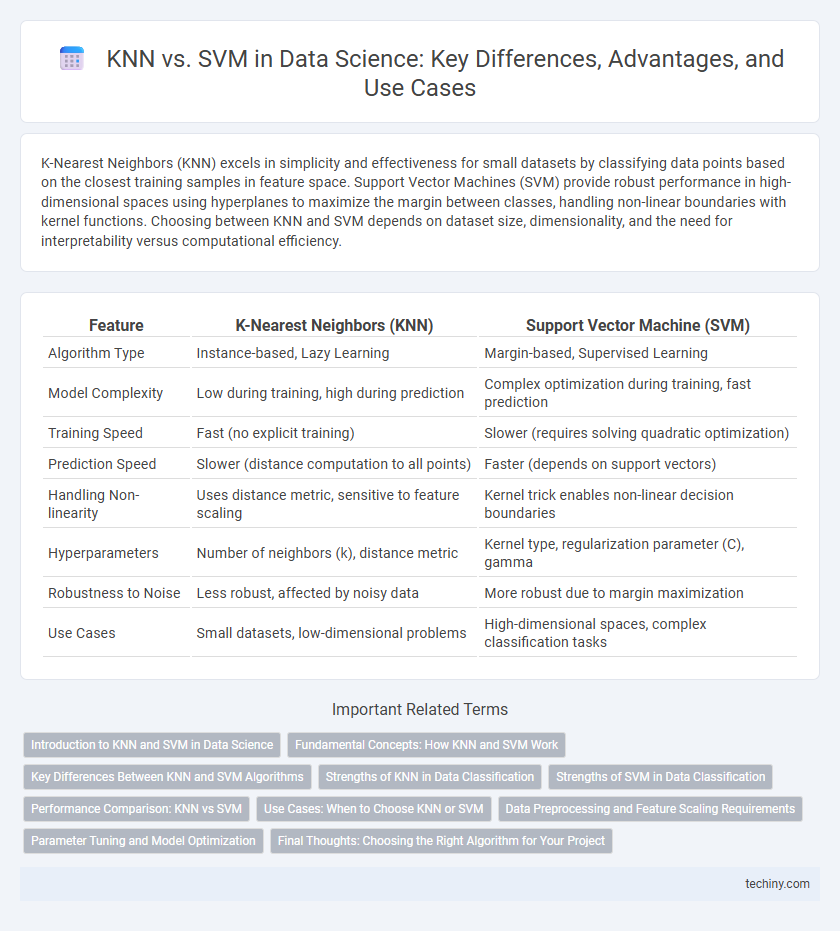

| Feature | K-Nearest Neighbors (KNN) | Support Vector Machine (SVM) |

|---|---|---|

| Algorithm Type | Instance-based, Lazy Learning | Margin-based, Supervised Learning |

| Model Complexity | Low during training, high during prediction | Complex optimization during training, fast prediction |

| Training Speed | Fast (no explicit training) | Slower (requires solving quadratic optimization) |

| Prediction Speed | Slower (distance computation to all points) | Faster (depends on support vectors) |

| Handling Non-linearity | Uses distance metric, sensitive to feature scaling | Kernel trick enables non-linear decision boundaries |

| Hyperparameters | Number of neighbors (k), distance metric | Kernel type, regularization parameter (C), gamma |

| Robustness to Noise | Less robust, affected by noisy data | More robust due to margin maximization |

| Use Cases | Small datasets, low-dimensional problems | High-dimensional spaces, complex classification tasks |

Introduction to KNN and SVM in Data Science

K-Nearest Neighbors (KNN) is a simple, instance-based learning algorithm used in data science for classification and regression by measuring the distance between data points to identify the closest neighbors. Support Vector Machines (SVM) create hyperplanes in a multidimensional space that optimally separate different classes, making SVM highly effective for high-dimensional datasets. Both algorithms serve crucial roles in predictive modeling, with KNN excelling in easy-to-interpret scenarios and SVM providing robustness in complex classification tasks.

Fundamental Concepts: How KNN and SVM Work

K-Nearest Neighbors (KNN) operates by classifying data points based on the majority label of their closest neighbors in feature space, relying on distance metrics like Euclidean or Manhattan. Support Vector Machine (SVM) constructs an optimal hyperplane that maximizes the margin between different classes, utilizing kernel functions to handle non-linear separations. KNN is instance-based and non-parametric, while SVM is a margin-based classifier with strong theoretical guarantees on generalization.

Key Differences Between KNN and SVM Algorithms

K-Nearest Neighbors (KNN) is a non-parametric, instance-based learning algorithm that classifies data points based on the majority label of their closest neighbors, making it simple but sensitive to noisy and high-dimensional data. Support Vector Machine (SVM) constructs a hyperplane or set of hyperplanes in a high-dimensional space to optimize the margin between different classes, offering strong performance with clear margin separation and robustness to overfitting through kernel functions. While KNN relies on distance metrics and is computationally intensive during prediction, SVM requires optimization during training and excels in handling linearly and non-linearly separable data.

Strengths of KNN in Data Classification

K-Nearest Neighbors (KNN) excels in data classification by offering simplicity and effective handling of multi-class problems without requiring complex model training. Its instance-based learning approach enables adaptability to non-linear decision boundaries by leveraging local data patterns. KNN also naturally incorporates new data points without retraining, making it ideal for dynamic datasets where quick updates are essential.

Strengths of SVM in Data Classification

Support Vector Machines (SVM) excel in data classification due to their ability to create optimal hyperplanes that maximize the margin between classes, enhancing generalization on unseen data. SVM effectively handles high-dimensional data and works well with non-linear boundaries through kernel functions like the radial basis function (RBF), making it versatile across various data distributions. Its robustness against overfitting, especially in cases with clear class separability, positions SVM as a powerful tool compared to K-Nearest Neighbors (KNN) in complex classification tasks.

Performance Comparison: KNN vs SVM

K-Nearest Neighbors (KNN) often excels in simplicity and interpretability but can suffer from scalability issues with large datasets, leading to slower prediction times. Support Vector Machines (SVM) generally provide higher accuracy and better generalization on high-dimensional data due to their margin maximization and kernel trick capabilities. While KNN's performance heavily depends on the choice of k and distance metric, SVMs typically achieve superior classification boundaries, especially in complex, non-linear problems.

Use Cases: When to Choose KNN or SVM

K-Nearest Neighbors (KNN) is ideal for small datasets with low dimensionality and when interpretability and ease of implementation are priorities, commonly used in image recognition and recommendation systems. Support Vector Machines (SVM) excel in high-dimensional spaces and are effective for complex classification problems such as text categorization and bioinformatics, especially when the data is not linearly separable. Choosing KNN suits scenarios requiring real-time prediction with low computational overhead, while SVM provides robust performance with clear decision boundaries in noisy datasets.

Data Preprocessing and Feature Scaling Requirements

K-Nearest Neighbors (KNN) heavily depends on feature scaling since it calculates distances between data points, making normalization or standardization essential to improve accuracy and convergence. Support Vector Machines (SVM) also benefit from feature scaling as it helps optimize the margin maximization process, but SVM is more robust to unscaled features compared to KNN. Both algorithms require careful data preprocessing, including handling missing values and encoding categorical variables, to ensure reliable model performance in classification tasks.

Parameter Tuning and Model Optimization

K-Nearest Neighbors (KNN) requires parameter tuning focused primarily on selecting the optimal number of neighbors (k) and distance metrics like Euclidean or Manhattan to balance bias-variance tradeoff and improve classification accuracy. Support Vector Machines (SVM) demand careful optimization of hyperparameters such as the regularization parameter (C), kernel type (linear, polynomial, RBF), and kernel-specific parameters like gamma to maximize margin and minimize classification error. Grid search and cross-validation are essential techniques in both models for systematic exploration of parameter combinations to achieve robust, high-performance predictive models.

Final Thoughts: Choosing the Right Algorithm for Your Project

K-Nearest Neighbors (KNN) excels in simplicity and works well with small to medium-sized datasets but struggles with high-dimensional data and large-scale problems. Support Vector Machines (SVM) offer robust performance in high-dimensional spaces and handle non-linear boundaries effectively using kernel tricks, making them ideal for complex classification tasks. Selecting the right algorithm depends on data characteristics, computational resources, and the specific project goals for accuracy and interpretability.

KNN vs SVM Infographic

techiny.com

techiny.com