Linear regression predicts continuous numerical values by modeling the relationship between dependent and independent variables, making it ideal for forecasting and trend analysis. Logistic regression, on the other hand, estimates probabilities for categorical outcomes using a sigmoid function, excelling in classification tasks like binary or multinomial class prediction. Both algorithms are fundamental in data science for different purposes: linear regression for regression problems and logistic regression for classification challenges.

Table of Comparison

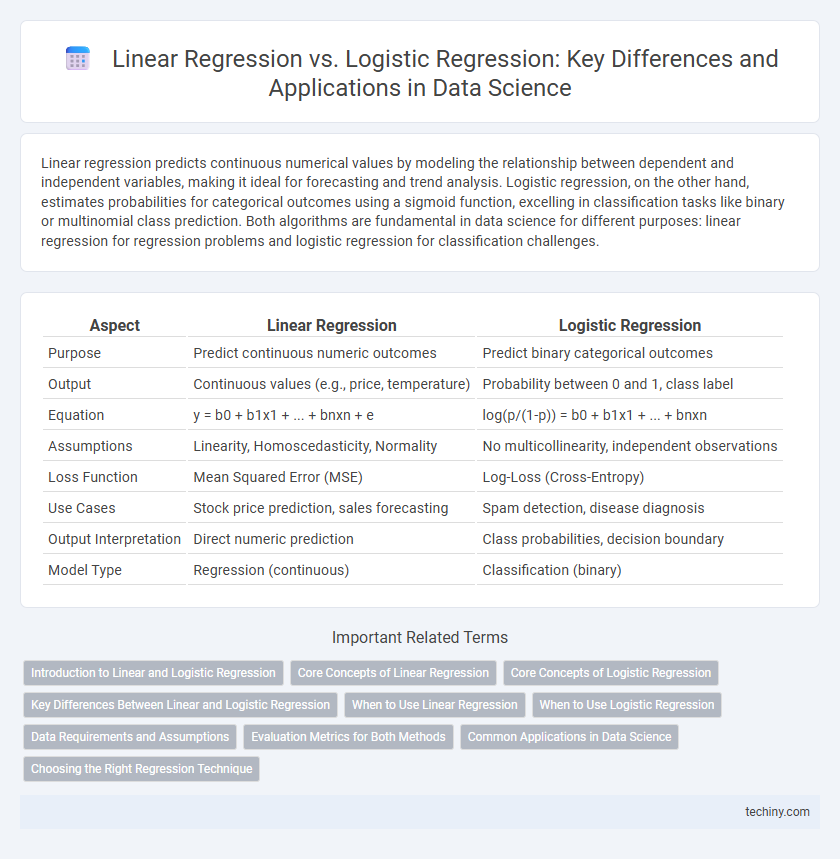

| Aspect | Linear Regression | Logistic Regression |
|---|---|---|
| Purpose | Predict continuous numeric outcomes | Predict binary categorical outcomes |
| Output | Continuous values (e.g., price, temperature) | Probability between 0 and 1, class label |
| Equation | y = b0 + b1x1 + ... + bnxn + e | log(p/(1-p)) = b0 + b1x1 + ... + bnxn |
| Assumptions | Linearity, Homoscedasticity, Normality | No multicollinearity, independent observations |
| Loss Function | Mean Squared Error (MSE) | Log-Loss (Cross-Entropy) |
| Use Cases | Stock price prediction, sales forecasting | Spam detection, disease diagnosis |
| Output Interpretation | Direct numeric prediction | Class probabilities, decision boundary |
| Model Type | Regression (continuous) | Classification (binary) |

Introduction to Linear and Logistic Regression

Linear regression predicts continuous outcomes by modeling the relationship between dependent and independent variables using a best-fit line through data points. Logistic regression estimates the probability of a binary outcome by applying a logistic function to model categorical dependent variables. Both methods serve fundamental roles in data science for predictive analytics, with linear regression addressing regression problems and logistic regression solving classification tasks.

Core Concepts of Linear Regression

Linear regression models the relationship between a continuous dependent variable and one or more independent variables by fitting a linear equation to observed data. It estimates coefficients by minimizing the sum of squared residuals, providing predictions through the equation y = b0 + b1x1 + ... + bnxn. The method assumes linearity, homoscedasticity, independence, and normality of errors, which are essential for reliable inference and prediction.
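For a single predictor, minimizing the sum of squared residuals has a closed-form solution. The sketch below is a minimal illustration in plain Python; the function names `fit_simple_ols` and `predict` and the toy data are assumptions made for this example, not part of any library.

```python
def fit_simple_ols(xs, ys):
    """Return (b0, b1) minimizing the sum of squared residuals for y = b0 + b1*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: co-variation of x and y divided by the variation of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b1 = sxy / sxx
    # Intercept: forces the fitted line through the point of means.
    b0 = mean_y - b1 * mean_x
    return b0, b1


def predict(b0, b1, x):
    """Apply the fitted equation y = b0 + b1*x to a new input."""
    return b0 + b1 * x


# Toy data lying exactly on y = 1 + 2x (hypothetical values).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
b0, b1 = fit_simple_ols(xs, ys)
forecast = predict(b0, b1, 6.0)
```

With several predictors the same idea is usually solved with matrix methods from a numerical library rather than by hand.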

Core Concepts of Logistic Regression

Logistic regression models the probability of a binary outcome using the logistic function to map predicted values between 0 and 1, enabling classification tasks. It estimates the relationship between independent variables and a categorical dependent variable through maximum likelihood estimation rather than ordinary least squares. Because the log-odds are a linear function of the predictors, the decision boundary is itself linear in feature space; what sets logistic regression apart from linear regression is that its output is a bounded probability rather than an unbounded numeric value, which makes it well suited to classification problems in data science.
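To make the logistic function and maximum likelihood estimation concrete, here is a minimal sketch with one predictor. It maximizes the likelihood by running plain gradient descent on the average log-loss. The function names, learning rate, step count, and toy data are all assumptions chosen for illustration; production libraries typically use faster solvers.

```python
import math


def sigmoid(z):
    """Map a log-odds value z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(b0, b1, xs, ys):
    """Average negative log-likelihood (cross-entropy) for labels in {0, 1}."""
    eps = 1e-12  # keeps log() away from 0
    total = 0.0
    for x, y in zip(xs, ys):
        p = min(max(sigmoid(b0 + b1 * x), eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(xs)


def fit_logistic(xs, ys, lr=0.3, steps=3000):
    """Fit log(p/(1-p)) = b0 + b1*x by gradient descent on the log-loss."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the average log-loss: mean of (predicted p - label).
        residuals = [sigmoid(b0 + b1 * x) - y for x, y in zip(xs, ys)]
        g0 = sum(residuals) / n
        g1 = sum(r * x for r, x in zip(residuals, xs)) / n
        b0 -= lr * g0
        b1 -= lr * g1
    return b0, b1


# Hypothetical, deliberately overlapping classes so the fit stays finite.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(xs, ys)
p_low = sigmoid(b0 + b1 * 0.5)   # probability of class 1 at a small x
p_high = sigmoid(b0 + b1 * 4.0)  # probability of class 1 at a large x
```

The fitted coefficients describe a straight line in log-odds; `sigmoid` is what turns that line into probabilities.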

Key Differences Between Linear and Logistic Regression

Linear regression predicts continuous numeric outcomes by fitting a linear relationship between independent variables and a dependent variable using the least squares method. Logistic regression estimates the probability of a binary outcome by modeling the log-odds of the dependent variable as a linear combination of independent variables; the sigmoid function then converts those log-odds into a probability between 0 and 1. The key differences are the nature of the dependent variable (continuous for linear regression, categorical for logistic regression) and the type of problem each solves: regression tasks for linear regression and classification tasks for logistic regression.
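Written out with the coefficient names from the comparison table (b0 through bn, error term e, probability p), the two models share the same linear combination and differ only in what it is set equal to. The last two lines are a standard rearrangement, included here as a worked step:

```latex
\begin{aligned}
\text{Linear:}\quad   & y = b_0 + b_1 x_1 + \dots + b_n x_n + e \\
\text{Logistic:}\quad & \log\frac{p}{1-p} = b_0 + b_1 x_1 + \dots + b_n x_n \\
\Longrightarrow\quad  & p = \frac{1}{1 + \exp\!\bigl(-(b_0 + b_1 x_1 + \dots + b_n x_n)\bigr)} \\
\text{Boundary:}\quad & p = 0.5 \iff b_0 + b_1 x_1 + \dots + b_n x_n = 0
\end{aligned}
```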

When to Use Linear Regression

Linear regression is best used when predicting a continuous dependent variable based on one or more independent variables, such as forecasting sales revenue or estimating housing prices. It assumes a linear relationship between the input features and the output, making it suitable for problems where the target variable is quantitative. Use linear regression when the goal is to model and predict numerical values rather than to assign observations to categories.

When to Use Logistic Regression

Logistic regression is ideal for classification problems where the dependent variable is categorical, typically binary, such as success/failure or yes/no outcomes. It models the probability that a given input belongs to a particular class by using the logistic function to constrain predictions between 0 and 1. This method excels in scenarios like credit scoring, medical diagnosis, and spam detection, where the goal is to predict class membership rather than continuous values.
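Turning a predicted probability into class membership requires a decision threshold. The sketch below assumes the probabilities have already been produced by some fitted model; the `classify` helper, the 0.5 default, and the sample values are illustrative assumptions.

```python
def classify(probabilities, threshold=0.5):
    """Label an observation 1 when its predicted probability reaches the threshold."""
    return [1 if p >= threshold else 0 for p in probabilities]


# Hypothetical model outputs for four observations.
probs = [0.05, 0.40, 0.55, 0.90]
default_labels = classify(probs)      # [0, 0, 1, 1]
strict_labels = classify(probs, 0.8)  # [0, 0, 0, 1]
```

Raising the threshold trades missed positives for fewer false alarms, which can matter in settings such as medical diagnosis or spam detection.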

Data Requirements and Assumptions

Linear regression requires a continuous dependent variable and assumes linearity, homoscedasticity, independence of errors, and normally distributed residuals. Logistic regression is designed for binary or categorical dependent variables and assumes a linear relationship between the predictors and the log-odds of the outcome, independence of observations, and little or no multicollinearity among predictors. Multicollinearity also makes coefficient estimates unstable in linear regression, so it is worth checking for both models. Both models depend on good data quality; feature scaling is not an assumption of either, but it helps when regularization or gradient-based optimization is used.
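One rough first screen for multicollinearity is the pairwise Pearson correlation between predictors. The sketch below is a simplified check under that assumption; the `pearson` helper and the housing-style feature values are hypothetical, and a fuller diagnostic such as the variance inflation factor would also catch relationships involving more than two predictors.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical predictors for a housing model.
area_sqft = [800, 1000, 1200, 1500, 1800]
area_sqm = [s * 0.092903 for s in area_sqft]  # same information, different unit
age_years = [30, 5, 18, 42, 11]

redundant = pearson(area_sqft, area_sqm)   # close to 1: keep only one of the two
unrelated = pearson(area_sqft, age_years)  # close to 0: no sign of collinearity
```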

Evaluation Metrics for Both Methods

Linear regression evaluation relies primarily on metrics such as Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R-squared to quantify model accuracy on continuous target variables. Logistic regression assessment focuses on classification metrics including Accuracy, Precision, Recall, F1 Score, and Area Under the Receiver Operating Characteristic Curve (AUC-ROC) to measure model performance on binary or multiclass categorical outcomes. The appropriate metric depends on the nature of the prediction task: regression metrics for continuous predictions, classification metrics for categorical targets.
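Most of these metrics are short formulas. The sketch below computes them in plain Python for small hypothetical prediction lists; the function names and sample values are assumptions for illustration, and AUC-ROC is left out because it needs ranked probabilities rather than hard labels.

```python
import math


def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE and R-squared for continuous predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1.0 - ss_res / ss_tot,
    }


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical targets and predictions.
reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0])
clf = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
```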

Common Applications in Data Science

Linear regression is primarily used for predicting continuous variables such as sales forecasting, temperature estimation, and price modeling. Logistic regression excels in classification problems including spam detection, customer churn prediction, and medical diagnosis by estimating probabilities of binary outcomes. Both models are fundamental in data science for interpreting relationships between variables and making informed decisions based on data patterns.

Choosing the Right Regression Technique

Selecting the appropriate regression technique depends on the nature of the dependent variable: linear regression is ideal for predicting continuous outcomes by modeling the relationship between independent variables and a numeric target, while logistic regression is suited for classification problems involving binary or categorical outcomes. Evaluating the data distribution, target variable type, and problem objectives helps ensure the correct algorithm is used, which supports both model accuracy and interpretability. Regularization (for example, an L1 or L2 penalty) can be added to either model to limit overfitting, and performance metrics such as R-squared for linear regression or AUC-ROC for logistic regression confirm whether the chosen model is adequate for reliable predictive modeling in data science.

techiny.com
