MapReduce processes large-scale data by dividing tasks into independent map and reduce phases, which can introduce latency due to disk-based storage between steps. Spark enhances data processing speed through in-memory computation, enabling faster iterative algorithms and real-time analytics. Its versatile APIs and DAG execution model make Spark more efficient and suitable for complex data workflows compared to MapReduce.

Table of Comparison

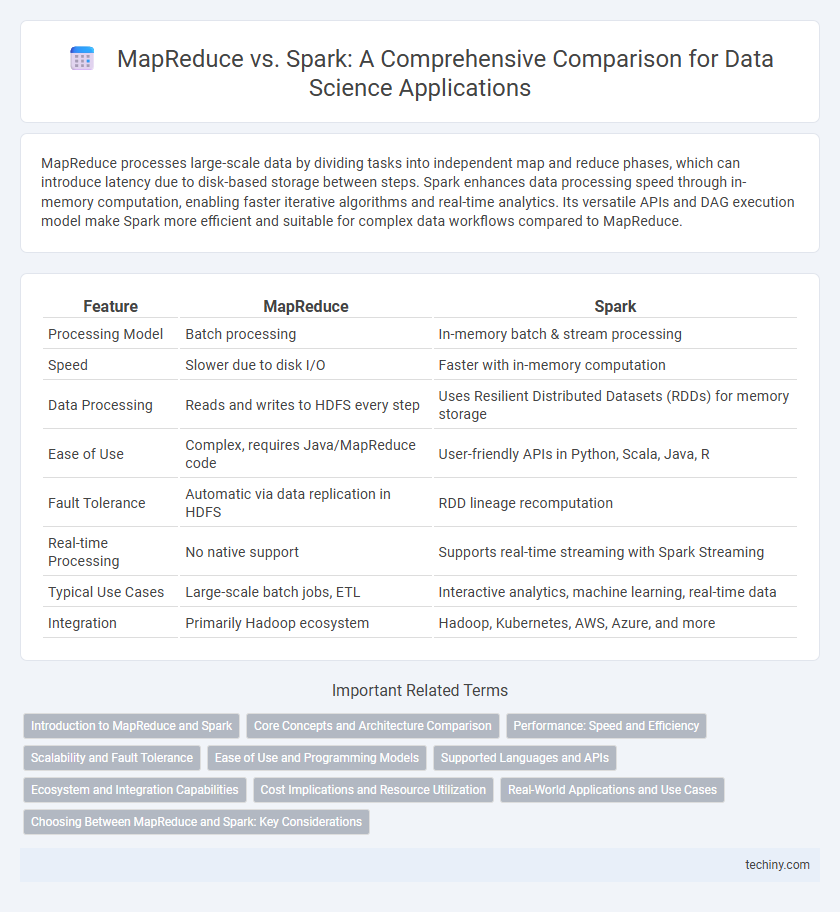

| Feature | MapReduce | Spark |

|---|---|---|

| Processing Model | Batch processing | In-memory batch & stream processing |

| Speed | Slower due to disk I/O | Faster with in-memory computation |

| Data Processing | Reads and writes to HDFS every step | Uses Resilient Distributed Datasets (RDDs) for memory storage |

| Ease of Use | Complex, requires Java/MapReduce code | User-friendly APIs in Python, Scala, Java, R |

| Fault Tolerance | Automatic via data replication in HDFS | RDD lineage recomputation |

| Real-time Processing | No native support | Supports real-time streaming with Spark Streaming |

| Typical Use Cases | Large-scale batch jobs, ETL | Interactive analytics, machine learning, real-time data |

| Integration | Primarily Hadoop ecosystem | Hadoop, Kubernetes, AWS, Azure, and more |

Introduction to MapReduce and Spark

MapReduce is a programming model designed for processing large data sets with a distributed algorithm that divides tasks into the Map and Reduce phases, enabling scalable and fault-tolerant batch processing. Apache Spark, a unified analytics engine, offers in-memory computation and supports batch and real-time processing with improved speed and flexibility over MapReduce by leveraging resilient distributed datasets (RDDs). Both frameworks are essential in big data processing, but Spark's advanced DAG execution and in-memory processing significantly reduce latency compared to MapReduce's disk-based Map and Reduce cycles.

Core Concepts and Architecture Comparison

MapReduce processes large datasets using a batch processing model with a two-phase (map and reduce) execution, emphasizing fault tolerance through disk-based storage between phases. Apache Spark enhances processing speed by leveraging in-memory computing and DAG (Directed Acyclic Graph) execution, supporting iterative algorithms and real-time data processing. While MapReduce relies on HDFS for data storage and sequential processing, Spark's resilient distributed datasets (RDDs) and DAG scheduler optimize performance and resource management in distributed environments.

Performance: Speed and Efficiency

Apache Spark significantly outperforms MapReduce in both speed and efficiency by processing data in-memory, reducing disk I/O operations that typically bottleneck MapReduce's batch processing model. Spark's DAG (Directed Acyclic Graph) execution engine optimizes task scheduling and reduces latency compared to MapReduce's rigid, sequential map and reduce phases. For iterative algorithms and real-time data processing common in data science workflows, Spark's performance advantage directly translates to faster data analysis and lower computational costs.

Scalability and Fault Tolerance

Spark offers superior scalability compared to MapReduce by processing data in-memory, significantly reducing execution time for large-scale data analytics. Both frameworks provide fault tolerance through data replication and lineage information, but Spark's resilient distributed datasets (RDDs) enable faster recovery from failures without recomputing the entire dataset. This makes Spark more efficient and reliable for real-time and iterative data processing tasks at scale.

Ease of Use and Programming Models

MapReduce uses a rigid, low-level programming model primarily based on key-value pairs and requires explicit management of data flow between map and reduce phases, which can be complex and time-consuming for developers. Apache Spark offers a more flexible and user-friendly programming model with high-level APIs in languages like Scala, Python, and Java, enabling easier development of iterative algorithms and interactive data analysis. Spark's in-memory processing and built-in libraries for SQL, streaming, and machine learning significantly reduce the complexity and improve the productivity compared to the traditional MapReduce approach.

Supported Languages and APIs

MapReduce primarily supports Java, with limited support for other languages through streaming APIs, making it less flexible for diverse development environments. Apache Spark offers native APIs in Scala, Java, Python, and R, enabling seamless integration with various data science workflows and accelerating development processes. Spark's comprehensive language support and rich APIs provide significant advantages for interactive data analysis and iterative machine learning tasks.

Ecosystem and Integration Capabilities

MapReduce operates within the Hadoop ecosystem, leveraging HDFS for distributed storage but often suffers from high-latency batch processing limitations. Apache Spark integrates seamlessly with various data sources, including HDFS, Cassandra, and Amazon S3, offering in-memory computation that significantly accelerates data processing workflows. Spark's ecosystem includes libraries like MLlib for machine learning and GraphX for graph processing, enabling richer data science applications compared to the more map-reduce-centric Hadoop environment.

Cost Implications and Resource Utilization

MapReduce often incurs higher costs due to its reliance on slower disk I/O operations and batch processing, leading to increased resource consumption and longer job execution times. Apache Spark optimizes resource utilization with in-memory processing, reducing the need for extensive disk reads and writes, which lowers operational expenses and accelerates data processing tasks. Spark's ability to handle iterative algorithms more efficiently further decreases compute costs, making it a preferred choice for cost-effective big data analytics.

Real-World Applications and Use Cases

MapReduce excels in batch processing of massive datasets within distributed systems, making it ideal for large-scale indexing tasks such as web search engines and archival data analysis. Spark's in-memory computing framework significantly accelerates iterative algorithms used in machine learning, real-time data processing, and analytics platforms like fraud detection and recommendation systems. Enterprises leverage MapReduce for stable, fault-tolerant ETL workflows while adopting Spark to enhance speed and flexibility in streaming data and complex data science pipelines.

Choosing Between MapReduce and Spark: Key Considerations

Choosing between MapReduce and Spark depends on the specific data processing needs and infrastructure constraints. MapReduce excels in batch processing with robust fault tolerance on Hadoop clusters, while Spark offers superior in-memory computation for faster iterative algorithms and real-time analytics. Factors such as processing speed, resource availability, scalability, and workload type should guide the selection between these big data frameworks.

MapReduce vs Spark Infographic

techiny.com

techiny.com