Mean Absolute Error (MAE) provides a straightforward average of absolute prediction errors, making it easy to interpret but less sensitive to large errors. Root Mean Squared Error (RMSE) squares the errors before averaging, thus penalizing larger deviations more heavily and emphasizing significant prediction inaccuracies. Selecting between MAE and RMSE depends on whether minimizing average error or controlling large errors is more critical for the data science model's performance evaluation.

Table of Comparison

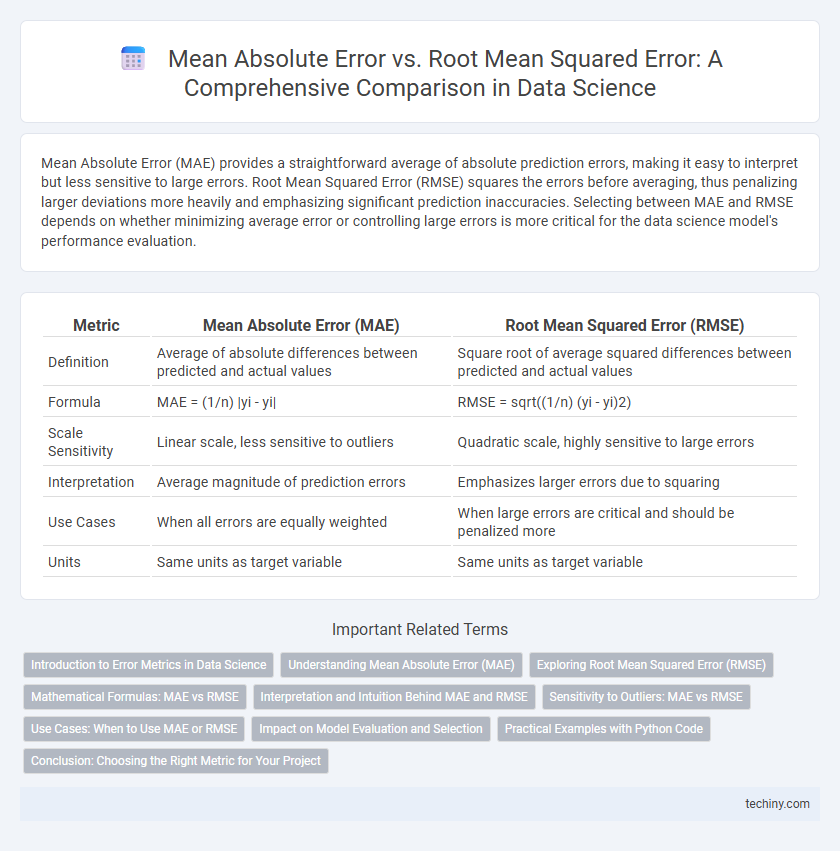

| Metric | Mean Absolute Error (MAE) | Root Mean Squared Error (RMSE) |
|---|---|---|
| Definition | Average of absolute differences between predicted and actual values | Square root of average squared differences between predicted and actual values |
| Formula | $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$ | $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}$ |
| Scale Sensitivity | Linear scale, less sensitive to outliers | Quadratic scale, highly sensitive to large errors |
| Interpretation | Average magnitude of prediction errors | Emphasizes larger errors due to squaring |
| Use Cases | When all errors are equally weighted | When large errors are critical and should be penalized more |
| Units | Same units as target variable | Same units as target variable |

Introduction to Error Metrics in Data Science

Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) are fundamental error metrics for evaluating predictive models in data science, quantifying the average magnitude of prediction errors without considering their direction. MAE calculates the average absolute difference between predicted and actual values, yielding a score that grows linearly with error size and reads directly as the typical error, while RMSE squares the differences before averaging and then takes the square root, giving higher weight to larger errors. These metrics help data scientists measure model accuracy and guide model selection, and a large gap between RMSE and MAE signals outliers or high variance in the prediction errors.

Understanding Mean Absolute Error (MAE)

Mean Absolute Error (MAE) quantifies the average magnitude of errors between predicted and actual values in a dataset without considering their direction. It provides an intuitive measure of prediction accuracy by expressing errors in the same units as the target variable, enhancing interpretability. Compared to Root Mean Squared Error (RMSE), MAE weights each error in proportion to its size rather than its square, making it less sensitive to outliers in machine learning model evaluation.

Exploring Root Mean Squared Error (RMSE)

Root Mean Squared Error (RMSE) measures the square root of the average squared differences between predicted and actual values, emphasizing larger errors more than Mean Absolute Error (MAE). RMSE is particularly sensitive to outliers, making it a preferred metric in regression tasks where penalizing significant deviations is critical. This metric is widely used in data science for evaluating model accuracy in fields like forecasting, machine learning, and statistical analysis.

Mathematical Formulas: MAE vs RMSE

Mean Absolute Error (MAE) is calculated using the formula $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$, where $y_i$ represents actual values, $\hat{y}_i$ are predicted values, and $n$ is the number of observations. Root Mean Squared Error (RMSE) is computed as $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}$, emphasizing larger errors due to the squared terms. These mathematical formulas highlight MAE's linear error averaging versus RMSE's quadratic error sensitivity, critical for model evaluation in data science.
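The two formulas can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: the function names `mae` and `rmse` and the sample values are assumptions made up for the example.

```python
import math


def mae(y_true, y_pred):
    """Mean of the absolute differences between actual and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Square root of the mean of the squared differences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mean_squared)


# Hypothetical sample data: the errors are 1, 0, -2, -1.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

print(mae(y_true, y_pred))   # absolute errors 1, 0, 2, 1 -> 4 / 4 = 1.0
print(rmse(y_true, y_pred))  # squared errors 1, 0, 4, 1 -> sqrt(6 / 4), about 1.2247
```

Both results are in the units of the target variable, and RMSE comes out larger than MAE because the single error of size 2 contributes 4 to the squared sum.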

Interpretation and Intuition Behind MAE and RMSE

Mean Absolute Error (MAE) measures the average magnitude of errors without considering their direction, providing an intuitive understanding of typical prediction errors in the original data units. Root Mean Squared Error (RMSE) emphasizes larger errors by squaring the deviations before averaging, making it more sensitive to outliers and reflecting model performance in situations where large errors are particularly undesirable. Interpreting MAE helps grasp the general accuracy, while RMSE provides insight into the variance and impact of extreme discrepancies in predictive modeling.
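The link between RMSE and the variance of the errors can be made explicit with a short derivation. Writing $e_i = y_i - \hat{y}_i$ for the error on observation $i$ (notation introduced here for the illustration) and taking $\mathrm{Var}$ to be the population variance of the absolute errors:

$$
\mathrm{RMSE}^2 = \frac{1}{n}\sum_{i=1}^{n} e_i^2
= \left(\frac{1}{n}\sum_{i=1}^{n} \lvert e_i \rvert\right)^2 + \mathrm{Var}\left(\lvert e_i \rvert\right)
= \mathrm{MAE}^2 + \mathrm{Var}\left(\lvert e_i \rvert\right)
$$

Since the variance is never negative, RMSE is always at least as large as MAE, and the two are equal only when every absolute error has the same size. The gap between them therefore grows with the spread of the error magnitudes.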

Sensitivity to Outliers: MAE vs RMSE

Mean Absolute Error (MAE) calculates the average absolute differences between predicted and actual values, providing a linear measure of error that treats all deviations equally, making it less sensitive to outliers. Root Mean Squared Error (RMSE) squares the residuals before averaging and then takes the square root, amplifying larger errors and thus increasing sensitivity to outliers due to the quadratic penalization. In data science, RMSE is preferred when large errors are particularly undesirable, while MAE offers a more robust metric for datasets prone to outliers.
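A small numeric experiment makes the difference in outlier sensitivity concrete. The sketch below uses made-up error values and hypothetical helper names (`mae_from_errors`, `rmse_from_errors`); it replaces one typical error with a single large one and compares how each metric reacts.

```python
import math


def mae_from_errors(errors):
    """Mean absolute error computed directly from a list of residuals."""
    return sum(abs(e) for e in errors) / len(errors)


def rmse_from_errors(errors):
    """Root mean squared error computed directly from a list of residuals."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical residuals: identical except for the last value.
typical = [1.0, -1.0, 1.0, -1.0, 1.0]
with_outlier = [1.0, -1.0, 1.0, -1.0, 11.0]

print(mae_from_errors(typical), rmse_from_errors(typical))
# 1.0 1.0  (all errors have the same magnitude, so the metrics agree)

print(mae_from_errors(with_outlier), rmse_from_errors(with_outlier))
# 3.0 5.0  (MAE = 15 / 5, RMSE = sqrt(125 / 5))
```

In this example one outlier triples MAE but multiplies RMSE by five, which is the quadratic penalty described above.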

Use Cases: When to Use MAE or RMSE

Mean Absolute Error (MAE) is well suited to applications where every unit of error costs roughly the same, such as forecasting sales or demand, as it weights errors in proportion to their size without giving extra emphasis to larger deviations. Root Mean Squared Error (RMSE) is preferred in scenarios like regression modeling in weather prediction or machine learning where larger errors need to be penalized more severely due to their greater impact on model performance. Selecting MAE or RMSE depends on whether the priority is interpretability and proportional weighting of errors (MAE) or sensitivity to outliers and heavier emphasis on large errors (RMSE).

Impact on Model Evaluation and Selection

Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) serve distinct roles in model evaluation and selection, with MAE providing an average magnitude of errors which is less sensitive to outliers, enhancing interpretability in linear regression and forecasting tasks. RMSE penalizes larger errors more significantly due to squaring residuals, making it more suitable for applications where large deviations critically impact performance, such as in computer vision and anomaly detection. Selecting between MAE and RMSE depends on the specific problem domain's tolerance for outliers and the importance of error magnitude, directly influencing the choice of predictive models and hyperparameter tuning strategies.
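The choice of metric can change which model is selected. The sketch below uses made-up predictions from two hypothetical models, `model_a` (consistently off by a moderate amount) and `model_b` (usually close, with one large miss), and picks the best model under each metric.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Hypothetical targets and predictions, invented for illustration.
y_true = [10.0, 20.0, 30.0, 40.0]
predictions = {
    "model_a": [12.0, 18.0, 32.0, 38.0],  # every prediction is off by 2
    "model_b": [10.5, 19.5, 30.5, 45.5],  # three small errors, one error of 5.5
}

scores = {
    name: {"mae": mae(y_true, y_pred), "rmse": rmse(y_true, y_pred)}
    for name, y_pred in predictions.items()
}

# Lower is better for both metrics.
best_by_mae = min(scores, key=lambda name: scores[name]["mae"])
best_by_rmse = min(scores, key=lambda name: scores[name]["rmse"])

print(scores)
print("best by MAE:", best_by_mae)    # model_b (1.75 vs 2.0)
print("best by RMSE:", best_by_rmse)  # model_a (2.0 vs about 2.78)
```

Here MAE favors the model with the smaller typical error, while RMSE favors the model that avoids the single large miss, so the metric should be fixed before comparing candidates or tuning hyperparameters.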

Practical Examples with Python Code

Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) are crucial metrics for evaluating regression model performance, with MAE providing the average magnitude of errors and RMSE emphasizing larger errors due to squaring. For example, in Python, using sklearn.metrics, `mean_absolute_error(y_true, y_pred)` returns MAE. For RMSE, scikit-learn 1.4 and later provide `root_mean_squared_error(y_true, y_pred)`; older releases used `mean_squared_error(y_true, y_pred, squared=False)`, but the `squared` argument has since been deprecated, so taking the square root of `mean_squared_error(y_true, y_pred)` is the form that works across versions. In practice, MAE is preferred when every error should count in proportion to its size, whereas RMSE is more sensitive to outliers, making it suitable for scenarios where large errors are especially undesirable.
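A minimal sketch of the scikit-learn calls follows, assuming scikit-learn is installed. The sample values are made up, and RMSE is obtained by taking the square root of `mean_squared_error` by hand so the example does not depend on whether the `squared` argument is available in the installed release.

```python
import math

from sklearn.metrics import mean_absolute_error, mean_squared_error

# Hypothetical sample data: the errors are 1, 0, -2, -1.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

mae_value = mean_absolute_error(y_true, y_pred)

# Take the square root of the MSE manually rather than relying on a
# version-specific argument or helper function.
mse_value = mean_squared_error(y_true, y_pred)
rmse_value = math.sqrt(mse_value)

print(f"MAE:  {mae_value:.4f}")   # MAE:  1.0000
print(f"RMSE: {rmse_value:.4f}")  # RMSE: 1.2247
```

Both functions also accept NumPy arrays and pandas Series, so the same calls apply to the output of a fitted model's `predict` method.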

Conclusion: Choosing the Right Metric for Your Project

Selecting between Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) depends on the sensitivity required for your data science project; MAE provides a straightforward average error magnitude, making it ideal for understanding overall prediction accuracy without emphasizing outliers. RMSE, by squaring errors before averaging, penalizes larger errors more heavily, making it suitable for projects where outliers significantly impact performance. Evaluate the error distribution and project goals to choose the metric that aligns best with your model's evaluation criteria and desired interpretability.

Mean Absolute Error vs Root Mean Squared Error Infographic

techiny.com
