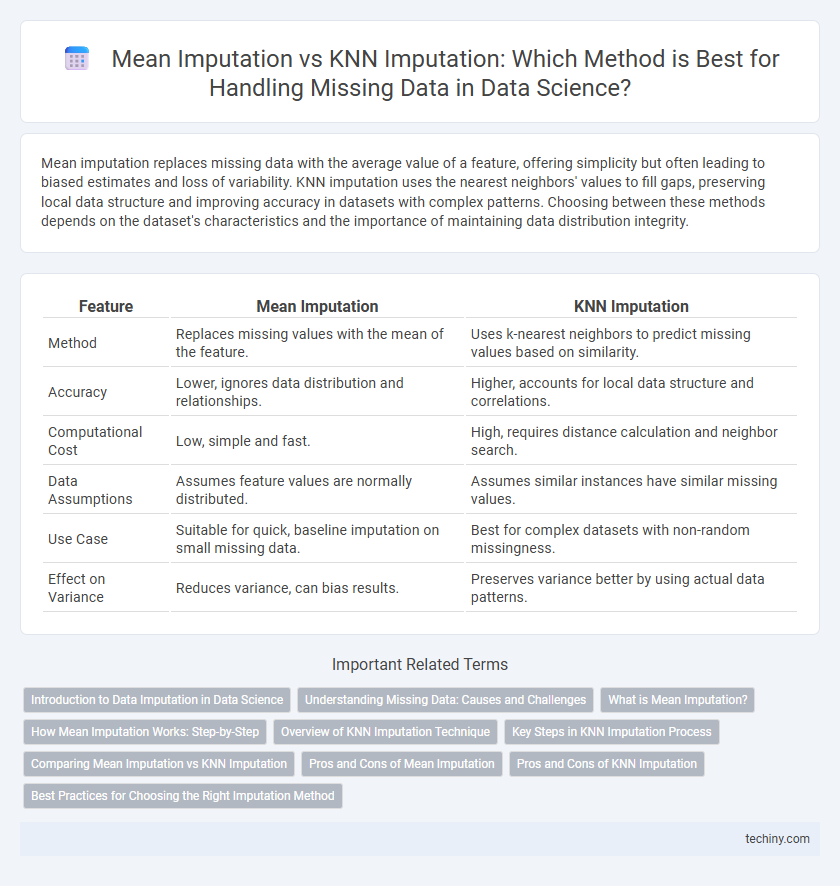

Mean imputation replaces missing data with the average value of a feature, offering simplicity but often leading to biased estimates and loss of variability. KNN imputation uses the nearest neighbors' values to fill gaps, preserving local data structure and improving accuracy in datasets with complex patterns. Choosing between these methods depends on the dataset's characteristics and the importance of maintaining data distribution integrity.

Table of Comparison

| Feature | Mean Imputation | KNN Imputation |

|---|---|---|

| Method | Replaces missing values with the mean of the feature. | Uses k-nearest neighbors to predict missing values based on similarity. |

| Accuracy | Lower, ignores data distribution and relationships. | Higher, accounts for local data structure and correlations. |

| Computational Cost | Low, simple and fast. | High, requires distance calculation and neighbor search. |

| Data Assumptions | Assumes feature values are normally distributed. | Assumes similar instances have similar missing values. |

| Use Case | Suitable for quick, baseline imputation on small missing data. | Best for complex datasets with non-random missingness. |

| Effect on Variance | Reduces variance, can bias results. | Preserves variance better by using actual data patterns. |

Introduction to Data Imputation in Data Science

Data imputation in data science addresses missing values by replacing them with estimated data points to maintain dataset integrity and improve model accuracy. Mean imputation substitutes missing entries with the average of available values, offering simplicity but potentially introducing bias in datasets with variable distributions. KNN imputation leverages the similarity between data points by using the nearest neighbors' values, enhancing accuracy in complex datasets at the cost of increased computational complexity.

Understanding Missing Data: Causes and Challenges

Missing data in datasets often arises from sensor errors, data entry mistakes, or non-responses in surveys, which can significantly bias analysis results if untreated. Mean imputation replaces missing values with the average of observed entries, simplifying computations but potentially underestimating variability and distorting data distributions. KNN imputation uses the similarity between data points, identifying k-nearest neighbors to infer missing values and preserve local data patterns, though it is computationally intensive and sensitive to the choice of k and distance metrics.

What is Mean Imputation?

Mean imputation is a simple statistical technique used in data science to handle missing values by replacing them with the average value of the non-missing data points within the same feature. This method preserves the overall mean of the dataset but can reduce variability and potentially introduce bias in downstream analysis. Mean imputation is computationally efficient and commonly applied in large datasets where missingness is random and minimal.

How Mean Imputation Works: Step-by-Step

Mean imputation replaces missing data values by calculating the average of the observed values within a feature and substituting this mean for each missing entry. The process involves identifying missing points, computing the mean from available data, and filling in missing values with this computed average to ensure dataset completeness. This method is straightforward but may reduce data variance and bias results when missingness is not random.

Overview of KNN Imputation Technique

KNN imputation estimates missing values by identifying the k-nearest neighbors based on feature similarity, using distance metrics such as Euclidean or Manhattan distance to measure closeness between data points. The algorithm imputes missing data by averaging the values of the nearest neighbors, preserving local data structure and relationships. This method is especially effective in datasets with non-linear patterns and mixed data types, offering more accurate imputations compared to mean imputation, which simply replaces missing values with the overall column mean.

Key Steps in KNN Imputation Process

KNN imputation involves identifying the k-nearest neighbors based on a defined distance metric, such as Euclidean distance, to estimate missing values. The process includes selecting an appropriate number of neighbors (k), calculating distances between data points with incomplete features and complete cases, and imputing missing values by averaging or weighting the neighbors' feature values. This method preserves local data structure and tends to produce more accurate and context-aware imputations compared to mean imputation.

Comparing Mean Imputation vs KNN Imputation

Mean Imputation replaces missing values with the average of the observed data points, offering simplicity and speed but often reducing variability and potentially biasing the dataset. KNN Imputation uses the closest data points based on feature similarity to estimate missing values, preserving data distribution and relationships more effectively but requiring higher computational power. When comparing Mean Imputation vs KNN Imputation, KNN generally yields more accurate and reliable results in datasets with complex structures or non-linear relationships.

Pros and Cons of Mean Imputation

Mean imputation is a simple and computationally efficient technique for handling missing data by replacing missing values with the variable's average. It preserves dataset size but can reduce data variability and introduce bias, especially when data are not missing completely at random. While mean imputation maintains consistency for large datasets, it may decrease the accuracy of predictive models due to its inability to capture underlying patterns in the data.

Pros and Cons of KNN Imputation

KNN imputation leverages the similarity between data points to estimate missing values, preserving the underlying data structure and often yielding more accurate results compared to simple mean imputation. However, it is computationally intensive, especially on large datasets, and performance can degrade with high dimensionality due to the curse of dimensionality. Proper selection of the number of neighbors (k) is critical, as inappropriate values can lead to biased or over-smoothed imputations.

Best Practices for Choosing the Right Imputation Method

Mean imputation provides a simple and fast solution for missing data but can introduce bias and underestimate variability in datasets with complex patterns. KNN imputation captures underlying data structures by using similarity-based neighbors, making it more suitable for datasets with nonlinear relationships and mixed data types. Best practices recommend evaluating data distribution, missingness mechanism, and computational resources to select between mean imputation's efficiency and KNN's accuracy for reliable data analysis.

Mean Imputation vs KNN Imputation Infographic

techiny.com

techiny.com