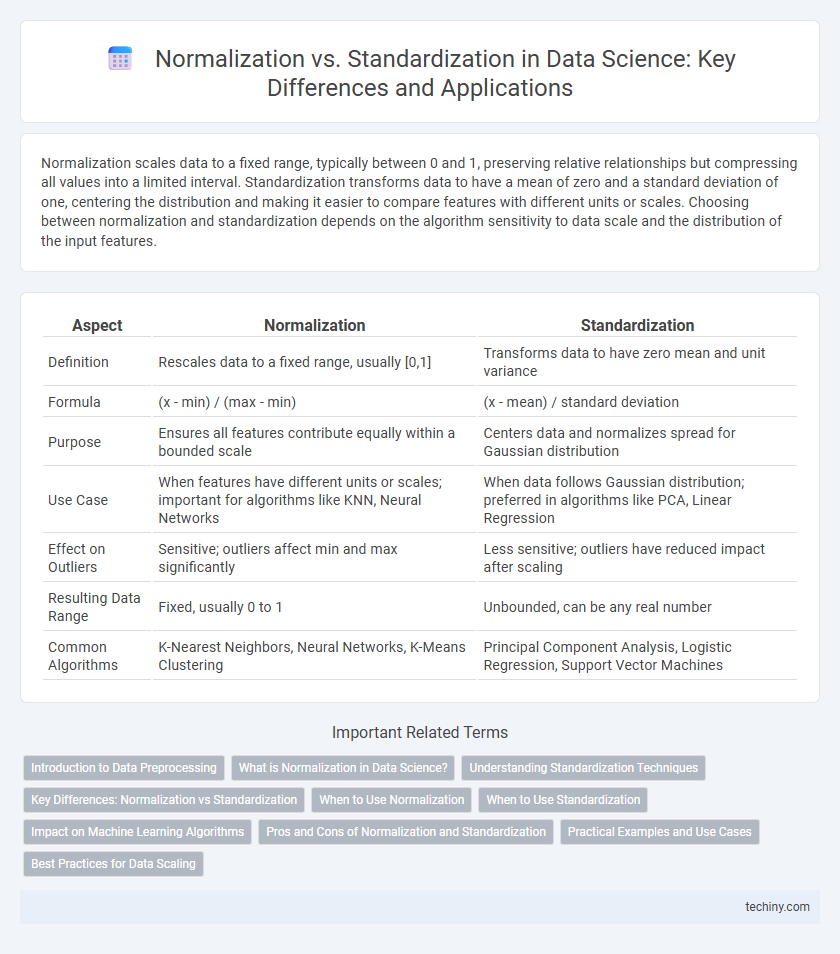

Normalization scales data to a fixed range, typically between 0 and 1, preserving relative relationships but compressing all values into a limited interval. Standardization transforms data to have a mean of zero and a standard deviation of one, centering the distribution and making it easier to compare features with different units or scales. Choosing between normalization and standardization depends on the algorithm sensitivity to data scale and the distribution of the input features.

Table of Comparison

| Aspect | Normalization | Standardization |

|---|---|---|

| Definition | Rescales data to a fixed range, usually [0,1] | Transforms data to have zero mean and unit variance |

| Formula | (x - min) / (max - min) | (x - mean) / standard deviation |

| Purpose | Ensures all features contribute equally within a bounded scale | Centers data and normalizes spread for Gaussian distribution |

| Use Case | When features have different units or scales; important for algorithms like KNN, Neural Networks | When data follows Gaussian distribution; preferred in algorithms like PCA, Linear Regression |

| Effect on Outliers | Sensitive; outliers affect min and max significantly | Less sensitive; outliers have reduced impact after scaling |

| Resulting Data Range | Fixed, usually 0 to 1 | Unbounded, can be any real number |

| Common Algorithms | K-Nearest Neighbors, Neural Networks, K-Means Clustering | Principal Component Analysis, Logistic Regression, Support Vector Machines |

Introduction to Data Preprocessing

Normalization scales features to a fixed range, typically between 0 and 1, enhancing the performance of algorithms sensitive to magnitude differences, such as neural networks and k-nearest neighbors. Standardization transforms data to have a mean of zero and a standard deviation of one, which is crucial for models assuming normally distributed input variables like logistic regression and support vector machines. Both techniques are fundamental in data preprocessing to improve model accuracy and convergence speed by mitigating bias from feature scale disparities.

What is Normalization in Data Science?

Normalization in data science refers to the process of scaling individual data features to a specific range, typically between 0 and 1, to ensure uniformity across different variables. This technique reduces the impact of varying data magnitudes, facilitating faster convergence in machine learning algorithms such as neural networks. Common normalization methods include Min-Max Scaling, which transforms data based on its minimum and maximum values, preserving the shape of the original distribution.

Understanding Standardization Techniques

Standardization techniques transform data to have a mean of zero and a standard deviation of one, ensuring features contribute equally to model performance. Common methods include Z-score normalization, which subtracts the mean and divides by the standard deviation, enhancing algorithm convergence and stability. Standardization is crucial when data contains varying scales or units, improving the accuracy of distance-based models like k-nearest neighbors and support vector machines.

Key Differences: Normalization vs Standardization

Normalization rescales data to a fixed range, typically 0 to 1, preserving the shape of the original distribution but compressing all feature values within a specific scale. Standardization transforms data to have a mean of zero and a standard deviation of one, centering the data and scaling it based on statistical properties, making it suitable for algorithms assuming normally distributed data. Key differences include their impact on data scaling and distribution assumptions: normalization bounds the data within a range, while standardization adjusts data to a Gaussian-like distribution without bounding.

When to Use Normalization

Normalization is ideal when data features have varying scales and need to be transformed into a common range, typically between 0 and 1, to improve the performance of algorithms like k-nearest neighbors and neural networks. It is particularly useful for datasets with bounded feature values or when the goal is to maintain the original distribution shape while scaling. Normalization enhances convergence speed during training and ensures that distance-based methods perform correctly by preventing dominance of larger scale features.

When to Use Standardization

Standardization is essential when the data follows a Gaussian distribution or when features have varying units and scales, ensuring comparability across variables. It is particularly useful in algorithms like Support Vector Machines, Principal Component Analysis, and k-Nearest Neighbors that assume normally distributed data. Applying standardization improves convergence speed and model performance by centering data around zero with a unit variance.

Impact on Machine Learning Algorithms

Normalization scales data to a fixed range, usually [0, 1], improving the convergence speed of gradient-based algorithms like neural networks and k-nearest neighbors by ensuring uniform feature magnitude. Standardization transforms data to have zero mean and unit variance, benefiting algorithms such as Support Vector Machines and Principal Component Analysis by maintaining the original data distribution structure. Both techniques reduce bias caused by feature scale differences, enhancing model accuracy and stability in machine learning workflows.

Pros and Cons of Normalization and Standardization

Normalization scales data to a range, typically [0,1], enhancing performance in algorithms sensitive to feature magnitude like K-nearest neighbors and neural networks, but it is prone to distortion by outliers. Standardization transforms data to have a mean of zero and a standard deviation of one, maintaining the influence of outliers and often benefiting algorithms assuming Gaussian distributions, such as logistic regression and linear discriminant analysis. While normalization improves convergence speed in gradient-based methods, standardization preserves meaningful variance, making the choice dependent on the specific machine learning algorithm and data distribution characteristics.

Practical Examples and Use Cases

Normalization rescales data to a [0,1] range, making it ideal for algorithms like K-Nearest Neighbors and Neural Networks that compute distances or require bounded input features. Standardization transforms data to have zero mean and unit variance, which is crucial for models such as Logistic Regression, Support Vector Machines, and Principal Component Analysis to assume normally distributed features. Practical examples include normalizing pixel values in image processing and standardizing financial indicators for robust portfolio optimization.

Best Practices for Data Scaling

Normalization rescales data to a fixed range, typically 0 to 1, making it ideal for algorithms sensitive to magnitude like neural networks and k-nearest neighbors. Standardization transforms data to have a mean of zero and a standard deviation of one, which is best suited for algorithms assuming normally distributed data, such as logistic regression and support vector machines. Choosing the appropriate scaling technique depends on the data distribution and the specific machine learning algorithm to ensure optimal model performance and convergence.

Normalization vs Standardization Infographic

techiny.com

techiny.com