Random Forest and Gradient Boosted Trees are powerful ensemble learning techniques used in data science for classification and regression tasks. Random Forest builds multiple decision trees independently using bootstrap samples and aggregates their predictions to reduce overfitting and improve accuracy. Gradient Boosted Trees sequentially build trees by focusing on correcting errors from previous trees, resulting in higher predictive accuracy but requiring careful tuning to avoid overfitting.

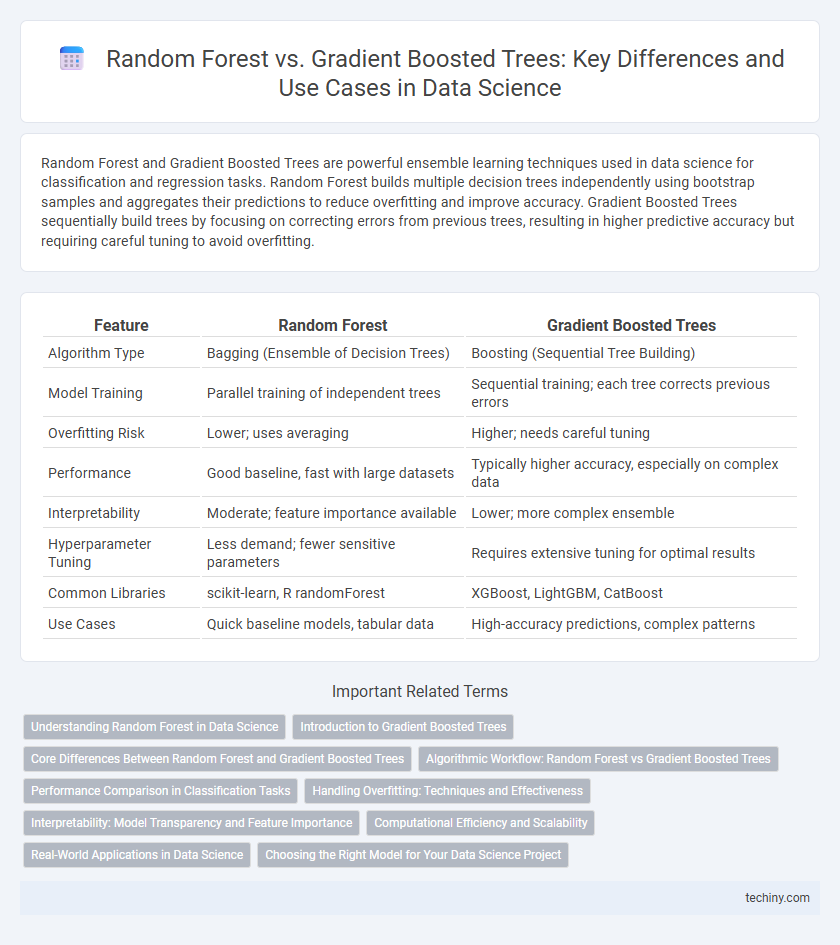

Table of Comparison

| Feature | Random Forest | Gradient Boosted Trees |

|---|---|---|

| Algorithm Type | Bagging (Ensemble of Decision Trees) | Boosting (Sequential Tree Building) |

| Model Training | Parallel training of independent trees | Sequential training; each tree corrects previous errors |

| Overfitting Risk | Lower; uses averaging | Higher; needs careful tuning |

| Performance | Good baseline, fast with large datasets | Typically higher accuracy, especially on complex data |

| Interpretability | Moderate; feature importance available | Lower; more complex ensemble |

| Hyperparameter Tuning | Less demand; fewer sensitive parameters | Requires extensive tuning for optimal results |

| Common Libraries | scikit-learn, R randomForest | XGBoost, LightGBM, CatBoost |

| Use Cases | Quick baseline models, tabular data | High-accuracy predictions, complex patterns |

Understanding Random Forest in Data Science

Random Forest in data science is an ensemble learning method that builds multiple decision trees using bootstrapped datasets and random feature selection to improve prediction accuracy while reducing overfitting. Each tree in the forest independently votes, and the majority vote or average prediction produces a robust and stable model. This approach excels in handling large datasets with diverse feature types and provides insights into feature importance for interpretability.

Introduction to Gradient Boosted Trees

Gradient Boosted Trees build predictive models by sequentially adding decision trees, each correcting errors from the previous one, resulting in high accuracy and reduced bias. This ensemble technique optimizes loss functions through gradient descent, making it powerful for complex data patterns and classification tasks. Compared to Random Forests, which average multiple independent trees, Gradient Boosted Trees emphasize boosting weak learners to enhance model performance.

Core Differences Between Random Forest and Gradient Boosted Trees

Random Forest utilizes bagging by constructing multiple decision trees independently on bootstrapped samples and aggregating their predictions to reduce variance. Gradient Boosted Trees build trees sequentially, where each tree corrects the errors of the previous ones by optimizing a loss function, effectively minimizing bias. This fundamental difference results in Random Forest being more robust to overfitting and easier to parallelize, while Gradient Boosted Trees typically achieve higher accuracy through iterative refinement.

Algorithmic Workflow: Random Forest vs Gradient Boosted Trees

Random Forest builds multiple decision trees independently using bootstrap samples and aggregates their outputs through majority voting or averaging to reduce variance and prevent overfitting. Gradient Boosted Trees sequentially build trees where each new tree corrects errors made by previous trees by optimizing a loss function via gradient descent, resulting in a model with lower bias but potentially higher overfitting risk. The Random Forest's parallel training contrasts with the sequential, stage-wise addition of trees in Gradient Boosted Trees, influencing both training speed and model interpretability.

Performance Comparison in Classification Tasks

Random Forest achieves strong performance in classification tasks by averaging multiple deep decision trees, reducing overfitting and variance. Gradient Boosted Trees sequentially optimize weak learners, often resulting in higher accuracy and better handling of complex patterns but with increased training time and sensitivity to hyperparameters. Empirical studies show Gradient Boosted Trees typically outperform Random Forests on datasets with intricate feature interactions, while Random Forests excel in stability and faster inference.

Handling Overfitting: Techniques and Effectiveness

Random Forest mitigates overfitting by averaging predictions from multiple decorrelated decision trees, leveraging bootstrap aggregation (bagging) and feature randomness to enhance model generalization. Gradient Boosted Trees employ sequential tree building with learning rate control, early stopping, and regularization techniques like shrinkage and subsampling to prevent over-complexity and reduce overfitting risk. Empirical evidence suggests Gradient Boosted Trees typically achieve higher accuracy but require careful hyperparameter tuning to balance bias-variance tradeoff more effectively than Random Forest.

Interpretability: Model Transparency and Feature Importance

Random Forest offers greater interpretability through straightforward model transparency and easy extraction of feature importance via mean decrease impurity or permutation importance metrics. Gradient Boosted Trees provide high predictive accuracy but often at the expense of reduced transparency due to sequential model updates and complex interactions. Techniques like SHAP values are essential for understanding feature contributions in both models, with Random Forest typically favored in scenarios prioritizing clearer interpretability.

Computational Efficiency and Scalability

Random Forest algorithms generally offer higher computational efficiency due to their parallelizable nature, allowing trees to be built simultaneously, which scales well with large datasets and multiple processors. Gradient Boosted Trees, while often achieving better predictive accuracy, involve sequential tree building that can slow down training time and pose challenges for scalability on very large datasets. Optimizing Gradient Boosting frameworks like XGBoost or LightGBM can improve performance but typically require more computational resources compared to Random Forest implementations.

Real-World Applications in Data Science

Random Forest and Gradient Boosted Trees are pivotal in data science for tasks like classification, regression, and feature selection across industries such as finance, healthcare, and marketing. Random Forest excels in handling large datasets with noisy features due to its ensemble of decision trees providing robustness against overfitting. Gradient Boosted Trees deliver higher predictive accuracy by sequentially minimizing errors, making them ideal for complex patterns in customer churn prediction and fraud detection.

Choosing the Right Model for Your Data Science Project

Random Forests excel in handling large datasets with high dimensionality and noisy features due to their robust averaging of multiple decision trees, reducing overfitting and improving generalization. Gradient Boosted Trees optimize predictive accuracy by sequentially correcting errors from prior models, making them ideal for complex datasets where fine-tuned performance is critical, albeit with higher computational cost and sensitivity to hyperparameters. Selecting the appropriate model depends on dataset size, feature interactions, and project goals, balancing Random Forest's stability against Gradient Boosted Trees' precision for optimal data science outcomes.

Random Forest vs Gradient Boosted Trees Infographic

techiny.com

techiny.com