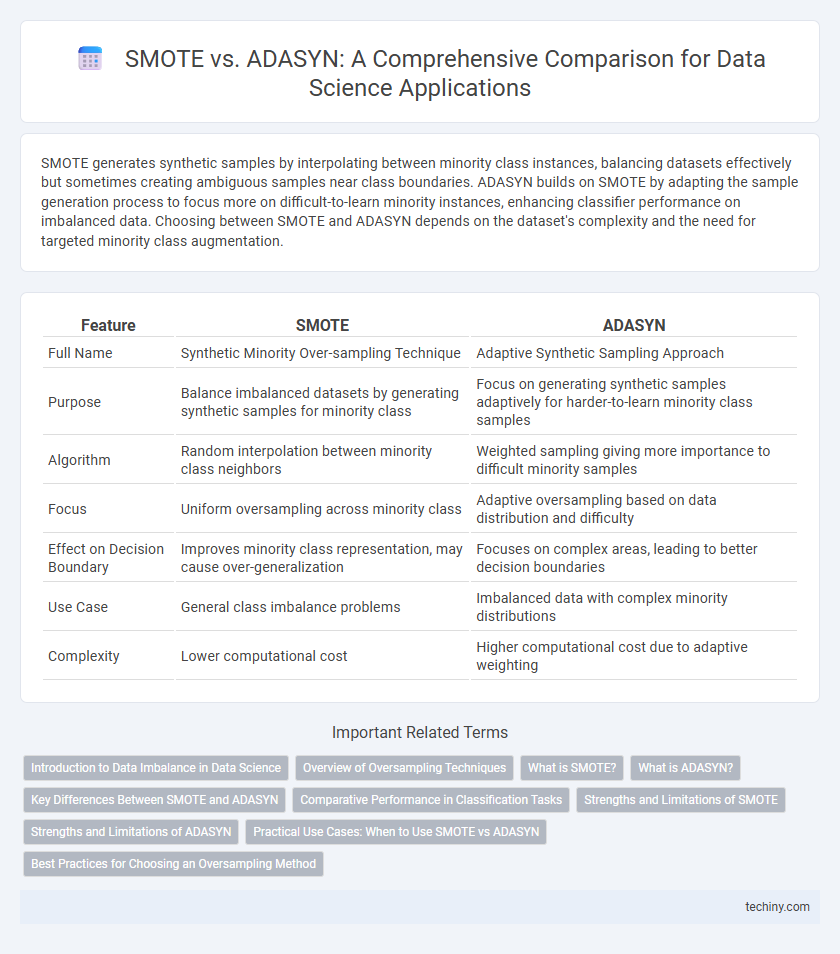

SMOTE generates synthetic samples by interpolating between minority class instances, balancing datasets effectively but sometimes creating ambiguous samples near class boundaries. ADASYN builds on SMOTE by adapting the sample generation process to focus more on difficult-to-learn minority instances, enhancing classifier performance on imbalanced data. Choosing between SMOTE and ADASYN depends on the dataset's complexity and the need for targeted minority class augmentation.

Table of Comparison

| Feature | SMOTE | ADASYN |

|---|---|---|

| Full Name | Synthetic Minority Over-sampling Technique | Adaptive Synthetic Sampling Approach |

| Purpose | Balance imbalanced datasets by generating synthetic samples for minority class | Focus on generating synthetic samples adaptively for harder-to-learn minority class samples |

| Algorithm | Random interpolation between minority class neighbors | Weighted sampling giving more importance to difficult minority samples |

| Focus | Uniform oversampling across minority class | Adaptive oversampling based on data distribution and difficulty |

| Effect on Decision Boundary | Improves minority class representation, may cause over-generalization | Focuses on complex areas, leading to better decision boundaries |

| Use Case | General class imbalance problems | Imbalanced data with complex minority distributions |

| Complexity | Lower computational cost | Higher computational cost due to adaptive weighting |

Introduction to Data Imbalance in Data Science

Data imbalance in data science occurs when certain classes in a dataset are underrepresented, leading to biased model predictions and poor generalization. SMOTE (Synthetic Minority Over-sampling Technique) and ADASYN (Adaptive Synthetic Sampling) address this challenge by generating synthetic samples for minority classes to balance the dataset. SMOTE creates samples along the line segments between minority class neighbors, while ADASYN focuses on generating more samples in harder-to-learn regions, improving classifier performance on imbalanced data.

Overview of Oversampling Techniques

SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic samples by interpolating between existing minority class instances to address class imbalance. ADASYN (Adaptive Synthetic Sampling) builds on SMOTE by focusing more on difficult-to-learn examples, adaptively generating more synthetic data where the minority class distribution is sparse. Both techniques improve model performance by reducing bias toward the majority class, but ADASYN emphasizes harder-to-classify regions for enhanced learning.

What is SMOTE?

SMOTE (Synthetic Minority Over-sampling Technique) is a popular data augmentation method used to address class imbalance in machine learning by generating synthetic samples for the minority class. It works by interpolating between existing minority class instances and their nearest neighbors to create new, plausible data points. This technique helps improve the performance of classifiers by providing a more balanced training dataset, reducing bias towards the majority class.

What is ADASYN?

ADASYN (Adaptive Synthetic Sampling) is an advanced oversampling technique designed to address class imbalance in datasets by generating synthetic samples for minority classes based on data distribution and learning difficulty. It adaptively shifts the decision boundary toward harder-to-learn examples, improving classifier performance on imbalanced data. Unlike SMOTE, ADASYN allocates more synthetic samples to minority class instances that are harder to classify, enhancing model generalization in imbalanced classification tasks.

Key Differences Between SMOTE and ADASYN

SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic samples by interpolating between existing minority class instances uniformly, while ADASYN (Adaptive Synthetic Sampling) focuses on generating more synthetic data near minority samples that are harder to classify, adapting to the data distribution. SMOTE treats all minority class samples equally during oversampling, whereas ADASYN dynamically weighs minority samples based on their learning difficulty, leading to targeted sampling. This makes ADASYN more effective in handling imbalanced datasets where class overlap or complex decision boundaries exist.

Comparative Performance in Classification Tasks

SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic samples by interpolating between minority class instances, effectively balancing datasets and improving classifier performance on imbalanced data. ADASYN (Adaptive Synthetic Sampling) extends SMOTE by focusing more on harder-to-learn minority class examples, enhancing the classifier's ability to handle borderline cases. Comparative studies reveal ADASYN often yields higher recall and F1-scores in complex classification tasks, while SMOTE provides more stable precision, making the choice dependent on the specific imbalance severity and classification goals.

Strengths and Limitations of SMOTE

SMOTE (Synthetic Minority Over-sampling Technique) excels at generating synthetic samples by interpolating between minority class instances, which effectively balances imbalanced datasets and improves classifier performance. Its strength lies in reducing overfitting by creating diverse synthetic examples rather than duplicating existing ones, but it struggles with noisy data and overlapping class boundaries, potentially leading to class ambiguity. SMOTE's limitation includes the inability to adaptively focus on harder-to-learn examples, which newer techniques like ADASYN address by emphasizing synthetic sample generation in complex regions.

Strengths and Limitations of ADASYN

ADASYN excels at generating synthetic samples for minority classes by adapting the number of samples created based on local data density, which improves classifier performance on imbalanced datasets. Its strength lies in focusing more on difficult-to-learn examples, enhancing decision boundaries and reducing bias toward majority classes. However, ADASYN can introduce noise by oversampling borderline or outlier minority instances and may increase computational complexity compared to simpler methods like SMOTE.

Practical Use Cases: When to Use SMOTE vs ADASYN

SMOTE is ideal for datasets with moderate class imbalance where generating synthetic minority samples evenly helps improve classifier performance. ADASYN excels in highly imbalanced datasets by adaptively generating more synthetic data near complex or harder-to-learn minority class examples. Practical use involves selecting SMOTE for balanced data augmentation and ADASYN when focusing on boundary minority instances to boost model robustness.

Best Practices for Choosing an Oversampling Method

When selecting an oversampling method in data science, SMOTE is preferred for balanced datasets with moderate class imbalance due to its ability to generate synthetic samples evenly across minority classes. ADASYN is better suited for highly imbalanced datasets as it focuses on creating more synthetic samples in regions where minority class data is sparse, improving classifier performance on difficult-to-learn examples. Evaluating dataset complexity, degree of imbalance, and model sensitivity to noise is crucial for choosing between SMOTE and ADASYN to optimize classification accuracy and robustness.

SMOTE vs ADASYN Infographic

techiny.com

techiny.com