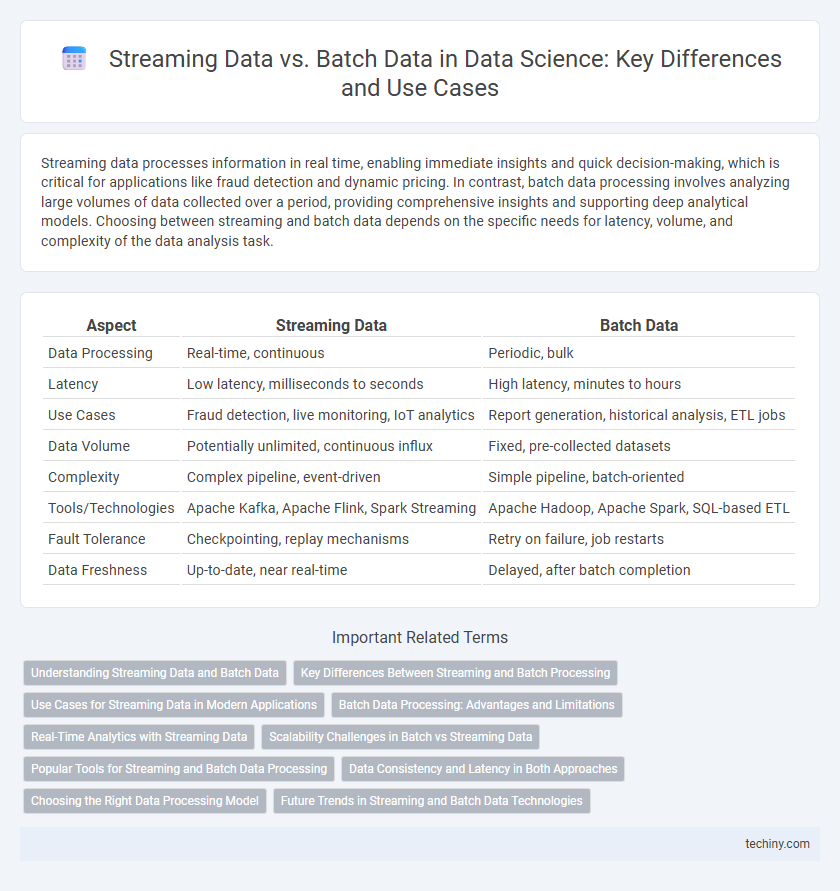

Streaming data processes information in real time, enabling immediate insights and quick decision-making, which is critical for applications like fraud detection and dynamic pricing. In contrast, batch data processing involves analyzing large volumes of data collected over a period, providing comprehensive insights and supporting deep analytical models. Choosing between streaming and batch data depends on the specific needs for latency, volume, and complexity of the data analysis task.

Table of Comparison

| Aspect | Streaming Data | Batch Data |

|---|---|---|

| Data Processing | Real-time, continuous | Periodic, bulk |

| Latency | Low latency, milliseconds to seconds | High latency, minutes to hours |

| Use Cases | Fraud detection, live monitoring, IoT analytics | Report generation, historical analysis, ETL jobs |

| Data Volume | Potentially unlimited, continuous influx | Fixed, pre-collected datasets |

| Complexity | Complex pipeline, event-driven | Simple pipeline, batch-oriented |

| Tools/Technologies | Apache Kafka, Apache Flink, Spark Streaming | Apache Hadoop, Apache Spark, SQL-based ETL |

| Fault Tolerance | Checkpointing, replay mechanisms | Retry on failure, job restarts |

| Data Freshness | Up-to-date, near real-time | Delayed, after batch completion |

Understanding Streaming Data and Batch Data

Streaming data is generated continuously from sources like sensors, social media, and financial transactions, providing real-time insights crucial for immediate decision-making. Batch data involves collecting large volumes of data over time, then processing it in bulk, suitable for complex analytics and historical trend analysis. Understanding the trade-offs between streaming and batch data processing enables organizations to optimize data workflows for latency, accuracy, and resource efficiency.

Key Differences Between Streaming and Batch Processing

Streaming data processes information in real-time, enabling immediate analytics and rapid decision-making by continuously ingesting and analyzing data as it arrives. Batch processing handles large volumes of data collected over a period, executing complex computations and transformations in scheduled intervals for thorough data aggregation and historical analysis. Key differences include latency, with streaming offering low latency and batch processing exhibiting higher latency, and the use case adaptability where streaming suits time-sensitive applications while batch is optimal for comprehensive, resource-intensive workflows.

Use Cases for Streaming Data in Modern Applications

Streaming data enables real-time analytics in applications such as fraud detection, where immediate response to suspicious transactions is critical. It powers live recommendation engines in e-commerce and media platforms by continuously processing user interactions. IoT device monitoring leverages streaming data to promptly identify anomalies and trigger alerts, ensuring operational efficiency and safety.

Batch Data Processing: Advantages and Limitations

Batch data processing offers the advantage of handling large volumes of data efficiently, enabling complex computations and thorough data transformations within scheduled time frames. It excels in scenarios requiring historical data analysis, data warehousing, and offline reporting due to its ability to process data in bulk with high accuracy. However, batch processing suffers from latency issues as it does not provide real-time insights, making it less suitable for time-sensitive applications like fraud detection or live recommendation systems.

Real-Time Analytics with Streaming Data

Streaming data enables real-time analytics by processing continuous data flows instantly, allowing immediate insights and rapid decision-making. Unlike batch data, which aggregates information over time and updates results periodically, streaming data analytics supports dynamic environments with time-sensitive applications such as fraud detection, predictive maintenance, and live customer personalization. This continuous data ingestion and analysis reduces latency, enhances responsiveness, and drives more proactive business strategies.

Scalability Challenges in Batch vs Streaming Data

Batch data processing faces scalability challenges due to fixed-size data chunks, leading to high latency and resource spikes during large data loads. Streaming data systems, designed for continuous data flow, encounter challenges in maintaining low-latency processing and state management at scale. Efficient distributed architectures and real-time data partitioning are critical for overcoming scalability issues in both paradigms.

Popular Tools for Streaming and Batch Data Processing

Apache Kafka and Apache Flink are popular tools for streaming data processing, enabling real-time analytics and event-driven applications. For batch data processing, Apache Hadoop and Apache Spark dominate with their robust frameworks supporting large-scale data transformation and analysis. These platforms optimize performance for specific workloads, with Kafka handling continuous data streams and Hadoop's MapReduce excelling in processing huge static datasets.

Data Consistency and Latency in Both Approaches

Streaming data offers low latency by processing data in real time, enabling immediate insights, while batch data provides higher data consistency through processing large, complete datasets at scheduled intervals. Streaming systems face challenges maintaining strict consistency due to continuous data flow and potential out-of-order events, whereas batch processing ensures consistency by handling well-defined, static data snapshots. The trade-off between latency and consistency requires selecting the appropriate approach based on application requirements such as real-time analytics or comprehensive data accuracy.

Choosing the Right Data Processing Model

Selecting the appropriate data processing model depends on the specific requirements of a use case, with streaming data offering real-time insights by processing continuous data flows, while batch data allows for large-scale analysis by handling massive datasets at scheduled intervals. Streaming data processing frameworks like Apache Kafka and Apache Flink are optimized for low-latency applications such as fraud detection and live analytics. Batch processing tools such as Apache Hadoop and Apache Spark excel in complex computations on historical data, enabling deep data analysis and reporting.

Future Trends in Streaming and Batch Data Technologies

Future trends in streaming data technologies emphasize real-time analytics powered by advances in machine learning and edge computing, enabling rapid decision-making across industries such as finance and IoT. Batch data processing continues to evolve with enhanced scalability and integration of cloud-based platforms, facilitating complex large-scale data transformations and historical data analysis. Hybrid architectures that combine streaming and batch data processing are gaining traction, optimizing resource allocation and improving data accuracy for comprehensive business intelligence solutions.

streaming data vs batch data Infographic

techiny.com

techiny.com