The training set is a subset of data used to teach machine learning models by allowing them to learn patterns and relationships within the data. The testing set evaluates the model's performance on unseen data to ensure generalization and prevent overfitting. Proper separation between training and testing sets is crucial for reliable model validation and accurate assessment of predictive capabilities.

Table of Comparison

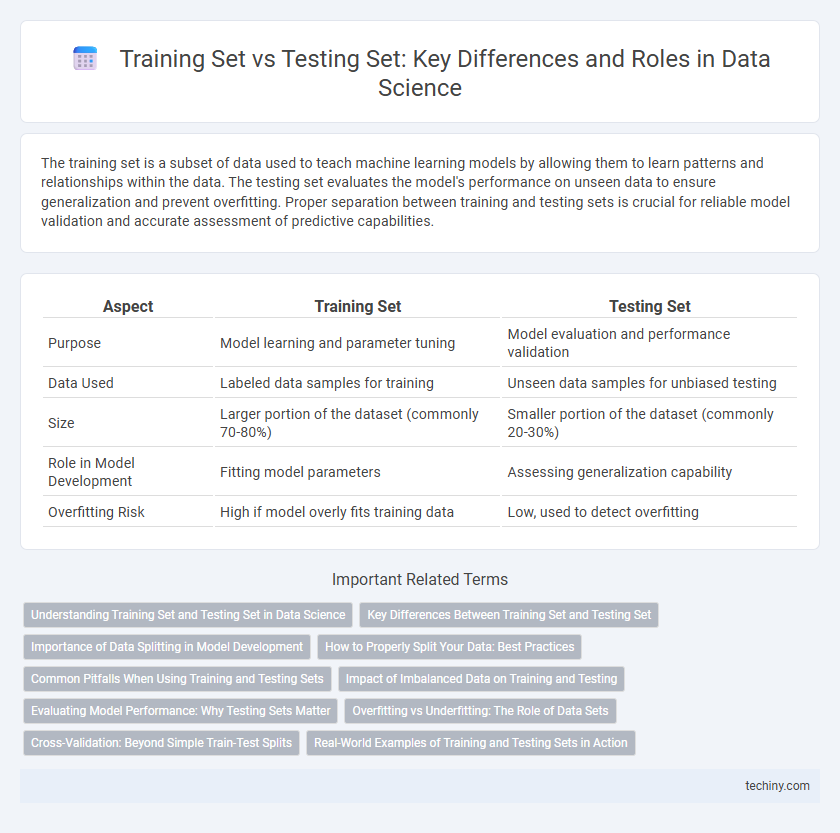

| Aspect | Training Set | Testing Set |

|---|---|---|

| Purpose | Model learning and parameter tuning | Model evaluation and performance validation |

| Data Used | Labeled data samples for training | Unseen data samples for unbiased testing |

| Size | Larger portion of the dataset (commonly 70-80%) | Smaller portion of the dataset (commonly 20-30%) |

| Role in Model Development | Fitting model parameters | Assessing generalization capability |

| Overfitting Risk | High if model overly fits training data | Low, used to detect overfitting |

Understanding Training Set and Testing Set in Data Science

The training set in data science consists of labeled data used to teach machine learning models to identify patterns and make predictions. The testing set, reserved from the original dataset, evaluates the model's performance on unseen data to assess generalization and avoid overfitting. Proper separation between training and testing sets ensures accurate model validation and reliable performance metrics.

Key Differences Between Training Set and Testing Set

The training set is a subset of the dataset used to fit and optimize machine learning models by adjusting model parameters, while the testing set evaluates the model's performance on unseen data to assess generalization capabilities. Key differences include the training set's role in model learning versus the testing set's function as an unbiased benchmark, with the testing set strictly excluded from the training process to prevent overfitting. Accurate separation of these sets is essential for reliable model validation and performance estimation in data science workflows.

Importance of Data Splitting in Model Development

Data splitting into training and testing sets is crucial for accurate model evaluation and preventing overfitting in data science. The training set enables the model to learn patterns from historical data, while the testing set provides an unbiased assessment of model performance on unseen data. Proper data splitting techniques enhance the reliability and generalizability of predictive models in real-world applications.

How to Properly Split Your Data: Best Practices

Properly splitting your data into training and testing sets involves allocating typically 70-80% of the data for training and 20-30% for testing to ensure model generalization. Employ stratified sampling techniques especially for imbalanced datasets to maintain the same distribution of classes across both sets. Always shuffle data before splitting and use cross-validation methods to optimize model performance and evaluation reliability.

Common Pitfalls When Using Training and Testing Sets

Common pitfalls when using training and testing sets include data leakage, where information from the testing set inadvertently influences the training process, leading to overly optimistic performance estimates. Another frequent issue is an imbalanced distribution between sets, causing biased model evaluation and poor generalization on unseen data. Overfitting to the training set often results from inadequate separation of datasets, undermining the reliability of model validation.

Impact of Imbalanced Data on Training and Testing

Imbalanced data in training sets often leads to biased models that favor the majority class, resulting in poor generalization and inaccurate predictions on the testing set. Models trained on skewed distributions tend to exhibit high precision but low recall for minority classes during evaluation, highlighting challenges in model robustness. Addressing imbalance through techniques like oversampling, undersampling, or synthetic data generation is crucial to improve model performance and reliability across both training and testing phases.

Evaluating Model Performance: Why Testing Sets Matter

Testing sets provide an unbiased assessment of a machine learning model's performance by evaluating its accuracy on unseen data, crucial for detecting overfitting in the training set. They enable the measurement of key metrics like precision, recall, and F1 score, which reflect the model's ability to generalize beyond the training data. Reliable evaluation using testing sets ensures robust deployment of predictive models in real-world applications.

Overfitting vs Underfitting: The Role of Data Sets

Training sets are used to fit machine learning models, directly affecting model complexity and the risk of overfitting, where the model memorizes training data but performs poorly on new data. Testing sets evaluate model generalization and highlight underfitting issues, indicating when the model is too simple to capture underlying patterns. Balancing training and testing data sizes is critical to optimize model accuracy while minimizing both overfitting and underfitting.

Cross-Validation: Beyond Simple Train-Test Splits

Cross-validation enhances model evaluation by partitioning the dataset into multiple training and testing subsets, reducing bias from simple train-test splits. Techniques like k-fold cross-validation provide more reliable performance metrics by ensuring each data point is used for both training and testing. This approach improves generalization and robustness in data science models, especially when dataset sizes are limited.

Real-World Examples of Training and Testing Sets in Action

Training sets in data science typically contain labeled data such as numerous images of handwritten digits used by models like MNIST for digit recognition, while testing sets consist of unseen data to evaluate model accuracy. For example, in fraud detection systems, historical transaction data serves as the training set to teach algorithms normal vs. fraudulent patterns, and real-time transactions comprise the testing set to assess real-world effectiveness. Autonomous vehicle systems rely on annotated driving scenarios in training sets to learn conditions, whereas new road environments act as testing sets to validate decision-making capabilities.

Training Set vs Testing Set Infographic

techiny.com

techiny.com