Type I error occurs when a true null hypothesis is incorrectly rejected, leading to a false positive result, while Type II error happens when a false null hypothesis is not rejected, causing a false negative outcome. Minimizing Type I error reduces the risk of identifying a relationship or effect that does not exist, whereas minimizing Type II error improves the detection of actual effects or patterns in the data. Balancing these errors is crucial in data science to ensure reliable hypothesis testing and accurate model evaluation.

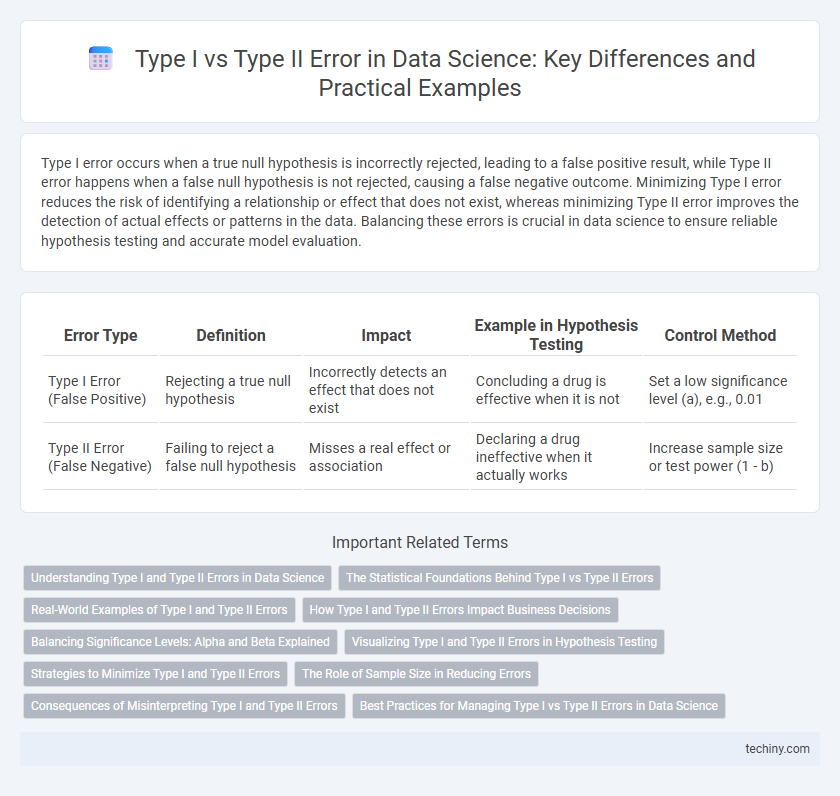

Table of Comparison

| Error Type | Definition | Impact | Example in Hypothesis Testing | Control Method |

|---|---|---|---|---|

| Type I Error (False Positive) | Rejecting a true null hypothesis | Incorrectly detects an effect that does not exist | Concluding a drug is effective when it is not | Set a low significance level (a), e.g., 0.01 |

| Type II Error (False Negative) | Failing to reject a false null hypothesis | Misses a real effect or association | Declaring a drug ineffective when it actually works | Increase sample size or test power (1 - b) |

Understanding Type I and Type II Errors in Data Science

Type I error in data science occurs when a true null hypothesis is incorrectly rejected, leading to false positives, while Type II error happens when a false null hypothesis is not rejected, causing false negatives. Balancing the significance level (alpha) and power (1 - beta) of statistical tests helps minimize these errors, which is crucial for accurate predictive modeling and hypothesis testing. Understanding Type I and Type II errors ensures data-driven decisions are reliable and reduces risks of misinterpretation in machine learning and data analysis.

The Statistical Foundations Behind Type I vs Type II Errors

Type I error occurs when a true null hypothesis is incorrectly rejected, representing a false positive, while Type II error happens when a false null hypothesis is not rejected, resulting in a false negative. The statistical foundations of these errors lie in hypothesis testing, where significance level (alpha) controls the probability of Type I error, and statistical power (1 - beta) relates to the likelihood of avoiding Type II error. Balancing alpha and beta is crucial for valid inference in data science, impacting model reliability and decision-making accuracy.

Real-World Examples of Type I and Type II Errors

Type I error, or false positive, occurs in medical testing when a healthy patient is incorrectly diagnosed with a disease, leading to unnecessary treatments and anxiety. Type II error, or false negative, happens when a cancer screening fails to detect a tumor, delaying crucial intervention and worsening patient outcomes. In fraud detection systems, Type I errors flag legitimate transactions as fraudulent, inconveniencing customers, while Type II errors allow fraudulent activities to proceed undetected, causing financial losses.

How Type I and Type II Errors Impact Business Decisions

Type I errors, or false positives, lead businesses to act on incorrect signals, potentially causing unnecessary expenditures or strategic shifts. Type II errors, or false negatives, result in missed opportunities by failing to detect significant effects or trends, hindering competitive advantage. Balancing these errors through appropriate significance levels and power analysis is crucial for data-driven decision-making accuracy.

Balancing Significance Levels: Alpha and Beta Explained

Balancing significance levels involves managing Type I error (alpha), the probability of falsely rejecting a true null hypothesis, and Type II error (beta), the probability of failing to reject a false null hypothesis. Optimizing alpha and beta is crucial in data science to maintain hypothesis test reliability and ensure accurate decision-making. Adjusting sample size and effect size helps control these errors, improving statistical power and minimizing incorrect inferences.

Visualizing Type I and Type II Errors in Hypothesis Testing

Visualizing Type I and Type II errors in hypothesis testing involves plotting the distributions of the null and alternative hypotheses along with the critical value that separates rejection and non-rejection regions. The area under the null hypothesis curve beyond the critical value represents the Type I error (a), indicating the probability of incorrectly rejecting a true null hypothesis. The area under the alternative hypothesis curve within the non-rejection region represents the Type II error (b), showing the likelihood of failing to reject a false null hypothesis and highlighting the trade-off between sensitivity and specificity in statistical inference.

Strategies to Minimize Type I and Type II Errors

Minimizing Type I and Type II errors in data science requires careful balance between sensitivity and specificity, achieved through techniques such as adjusting significance levels (alpha) to control false positives while increasing sample sizes to reduce false negatives. Implementing cross-validation methods and using robust statistical tests can enhance model reliability, thereby optimizing error rates. Regularly performing power analysis ensures sufficient test sensitivity to detect true effects, effectively reducing Type II errors without inflating Type I errors.

The Role of Sample Size in Reducing Errors

Increasing sample size in data science significantly reduces Type I error by providing more accurate estimates and tighter confidence intervals, thus lowering the chance of false positives. Larger samples enhance statistical power, which decreases Type II error by improving the detection of true effects or differences. Optimizing sample size balances the risks of both errors, ensuring more reliable hypothesis testing outcomes.

Consequences of Misinterpreting Type I and Type II Errors

Misinterpreting Type I error, or false positive, can lead to the wrongful rejection of a true null hypothesis, resulting in wasted resources and potential misinformation in data-driven decisions. Ignoring Type II error, or false negative, increases the risk of overlooking significant effects, which may cause missed opportunities or failure to detect critical insights in predictive models. Balancing these errors is crucial in hypothesis testing to optimize model accuracy and maintain the integrity of data science outcomes.

Best Practices for Managing Type I vs Type II Errors in Data Science

Minimizing Type I errors involves setting a stringent significance level and using robust validation techniques like cross-validation to avoid false positives in data science models. Controlling Type II errors requires increasing sample sizes and improving model sensitivity through feature selection and tuning to reduce false negatives. Balancing these errors is achieved by context-driven threshold adjustments and continuous performance monitoring to optimize decision-making accuracy.

type I error vs type II error Infographic

techiny.com

techiny.com