Cache memory is faster than main memory because it keeps frequently accessed data close to the processor, which significantly reduces access latency. Main memory, typically DRAM-based RAM, has a much larger capacity but slower access times and serves as the primary working storage for active programs and data. Optimizing how the cache and main memory interact improves overall system performance and efficiency.

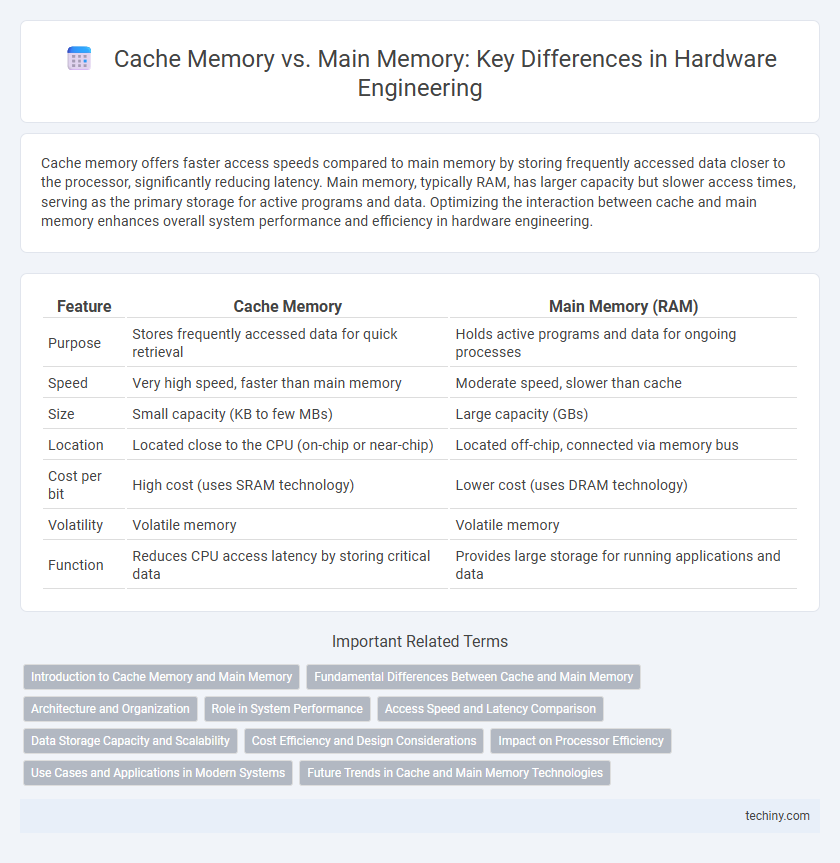

Table of Comparison

| Feature | Cache Memory | Main Memory (RAM) |
|---|---|---|
| Purpose | Stores frequently accessed data for quick retrieval | Holds active programs and data for ongoing processes |
| Speed | Very high speed, faster than main memory | Moderate speed, slower than cache |
| Size | Small capacity (KB to tens of MB) | Large capacity (GB) |
| Location | Located close to the CPU (on-chip or near-chip) | Located off-chip, connected via memory bus |
| Cost per bit | High cost (uses SRAM technology) | Lower cost (uses DRAM technology) |
| Volatility | Volatile memory | Volatile memory |
| Function | Reduces CPU access latency by storing critical data | Provides large storage for running applications and data |

Introduction to Cache Memory and Main Memory

Cache memory is a small, high-speed store located close to the CPU. It temporarily holds frequently accessed data and instructions, which significantly reduces access time compared to main memory. Main memory, or RAM, provides larger storage capacity for active programs and data but operates at slower speeds than cache. The interplay between the two improves overall system performance by balancing speed against capacity.

Fundamental Differences Between Cache and Main Memory

Cache memory holds the data and instructions the CPU is most likely to need next, whereas main memory (RAM) provides larger, slower storage for all active processes and data. Cache is built from SRAM, which has lower latency but costs more per bit, while main memory typically uses DRAM, which is denser and cheaper per bit. The fundamental difference lies in access speed, size, and purpose: cache improves CPU performance by minimizing access time to critical data, whereas main memory offers a broader workspace for ongoing computations.

Architecture and Organization

Cache memory is organized into fixed-size lines (blocks) and uses a mapping technique, either direct-mapped, fully associative, or set-associative, to decide where a given memory block may be placed. Main memory, or RAM, is larger and slower; its DRAM is organized into channels, ranks, banks, rows, and columns and is accessed through a memory controller. Caches are built in multiple levels (L1, L2, L3), with each level larger and slower than the one before it, while main memory design emphasizes capacity and cost per bit at the expense of latency.
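To make the mapping techniques concrete, here is a minimal sketch of how a byte address could be split into a tag, a set index, and a line offset. The function name `split_address`, the 32 KiB cache size, and the 64-byte line size are assumptions chosen for illustration, and the sketch assumes all sizes are powers of two.

```python
def split_address(address: int, cache_bytes: int, line_bytes: int, ways: int) -> tuple[int, int, int]:
    """Split a byte address into (tag, set_index, offset).

    ways=1 models a direct-mapped cache. ways equal to the total number
    of lines models a fully associative cache (one set, index always 0).
    Assumes cache_bytes, line_bytes, and ways are powers of two.
    """
    num_sets = cache_bytes // (line_bytes * ways)
    offset_bits = line_bytes.bit_length() - 1  # log2(line_bytes)
    index_bits = num_sets.bit_length() - 1     # log2(num_sets)

    offset = address & (line_bytes - 1)
    set_index = (address >> offset_bits) & (num_sets - 1)
    tag = address >> (offset_bits + index_bits)
    return tag, set_index, offset


# Hypothetical cache geometry: 32 KiB total, 64-byte lines (512 lines).
addr = 0x12345678
direct = split_address(addr, 32 * 1024, 64, ways=1)
eight_way = split_address(addr, 32 * 1024, 64, ways=8)
fully = split_address(addr, 32 * 1024, 64, ways=512)

print("direct-mapped     :", direct)
print("8-way set-assoc.  :", eight_way)
print("fully associative :", fully)
```

As associativity grows, the number of sets shrinks, so fewer address bits select the set and more bits go into the tag that must be compared on every lookup.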

Role in System Performance

Cache memory significantly enhances system performance by providing high-speed data access closer to the CPU, reducing latency compared to main memory (RAM). Main memory offers larger capacity but slower access times, making it crucial for storing active programs and data that exceed cache size. Efficient cache management and hierarchy directly impact CPU efficiency, minimizing bottlenecks caused by slower main memory access.

Access Speed and Latency Comparison

Cache memory offers significantly faster access than main memory: an L1 cache hit typically completes in a few processor cycles (on the order of a nanosecond), while a DRAM access typically takes tens of nanoseconds or more. The low latency of cache comes from its close proximity to the CPU and its small, fast SRAM cells, whereas main memory relies on slower DRAM reached over a memory bus. This speed differential is critical in reducing processor stall cycles and enhancing overall system performance.
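The effect of this latency gap is often summarized with the standard average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The sketch below applies it to a single cache level; the 1 ns hit time and 80 ns miss penalty are illustrative assumptions, not measurements of any particular system.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Illustrative (assumed) latencies: 1 ns cache hit, 80 ns main memory penalty.
CACHE_HIT_NS = 1.0
DRAM_PENALTY_NS = 80.0

for rate in (0.01, 0.05, 0.20):
    result = amat(CACHE_HIT_NS, rate, DRAM_PENALTY_NS)
    print(f"miss rate {rate:.0%}: AMAT = {result:.1f} ns")
```

Under these assumed numbers, even a 5% miss rate makes the average access several times slower than a pure cache hit, which is why hit rate matters so much.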

Data Storage Capacity and Scalability

Cache memory has limited capacity, typically ranging from tens of kilobytes for an L1 cache to tens of megabytes for a shared last-level cache, and is optimized for speed and immediate data access. Main memory offers significantly larger capacity, measured in gigabytes, to support extensive data handling and application execution. Main memory also scales more easily because its modular design allows expansion by adding or replacing modules, whereas cache scalability is constrained by on-chip area and cost.

Cost Efficiency and Design Considerations

Cache memory costs far more per bit than main memory, but a small amount of it close to the CPU can serve most accesses at low latency, which makes it cost-effective where access time matters most. Design considerations involve balancing cache size, associativity, and block size to optimize hit rates while minimizing complexity, power, and cost. Main memory, though larger and cheaper per bit, has higher latency because it is reached through a memory controller and an off-chip bus, and this shapes overall system performance and design trade-offs.
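The interaction between associativity and hit rate can be explored with a small simulation. The following is a minimal sketch of a set-associative cache with LRU replacement, run on a synthetic trace that alternates between two regions mapping to the same sets. The function names, the 1 KiB cache size, the 64-byte lines, and the trace itself are all assumptions made for illustration; real workloads behave differently.

```python
from collections import OrderedDict


def hit_rate(addresses, cache_bytes, line_bytes, ways):
    """Simulate a set-associative cache with LRU replacement; return the hit rate."""
    num_sets = cache_bytes // (line_bytes * ways)
    # One OrderedDict per set: keys are tags, ordered from least to most recently used.
    sets = [OrderedDict() for _ in range(num_sets)]
    hits = 0
    for addr in addresses:
        block = addr // line_bytes
        lines = sets[block % num_sets]
        tag = block // num_sets
        if tag in lines:
            hits += 1
            lines.move_to_end(tag)         # mark as most recently used
        else:
            if len(lines) >= ways:
                lines.popitem(last=False)  # evict the least recently used line
            lines[tag] = True
    return hits / len(addresses)


def interleaved_trace(n=256, stride=4, gap=4096):
    """Alternate between two regions 'gap' bytes apart (hypothetical workload)."""
    trace = []
    for i in range(n):
        trace.append(i * stride)
        trace.append(gap + i * stride)
    return trace


trace = interleaved_trace()
for ways in (1, 2, 4):
    rate = hit_rate(trace, cache_bytes=1024, line_bytes=64, ways=ways)
    print(f"{ways}-way: hit rate = {rate:.2%}")
```

On this particular trace the two regions collide in every set, so the direct-mapped configuration evicts a line on each access and never hits, while two or more ways let both lines coexist and only the first touch of each line misses. This is a deliberately adversarial pattern; it shows the mechanism rather than typical hit rates.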

Impact on Processor Efficiency

Cache memory, with its low latency, significantly improves processor efficiency by reducing the time required to fetch frequently used instructions and data. Main memory, despite its larger capacity, has higher access latency and lower bandwidth, so every access that misses in the cache and must be served from main memory can stall the processor for many cycles. Efficient cache hierarchies minimize these costly main memory accesses, directly improving overall computational performance.
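One common way to quantify these stalls is to add memory stall cycles to a processor's base cycles per instruction (CPI). The sketch below uses the textbook relation: stall cycles per instruction equal memory references per instruction times miss rate times miss penalty. All numeric inputs are assumed values for illustration, and the model ignores effects such as out-of-order execution and prefetching that can hide part of the penalty.

```python
def effective_cpi(base_cpi: float,
                  mem_refs_per_instr: float,
                  miss_rate: float,
                  miss_penalty_cycles: float) -> float:
    """Base CPI plus average memory stall cycles per instruction."""
    stall_cycles = mem_refs_per_instr * miss_rate * miss_penalty_cycles
    return base_cpi + stall_cycles


# Assumed workload: base CPI of 1.0, 0.3 memory references per instruction,
# and a 200-cycle penalty for going to main memory.
for rate in (0.0, 0.02, 0.10):
    cpi = effective_cpi(1.0, 0.3, rate, 200)
    print(f"miss rate {rate:.0%}: effective CPI = {cpi:.2f}")
```

With these assumptions, a 2% miss rate already more than doubles the CPI relative to a perfect cache, which illustrates why reducing main memory accesses has such a direct effect on throughput.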

Use Cases and Applications in Modern Systems

Cache memory accelerates data access by storing frequently used information close to the CPU, optimizing performance in gaming consoles, high-frequency trading platforms, and real-time data processing systems. Main memory, or RAM, supports larger storage capacity vital for running applications, multitasking, and handling operating systems in desktops, servers, and embedded devices. Modern systems integrate both memories to balance speed and capacity, enabling efficient execution of complex computations, multitasking workflows, and large-scale data analysis.

Future Trends in Cache and Main Memory Technologies

Emerging non-volatile memory technologies such as MRAM and ReRAM are being explored for cache and main memory roles because they retain data without power and can reduce standby power compared to traditional SRAM and DRAM, although matching the speed and endurance of those technologies remains a challenge. Advanced cache architectures that use machine learning for adaptive prefetching and replacement policies aim to improve data locality and reduce latency. Scaling challenges in silicon fabrication are driving innovation in 3D-stacked memory and heterogeneous memory systems to boost bandwidth and capacity for future high-performance computing workloads.

Cache memory vs Main memory Infographic

techiny.com
