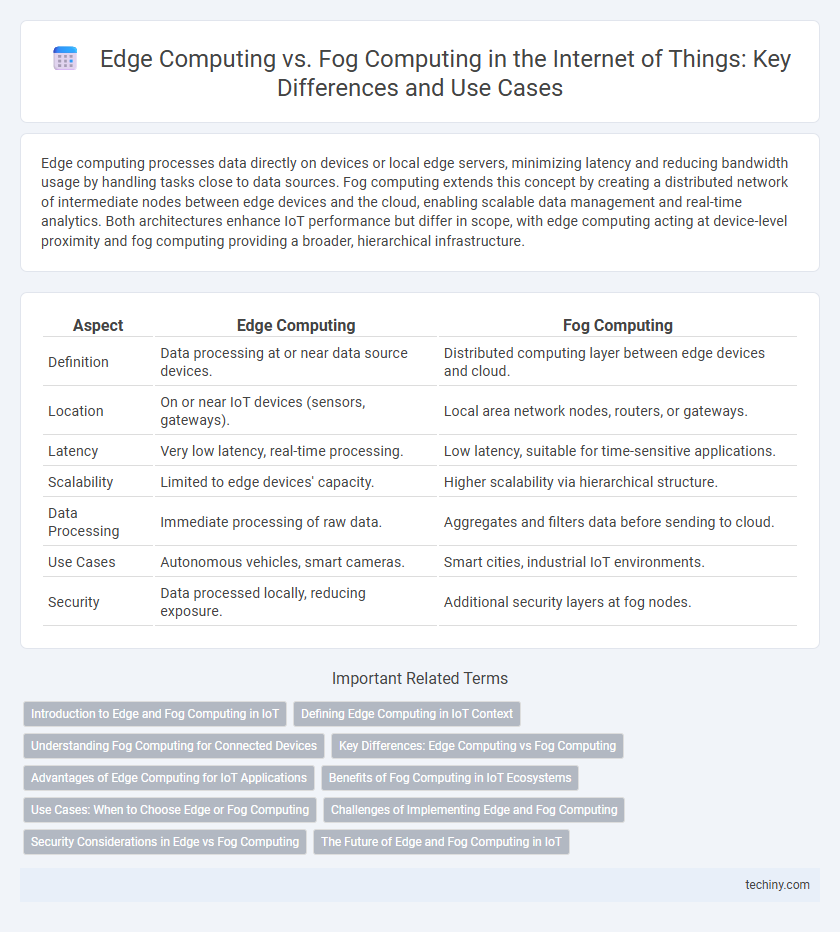

Edge computing processes data directly on devices or local edge servers, minimizing latency and reducing bandwidth usage by handling tasks close to data sources. Fog computing extends this concept by creating a distributed network of intermediate nodes between edge devices and the cloud, enabling scalable data management and real-time analytics. Both architectures enhance IoT performance but differ in scope: edge computing operates at or right next to the device, while fog computing provides a broader, hierarchical infrastructure.

Comparison Table

| Aspect | Edge Computing | Fog Computing |
|---|---|---|
| Definition | Data processing at or near data source devices. | Distributed computing layer between edge devices and cloud. |
| Location | On or near IoT devices (sensors, gateways). | Local area network nodes, routers, or gateways. |
| Latency | Very low latency, real-time processing. | Low latency, suitable for time-sensitive applications. |
| Scalability | Limited to edge devices' capacity. | Higher scalability via hierarchical structure. |
| Data Processing | Immediate processing of raw data. | Aggregates and filters data before sending to cloud. |
| Use Cases | Autonomous vehicles, smart cameras. | Smart cities, industrial IoT environments. |
| Security | Data processed locally, reducing exposure. | Additional security layers at fog nodes. |

Introduction to Edge and Fog Computing in IoT

Edge computing processes data directly on IoT devices or local edge servers, reducing latency and bandwidth use by minimizing data transmission to central cloud servers. Fog computing extends this concept by creating a distributed network of intermediate nodes between devices and the cloud, enabling more scalable data processing and real-time analytics closer to data sources. Both paradigms enhance IoT efficiency by decentralizing computing resources, improving response times, and supporting complex, data-intensive applications.

Defining Edge Computing in IoT Context

Edge computing in the Internet of Things (IoT) context refers to processing data near the source of data generation, such as IoT devices and sensors, to reduce latency and bandwidth usage. It enables real-time analytics and decision-making by minimizing the need to transmit large volumes of data to centralized cloud servers. This localized processing enhances the efficiency, security, and responsiveness of IoT systems, especially in applications like smart cities, industrial automation, and autonomous vehicles.
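
As a minimal illustration of processing data where it is generated, the Python sketch below filters sensor readings on the device and keeps only the ones worth sending upstream. The `Reading` type, the `filter_at_edge` function, and the fixed threshold are hypothetical choices for this example; a real device might run a rule engine or a small trained model instead of a simple threshold.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Reading:
    sensor_id: str
    value: float


def filter_at_edge(readings: Iterable[Reading], threshold: float) -> List[Reading]:
    """Decide on the device which readings are worth transmitting upstream.

    Only readings above the (hypothetical) threshold leave the device;
    everything else is handled locally, here simply by discarding it.
    """
    return [r for r in readings if r.value > threshold]


if __name__ == "__main__":
    raw = [Reading("temp-1", v) for v in (21.0, 21.4, 35.2, 22.1, 36.8)]
    to_send = filter_at_edge(raw, threshold=30.0)
    print(f"{len(to_send)} of {len(raw)} readings transmitted")
```

Only the two out-of-band readings would cross the network, which is the bandwidth and latency saving the paragraph describes.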

Understanding Fog Computing for Connected Devices

Fog computing extends cloud capabilities by processing data closer to connected devices, reducing latency and improving real-time decision-making in Internet of Things (IoT) environments. It runs on a distributed network of fog nodes, such as gateways, routers, and local servers, that sit between edge devices and the cloud and filter, store, and analyze data before forwarding the relevant information to the cloud. This approach improves security, bandwidth management, and system reliability in large-scale IoT deployments.
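
The filtering and summarizing role of a fog node can be sketched as follows, assuming readings arrive as `(device_id, value)` pairs from several edge devices. The function name `aggregate_at_fog` and the valid range are illustrative assumptions, not part of any specific fog platform; the point is that many raw readings collapse into one small summary per device before anything is forwarded to the cloud.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def aggregate_at_fog(
    batch: List[Tuple[str, float]],
    valid_range: Tuple[float, float] = (-40.0, 125.0),  # arbitrary example range
) -> Dict[str, Dict[str, float]]:
    """Filter and summarize readings collected from many edge devices.

    Values outside `valid_range` are treated as sensor faults and dropped.
    Each device's remaining readings are reduced to a count/mean/max summary
    that is small enough to forward to the cloud.
    """
    low, high = valid_range
    per_device: Dict[str, List[float]] = defaultdict(list)
    for device_id, value in batch:
        if low <= value <= high:
            per_device[device_id].append(value)
    # Devices whose readings were all invalid never enter per_device.
    return {
        device_id: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
        for device_id, vals in per_device.items()
    }
```

For example, four readings from two devices, one of them out of range, reduce to two summary records.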

Key Differences: Edge Computing vs Fog Computing

Edge computing processes data directly on devices or near the source of data generation, minimizing latency by reducing the distance data travels. Fog computing extends this concept by distributing data processing across multiple nodes between the edge devices and the cloud, enabling more scalable and flexible orchestration of resources. Key differences include edge computing's emphasis on real-time processing at or near sensors and fog computing's layered architecture that supports complex analytics and data management across a broader network.

Advantages of Edge Computing for IoT Applications

Edge computing enhances IoT applications by processing data closer to the source, reducing latency and improving the real-time responsiveness that time-sensitive tasks require. It decreases bandwidth usage and reduces dependence on the cloud, so devices can keep operating in environments with limited or intermittent connectivity. Because processing happens locally, less sensitive data leaves the device, which reduces its exposure in transit and helps protect sensitive IoT data.
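
One common pattern that lets an edge device keep working through limited connectivity is store-and-forward buffering: messages queue locally while the uplink is down and are delivered in order once it returns. The sketch below assumes a caller-supplied `send` function (a hypothetical stand-in for a real transport such as MQTT or HTTP) that returns `True` on success; the bounded queue drops the oldest message when full, which is only one of several possible overflow policies.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer outbound messages on an edge device while the uplink is down."""

    def __init__(self, send: Callable[[str], bool], capacity: int = 1000) -> None:
        # `send` is hypothetical: it must return True only on confirmed delivery.
        self._send = send
        # maxlen makes the deque discard its oldest entry once it is full.
        self._queue: Deque[str] = deque(maxlen=capacity)

    def publish(self, message: str) -> None:
        """Queue a message, then try to deliver everything that is pending."""
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Deliver queued messages in order; stop at the first failure.

        Returns the number of messages delivered during this call.
        """
        delivered = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # uplink still unavailable; keep the message for later
            self._queue.popleft()
            delivered += 1
        return delivered

    def pending(self) -> int:
        return len(self._queue)
```

Calling `flush()` periodically, or when the network stack reports that the link is back, drains the backlog without losing message order.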

Benefits of Fog Computing in IoT Ecosystems

Fog computing reduces latency in IoT ecosystems by processing data closer to the source, enabling real-time analytics and faster decision-making. Handling data locally limits the exposure that comes with sending everything to a single centralized system, and distributing computing tasks across multiple fog nodes lets the system scale as devices are added. Because less raw data travels to the cloud, fog computing also saves network bandwidth, lightens the load on central servers, and improves overall system reliability.
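
One simple way to spread work across fog nodes is greedy least-loaded assignment, sketched below with Python's `heapq`. Task costs are abstract units and the node names are hypothetical; real fog orchestrators typically also weigh node capacity, network distance, and data locality, which this sketch ignores.

```python
import heapq
from typing import Dict, List, Tuple


def assign_tasks(
    tasks: List[Tuple[str, float]], nodes: List[str]
) -> Dict[str, List[str]]:
    """Greedily assign each task to the currently least-loaded fog node.

    Tasks are (task_id, cost) pairs. Placing larger tasks first (the
    "longest processing time first" heuristic) tends to balance load better.
    """
    if not nodes:
        raise ValueError("at least one fog node is required")
    heap = [(0.0, node) for node in nodes]  # (current load, node name)
    heapq.heapify(heap)
    assignment: Dict[str, List[str]] = {node: [] for node in nodes}
    for task_id, cost in sorted(tasks, key=lambda t: t[1], reverse=True):
        load, node = heapq.heappop(heap)  # node with the smallest load so far
        assignment[node].append(task_id)
        heapq.heappush(heap, (load + cost, node))
    return assignment
```

With tasks costing 5, 3, 2, and 2 units on two nodes, this yields loads of 7 and 5, and adding a node simply adds another entry to the heap.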

Use Cases: When to Choose Edge or Fog Computing

Edge computing excels in real-time applications that require ultra-low latency, such as autonomous vehicles and industrial robotics, because it processes data close to the source. Fog computing suits large-scale, distributed IoT networks such as smart cities and agriculture, where it aggregates data and performs intermediate processing between edge devices and the cloud. In general, choose edge computing for immediate, localized data analysis and fog computing for broader, multi-node coordination and preprocessing in complex IoT environments.
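
The selection guidance can be expressed as a small rule of thumb. The sketch below is illustrative only: the `Workload` fields, the `choose_tier` function, and the `FOG_MAX_MS` cut-off are hypothetical, and real placement decisions depend on measured network and hardware characteristics.

```python
from dataclasses import dataclass

# Hypothetical cut-off for illustration only; a real value depends on the
# network, hardware, and application requirements.
FOG_MAX_MS = 500.0


@dataclass
class Workload:
    latency_budget_ms: float       # maximum tolerable response time
    needs_multi_device_view: bool  # must it combine data from many devices?


def choose_tier(w: Workload) -> str:
    """Rule-of-thumb placement of a workload on the edge, fog, or cloud tier."""
    if not w.needs_multi_device_view:
        # Immediate, localized analysis stays on the device or local edge server.
        return "edge"
    if w.latency_budget_ms <= FOG_MAX_MS:
        # Multi-device coordination that is still time-sensitive.
        return "fog"
    # Latency-tolerant analytics spanning many sites can go to the cloud.
    return "cloud"
```

For instance, a collision-avoidance check on a single vehicle maps to `edge`, while coordinating traffic signals across a district within a few hundred milliseconds maps to `fog`.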

Challenges of Implementing Edge and Fog Computing

Implementing edge and fog computing presents challenges such as managing data security and privacy across distributed nodes, ensuring real-time data processing with minimal latency, and maintaining seamless interoperability among heterogeneous IoT devices and network protocols. Infrastructure scalability can be constrained by limited computational resources at edge locations, complicating load balancing and fault tolerance. Additionally, orchestrating complex analytics tasks between fog and cloud layers requires sophisticated resource allocation and network management strategies.
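
Fault tolerance across fog and cloud layers often comes down to failover: if the preferred node cannot take a task, try the next one. The sketch below tries nodes in priority order. `run_with_failover` and the `execute` callback (assumed to raise `ConnectionError` when a node is unreachable) are hypothetical; a real orchestrator would add timeouts, retries with backoff, and health checks.

```python
from typing import Callable, List, Sequence, Tuple


def run_with_failover(
    task: str,
    nodes: Sequence[str],
    execute: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each node in priority order; return (node, result) from the first success.

    `execute(node, task)` is a caller-supplied dispatch function that is
    assumed to raise ConnectionError when the node cannot be reached.
    """
    errors: List[str] = []
    for node in nodes:
        try:
            return node, execute(node, task)
        except ConnectionError as exc:
            errors.append(f"{node}: {exc}")  # remember why this node failed
    raise RuntimeError(f"all nodes failed for {task!r}: {errors}")
```

Passing `["fog-local", "fog-neighbor", "cloud"]`, for example, tries the nearest fog node first and uses the cloud only as a last resort.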

Security Considerations in Edge vs Fog Computing

Edge computing processes data locally on IoT devices, reducing latency but increasing vulnerability due to limited security resources at the edge. Fog computing extends security by distributing data processing across multiple nodes within the network, enabling enhanced threat detection and mitigation closer to data sources. Both architectures require robust encryption, authentication protocols, and real-time anomaly detection to safeguard sensitive IoT information effectively.
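
Authentication between devices and fog nodes is often done with a shared-key message authentication code. The sketch below uses Python's standard `hmac` and `hashlib` modules to sign a JSON payload with HMAC-SHA256 and to verify it with a constant-time comparison. It assumes a pre-shared key per device and covers integrity and authenticity only: it does not encrypt the payload (TLS or a similar protocol is needed for confidentiality) and does not stop replayed messages.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def sign(payload: Dict[str, Any], key: bytes) -> Dict[str, str]:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(message: Dict[str, str], key: bytes) -> Dict[str, Any]:
    """Recompute the tag and reject the message if it does not match."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("authentication failed")
    return json.loads(message["body"])


if __name__ == "__main__":
    device_key = b"example-pre-shared-key"  # assumption: provisioned out of band
    msg = sign({"device": "d1", "temp": 21.5}, device_key)
    print(verify(msg, device_key))
```

A fog node holding the same key can thus reject readings that were altered in transit or sent by a device without the key.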

The Future of Edge and Fog Computing in IoT

Edge computing and fog computing are expected to play pivotal roles in the future of the Internet of Things by enhancing real-time data processing and reducing latency across distributed networks. Edge computing processes data on or near IoT devices, while fog computing adds a layered architecture of intermediate nodes that improves scalability and security. The integration of AI and 5G technologies is likely to accelerate the deployment of both, enabling smarter, faster, and more efficient IoT applications across industries.

Edge Computing vs Fog Computing Infographic

techiny.com