Latency-sensitive IoT applications, such as autonomous vehicles and remote surgery, require real-time data processing to ensure immediate responsiveness and safety. Throughput-sensitive applications, like video streaming and large-scale sensor networks, prioritize high data transfer rates to handle massive volumes of information efficiently. Balancing these needs involves optimizing network resources and employing edge computing to reduce latency while maintaining sufficient bandwidth for data-intensive tasks.

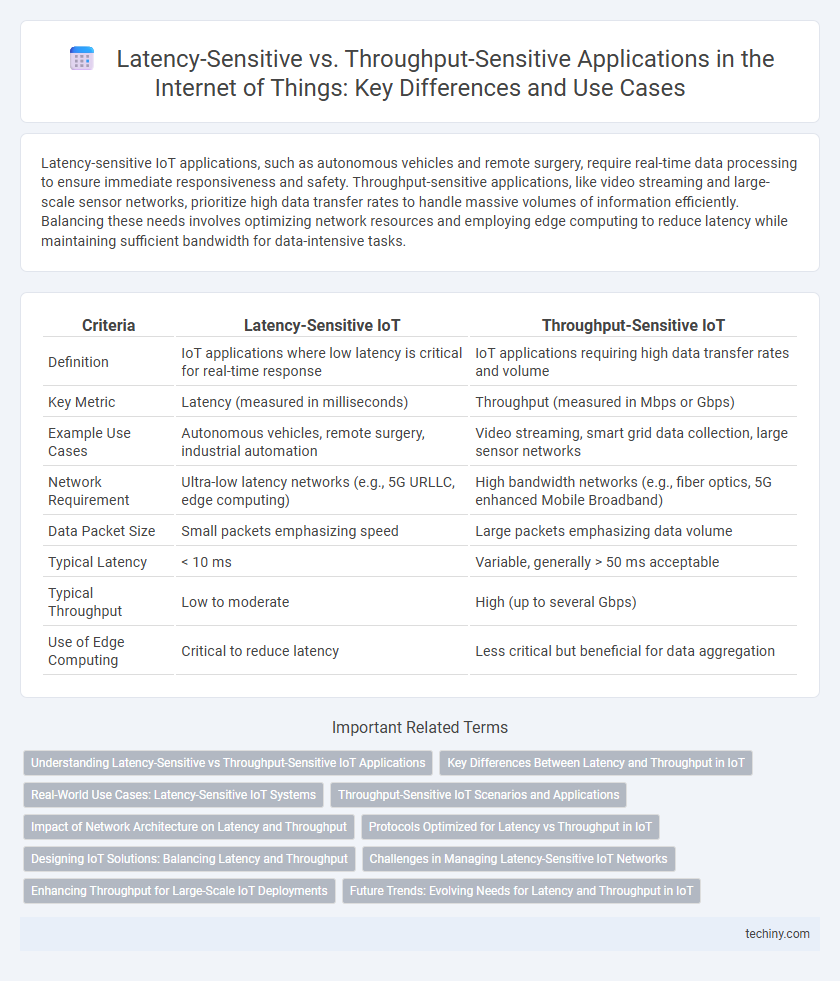

Table of Comparison

| Criteria | Latency-Sensitive IoT | Throughput-Sensitive IoT |
|---|---|---|
| Definition | IoT applications where low latency is critical for real-time response | IoT applications requiring high data transfer rates and volume |
| Key Metric | Latency (measured in milliseconds) | Throughput (measured in Mbps or Gbps) |
| Example Use Cases | Autonomous vehicles, remote surgery, industrial automation | Video streaming, smart grid data collection, large sensor networks |
| Network Requirement | Ultra-low latency networks (e.g., 5G URLLC, edge computing) | High bandwidth networks (e.g., fiber optics, 5G enhanced Mobile Broadband) |
| Data Packet Size | Small packets emphasizing speed | Large packets emphasizing data volume |
| Typical Latency | < 10 ms | Variable, generally > 50 ms acceptable |
| Typical Throughput | Low to moderate | High (up to several Gbps) |
| Use of Edge Computing | Critical to reduce latency | Less critical but beneficial for data aggregation |

Understanding Latency-Sensitive vs Throughput-Sensitive IoT Applications

A latency-sensitive IoT application, such as an autonomous vehicle or a remote surgery system, fails when a response arrives too late, so it needs real-time data processing. A throughput-sensitive application, such as video streaming or large-scale sensor data analytics, fails when the network cannot move enough data in aggregate, so it needs high data transfer rates. Understanding which of these requirements an application has is critical for optimizing network design and resource allocation in smart environments.

Key Differences Between Latency and Throughput in IoT

Latency-sensitive IoT applications prioritize minimal delay in data transmission to ensure real-time responsiveness, which is essential for autonomous vehicles and remote surgery. Throughput-sensitive IoT systems focus on maximizing data transfer rates to handle large volumes of information, as in video surveillance and smart city infrastructure. The two metrics also pull network design in different directions: low latency demands edge computing and fast processing, while high throughput requires ample bandwidth and efficient data aggregation.
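To make the difference concrete, delivery time can be approximated as a fixed latency plus the time needed to push the payload through the link. The sketch below uses that simplified model; the two links and both payload sizes are hypothetical values chosen for illustration, and the model ignores retransmissions, queuing, and protocol overhead.

```python
def transfer_time_ms(payload_bytes: int, latency_ms: float, throughput_mbps: float) -> float:
    """Estimate delivery time as fixed latency plus serialization time."""
    bits = payload_bytes * 8
    serialization_ms = bits / (throughput_mbps * 1_000_000) * 1000
    return latency_ms + serialization_ms


# Hypothetical links: one tuned for low delay, one for high bandwidth.
LINKS = {
    "low-latency link": {"latency_ms": 5.0, "throughput_mbps": 10.0},
    "high-bandwidth link": {"latency_ms": 60.0, "throughput_mbps": 1000.0},
}

# Hypothetical payloads: a small control message and a large video chunk.
PAYLOADS = {
    "control message (200 B)": 200,
    "video chunk (50 MB)": 50_000_000,
}

for payload_name, size in PAYLOADS.items():
    for link_name, link in LINKS.items():
        total = transfer_time_ms(size, **link)
        print(f"{payload_name} over {link_name}: {total:.2f} ms")
```

Under these assumptions the small control message arrives sooner on the low-latency link, because its delivery time is dominated by the fixed delay, while the large video chunk arrives sooner on the high-bandwidth link, because its delivery time is dominated by serialization.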

Real-World Use Cases: Latency-Sensitive IoT Systems

Latency-sensitive IoT systems, such as autonomous vehicles and industrial automation, require rapid data processing to ensure real-time responsiveness and safety. In smart healthcare applications, low latency lets systems that monitor vital signs trigger alerts without delay, enabling immediate intervention in critical situations. These use cases prioritize minimizing communication delays over maximizing data throughput to maintain system reliability and operational efficiency.

Throughput-Sensitive IoT Scenarios and Applications

Throughput-sensitive IoT scenarios prioritize high data transfer rates to support applications such as video streaming, smart grid data collection, and smart city infrastructure. These applications require enough network capacity to move large volumes of continuously generated data without bottlenecks. Optimizing network throughput keeps environments with extensive sensor arrays and continuous data flows running smoothly, even when individual messages can tolerate some delay.

Impact of Network Architecture on Latency and Throughput

Network architecture significantly influences latency and throughput in Internet of Things (IoT) deployments, with edge computing reducing latency for latency-sensitive applications by processing data closer to the source. Throughput-sensitive IoT systems benefit from centralized cloud architectures that provide scalable bandwidth and high data handling capacity. Hybrid architectures that integrate edge and cloud resources optimize performance by balancing real-time responsiveness with substantial data throughput.
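A rough calculation shows why proximity matters for latency. The sketch below estimates only the propagation component of round-trip time, assuming signals travel through optical fiber at roughly 200,000 km/s (about two thirds of the speed of light in vacuum). The edge and cloud distances are hypothetical, and real round trips add processing, queuing, and routing delays on top of this floor.

```python
# Approximate signal speed in optical fiber, expressed in km per millisecond.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring all other delays."""
    return 2 * distance_km / FIBER_KM_PER_MS


def fits_budget(distance_km: float, budget_ms: float) -> bool:
    """True if propagation alone leaves room inside the latency budget."""
    return propagation_rtt_ms(distance_km) < budget_ms


# Hypothetical placements: a nearby edge node and a distant cloud region.
for name, distance_km in [("edge node", 10.0), ("cloud region", 1500.0)]:
    rtt = propagation_rtt_ms(distance_km)
    print(f"{name} at {distance_km:.0f} km: {rtt:.1f} ms round trip, "
          f"fits a 10 ms budget: {fits_budget(distance_km, 10.0)}")
```

With these illustrative distances, the distant region exceeds a 10 ms budget on propagation alone, before any processing happens, which is the case for handling latency-sensitive work at the edge.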

Protocols Optimized for Latency vs Throughput in IoT

Lightweight protocols such as MQTT-SN and CoAP suit latency-sensitive IoT because they keep per-message overhead small, which supports the rapid response times needed in real-time applications like industrial automation and healthcare monitoring. Throughput-oriented protocols like HTTP/2 and AMQP focus on moving large data volumes efficiently, which suits applications such as video streaming and smart city analytics. Selecting the appropriate protocol depends on the requirements of the specific IoT use case and on how it balances latency against throughput.

Designing IoT Solutions: Balancing Latency and Throughput

Designing IoT solutions requires carefully balancing latency-sensitive applications like real-time monitoring with throughput-sensitive tasks such as large-scale data aggregation. Choosing network protocols and edge computing placement with both goals in mind minimizes latency while preserving sufficient bandwidth for data-heavy transmissions. Matching device capabilities and communication technologies to each workload improves overall system responsiveness and efficiency in diverse IoT environments.
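One way to serve both traffic types on a shared uplink is to send latency-critical messages ahead of bulk data. The sketch below illustrates that idea with a strict-priority queue. The class name `PriorityUplink`, the two priority levels, and the sample payloads are all hypothetical, and this is one possible scheduling policy rather than a recommended design.

```python
import heapq
import itertools
from dataclasses import dataclass, field

LATENCY_CRITICAL = 0  # lower number is sent first
BULK = 1


@dataclass(order=True)
class Message:
    priority: int
    seq: int  # arrival order, used to keep FIFO order within a priority
    payload: str = field(compare=False)


class PriorityUplink:
    """Hypothetical uplink queue that sends latency-critical messages first."""

    def __init__(self) -> None:
        self._heap: list[Message] = []
        self._counter = itertools.count()

    def enqueue(self, payload: str, priority: int) -> None:
        heapq.heappush(self._heap, Message(priority, next(self._counter), payload))

    def drain(self) -> list[str]:
        """Return payloads in the order they would be transmitted."""
        sent = []
        while self._heap:
            sent.append(heapq.heappop(self._heap).payload)
        return sent


uplink = PriorityUplink()
uplink.enqueue("sensor-batch-1", BULK)
uplink.enqueue("brake-alert", LATENCY_CRITICAL)
uplink.enqueue("sensor-batch-2", BULK)
uplink.enqueue("vital-sign-alarm", LATENCY_CRITICAL)
print(uplink.drain())
```

Strict priority keeps alerts from waiting behind bulk uploads, but it can starve bulk traffic if alerts arrive continuously, so a production scheduler would likely add a fairness or rate-limiting rule.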

Challenges in Managing Latency-Sensitive IoT Networks

Latency-sensitive IoT networks face challenges such as ensuring real-time data processing and minimizing transmission delays, which are critical for applications like autonomous vehicles and remote healthcare monitoring. Limited bandwidth, network congestion, and fluctuating wireless signal quality exacerbate latency issues, often leading to packet loss and degraded performance. Efficient resource allocation, edge computing integration, and adaptive routing protocols are essential strategies to address these challenges and maintain low-latency communication.
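Congestion and fluctuating signal quality tend to show up as occasional slow responses rather than a uniformly slower network, so an average can hide the problem. The sketch below checks a tail percentile against a latency budget using the nearest-rank method. The sample values and the 10 ms budget are hypothetical, and the helper names are illustrative.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def violates_budget(samples: list[float], budget_ms: float, pct: float = 99) -> bool:
    """True if the chosen tail percentile exceeds the latency budget."""
    return percentile(samples, pct) > budget_ms


# Hypothetical round-trip measurements in milliseconds, with one congestion spike.
rtt_ms = [4.0, 5.0, 5.0, 6.0, 5.0, 4.0, 30.0, 5.0, 6.0, 5.0]

mean_ms = sum(rtt_ms) / len(rtt_ms)
print(f"mean: {mean_ms:.1f} ms")
print(f"p50:  {percentile(rtt_ms, 50):.1f} ms")
print(f"p99:  {percentile(rtt_ms, 99):.1f} ms")
print(f"violates 10 ms budget at p99: {violates_budget(rtt_ms, 10.0)}")
```

In this sample the mean stays under the budget while the 99th percentile does not, which is why latency-sensitive deployments are usually monitored on tail latency rather than on averages.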

Enhancing Throughput for Large-Scale IoT Deployments

Optimizing throughput in large-scale IoT deployments requires network architectures such as mesh networking and edge computing that can handle massive data traffic efficiently. Lightweight, scalable protocols like MQTT and CoAP keep per-message overhead low, which helps limit congestion and packet loss as device counts grow. High-bandwidth technologies like 5G and Wi-Fi 6 further support real-time data exchange and device scalability in dense IoT environments.
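Aggregating readings before transmission is a common way to raise effective throughput, because fixed per-message overhead is paid once per batch instead of once per reading. The sketch below illustrates the effect. The 16-byte reading size and 40-byte per-message overhead are hypothetical figures, not measurements of any particular protocol.

```python
from typing import Iterator


def batch(readings: list, batch_size: int) -> Iterator[list]:
    """Split readings into consecutive batches of at most batch_size items."""
    for start in range(0, len(readings), batch_size):
        yield readings[start:start + batch_size]


def goodput_fraction(reading_bytes: int, batch_size: int, overhead_bytes: int) -> float:
    """Share of transmitted bytes that is sensor data rather than overhead."""
    payload = reading_bytes * batch_size
    return payload / (payload + overhead_bytes)


READING_BYTES = 16   # hypothetical size of one encoded sensor reading
OVERHEAD_BYTES = 40  # hypothetical fixed header cost per message

for size in (1, 10, 50):
    fraction = goodput_fraction(READING_BYTES, size, OVERHEAD_BYTES)
    print(f"batch size {size:>2}: {fraction:.0%} of bytes are sensor data")

readings = list(range(7))
print(list(batch(readings, 3)))
```

Larger batches use the link more efficiently, but each reading waits until its batch fills, so this technique suits throughput-sensitive traffic and is a poor fit for latency-critical alerts.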

Future Trends: Evolving Needs for Latency and Throughput in IoT

Future trends in IoT emphasize the growing demand for balancing latency-sensitive and throughput-sensitive applications as edge computing and 5G networks mature. Latency-sensitive use cases like autonomous vehicles and remote surgery require ultra-low latency to ensure real-time responsiveness, while throughput-sensitive applications such as video surveillance and big data analytics demand high data transfer rates for efficient processing. Emerging technologies including AI-driven network management and network slicing optimize resource allocation, addressing evolving needs for seamless connectivity and improved QoS in diverse IoT ecosystems.

techiny.com