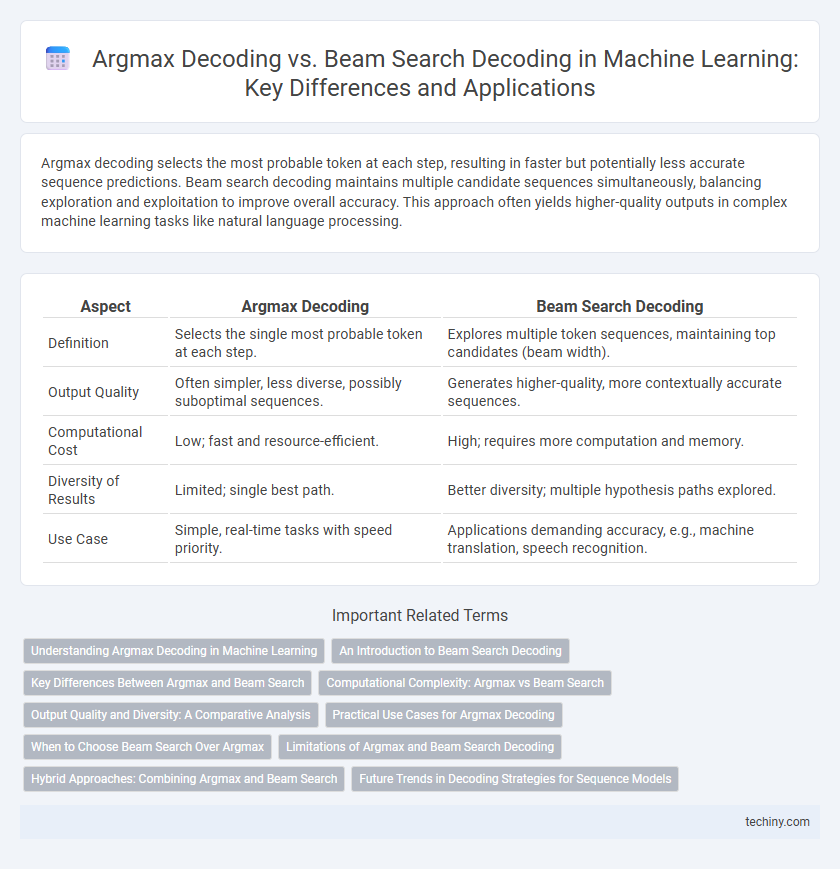

Argmax decoding selects the most probable token at each step, resulting in faster but potentially less accurate sequence predictions. Beam search decoding maintains multiple candidate sequences simultaneously, balancing exploration and exploitation to improve overall accuracy. This approach often yields higher-quality outputs in complex machine learning tasks like natural language processing.

Table of Comparison

| Aspect | Argmax Decoding | Beam Search Decoding |
|---|---|---|
| Definition | Selects the single most probable token at each step. | Explores multiple token sequences, keeping the top candidates (the beam width) at each step. |
| Output Quality | Simpler; a single, possibly suboptimal sequence. | Usually higher-probability, more contextually accurate sequences. |
| Computational Cost | Low; fast and resource-efficient. | Higher; roughly beam-width times the computation and memory of argmax decoding. |
| Diversity of Results | Limited; a single path. | Keeps several hypotheses and can return a ranked list of candidates, although they often differ only slightly. |
| Use Case | Simple, real-time tasks where speed is the priority. | Applications that demand accuracy, e.g., machine translation and speech recognition. |

Understanding Argmax Decoding in Machine Learning

Argmax decoding in machine learning selects the most probable output at each step. It conditions on the tokens generated so far but never looks ahead to see how a choice affects later tokens. This greedy approach can therefore produce sequences with lower overall probability than beam search, which keeps several hypotheses alive at once and can recover globally higher-probability outputs. Its main advantages are computational efficiency and simplicity, which make it suitable for real-time applications where speed matters more than optimality.
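A minimal Python sketch of argmax decoding follows. The model is stood in for by a hypothetical function `next_token_logprobs(prefix)` that returns a dictionary mapping each candidate next token to its log-probability, and `"</s>"` is assumed to be the end-of-sequence token; a real model would return a score vector over the whole vocabulary instead.

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "</s>"  # assumed end-of-sequence token

# Hypothetical model interface: given the tokens generated so far,
# return {next_token: log-probability} over the vocabulary.
NextTokenFn = Callable[[Tuple[str, ...]], Dict[str, float]]


def argmax_decode(next_token_logprobs: NextTokenFn, max_len: int = 20) -> Tuple[List[str], float]:
    """Greedy decoding: commit to the single most probable token at every step."""
    prefix: Tuple[str, ...] = ()
    total = 0.0  # cumulative log-probability of the chosen sequence
    for _ in range(max_len):
        dist = next_token_logprobs(prefix)
        token = max(dist, key=dist.get)  # argmax over the vocabulary, no look-ahead
        total += dist[token]
        prefix += (token,)
        if token == EOS:
            break
    return list(prefix), total


# Tiny hand-made distribution standing in for a real model (hypothetical numbers).
TOY = {
    (): {"the": math.log(0.6), "a": math.log(0.4)},
    ("the",): {"cat": math.log(0.7), EOS: math.log(0.3)},
    ("the", "cat"): {EOS: 0.0},
    ("a",): {EOS: 0.0},
}
tokens, score = argmax_decode(lambda p: TOY[p])
print(tokens, round(math.exp(score), 2))  # ['the', 'cat', '</s>'] 0.42
```

Each step costs one model call and one maximum over the vocabulary, and no alternative is ever kept, which is exactly why the method is fast and why it cannot recover from an early poor choice.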

An Introduction to Beam Search Decoding

Beam search decoding improves upon argmax decoding by exploring multiple hypothesis paths instead of selecting only the highest-probability token at each step, which increases the chance of finding a high-probability sequence, although it does not guarantee the globally optimal one. It maintains a fixed number of top candidate sequences (the beam width): at each step it extends every candidate with every possible next token, scores each extension by its cumulative log-probability, and keeps only the best-scoring ones, balancing exploration against computational cost. This approach is widely used in natural language processing tasks such as machine translation and speech recognition to generate more accurate and coherent outputs.
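The procedure can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a production implementation: the model is replaced by a hypothetical function `next_token_logprobs(prefix)` returning a dictionary of next-token log-probabilities, `"</s>"` is assumed to mark the end of a sequence, and a hypothesis that emits it is moved out of the beam (so the beam can shrink; some libraries instead keep the beam full).

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "</s>"  # assumed end-of-sequence token

# Hypothetical model interface: given a prefix, return {next_token: log-probability}.
NextTokenFn = Callable[[Tuple[str, ...]], Dict[str, float]]


def beam_search(
    next_token_logprobs: NextTokenFn, beam_width: int = 3, max_len: int = 20
) -> List[Tuple[List[str], float]]:
    """Return hypotheses sorted by cumulative log-probability, best first."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    finished: List[Tuple[Tuple[str, ...], float]] = []
    for _ in range(max_len):
        # Expand every live hypothesis with every possible next token.
        candidates = [
            (prefix + (token,), score + lp)
            for prefix, score in beams
            for token, lp in next_token_logprobs(prefix).items()
        ]
        # Prune: keep only the beam_width highest-scoring extensions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == EOS:
                finished.append((seq, score))  # completed: leaves the beam
            else:
                beams.append((seq, score))
        if not beams:  # every surviving hypothesis has ended
            break
    finished.extend(beams)  # keep unfinished hypotheses if max_len was reached
    finished.sort(key=lambda c: c[1], reverse=True)
    return [(list(seq), score) for seq, score in finished]


# Tiny hand-made distribution standing in for a real model (hypothetical numbers).
TOY = {
    (): {"the": math.log(0.6), "a": math.log(0.4)},
    ("the",): {"cat": math.log(0.7), EOS: math.log(0.3)},
    ("the", "cat"): {EOS: 0.0},
    ("a",): {EOS: 0.0},
}
results = beam_search(lambda p: TOY[p], beam_width=2)
for toks, sc in results:  # ranked candidate list, best first
    print(toks, round(math.exp(sc), 2))
```

Setting `beam_width=1` reduces this sketch to argmax decoding, so greedy search can be viewed as the narrowest possible beam.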

Key Differences Between Argmax and Beam Search

Argmax decoding selects the most probable token at each step, resulting in a greedy and fast prediction but potentially missing globally optimal sequences. Beam search decoding maintains multiple hypotheses by exploring several top candidate sequences, improving accuracy through broader search at the cost of increased computational complexity. The key difference lies in argmax's single-path focus versus beam search's multi-path exploration for sequence generation.
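The contrast can be stated precisely. Using notation introduced here for illustration ($x$ for the input, $V$ for the vocabulary, $y_{<t}$ for the tokens before position $t$), argmax decoding makes a locally optimal choice at each step, while beam search approximates a search for the sequence with the highest total log-probability:

$$
\hat{y}_t = \arg\max_{y_t \in V} P(y_t \mid \hat{y}_{<t}, x), \qquad t = 1, \dots, T
$$

$$
\hat{y} \approx \arg\max_{y_1, \dots, y_T} \sum_{t=1}^{T} \log P(y_t \mid y_{<t}, x)
$$

Because the logarithm is monotonic, maximizing the sum of log-probabilities is equivalent to maximizing the product of the per-step probabilities, so a sequence can win the global objective even if its first token was not the locally best one.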

Computational Complexity: Argmax vs Beam Search

Ignoring the cost of the model's forward pass, argmax decoding has computational complexity O(T|V|), where T is the sequence length and |V| is the vocabulary size: at each step it scans one distribution for its highest-probability token. Beam search decoding has complexity O(T|V|B), where B is the beam width, because it scores every vocabulary extension of each of the B hypotheses it keeps (plus the cost of selecting the best B out of B|V| candidates). It also needs B model evaluations per step instead of one, so it typically costs about B times as much compute and memory as argmax decoding; this extra cost is what buys its often more accurate sequence predictions.
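As a rough worked example, take illustrative values (not from any particular model) of $T = 50$ steps, a vocabulary of $|V| = 32{,}000$ tokens and a beam width of $B = 5$. The number of token scores examined is:

$$
\text{argmax: } T\,|V| = 50 \times 32{,}000 = 1.6 \times 10^{6}
$$

$$
\text{beam search: } T\,|V|\,B = 50 \times 32{,}000 \times 5 = 8 \times 10^{6}
$$

In the same setting, argmax decoding makes 50 model forward passes while beam search makes up to 250 (usually run as 50 batched passes of width 5); for neural models these forward passes, rather than the scans above, dominate the running time.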

Output Quality and Diversity: A Comparative Analysis

Argmax decoding selects the highest-probability output at each step, producing a single sequence that is locally plausible but can be suboptimal overall, which can limit output quality in complex tasks. Beam search decoding maintains multiple hypotheses simultaneously and searches for the sequence with the highest overall probability, exploring a broader search space and often improving output quality. Both methods are deterministic, however, and beam search does not necessarily produce more varied final outputs: the surviving beams frequently share long prefixes. In practice, beam search tends to produce more accurate results than argmax decoding in sequence prediction tasks with a well-defined target, such as machine translation and speech recognition.

Practical Use Cases for Argmax Decoding

Argmax decoding is widely used in real-time applications where fast inference is critical, such as voice assistants and online translation services, because it is simple and computationally cheap. Since it selects the most probable token at each step, it needs only one model evaluation per step, which suits scenarios that require immediate responses. The same efficiency makes it a common choice on edge devices with limited processing power, where it enables efficient deployment of machine learning models.

When to Choose Beam Search Over Argmax

Beam search decoding typically outperforms argmax decoding on complex sequence prediction tasks such as machine translation or speech recognition, where multiple plausible outcomes exist. By maintaining a fixed number of top candidate sequences, it explores a broader search space at a bounded extra cost and improves prediction accuracy. This method is especially advantageous when the model's conditional probability distribution is flat or multimodal, as argmax decoding may miss high-probability sequences hidden behind locally optimal choices.
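A small hand-made example (hypothetical probabilities, not from a real model) shows this failure mode. After the locally best first token "A" the distribution is flat, while after "B" it is sharp, so greedy decoding commits to "A" and ends with a lower-probability sequence than beam search with a beam width of 2. For readability the sketch multiplies raw probabilities; real decoders add log-probabilities to avoid numerical underflow.

```python
EOS = "</s>"  # assumed end-of-sequence token

# Hypothetical toy model: P(next token | prefix). After "A" the distribution is
# flat; after "B" it is sharp. Any two-token prefix is followed by EOS.
PROBS = {
    (): {"A": 0.55, "B": 0.45},
    ("A",): {"X": 0.40, "Y": 0.35, "Z": 0.25},
    ("B",): {"X": 0.90, "Y": 0.10},
}


def next_probs(prefix):
    return PROBS.get(prefix, {EOS: 1.0})


def greedy(max_len=5):
    """Argmax decoding: take the locally most probable token at each step."""
    seq, p = (), 1.0
    while len(seq) < max_len and (not seq or seq[-1] != EOS):
        dist = next_probs(seq)
        tok = max(dist, key=dist.get)
        seq, p = seq + (tok,), p * dist[tok]
    return seq, p


def beam(width, max_len=5):
    """Beam search keeping `width` hypotheses; returns the best (sequence, prob)."""
    beams, done = [((), 1.0)], []
    for _ in range(max_len):
        cands = [(s + (t,), p * q) for s, p in beams for t, q in next_probs(s).items()]
        cands.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for s, p in cands[:width]:
            if s[-1] == EOS:
                done.append((s, p))  # finished hypothesis
            else:
                beams.append((s, p))
        if not beams:
            break
    return max(done + beams, key=lambda c: c[1])


g_seq, g_p = greedy()
b_seq, b_p = beam(2)
print(g_seq, round(g_p, 3))  # ('A', 'X', '</s>') 0.22
print(b_seq, round(b_p, 3))  # ('B', 'X', '</s>') 0.405
```

Greedy's first choice (0.55 versus 0.45) looks better locally, but the flat distribution that follows caps its best sequence at 0.55 × 0.40 = 0.22, whereas keeping "B" alive lets beam search reach 0.45 × 0.90 = 0.405.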

Limitations of Argmax and Beam Search Decoding

Argmax decoding selects the highest-probability token at each step, which can lead to suboptimal sequences because it ignores future context and never revisits alternative paths. Beam search decoding mitigates this by exploring multiple hypotheses simultaneously, but its cost grows linearly with the beam width, and because it prunes all but a fixed number of candidates at every step it may still miss the globally optimal sequence. Exact search avoids this only by considering up to |V|^T sequences, a number that grows exponentially with the sequence length. Both methods struggle to balance diversity and accuracy, often producing repetitive or generic outputs in complex sequence generation tasks.
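Beam search's pruning can be illustrated with another toy model (hypothetical probabilities). Here the best complete sequence starts with the token "C", which ranks only third at the first step, so a beam of width 2 discards it; an exhaustive search, which scores every complete sequence and is feasible only for tiny examples like this one, recovers it. As before, raw probabilities are multiplied for readability.

```python
EOS = "</s>"  # assumed end-of-sequence token

# Hypothetical toy model: P(next token | prefix); any two-token prefix then ends.
PROBS = {
    (): {"A": 0.40, "B": 0.35, "C": 0.25},
    ("A",): {"X": 0.50, "Y": 0.50},
    ("B",): {"X": 0.60, "Y": 0.40},
    ("C",): {"X": 0.95, "Y": 0.05},
}


def next_probs(prefix):
    return PROBS.get(prefix, {EOS: 1.0})


def beam(width, max_len=5):
    """Beam search keeping `width` hypotheses; returns the best (sequence, prob)."""
    beams, done = [((), 1.0)], []
    for _ in range(max_len):
        cands = [(s + (t,), p * q) for s, p in beams for t, q in next_probs(s).items()]
        cands.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for s, p in cands[:width]:  # heuristic pruning happens here
            if s[-1] == EOS:
                done.append((s, p))
            else:
                beams.append((s, p))
        if not beams:
            break
    return max(done + beams, key=lambda c: c[1])


def exhaustive(prefix=(), p=1.0):
    """Exact search: recursively scores every complete sequence (up to |V|**T)."""
    if prefix and prefix[-1] == EOS:
        return prefix, p
    return max(
        (exhaustive(prefix + (t,), p * q) for t, q in next_probs(prefix).items()),
        key=lambda c: c[1],
    )


print(beam(2))       # prunes "C" after step 1 and settles for ('B', 'X', '</s>')
print(beam(3))       # a wider beam keeps "C" and finds ('C', 'X', '</s>')
print(exhaustive())  # the true optimum, ('C', 'X', '</s>'), probability about 0.2375
```

Widening the beam fixes this particular case, but for any fixed width a distribution can be constructed whose best sequence starts outside the beam.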

Hybrid Approaches: Combining Argmax and Beam Search

Hybrid approaches in machine learning combine argmax decoding's efficiency with beam search decoding's ability to explore multiple sequence hypotheses, aiming to improve overall prediction accuracy. For example, argmax decoding can be used to narrow down high-probability candidates, after which beam search refines and evaluates those candidates in a broader context; this gives a balanced trade-off between computational cost and decoding performance. Such methods leverage the strengths of both strategies to improve sequence generation tasks in natural language processing and speech recognition.

Future Trends in Decoding Strategies for Sequence Models

Future trends in decoding strategies for sequence models emphasize hybrid approaches combining argmax decoding's efficiency with beam search decoding's accuracy to optimize performance in real-time applications. Research is increasingly directed towards adaptive beam width techniques and transformer-based models integrating dynamic decoding paths to balance computational cost and output quality. Emerging methods leverage reinforcement learning and uncertainty estimation to enhance model robustness and improve sequential prediction fidelity.

argmax decoding vs beam search decoding Infographic

techiny.com
