The curse of dimensionality challenges machine learning models by increasing feature space exponentially, leading to sparse data and reduced model performance. Overparameterization occurs when models contain more parameters than necessary, which can surprisingly enhance generalization by enabling better optimization landscapes and implicit regularization. Balancing these phenomena is crucial for developing efficient models that avoid overfitting while maintaining high predictive accuracy.

Table of Comparison

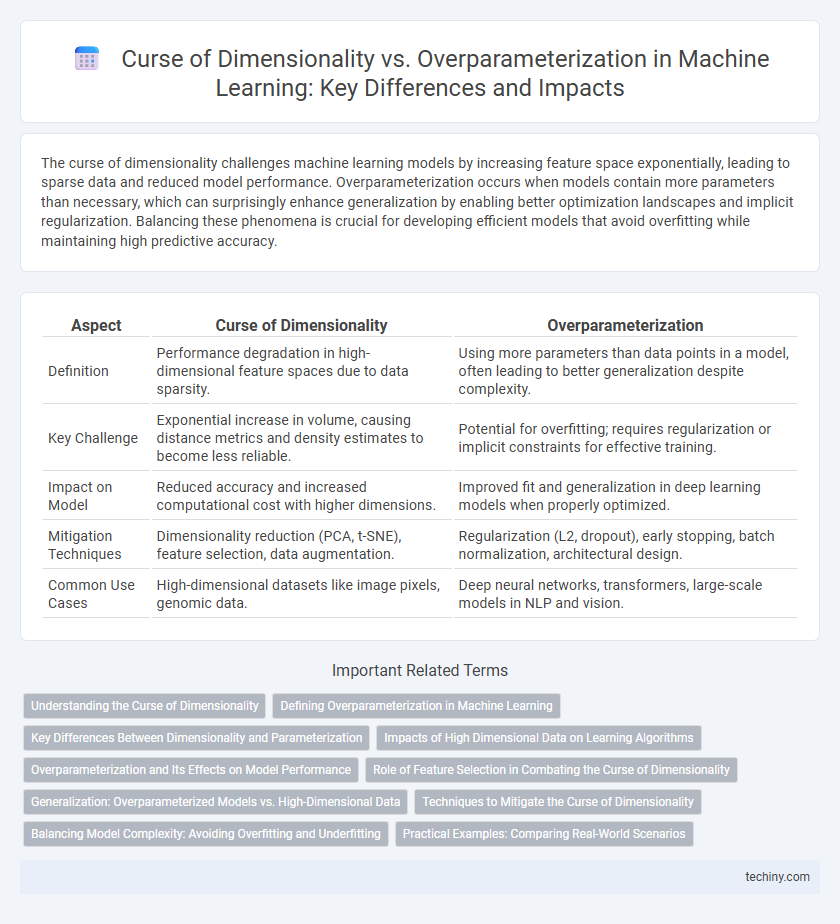

| Aspect | Curse of Dimensionality | Overparameterization |

|---|---|---|

| Definition | Performance degradation in high-dimensional feature spaces due to data sparsity. | Using more parameters than data points in a model, often leading to better generalization despite complexity. |

| Key Challenge | Exponential increase in volume, causing distance metrics and density estimates to become less reliable. | Potential for overfitting; requires regularization or implicit constraints for effective training. |

| Impact on Model | Reduced accuracy and increased computational cost with higher dimensions. | Improved fit and generalization in deep learning models when properly optimized. |

| Mitigation Techniques | Dimensionality reduction (PCA, t-SNE), feature selection, data augmentation. | Regularization (L2, dropout), early stopping, batch normalization, architectural design. |

| Common Use Cases | High-dimensional datasets like image pixels, genomic data. | Deep neural networks, transformers, large-scale models in NLP and vision. |

Understanding the Curse of Dimensionality

The Curse of Dimensionality refers to the exponential increase in data sparsity as the number of features grows, which challenges the effectiveness of machine learning algorithms by degrading model performance and increasing computational complexity. High-dimensional spaces cause distances between data points to become less meaningful, impairing clustering, classification, and regression tasks. Understanding this phenomenon is crucial for feature selection, dimensionality reduction techniques like PCA, and designing models that generalize well in high-dimensional settings.

Defining Overparameterization in Machine Learning

Overparameterization in machine learning refers to models with more parameters than training data points, enabling them to fit complex patterns but also risking overfitting. This phenomenon is especially prevalent in deep neural networks, where layers contain millions of parameters that exceed dataset sizes. Despite the increased risk, overparameterized models often achieve lower training error and better generalization due to implicit regularization and optimization dynamics.

Key Differences Between Dimensionality and Parameterization

Curse of dimensionality refers to challenges that arise when high-dimensional data spaces cause exponential growth in computational complexity and data sparsity, impacting distance metrics and model generalization. Overparameterization occurs when a model has more parameters than necessary, often leading to better training performance but potential overfitting unless mitigated by regularization or implicit biases. The key difference lies in dimensionality pertaining to input data features, while parameterization relates to the number of tunable model parameters influencing capacity and learning dynamics.

Impacts of High Dimensional Data on Learning Algorithms

High dimensional data exacerbates the Curse of Dimensionality by increasing the volume of the feature space, which reduces the density of training samples and impairs the performance of traditional learning algorithms. Overparameterization often counteracts this by enabling models, especially deep neural networks, to capture complex patterns despite the sparse data distribution. However, excessive dimensions still cause challenges like overfitting, increased computational cost, and difficulty in estimating model parameters accurately.

Overparameterization and Its Effects on Model Performance

Overparameterization in machine learning occurs when models have more parameters than necessary, often resulting in excellent training performance but challenging generalization to unseen data. Despite the increased risk of overfitting, deep neural networks with overparameterized structures can achieve superior accuracy due to implicit regularization and optimization dynamics. Understanding the balance between model complexity and data helps mitigate negative effects while leveraging overparameterization for enhanced learning capacity.

Role of Feature Selection in Combating the Curse of Dimensionality

Feature selection plays a critical role in combating the curse of dimensionality by reducing the number of input variables, thereby improving model interpretability and computational efficiency. By selecting the most relevant features, it mitigates overfitting risks commonly associated with high-dimensional spaces and enhances generalization performance in machine learning models. Effective feature selection methods such as LASSO, PCA, and mutual information help to identify and retain only the most informative features, balancing model complexity and predictive accuracy.

Generalization: Overparameterized Models vs. High-Dimensional Data

Overparameterized models can achieve strong generalization despite having more parameters than training samples, leveraging implicit regularization techniques such as early stopping and gradient descent dynamics. In contrast, the curse of dimensionality in high-dimensional data often hampers generalization due to sparse data distribution and increased risk of overfitting. Effective generalization in overparameterized models arises from their ability to navigate complex parameter spaces without succumbing to the challenges posed by high-dimensional feature spaces.

Techniques to Mitigate the Curse of Dimensionality

Dimensionality reduction techniques such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) effectively address the curse of dimensionality by transforming high-dimensional data into lower-dimensional spaces while preserving essential features. Feature selection methods, including Recursive Feature Elimination (RFE) and LASSO regularization, enhance model performance by identifying and retaining the most informative variables, reducing noise and computational complexity. Sparse coding and manifold learning also provide robust frameworks for capturing intrinsic data structures, mitigating overfitting risks associated with overparameterization in machine learning models.

Balancing Model Complexity: Avoiding Overfitting and Underfitting

Balancing model complexity in machine learning requires mitigating the curse of dimensionality by reducing feature space to prevent sparse data distributions that impair generalization. Overparameterization introduces excess model capacity that can lead to overfitting without proper regularization techniques such as dropout, weight decay, or early stopping. Optimal model performance relies on selecting architecture complexity aligned with dataset size and feature relevance to maintain the trade-off between bias and variance.

Practical Examples: Comparing Real-World Scenarios

High-dimensional datasets in fields like genomics or image recognition often suffer from the curse of dimensionality, leading to sparse data distribution and increased computational complexity. In contrast, overparameterization in deep learning models, such as neural networks with more parameters than training samples, enables better generalization and feature extraction despite the risk of overfitting. Practical examples include genomic data analysis where dimensionality reduction is crucial, versus large-scale natural language processing models like GPT that leverage overparameterization for improved performance.

Curse of Dimensionality vs Overparameterization Infographic

techiny.com

techiny.com