Embedding layers transform categorical data into dense vectors that capture semantic relationships, improving model performance and reducing dimensionality compared to one-hot encoding. One-hot encoding creates sparse, high-dimensional vectors with no inherent relationship between categories, often leading to inefficient learning and increased computational cost. Embedding layers are particularly effective in natural language processing tasks where understanding context and similarity is crucial.

Table of Comparison

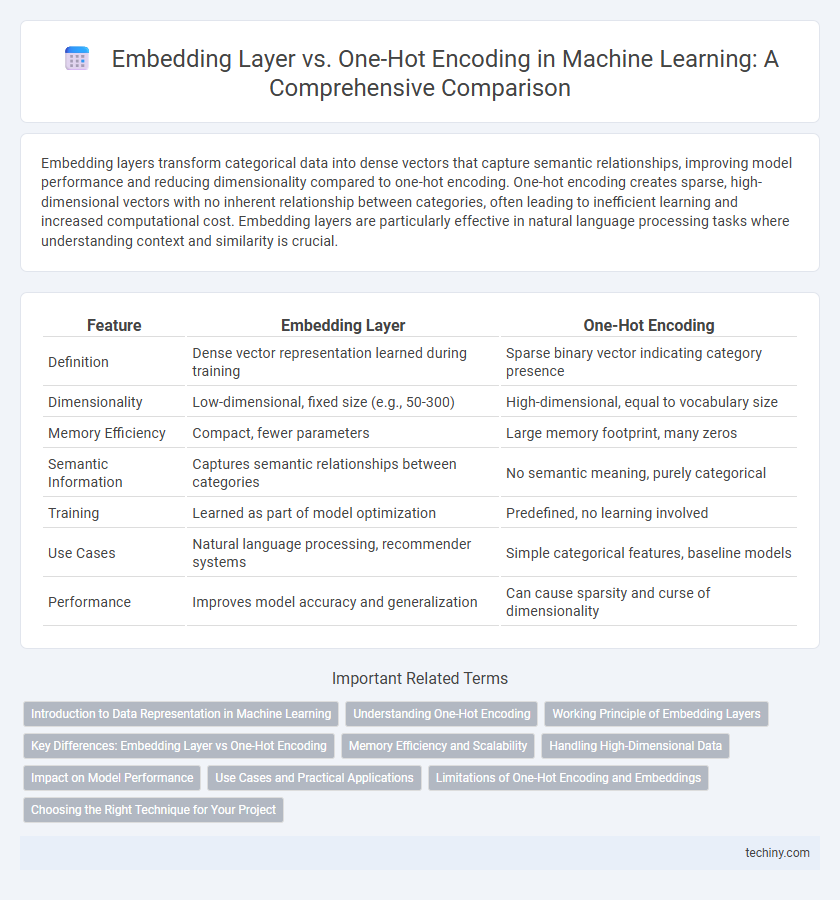

| Feature | Embedding Layer | One-Hot Encoding |

|---|---|---|

| Definition | Dense vector representation learned during training | Sparse binary vector indicating category presence |

| Dimensionality | Low-dimensional, fixed size (e.g., 50-300) | High-dimensional, equal to vocabulary size |

| Memory Efficiency | Compact, fewer parameters | Large memory footprint, many zeros |

| Semantic Information | Captures semantic relationships between categories | No semantic meaning, purely categorical |

| Training | Learned as part of model optimization | Predefined, no learning involved |

| Use Cases | Natural language processing, recommender systems | Simple categorical features, baseline models |

| Performance | Improves model accuracy and generalization | Can cause sparsity and curse of dimensionality |

Introduction to Data Representation in Machine Learning

Embedding layers transform categorical data into dense vector representations that capture semantic relationships between categories, enabling models to learn more effectively from data. One-hot encoding converts categories into sparse binary vectors where each dimension corresponds to a unique category, often leading to high-dimensional inputs and limited expressiveness. Embedding layers offer dimensionality reduction and improved generalization compared to one-hot encoding, making them essential for natural language processing and other complex data tasks.

Understanding One-Hot Encoding

One-hot encoding represents categorical variables as binary vectors with a single high (1) bit and all others low (0), allowing machine learning models to process non-numeric data effectively. Each category is assigned a unique vector dimension, resulting in sparse, high-dimensional data that can increase computational complexity and memory usage. While simple and interpretable, one-hot encoding lacks the ability to capture semantic relationships between categories, leading to limitations in tasks requiring deeper understanding.

Working Principle of Embedding Layers

Embedding layers transform categorical variables into dense vectors by mapping each unique category to a continuous vector space, capturing semantic relationships between categories. Unlike one-hot encoding, which produces sparse, high-dimensional vectors with no inherent relationships, embedding layers learn to represent categories as lower-dimensional, dense embeddings during model training. This process enables models to better generalize and extract meaningful patterns from categorical data.

Key Differences: Embedding Layer vs One-Hot Encoding

Embedding layers transform high-dimensional sparse data into dense, lower-dimensional vectors that capture semantic relationships, enabling improved generalization in machine learning models. One-hot encoding represents categorical variables as sparse, high-dimensional vectors with binary values, lacking semantic meaning and leading to increased computational costs. Unlike one-hot encoding, embedding layers are learnable parameters optimized during training, enhancing model performance by capturing latent features and similarities among categories.

Memory Efficiency and Scalability

Embedding layers significantly reduce memory usage compared to one-hot encoding by representing categorical variables as dense vectors rather than sparse high-dimensional vectors. This compact representation allows embedding layers to scale effectively for large vocabularies and datasets without exponential growth in memory requirements. Consequently, embedding layers enable more efficient training and inference in large-scale machine learning models.

Handling High-Dimensional Data

Embedding layers efficiently handle high-dimensional data by transforming sparse, large-dimensional one-hot vectors into dense, lower-dimensional continuous vectors that capture semantic relationships. One-hot encoding represents each category as a sparse vector with a dimension equal to the vocabulary size, leading to memory inefficiency and computational challenges in large datasets. Embeddings reduce dimensionality, improve model performance, and enable meaningful similarity computations essential for natural language processing and recommendation systems.

Impact on Model Performance

Embedding layers transform high-dimensional sparse data into dense vectors, significantly improving model performance by capturing semantic relationships between features. One-hot encoding results in high-dimensional input spaces that increase computational complexity and risk overfitting due to lack of contextual information. Utilizing embedding layers leads to better generalization and faster convergence in machine learning models, especially in natural language processing and recommendation systems.

Use Cases and Practical Applications

Embedding layers effectively capture semantic relationships between categorical variables, making them ideal for natural language processing tasks like word representation in neural networks. One-hot encoding suits scenarios with small, fixed vocabularies such as categorical feature encoding in traditional machine learning models. Embedding layers enhance performance in complex models by reducing dimensionality and enabling generalization, while one-hot encoding remains useful for straightforward, interpretable feature inputs.

Limitations of One-Hot Encoding and Embeddings

One-hot encoding suffers from high dimensionality and sparsity, leading to increased memory usage and computational inefficiency in machine learning models. Embedding layers address these limitations by mapping categorical variables into dense, low-dimensional vectors that capture semantic relationships and enable more effective generalization. However, embeddings require sufficient training data and careful tuning to avoid overfitting and to ensure meaningful representation learning.

Choosing the Right Technique for Your Project

Embedding layers capture semantic relationships between words by mapping discrete tokens to dense vectors, making them ideal for natural language processing tasks requiring contextual understanding. One-hot encoding represents words as sparse binary vectors without capturing similarity or context, which can be computationally expensive and less informative for large vocabularies. Selecting embeddings enhances model performance and efficiency in complex projects, while one-hot encoding suits simpler tasks with limited vocabulary sizes or when interpretability is critical.

embedding layer vs one-hot encoding Infographic

techiny.com

techiny.com