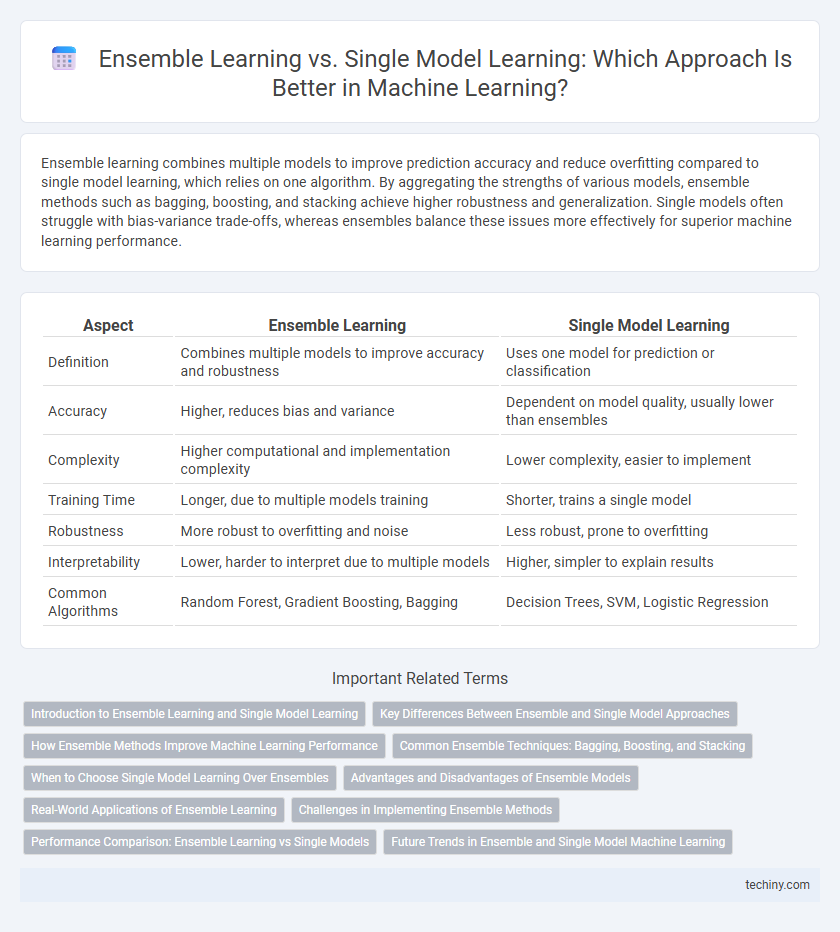

Ensemble learning combines multiple models to improve prediction accuracy and reduce overfitting, whereas single-model learning relies on one algorithm. By aggregating the strengths of several models, ensemble methods such as bagging, boosting, and stacking tend to be more robust and to generalize better. A single model has to settle on one point in the bias-variance trade-off, while an ensemble can lower variance (by averaging many models) or bias (by correcting errors sequentially) with less cost on the other side.

Table of Comparison

| Aspect | Ensemble Learning | Single Model Learning |
|---|---|---|
| Definition | Combines multiple models to improve accuracy and robustness | Uses one model for prediction or classification |
| Accuracy | Higher, reduces bias and variance | Dependent on model quality, usually lower than ensembles |
| Complexity | Higher computational and implementation complexity | Lower complexity, easier to implement |
| Training Time | Longer, due to multiple models training | Shorter, trains a single model |
| Robustness | More robust to overfitting and noise | Less robust, prone to overfitting |
| Interpretability | Lower, harder to interpret due to multiple models | Higher, simpler to explain results |
| Common Algorithms | Random Forest, Gradient Boosting, Bagging | Decision Trees, SVM, Logistic Regression |

Introduction to Ensemble Learning and Single Model Learning

Ensemble learning combines multiple machine learning models to improve predictive performance by reducing bias, variance, or both, leveraging techniques such as bagging, boosting, and stacking. Single model learning relies on one algorithm to build a predictive model, which may risk overfitting or underfitting compared to ensembles. Ensemble methods often achieve higher accuracy and robustness by aggregating diverse model outputs, making them preferable in complex tasks like fraud detection and image classification.

Key Differences Between Ensemble and Single Model Approaches

Ensemble learning combines multiple models to improve predictive performance and reduce overfitting, while single model learning relies on one model, which may be simpler but prone to higher variance or bias. Ensemble methods like bagging, boosting, and stacking leverage diverse hypotheses to achieve better generalization compared to single models such as decision trees or support vector machines. The main differences lie in robustness, accuracy, and complexity, with ensembles typically providing superior results at the cost of increased computational resources.

How Ensemble Methods Improve Machine Learning Performance

Ensemble learning improves machine learning performance by combining multiple models to reduce variance and bias and to improve generalization compared to single-model learning. Techniques like bagging, boosting, and stacking leverage diverse model predictions to enhance accuracy, robustness, and resilience to overfitting. Empirical results demonstrate that ensembles often outperform individual classifiers on complex datasets such as ImageNet and CIFAR-10.
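A small calculation can make the benefit of combining models concrete. The sketch below computes the accuracy of a majority vote over several binary classifiers under a strong simplifying assumption that is not part of the article: every classifier is correct with the same probability `p`, and their errors are statistically independent. The function name `majority_vote_accuracy` is hypothetical and chosen for illustration only.

```python
from math import comb


def majority_vote_accuracy(n_models: int, p: float) -> float:
    """Probability that a strict majority of n_models voters is correct.

    Assumption (idealized): each model is right with probability p,
    independently of the others. Real models are correlated, so this
    is an upper bound on what voting can deliver, not a prediction.
    """
    if n_models % 2 == 0:
        raise ValueError("use an odd number of models to avoid tied votes")
    k_min = n_models // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(k_min, n_models + 1)
    )


# Compare a single model with ensembles of growing size at p = 0.6.
for n in (1, 5, 25):
    print(n, round(majority_vote_accuracy(n, 0.6), 3))
```

Under these assumptions the vote improves with ensemble size only when each model is better than chance (`p > 0.5`); with `p < 0.5` the same formula shows that voting makes things worse, which is one reason base model quality still matters.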

Common Ensemble Techniques: Bagging, Boosting, and Stacking

Ensemble learning combines multiple models to improve predictive performance over single-model learning by reducing variance and bias and improving robustness. Common techniques include bagging, which trains each model on a bootstrap sample of the data (drawn at random with replacement) and averages or votes over the predictions to reduce variance; boosting, which trains models sequentially so that each one concentrates on the errors of its predecessors, for example by reweighting misclassified instances, to reduce bias; and stacking, which trains a meta-learner to blend the predictions of diverse base models. These methods often achieve better accuracy and generalization than individual models in classification and regression tasks.
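To illustrate the first of these techniques, here is a minimal bagging sketch in plain Python. It is not a production implementation: the base learner is a one-dimensional threshold rule (a "decision stump"), the toy dataset with 10% label noise is invented for the example, and all function names (`fit_stump`, `fit_bagging`, `predict_bagging`) are hypothetical.

```python
import random
from collections import Counter


def fit_stump(points):
    """Return the (threshold, label_above) rule with the fewest training errors.

    points is a list of (x, label) pairs with label in {0, 1}.
    """
    best = None
    for threshold, _ in points:
        for above in (0, 1):  # label predicted when x > threshold
            errors = sum(
                (above if x > threshold else 1 - above) != y for x, y in points
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, above)
    return best[1], best[2]


def predict_stump(stump, x):
    threshold, above = stump
    return above if x > threshold else 1 - above


def fit_bagging(points, n_models, rng):
    """Train each stump on its own bootstrap sample (drawn with replacement)."""
    return [
        fit_stump([rng.choice(points) for _ in points])
        for _ in range(n_models)
    ]


def predict_bagging(stumps, x):
    """Majority vote; an odd number of stumps avoids ties on binary labels."""
    votes = Counter(predict_stump(s, x) for s in stumps)
    return votes.most_common(1)[0][0]


# Invented toy data: the true label is 1 when x > 0.5, with 10% of labels flipped.
rng = random.Random(0)
data = []
for _ in range(200):
    x = rng.uniform(0, 1)
    y = 1 if x > 0.5 else 0
    if rng.random() < 0.1:
        y = 1 - y
    data.append((x, y))

ensemble = fit_bagging(data, n_models=15, rng=rng)
print(predict_bagging(ensemble, 0.9), predict_bagging(ensemble, 0.1))
```

Each stump sees a slightly different resampling of the data, so their thresholds differ a little; voting smooths out those differences, which is the variance reduction bagging is meant to provide. Boosting and stacking would replace the independent resampling step with sequential reweighting or a trained meta-learner, respectively.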

When to Choose Single Model Learning Over Ensembles

Single-model learning is preferable when computational resources are limited or real-time inference is critical, as it requires less processing power and memory than ensemble methods. In scenarios where model interpretability is paramount, single models such as decision trees or linear regression provide clearer insights into feature importance and decision boundaries. Furthermore, if the dataset is small or the problem is simple, a well-chosen single model often matches an ensemble's accuracy without the added complexity, and high-capacity ensembles such as heavily boosted models can themselves overfit when data is scarce.

Advantages and Disadvantages of Ensemble Models

Ensemble learning combines multiple machine learning models to improve predictive performance, reduce overfitting, and increase robustness compared to single-model learning. Ensemble models such as Random Forests or Gradient Boosting Machines tend to achieve higher accuracy by aggregating diverse predictions, but they require more computational resources and longer training times. They are also less interpretable and more complex to deploy, which poses challenges for applications that need model transparency.

Real-World Applications of Ensemble Learning

Ensemble learning enhances predictive accuracy by combining multiple models, making it highly effective in complex real-world applications such as fraud detection, medical diagnosis, and financial forecasting. Techniques like Random Forests and Gradient Boosting improve robustness and reduce overfitting compared to single-model approaches. This collective decision-making process draws on many diverse models to handle large-scale datasets with higher precision and reliability.

Challenges in Implementing Ensemble Methods

Implementing ensemble methods in machine learning presents challenges such as increased computational complexity and longer training times compared to single model learning. Managing diverse base models requires careful selection and tuning to avoid redundancy and ensure complementary performance. Additionally, ensemble methods can suffer from interpretability issues, making it difficult to understand individual model contributions within the aggregated predictions.
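The point about redundancy can be quantified with a standard result for averages of correlated variables. The sketch below assumes, purely for illustration, that every base model's prediction error has the same variance `sigma2` and that every pair of models has the same correlation `rho`; the function name `ensemble_variance` is hypothetical.

```python
def ensemble_variance(sigma2: float, rho: float, n_models: int) -> float:
    """Variance of the mean of n_models equally correlated predictions.

    Assumes each prediction has variance sigma2 and every pair has
    correlation rho. The first term does not shrink as models are added.
    """
    return rho * sigma2 + (1 - rho) * sigma2 / n_models


# Ten models with unit variance at increasing levels of correlation.
for rho in (0.0, 0.5, 0.9):
    print(rho, ensemble_variance(1.0, rho, 10))
```

With uncorrelated models the variance falls in proportion to the number of models, but as `rho` approaches 1 the ensemble behaves like a single model no matter how many members it has. This is why selecting complementary base models matters more than simply adding more of them.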

Performance Comparison: Ensemble Learning vs Single Models

Ensemble learning often outperforms single-model learning by aggregating predictions from multiple base models, which reduces variance and bias while enhancing robustness and accuracy. Techniques such as bagging, boosting, and stacking create diverse model combinations that improve generalization on complex datasets compared to individual models like decision trees or logistic regression. Empirical studies frequently report that ensemble methods increase predictive performance in classification and regression tasks, particularly in noisy or high-dimensional settings, although the size of the gain depends on the data and on how diverse the base models are.

Future Trends in Ensemble and Single Model Machine Learning

Emerging trends in ensemble learning emphasize hybrid models combining diverse algorithms to boost accuracy and robustness across complex datasets. Single model learning is evolving with advances in neural architecture search and automated hyperparameter tuning to enhance performance and reduce computational costs. Integration of explainability techniques is becoming crucial for both paradigms, addressing transparency and trust in AI-driven decision systems.

Ensemble Learning vs Single Model Learning Infographic

techiny.com
