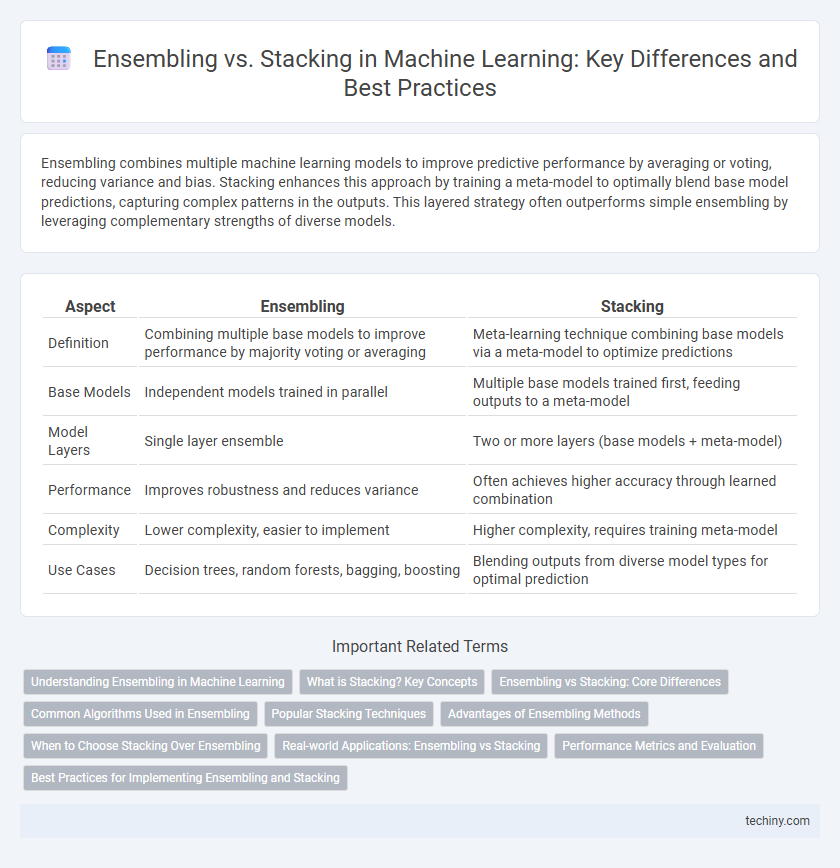

Ensembling combines multiple machine learning models to improve predictive performance by averaging or voting, reducing variance and bias. Stacking enhances this approach by training a meta-model to optimally blend base model predictions, capturing complex patterns in the outputs. This layered strategy often outperforms simple ensembling by leveraging complementary strengths of diverse models.

Table of Comparison

| Aspect | Ensembling | Stacking |

|---|---|---|

| Definition | Combining multiple base models to improve performance by majority voting or averaging | Meta-learning technique combining base models via a meta-model to optimize predictions |

| Base Models | Independent models trained in parallel | Multiple base models trained first, feeding outputs to a meta-model |

| Model Layers | Single layer ensemble | Two or more layers (base models + meta-model) |

| Performance | Improves robustness and reduces variance | Often achieves higher accuracy through learned combination |

| Complexity | Lower complexity, easier to implement | Higher complexity, requires training meta-model |

| Use Cases | Decision trees, random forests, bagging, boosting | Blending outputs from diverse model types for optimal prediction |

Understanding Ensembling in Machine Learning

Ensembling in machine learning combines predictions from multiple models to improve overall accuracy by reducing variance and bias. Common techniques include bagging, boosting, and random forests, which leverage the strengths of diverse models to enhance robustness. This approach typically outperforms individual models by aggregating their predictive power across varied datasets.

What is Stacking? Key Concepts

Stacking is an advanced ensemble learning technique that combines multiple base models to improve predictive performance by training a meta-model on their outputs. Key concepts include base learners, which independently learn from the training data, and a meta-learner, which integrates these predictions to correct individual model errors and capture complementary strengths. This technique leverages diversity among models to reduce bias and variance, enhancing overall accuracy beyond simple averaging or voting methods.

Ensembling vs Stacking: Core Differences

Ensembling combines multiple machine learning models to improve overall performance by averaging or voting, reducing variance and bias. Stacking is a specific ensembling technique that trains a meta-model to optimally blend base models' predictions for enhanced accuracy. While ensembling focuses on combining predictions, stacking emphasizes learning how to best integrate outputs through a second-level model.

Common Algorithms Used in Ensembling

Common algorithms used in ensembling include bagging techniques like Random Forest, which build multiple decision trees on bootstrapped data subsets to reduce variance. Boosting methods such as AdaBoost and Gradient Boosting iteratively weight misclassified instances to improve model accuracy. Voting classifiers combine predictions from diverse models like logistic regression, support vector machines, and k-nearest neighbors to enhance overall performance.

Popular Stacking Techniques

Popular stacking techniques in machine learning include blending, where base models' predictions are combined using a holdout validation set, and multi-level stacking, which involves training multiple layers of models to capture complex patterns. Meta-models like logistic regression or gradient boosting are typically employed to integrate base learners' outputs, optimizing predictive performance. These methods enhance robustness and reduce overfitting by leveraging diverse model strengths in ensemble frameworks.

Advantages of Ensembling Methods

Ensembling methods in machine learning enhance predictive performance by combining multiple base models, which reduces overfitting and improves generalization on unseen data. Techniques like bagging and boosting leverage model diversity to minimize variance and bias, resulting in more stable and robust predictions. Ensembling also mitigates the risk of selecting a poorly performing individual model, increasing overall accuracy and reliability.

When to Choose Stacking Over Ensembling

Stacking outperforms basic ensembling methods when diverse base models generate complementary predictions, enhancing overall accuracy through meta-model learning. It is preferred in complex problems where capturing interactions between models leads to significant performance gains. Choose stacking over simple ensembling when the dataset is sufficiently large to support training multiple layers without overfitting.

Real-world Applications: Ensembling vs Stacking

Ensembling methods, such as bagging and boosting, are widely used in real-world applications like fraud detection and recommendation systems due to their ability to improve model stability and reduce variance. Stacking, which combines multiple heterogeneous models through a meta-learner, excels in complex scenarios like medical diagnosis and financial forecasting by capturing diverse patterns across datasets. Both techniques enhance predictive performance, but stacking often provides superior accuracy in cases where blending varied algorithmic strengths is critical.

Performance Metrics and Evaluation

Ensembling combines predictions from multiple models to improve overall accuracy by reducing variance and bias, often measured through metrics like accuracy, precision, recall, F1 score, and ROC-AUC. Stacking uses a meta-learner to integrate base model outputs, optimizing performance by learning how to best combine predictions, thereby enhancing evaluation metrics especially in complex datasets. Evaluation of both techniques relies on cross-validation and confusion matrices to assess improvements in model generalization and robustness across different performance metrics.

Best Practices for Implementing Ensembling and Stacking

Effective ensembling combines diverse base models to reduce variance and bias, enhancing predictive performance through techniques like bagging and boosting. Stacking integrates multiple models by training a meta-learner on their predictions, requiring careful cross-validation to prevent overfitting and ensure generalization. Best practices include selecting heterogeneous base learners, using proper data splits to maintain independence between training layers, and tuning meta-model complexity for optimal results.

ensembling vs stacking Infographic

techiny.com

techiny.com