Gini impurity measures the likelihood of incorrect classification of a randomly chosen element, emphasizing the probability of mislabeling in a node. Entropy quantifies the disorder or uncertainty within a dataset, capturing the amount of information needed to classify a sample accurately. While Gini impurity tends to favor larger partitions and is computationally simpler, entropy provides a more nuanced assessment of disorder, often resulting in more balanced splits in decision tree algorithms.

Table of Comparison

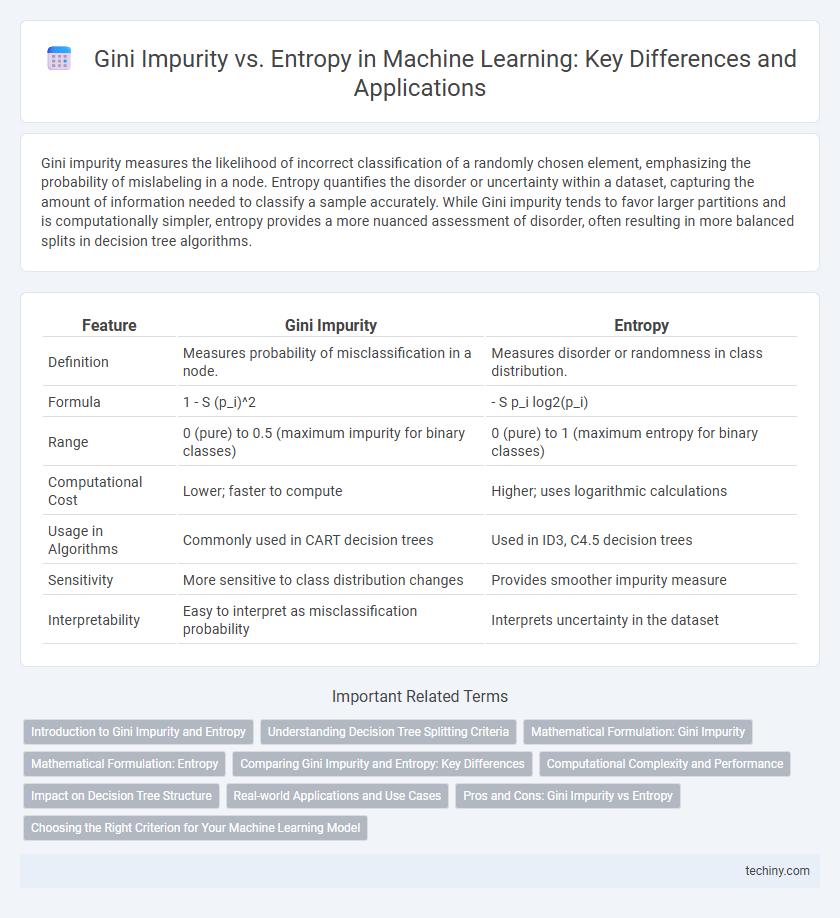

| Feature | Gini Impurity | Entropy |

|---|---|---|

| Definition | Measures probability of misclassification in a node. | Measures disorder or randomness in class distribution. |

| Formula | 1 - S (p_i)^2 | - S p_i log2(p_i) |

| Range | 0 (pure) to 0.5 (maximum impurity for binary classes) | 0 (pure) to 1 (maximum entropy for binary classes) |

| Computational Cost | Lower; faster to compute | Higher; uses logarithmic calculations |

| Usage in Algorithms | Commonly used in CART decision trees | Used in ID3, C4.5 decision trees |

| Sensitivity | More sensitive to class distribution changes | Provides smoother impurity measure |

| Interpretability | Easy to interpret as misclassification probability | Interprets uncertainty in the dataset |

Introduction to Gini Impurity and Entropy

Gini impurity measures the likelihood of incorrect classification of a randomly chosen element by a decision tree, quantifying data purity within a node. Entropy, derived from information theory, evaluates the disorder or uncertainty in a dataset, indicating impurity by calculating the expected amount of information needed to classify a sample. Both metrics guide machine learning algorithms in selecting optimal splits, improving classification accuracy by reducing impurity in decision tree models.

Understanding Decision Tree Splitting Criteria

Gini impurity measures the likelihood of an incorrect classification of a randomly chosen element, favoring splits that result in the most homogeneous child nodes in decision trees. Entropy quantifies the amount of disorder or uncertainty in the dataset, with splits aimed at maximizing information gain and reducing entropy. Both criteria guide the decision tree algorithm to create more pure subsets, enhancing model accuracy through efficient node partitioning.

Mathematical Formulation: Gini Impurity

Gini impurity is mathematically formulated as the sum of the squared probabilities of each class subtracted from one: \( G = 1 - \sum_{i=1}^C p_i^2 \), where \( p_i \) represents the probability of class \( i \) among \( C \) classes. This measure quantifies the likelihood of misclassifying a randomly chosen element if it were labeled according to the distribution of classes in a dataset. Due to its computational simplicity and sensitivity to class distribution, Gini impurity is commonly used in decision tree algorithms like CART for evaluating splits.

Mathematical Formulation: Entropy

Entropy in machine learning quantifies the uncertainty or impurity in a dataset using the formula \( H(S) = - \sum_{i=1}^{c} p_i \log_2 p_i \), where \( p_i \) represents the proportion of class \( i \) in the set \( S \) and \( c \) is the number of classes. This measure captures the expected value of the information contained in each class distribution, reflecting randomness by penalizing mixed classes more heavily than pure ones. The logarithmic base 2 ensures entropy is expressed in bits, aligning with information theory principles for evaluating decision tree splits.

Comparing Gini Impurity and Entropy: Key Differences

Gini impurity measures the probability of misclassifying a randomly chosen element, favoring splits that increase purity in decision trees, while entropy quantifies the disorder or uncertainty in data using information theory principles. Both are used to determine the quality of splits in classification tasks, but Gini impurity tends to be computationally simpler and faster. Entropy often results in more balanced splits by considering the distribution of classes more thoroughly, making it sensitive to changes in class probabilities.

Computational Complexity and Performance

Gini impurity typically requires fewer computations than entropy, making it faster for splitting datasets in decision tree algorithms. While entropy involves logarithmic calculations that increase computational complexity, Gini impurity relies on simpler arithmetic operations, enhancing performance in large-scale machine learning tasks. This difference often results in quicker model training with Gini impurity without significantly compromising accuracy.

Impact on Decision Tree Structure

Gini impurity often leads to slightly faster decision tree training since it requires fewer computations compared to entropy, impacting overall model efficiency. Trees built with Gini impurity tend to produce purer nodes, resulting in potentially shorter and more balanced tree structures. In contrast, entropy-based splits might yield more diverse partitions, influencing model complexity and generalization differently.

Real-world Applications and Use Cases

Gini impurity is frequently utilized in decision tree algorithms like CART due to its computational efficiency, making it suitable for large-scale classification tasks in industries such as finance for credit scoring and fraud detection. Entropy, derived from information theory, is preferred in applications requiring precise information gain calculations, commonly seen in natural language processing and bioinformatics for feature selection. Both metrics enhance model interpretability and accuracy, playing crucial roles in optimizing supervised learning workflows across diverse real-world scenarios.

Pros and Cons: Gini Impurity vs Entropy

Gini impurity offers faster computational efficiency and tends to create pure splits, making it suitable for large datasets and real-time applications, but it is less sensitive to class distribution compared to entropy. Entropy provides a more informative measure of disorder by accounting for the probability distribution of classes, resulting in better split quality in cases with uneven class distributions, although it requires more computational resources. Choosing between Gini impurity and entropy depends on balancing speed versus split precision based on specific dataset characteristics and problem requirements.

Choosing the Right Criterion for Your Machine Learning Model

Gini impurity measures the probability of incorrectly classifying a randomly chosen element, making it computationally faster and effective for decision tree splits. Entropy quantifies the level of disorder or uncertainty in a dataset, often leading to more balanced trees but with higher computational cost. Selecting between Gini impurity and entropy depends on the trade-off between speed and model generalization, with Gini preferred for speed-sensitive applications and entropy for nuanced data distributions.

Gini impurity vs entropy Infographic

techiny.com

techiny.com