Gradient Boosting builds models sequentially by optimizing a differentiable loss function through gradient descent, allowing it to handle a variety of loss functions and produce highly accurate predictions. AdaBoost adjusts the weights of incorrectly classified instances to focus the next learner on harder cases, making it simple and effective for binary classification tasks. While Gradient Boosting offers more flexibility and better performance on complex problems, AdaBoost provides faster training with less risk of overfitting.

Table of Comparison

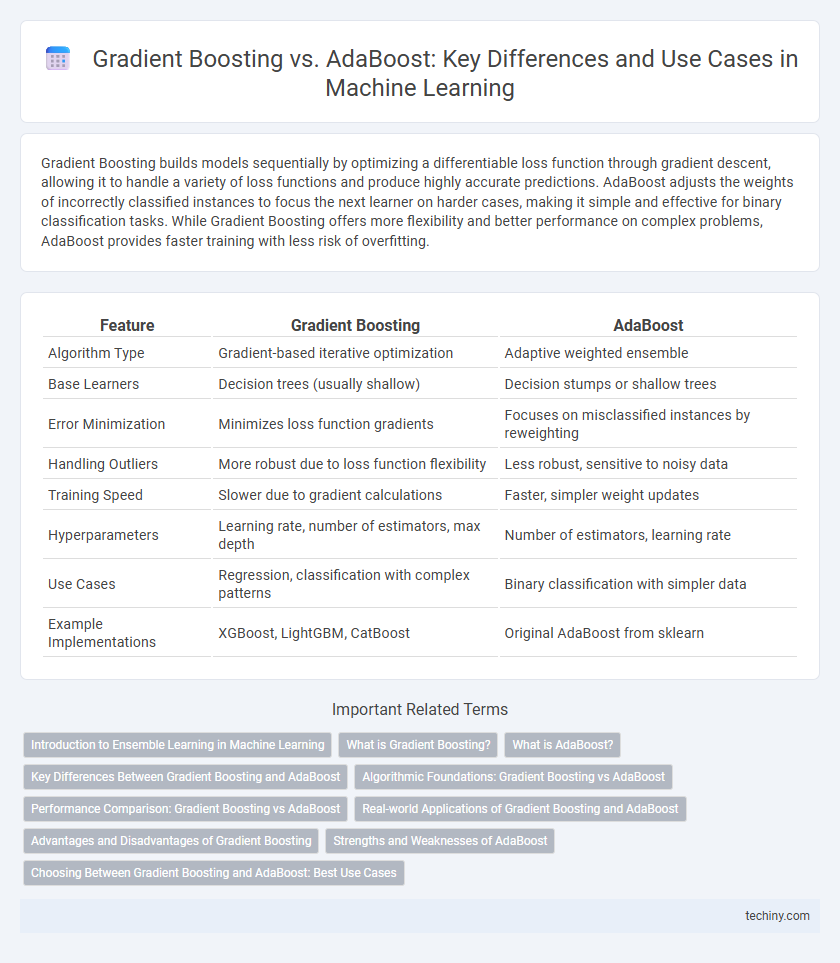

| Feature | Gradient Boosting | AdaBoost |

|---|---|---|

| Algorithm Type | Gradient-based iterative optimization | Adaptive weighted ensemble |

| Base Learners | Decision trees (usually shallow) | Decision stumps or shallow trees |

| Error Minimization | Minimizes loss function gradients | Focuses on misclassified instances by reweighting |

| Handling Outliers | More robust due to loss function flexibility | Less robust, sensitive to noisy data |

| Training Speed | Slower due to gradient calculations | Faster, simpler weight updates |

| Hyperparameters | Learning rate, number of estimators, max depth | Number of estimators, learning rate |

| Use Cases | Regression, classification with complex patterns | Binary classification with simpler data |

| Example Implementations | XGBoost, LightGBM, CatBoost | Original AdaBoost from sklearn |

Introduction to Ensemble Learning in Machine Learning

Ensemble learning in machine learning combines multiple models to improve overall predictive performance by reducing bias and variance. Gradient Boosting builds models sequentially by optimizing a loss function through gradient descent, while AdaBoost focuses on reweighting misclassified instances to enhance weak learners. Both methods create strong predictive models but differ in their approach to error correction and model updating.

What is Gradient Boosting?

Gradient Boosting is a powerful ensemble machine learning technique that builds models sequentially by optimizing a loss function through gradient descent, effectively combining weak learners to create a strong predictive model. It minimizes errors by training each new model to correct the residuals of the previous models, resulting in improved accuracy and robustness. Widely used for regression and classification tasks, Gradient Boosting excels in handling complex patterns and reducing bias in datasets.

What is AdaBoost?

AdaBoost, short for Adaptive Boosting, is an ensemble machine learning algorithm designed to improve the accuracy of weak classifiers by combining them to form a strong classifier. It iteratively adjusts the weights of incorrectly classified instances, focusing subsequent models on harder-to-classify data points. AdaBoost is particularly effective for binary classification tasks and is often used with decision trees as base learners.

Key Differences Between Gradient Boosting and AdaBoost

Gradient Boosting builds models sequentially by optimizing a differentiable loss function through gradient descent, whereas AdaBoost adjusts weights on misclassified instances in each iteration to focus on difficult samples. Gradient Boosting typically handles regression and classification tasks with flexible loss functions, while AdaBoost primarily targets binary classification using exponential loss. The difference in how each algorithm updates model weights and error treatment leads to distinct strengths in robustness and interpretability.

Algorithmic Foundations: Gradient Boosting vs AdaBoost

Gradient Boosting builds models sequentially by optimizing a differentiable loss function using gradient descent, focusing on reducing residual errors through weak learners typically in the form of decision trees. AdaBoost, on the other hand, assigns adaptive weights to training samples, iteratively adjusting them to focus on misclassified instances, combining weak classifiers through weighted majority voting. The core distinction lies in Gradient Boosting's approach to loss function minimization versus AdaBoost's emphasis on reweighting samples to improve classification accuracy.

Performance Comparison: Gradient Boosting vs AdaBoost

Gradient Boosting often outperforms AdaBoost in terms of accuracy and robustness, especially on complex datasets with noisy labels due to its ability to optimize arbitrary differentiable loss functions. AdaBoost tends to be more sensitive to outliers since it assigns higher weights to misclassified samples, potentially leading to overfitting. Gradient Boosting's flexibility and regularization techniques typically result in better generalization and improved performance across diverse machine learning tasks.

Real-world Applications of Gradient Boosting and AdaBoost

Gradient Boosting excels in real-world applications such as credit scoring, fraud detection, and customer churn prediction due to its ability to handle complex datasets and improve accuracy through iterative optimization. AdaBoost is widely used in object detection and face recognition tasks by effectively combining weak classifiers to enhance performance with simpler base learners. Both algorithms are integral in industries like finance, healthcare, and marketing, where predictive accuracy and robustness are critical.

Advantages and Disadvantages of Gradient Boosting

Gradient Boosting offers high predictive accuracy by sequentially correcting errors from previous models, making it ideal for complex datasets with nonlinear relationships. It handles various loss functions and provides robust performance through regularization techniques like shrinkage and subsampling, but this complexity often results in longer training times and higher computational costs. Overfitting can occur without careful parameter tuning, highlighting a trade-off between bias and variance that requires expertise to manage effectively.

Strengths and Weaknesses of AdaBoost

AdaBoost excels in reducing bias by iteratively focusing on misclassified samples, making it highly effective for binary classification problems with clean, balanced datasets. Its sensitivity to noisy data and outliers often leads to overfitting, limiting its robustness in real-world scenarios with data imperfections. The simplicity of its algorithm allows fast convergence but makes it less flexible compared to more complex ensemble methods like Gradient Boosting, which can handle a wider range of loss functions and model complexities.

Choosing Between Gradient Boosting and AdaBoost: Best Use Cases

Gradient Boosting excels in handling complex datasets with noisy features and provides higher predictive accuracy through iterative optimization of loss functions, making it ideal for large-scale regression and classification problems. AdaBoost is better suited for simpler, cleaner datasets where boosting weak classifiers like decision stumps can significantly improve performance without overfitting. Choosing between Gradient Boosting and AdaBoost depends on factors such as dataset size, feature complexity, and desired model interpretability.

Gradient Boosting vs AdaBoost Infographic

techiny.com

techiny.com