Lazy learning algorithms, such as k-nearest neighbors, delay the generalization process until a query is made, storing training data and performing computations only during prediction. In contrast, eager learning methods like decision trees and neural networks build a generalized model from training data before receiving queries, enabling faster predictions at runtime. Understanding the trade-offs between these approaches helps optimize model performance based on the available data and application requirements.

Comparison Table

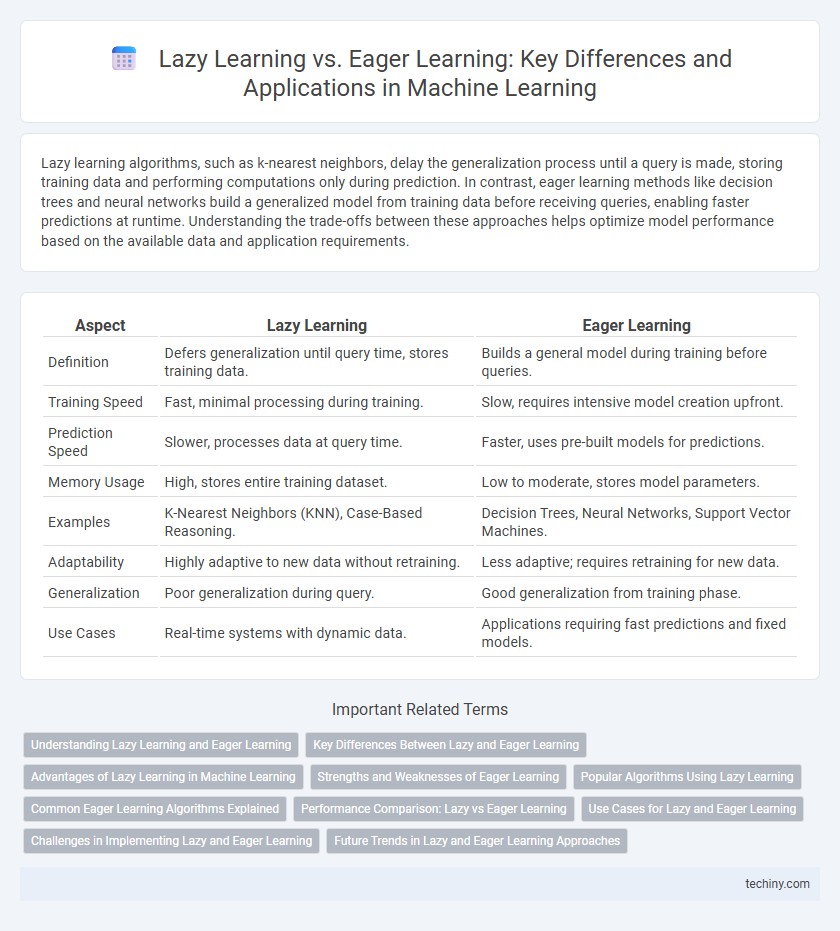

| Aspect | Lazy Learning | Eager Learning |
|---|---|---|
| Definition | Defers generalization until query time; stores the training data. | Builds a general model during training, before any queries arrive. |
| Training Speed | Fast; minimal processing during training. | Slower; the model is built upfront, which can be computationally intensive. |
| Prediction Speed | Slower; most computation happens at query time. | Faster; predictions use the pre-built model. |
| Memory Usage | High; stores the entire training dataset. | Low to moderate; stores only the model parameters. |
| Examples | k-Nearest Neighbors (k-NN), Case-Based Reasoning | Decision Trees, Neural Networks, Support Vector Machines |
| Adaptability | High; new data can be used without retraining. | Lower; typically requires retraining to incorporate new data. |
| Generalization | Local; generalizes separately for each query from nearby examples. | Global; a single model learned during training is applied to all queries. |
| Use Cases | Real-time systems with dynamic data. | Applications requiring fast predictions from a fixed model. |

Understanding Lazy Learning and Eager Learning

Lazy Learning stores training data and delays generalization until a query is made, which allows it to adapt flexibly to new instances but requires more computation during prediction. Eager Learning builds a general model from the training data before receiving queries, leading to faster predictions but less adaptability to changes in the data. Algorithms such as k-Nearest Neighbors exemplify Lazy Learning, while decision trees and neural networks are common Eager Learning approaches.

Key Differences Between Lazy and Eager Learning

Lazy learning stores the training data and delays generalization until a query is made, so training is fast but predictions are slower. Eager learning constructs a general model during the training phase, which makes predictions quick but training longer. The main differences lie in training and prediction efficiency, memory usage, and sensitivity to noisy data: lazy learning is more flexible, while eager learning is more computationally efficient at inference time.
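To make the cost difference concrete, here is a minimal Python sketch (not a library implementation) that pairs a lazy 1-nearest-neighbor classifier with an eager nearest-centroid classifier, a simple eager method chosen here only for illustration. The class names, the `CountingDistance` helper that counts distance evaluations, and the toy data are all hypothetical.

```python
import math
from collections import defaultdict


class CountingDistance:
    """Euclidean distance that counts how often it is evaluated."""

    def __init__(self):
        self.calls = 0

    def __call__(self, a, b):
        self.calls += 1
        return math.dist(a, b)


class LazyOneNN:
    def fit(self, X, y):
        self.memory = list(zip(X, y))  # training = memorize every example
        return self

    def predict(self, x, dist):
        # One distance per stored example: query cost grows with the training set.
        return min(self.memory, key=lambda pair: dist(pair[0], x))[1]


class EagerNearestCentroid:
    def fit(self, X, y):
        groups = defaultdict(list)
        for xi, yi in zip(X, y):
            groups[yi].append(xi)
        # Generalize up front: compress each class into one mean vector.
        self.centroids = {
            label: [sum(col) / len(pts) for col in zip(*pts)]
            for label, pts in groups.items()
        }
        return self

    def predict(self, x, dist):
        # One distance per class, independent of the training-set size.
        return min(self.centroids, key=lambda label: dist(self.centroids[label], x))


# Hypothetical toy data: 1,000 two-feature points split into two classes.
X = [(i / 100, (i % 7) / 7) for i in range(1000)]
y = ["low" if i < 500 else "high" for i in range(1000)]

lazy = LazyOneNN().fit(X, y)
eager = EagerNearestCentroid().fit(X, y)

lazy_dist, eager_dist = CountingDistance(), CountingDistance()
query = (2.0, 0.5)
print(lazy.predict(query, lazy_dist), lazy_dist.calls)     # low 1000
print(eager.predict(query, eager_dist), eager_dist.calls)  # low 2
print(len(lazy.memory), len(eager.centroids))              # 1000 2
```

Both learners agree on this query, but the lazy one evaluates a distance to every stored point and keeps all 1,000 examples in memory, while the eager one keeps two centroids and evaluates one distance per class.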

Advantages of Lazy Learning in Machine Learning

Lazy learning postpones model generalization until query time, which gives it flexibility and lets it adapt immediately to new data. Because it never commits to a single fixed model, it can form precise local approximations around each query, which tends to reduce bias (at the cost of higher variance when very few neighbors are used). This makes it well suited to dynamic environments, and in k-nearest neighbors (k-NN), choosing k greater than 1 lets the neighbors' votes outweigh isolated noisy labels. Since training amounts to storing the data, k-NN can achieve good accuracy on many datasets without an expensive training phase, saving computation during learning.
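The adaptability point can be illustrated with a minimal k-NN regressor written in plain Python. `KNNRegressor` and its `add` method are hypothetical names for this sketch; the "model" is simply the list of stored points, so a new observation affects the very next prediction without any retraining.

```python
class KNNRegressor:
    """Minimal lazy k-NN regressor on one numeric feature (illustrative only)."""

    def __init__(self, k=2):
        self.k = k
        self.data = []  # list of (x, y) pairs; this list *is* the model

    def add(self, x, y):
        # Incorporating new data is an append; nothing is retrained.
        self.data.append((x, y))

    def predict(self, x):
        # Local approximation: average the targets of the k closest stored points.
        nearest = sorted(self.data, key=lambda p: abs(p[0] - x))[: self.k]
        return sum(y for _, y in nearest) / len(nearest)


model = KNNRegressor(k=2)
for x, y in [(1.0, 10.0), (2.0, 12.0), (8.0, 30.0), (9.0, 32.0)]:
    model.add(x, y)

print(model.predict(1.5))  # 11.0, from the points at x=1.0 and x=2.0

# New observations arrive near x=1.5; the next query already uses them.
model.add(1.4, 20.0)
model.add(1.6, 22.0)
print(model.predict(1.5))  # 21.0, from the points at x=1.4 and x=1.6
```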

Strengths and Weaknesses of Eager Learning

Eager learning algorithms build a general model during training, so they predict faster than lazy methods, which must search the stored training data for every query. Their strengths include compact models that use memory efficiently and, because the model summarizes the whole training set, a tendency to smooth over noise in individual examples, which often helps predictive performance on large datasets. However, eager learners can require long training times and are less flexible when the data distribution changes, since adapting usually means retraining.
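As a contrast, here is a minimal eager learner: ordinary least-squares fitting of a straight line in plain Python. The function names `fit_line` and `predict` are hypothetical. All of the computation happens during training, and the resulting model is just two numbers no matter how many examples were used.

```python
def fit_line(xs, ys):
    """Eager training: ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept  # the entire model: two numbers, whatever n is


def predict(model, x):
    slope, intercept = model
    return slope * x + intercept  # constant-time prediction


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # exactly y = 2x + 1
model = fit_line(xs, ys)
print(model)               # (2.0, 1.0)
print(predict(model, 10))  # 21.0
```

In this sketch, incorporating new observations means calling `fit_line` again on the combined data, which is the retraining cost described above.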

Popular Algorithms Using Lazy Learning

Popular lazy learning algorithms include k-Nearest Neighbors (k-NN) and other instance-based learning methods, such as case-based reasoning, which store training data and delay generalization until prediction. They suit scenarios that require adaptability and minimal training time, because they rely on local information gathered at query time. This contrasts with eager learning models like Support Vector Machines and Neural Networks, which build a global model during training.
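A brute-force k-NN classifier fits in a few lines of Python. In the sketch below, the `knn_predict` function and the toy data are hypothetical; the example also shows why the choice of k matters: with k=1 the prediction copies a single mislabeled neighbor, while k=3 lets the surrounding correct labels outvote it.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Brute-force k-NN: sort stored (point, label) pairs by distance, then vote."""
    neighbors = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: a "blue" cluster containing one mislabeled "red" point.
train = [
    ((1.0, 1.0), "blue"), ((1.2, 0.8), "blue"), ((0.8, 1.2), "blue"),
    ((1.1, 1.1), "red"),  # noisy label inside the blue cluster
    ((5.0, 5.0), "red"), ((5.2, 4.8), "red"), ((4.8, 5.2), "red"),
]

query = (1.05, 1.1)
print(knn_predict(train, query, k=1))  # red  (copies the noisy neighbor)
print(knn_predict(train, query, k=3))  # blue (two blue votes outweigh it)
```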

Common Eager Learning Algorithms Explained

Common eager learning algorithms include decision trees, support vector machines, and neural networks, which build a general model from the training data before making predictions. Because the entire model is learned in advance, prediction is faster than with lazy learning methods like k-nearest neighbors. Eager learning is particularly effective when quick inference is critical and enough labeled data is available to train a comprehensive model.
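For a small eager example, the sketch below trains a decision stump, a decision tree with a single split, on one numeric feature in plain Python. The names `train_stump` and `predict_stump` are hypothetical, and the sketch assumes binary labels 0/1 and at least two distinct feature values. The threshold search happens once during training; each prediction is then a single comparison.

```python
def train_stump(xs, ys):
    """Eager training of a one-split decision tree on one numeric feature.

    Assumes labels are 0/1 and xs has at least two distinct values. Tries a
    threshold midway between each pair of adjacent sorted values, in both label
    orientations, and keeps the split with the fewest training errors.
    """
    values = sorted(set(xs))
    best = None
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (left if x <= threshold else right) != y for x, y in zip(xs, ys)
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return threshold, left, right  # the entire model: three values


def predict_stump(model, x):
    threshold, left, right = model
    return left if x <= threshold else right  # one comparison per query


xs = [1, 2, 3, 10, 11, 12]
ys = [0, 0, 0, 1, 1, 1]
model = train_stump(xs, ys)
print(model)                    # (6.5, 0, 1)
print(predict_stump(model, 4))  # 0
print(predict_stump(model, 9))  # 1
```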

Performance Comparison: Lazy vs Eager Learning

Lazy learning algorithms, such as k-nearest neighbors, delay generalization until query time, which makes training fast but prediction slower. Eager learning models like decision trees and neural networks perform extensive training upfront, which enables rapid predictions but requires significant computation during training. In practice, lazy learning tends to suit dynamic or evolving datasets, while eager learning usually offers better inference efficiency, and often strong accuracy, on large, static datasets.

Use Cases for Lazy and Eager Learning

Lazy learning algorithms, such as k-Nearest Neighbors and case-based reasoning, are well suited to dynamic data environments and to settings where the model must reflect new data immediately, such as recommendation systems and personalized search. Eager learning algorithms like decision trees, support vector machines, and neural networks are preferred for large-scale, static datasets in applications such as image recognition, fraud detection, and predictive analytics, where fast prediction and strong generalization are essential. The choice between them depends on data volume, computational resources, and whether real-time adaptability matters more than the efficiency of a pre-trained model.

Challenges in Implementing Lazy and Eager Learning

Lazy learning faces challenges such as high prediction latency and large memory requirements, because it stores the entire training dataset and searches it at query time. Eager learning faces difficulties with retraining and adaptability, since incorporating new data often means rebuilding the model, sometimes from scratch. Both approaches require careful consideration of computational resources and real-time constraints to achieve good performance.

Future Trends in Lazy and Eager Learning Approaches

Emerging trends in lazy learning emphasize adaptive memory management and real-time data querying to enhance model responsiveness and scalability. Eager learning advances focus on integrating deep neural architectures with transfer learning to improve generalization across diverse datasets. Hybrid models combining lazy and eager techniques are anticipated to optimize computational efficiency and predictive accuracy in future machine learning applications.


techiny.com
