Learning rate controls the step size at each iteration while updating model weights, directly impacting convergence speed and stability. Momentum accelerates gradient descent by dampening oscillations and helps navigate ravines in the loss landscape for smoother updates. Balancing learning rate and momentum optimizes training efficiency, preventing overshooting or slow convergence in machine learning models.

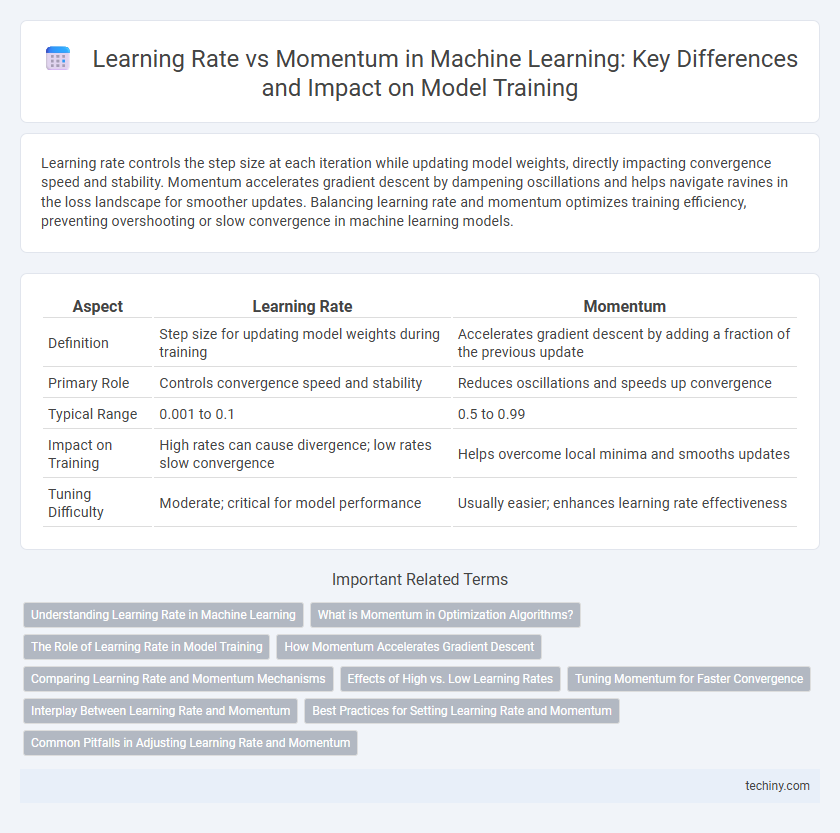

Table of Comparison

| Aspect | Learning Rate | Momentum |

|---|---|---|

| Definition | Step size for updating model weights during training | Accelerates gradient descent by adding a fraction of the previous update |

| Primary Role | Controls convergence speed and stability | Reduces oscillations and speeds up convergence |

| Typical Range | 0.001 to 0.1 | 0.5 to 0.99 |

| Impact on Training | High rates can cause divergence; low rates slow convergence | Helps overcome local minima and smooths updates |

| Tuning Difficulty | Moderate; critical for model performance | Usually easier; enhances learning rate effectiveness |

Understanding Learning Rate in Machine Learning

The learning rate in machine learning controls the step size at each iteration while moving toward a minimum of a loss function, directly influencing how quickly or slowly a model converges. An optimal learning rate balances convergence speed and stability, preventing overshooting or slow training progress. Momentum complements the learning rate by accelerating gradient vectors in consistent directions, thereby smoothing updates and improving convergence especially in non-convex optimization landscapes.

What is Momentum in Optimization Algorithms?

Momentum in optimization algorithms is a technique designed to accelerate gradient descent by accumulating past gradients to smooth out update steps, reducing oscillations and improving convergence speed. It works by adding a fraction of the previous update vector to the current gradient, effectively creating a velocity that helps escape local minima and saddle points. This approach stabilizes updates in high-curvature, noisy, or sparse gradient scenarios, outperforming standard learning rates alone in training deep neural networks.

The Role of Learning Rate in Model Training

The learning rate in model training determines the step size at each iteration while updating model parameters, directly impacting convergence speed and stability. A carefully tuned learning rate can prevent overshooting minima or slow progress, ensuring efficient optimization of loss functions. While momentum helps accelerate gradients in relevant directions, the primary control over training dynamics lies in selecting an appropriate learning rate.

How Momentum Accelerates Gradient Descent

Momentum accelerates gradient descent by smoothing oscillations and enabling faster convergence along relevant directions, especially in regions with steep and shallow curvature. It achieves this by accumulating a velocity vector in parameter space, effectively combining past gradients to maintain consistent updates and overcome small local minima. This mechanism helps avoid the pitfalls of poorly tuned learning rates and enhances stability during training in complex machine learning models.

Comparing Learning Rate and Momentum Mechanisms

Learning rate controls the step size during gradient descent, directly affecting how quickly a model converges to a minimum, while momentum accelerates convergence by accumulating past gradients to smooth updates and avoid local minima. High learning rates can cause overshooting, whereas momentum helps stabilize updates and improves the optimizer's ability to escape shallow valleys. Combining adaptive learning rates with momentum mechanisms like in Adam optimizer enhances training efficiency and model performance.

Effects of High vs. Low Learning Rates

High learning rates can cause training instability, leading to erratic weight updates and potential divergence, while low learning rates result in slow convergence and prolonged training times. Momentum helps smooth out updates by accumulating past gradients, effectively mitigating the oscillations caused by high learning rates and accelerating progress in shallow gradient regions. Balancing learning rate and momentum parameters is crucial for optimizing convergence speed and stability in machine learning models.

Tuning Momentum for Faster Convergence

Tuning momentum in machine learning optimizers significantly accelerates convergence by smoothing updates and preventing oscillations during training. A momentum value typically between 0.8 and 0.99 helps maintain consistent gradient direction, allowing faster progress compared to adjusting learning rate alone. Proper momentum settings combined with an adaptive learning rate lead to more stable and efficient neural network training dynamics.

Interplay Between Learning Rate and Momentum

The interplay between learning rate and momentum critically influences convergence speed and stability in machine learning optimization. Higher momentum values help accelerate gradient descent by dampening oscillations, allowing the learning rate to be effectively increased without destabilizing training. Balancing learning rate and momentum optimizes gradient updates, improving model accuracy and reducing training time.

Best Practices for Setting Learning Rate and Momentum

Optimal learning rate selection involves starting with moderate values, often between 0.001 and 0.01, and adjusting based on the model's convergence speed and stability. Momentum values, typically set between 0.8 and 0.99, help accelerate gradient descent in relevant directions and smooth out oscillations, improving overall training efficiency. Combining adaptive learning rate schedules with momentum tuning can lead to faster convergence and enhanced model performance in various machine learning tasks.

Common Pitfalls in Adjusting Learning Rate and Momentum

Common pitfalls in adjusting learning rate and momentum include setting a high learning rate with high momentum, which can cause oscillations or divergence during training. Using a low learning rate with excessively high momentum may lead to slow convergence or stuck optimization in suboptimal minima. Balancing learning rate decay schedules with momentum adjustments is crucial to avoid unstable gradients and ensure efficient model training.

learning rate vs momentum Infographic

techiny.com

techiny.com