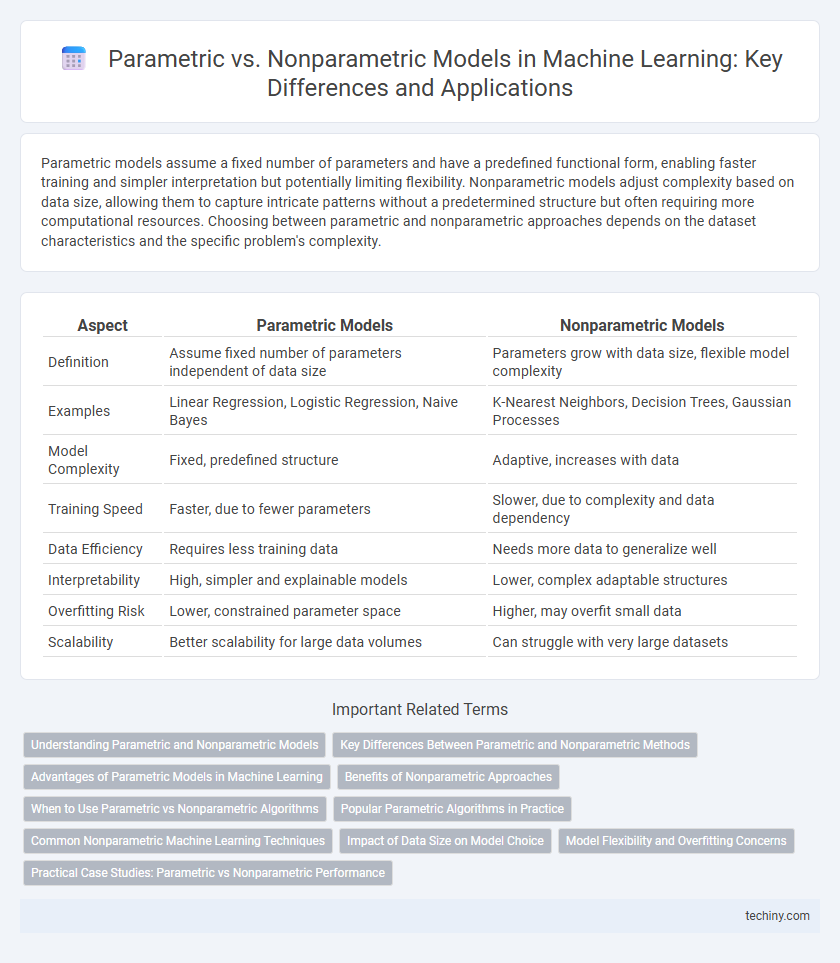

Parametric models assume a fixed number of parameters and have a predefined functional form, enabling faster training and simpler interpretation but potentially limiting flexibility. Nonparametric models adjust complexity based on data size, allowing them to capture intricate patterns without a predetermined structure but often requiring more computational resources. Choosing between parametric and nonparametric approaches depends on the dataset characteristics and the specific problem's complexity.

Table of Comparison

| Aspect | Parametric Models | Nonparametric Models |

|---|---|---|

| Definition | Assume fixed number of parameters independent of data size | Parameters grow with data size, flexible model complexity |

| Examples | Linear Regression, Logistic Regression, Naive Bayes | K-Nearest Neighbors, Decision Trees, Gaussian Processes |

| Model Complexity | Fixed, predefined structure | Adaptive, increases with data |

| Training Speed | Faster, due to fewer parameters | Slower, due to complexity and data dependency |

| Data Efficiency | Requires less training data | Needs more data to generalize well |

| Interpretability | High, simpler and explainable models | Lower, complex adaptable structures |

| Overfitting Risk | Lower, constrained parameter space | Higher, may overfit small data |

| Scalability | Better scalability for large data volumes | Can struggle with very large datasets |

Understanding Parametric and Nonparametric Models

Parametric models in machine learning use a fixed number of parameters to represent the underlying data distribution, enabling faster training and simpler interpretation but potentially limiting flexibility. Nonparametric models, by contrast, do not assume a predefined form or number of parameters, allowing them to adapt more closely to complex datasets at the cost of increased computational resources and risk of overfitting. Understanding the trade-offs between parametric techniques like linear regression and nonparametric methods like k-nearest neighbors is essential for selecting the appropriate model based on dataset size, dimensionality, and specific problem requirements.

Key Differences Between Parametric and Nonparametric Methods

Parametric methods in machine learning rely on a fixed number of parameters and assume a specific form for the underlying data distribution, enabling faster training and easier interpretation but potentially limiting flexibility. Nonparametric methods do not assume a predetermined model structure, allowing them to adapt to complex data patterns and improve accuracy with increasing data size, though often at the cost of higher computational resources. Key differences include model flexibility, scalability with data, interpretability, and sensitivity to the curse of dimensionality.

Advantages of Parametric Models in Machine Learning

Parametric models in machine learning offer advantages such as faster training times and lower computational costs due to their fixed number of parameters. They provide strong interpretability and simpler model structures, making it easier to understand the relationship between inputs and outputs. Parametric approaches also require less training data to achieve effective generalization compared to nonparametric models.

Benefits of Nonparametric Approaches

Nonparametric approaches in machine learning offer flexibility by making fewer assumptions about the underlying data distribution, enabling them to model complex patterns and adapt to varying data structures effectively. These methods, such as k-nearest neighbors and decision trees, often yield higher accuracy in real-world scenarios where data relationships are unknown or highly nonlinear. Their capacity to grow model complexity with data size helps prevent underfitting and enhances predictive performance on diverse datasets.

When to Use Parametric vs Nonparametric Algorithms

Parametric algorithms are preferred when the dataset is small to medium-sized, and the underlying data distribution is known or assumed, enabling faster computation and simpler models like linear regression or logistic regression. Nonparametric algorithms excel with large, complex datasets and unknown distributions, providing flexibility by modeling intricate data patterns through methods such as k-nearest neighbors or decision trees. Choosing between parametric and nonparametric approaches depends on balancing model interpretability, computational efficiency, and the ability to capture data complexity in machine learning tasks.

Popular Parametric Algorithms in Practice

Popular parametric algorithms in machine learning include Linear Regression, Logistic Regression, and Support Vector Machines (SVMs) with linear kernels, known for their fixed number of parameters and efficient computation. These models assume a predefined functional form and often provide faster training times and easier interpretability compared to nonparametric methods. The parametric nature allows them to generalize well on smaller datasets, making them a preferred choice in many practical applications.

Common Nonparametric Machine Learning Techniques

Common nonparametric machine learning techniques include decision trees, k-nearest neighbors (k-NN), and kernel density estimation, which do not assume a fixed form for the underlying data distribution. These methods adapt flexibly to the complexity of the dataset by using the data itself to determine the model structure, allowing them to capture intricate patterns and relationships. Nonparametric models excel in situations with complex or unknown data distributions but typically require larger datasets and greater computational resources compared to parametric approaches.

Impact of Data Size on Model Choice

Parametric models maintain a fixed number of parameters regardless of data size, enabling faster training and lower complexity but potentially limiting flexibility with large datasets. Nonparametric models scale parameters with data volume, improving accuracy by capturing intricate patterns as data increases but requiring more computational resources. Choosing between parametric and nonparametric approaches depends on balancing data size, model interpretability, and computational capacity for optimal machine learning performance.

Model Flexibility and Overfitting Concerns

Parametric models assume a fixed number of parameters which limits model flexibility but reduces overfitting risk in small datasets. Nonparametric models adapt complexity based on data size, offering greater flexibility at the cost of higher overfitting potential. Regularization techniques and cross-validation are essential to balance model flexibility and prevent overfitting in both approaches.

Practical Case Studies: Parametric vs Nonparametric Performance

Parametric models such as linear regression and logistic regression excel in scenarios with smaller, well-defined datasets due to their fixed number of parameters and faster convergence. Nonparametric models like k-nearest neighbors and random forests demonstrate superior performance in complex, high-dimensional data environments by capturing intricate patterns without assuming a predefined form. Practical case studies reveal nonparametric approaches often outperform parametric models in real-world tasks like image recognition and natural language processing where data distribution is unknown or highly variable.

Parametric vs Nonparametric Infographic

techiny.com

techiny.com