SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic samples by interpolating between minority class examples, enhancing model performance by reducing overfitting commonly caused by random oversampling. Random oversampling duplicates existing minority class instances, which can lead to overfitting and less generalizable models. SMOTE offers a more balanced and informative training dataset by creating new, plausible samples rather than exact copies.

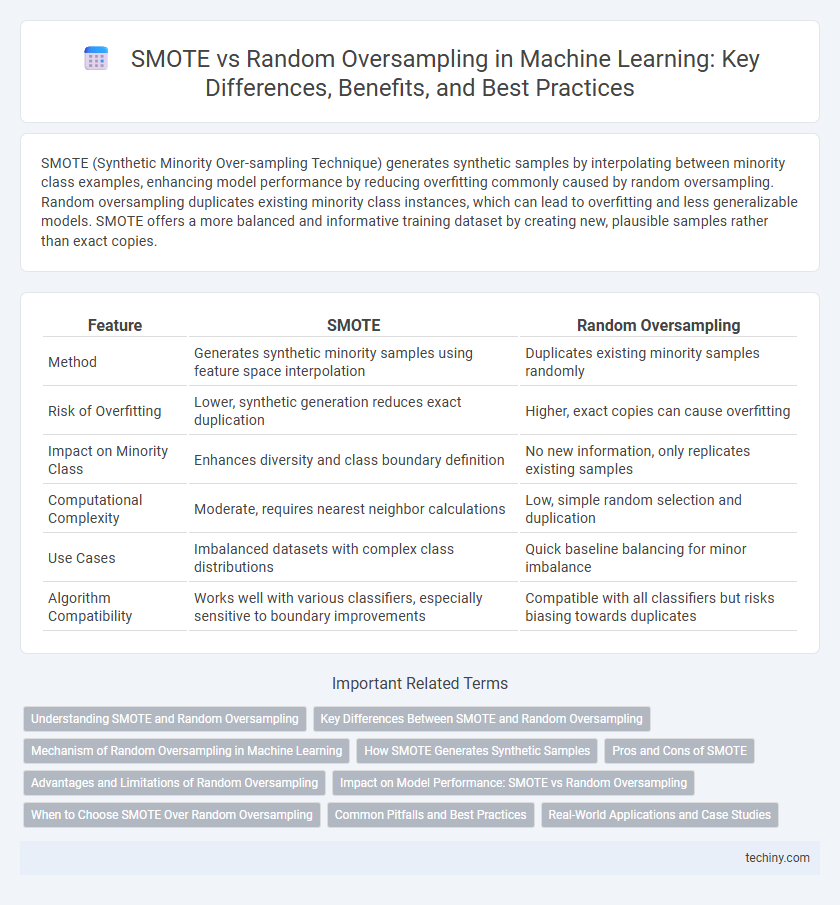

Table of Comparison

| Feature | SMOTE | Random Oversampling |

|---|---|---|

| Method | Generates synthetic minority samples using feature space interpolation | Duplicates existing minority samples randomly |

| Risk of Overfitting | Lower, synthetic generation reduces exact duplication | Higher, exact copies can cause overfitting |

| Impact on Minority Class | Enhances diversity and class boundary definition | No new information, only replicates existing samples |

| Computational Complexity | Moderate, requires nearest neighbor calculations | Low, simple random selection and duplication |

| Use Cases | Imbalanced datasets with complex class distributions | Quick baseline balancing for minor imbalance |

| Algorithm Compatibility | Works well with various classifiers, especially sensitive to boundary improvements | Compatible with all classifiers but risks biasing towards duplicates |

Understanding SMOTE and Random Oversampling

SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic examples by interpolating between existing minority class samples, enhancing the diversity of training data and improving model generalization. Random oversampling duplicates minority class instances, which can lead to overfitting due to redundant examples. SMOTE's approach reduces the risk of overfitting compared to random oversampling by creating more representative synthetic data points in the feature space.

Key Differences Between SMOTE and Random Oversampling

SMOTE generates synthetic samples by interpolating between minority class instances, enhancing feature space diversity and reducing overfitting risks compared to random oversampling, which duplicates existing minority samples. SMOTE improves classifier performance on imbalanced datasets by creating more generalized decision boundaries, whereas random oversampling may lead to model bias due to repeated data points. The synthetic data creation in SMOTE enables better representation of minority class distribution, while random oversampling's exact replication can inflate training time without adding novel information.

Mechanism of Random Oversampling in Machine Learning

Random oversampling in machine learning replicates minority class samples to balance imbalanced datasets, enhancing model performance by providing equal class representation. This method duplicates existing instances without generating new synthetic samples, which can lead to overfitting due to redundancy. Compared to SMOTE, random oversampling is simpler but less effective in creating diverse data points that improve generalization.

How SMOTE Generates Synthetic Samples

SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic samples by interpolating between existing minority class examples and their nearest neighbors in feature space, creating new data points along the line segments connecting these instances. This approach enhances the diversity of the minority class without simply duplicating samples, which helps to reduce overfitting common in random oversampling. By synthesizing realistic, new minority class examples, SMOTE improves classifier performance on imbalanced datasets through more representative training data.

Pros and Cons of SMOTE

SMOTE (Synthetic Minority Over-sampling Technique) generates synthetic samples to balance imbalanced datasets, improving model performance by reducing bias towards the majority class. It effectively addresses overfitting issues common in random oversampling, which simply duplicates minority samples. However, SMOTE can introduce noise and increase class overlap by creating synthetic points along feature space lines, potentially degrading model accuracy in complex datasets.

Advantages and Limitations of Random Oversampling

Random oversampling increases the size of the minority class by duplicating existing samples, which is simple to implement and helps balance imbalanced datasets in machine learning. However, this technique may lead to overfitting since it replicates identical minority class instances without introducing new information, reducing model generalization. Unlike SMOTE, random oversampling does not create synthetic samples, limiting diversity but maintaining dataset integrity without altering feature distributions.

Impact on Model Performance: SMOTE vs Random Oversampling

SMOTE (Synthetic Minority Over-sampling Technique) improves model performance by generating synthetic samples, leading to better generalization and reduced overfitting compared to random oversampling, which simply duplicates minority class instances. Studies show SMOTE enhances precision, recall, and F1-score by creating more diverse data points, whereas random oversampling risks reinforcing noise and bias. The balanced dataset produced by SMOTE supports algorithms in learning more robust decision boundaries, ultimately improving prediction accuracy on imbalanced classification tasks.

When to Choose SMOTE Over Random Oversampling

SMOTE is preferred over random oversampling when addressing imbalanced datasets that require synthetic data generation to improve model generalization and reduce the risk of overfitting to duplicated samples. Unlike random oversampling, which merely replicates minority class examples, SMOTE creates new, synthetic instances by interpolating between existing minority samples, enhancing class boundary representation. This technique is especially beneficial for complex classification tasks where preserving the minority class's feature space diversity is critical.

Common Pitfalls and Best Practices

SMOTE generates synthetic samples by interpolating between minority class instances, reducing the risk of overfitting that random oversampling often causes due to simple duplication of data. Common pitfalls include potential noise amplification with SMOTE and the difficulty of handling high-dimensional data, while best practices recommend combining SMOTE with undersampling and applying it within cross-validation pipelines to prevent data leakage. Random oversampling suits straightforward cases but requires careful use of regularization to mitigate overfitting and may be less effective on highly imbalanced datasets compared to SMOTE's sophisticated interpolation approach.

Real-World Applications and Case Studies

In real-world machine learning applications, SMOTE (Synthetic Minority Over-sampling Technique) outperforms random oversampling by generating synthetic samples that enhance model generalization and reduce overfitting, particularly in imbalanced datasets like fraud detection and medical diagnosis. Case studies in credit scoring demonstrate SMOTE's ability to improve predictive accuracy by creating more informative minority class instances, while random oversampling often leads to duplicated records that do not add value. These empirical results highlight SMOTE's effectiveness for improving classifier performance in diverse industries facing class imbalance issues.

SMOTE vs random oversampling Infographic

techiny.com

techiny.com