Stochastic gradient descent updates model parameters using a single training example at a time, which introduces noise but accelerates convergence and helps escape local minima. Mini-batch gradient descent processes small subsets of data, balancing the variance reduction of batch methods with the computational efficiency of stochastic updates. This approach often results in faster training with improved stability and better generalization compared to purely stochastic or full-batch methods.

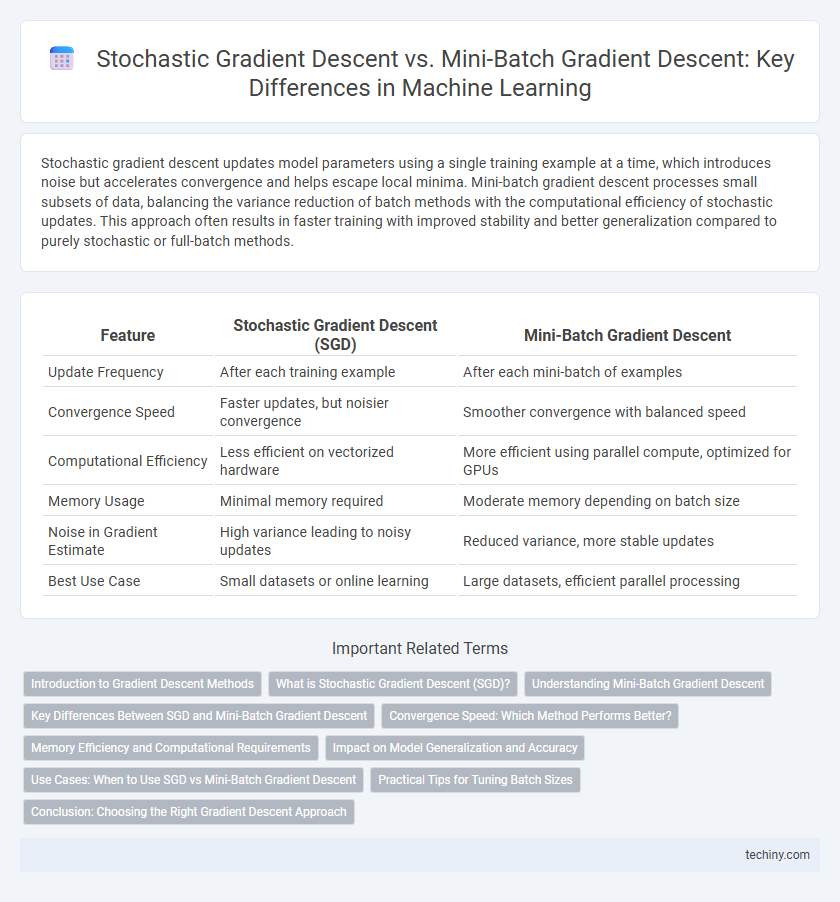

Table of Comparison

| Feature | Stochastic Gradient Descent (SGD) | Mini-Batch Gradient Descent |

|---|---|---|

| Update Frequency | After each training example | After each mini-batch of examples |

| Convergence Speed | Faster updates, but noisier convergence | Smoother convergence with balanced speed |

| Computational Efficiency | Less efficient on vectorized hardware | More efficient using parallel compute, optimized for GPUs |

| Memory Usage | Minimal memory required | Moderate memory depending on batch size |

| Noise in Gradient Estimate | High variance leading to noisy updates | Reduced variance, more stable updates |

| Best Use Case | Small datasets or online learning | Large datasets, efficient parallel processing |

Introduction to Gradient Descent Methods

Stochastic Gradient Descent (SGD) updates model parameters using one training sample at a time, enabling faster convergence on large datasets but introducing higher variance in updates. Mini-batch Gradient Descent balances this by processing small batches of data simultaneously, reducing variance while maintaining computational efficiency and stable convergence. Both methods optimize the cost function iteratively, crucial for training deep learning models effectively.

What is Stochastic Gradient Descent (SGD)?

Stochastic Gradient Descent (SGD) is an optimization algorithm used in machine learning to minimize the loss function by updating model parameters iteratively using a single randomly selected training example at each step. This approach introduces noise into the parameter updates, enabling faster convergence on large datasets compared to full-batch gradient descent. SGD is particularly effective for training deep neural networks where computational efficiency and scalability are critical.

Understanding Mini-Batch Gradient Descent

Mini-batch gradient descent combines the advantages of stochastic gradient descent and batch gradient descent by processing small subsets of the training data, typically between 32 and 256 samples, per iteration. This approach balances the trade-off between noisy updates of stochastic gradient descent and the computational inefficiency of full-batch processing, resulting in faster convergence and more stable gradient estimates. By effectively leveraging parallel computation on GPUs, mini-batch gradient descent accelerates training in deep learning models while maintaining sufficient gradient accuracy for robust optimization.

Key Differences Between SGD and Mini-Batch Gradient Descent

Stochastic Gradient Descent (SGD) updates model parameters using a single training example per iteration, enabling faster but noisier convergence with high variance in gradient estimates. Mini-batch Gradient Descent processes small batches of data samples in each iteration, balancing computational efficiency and gradient estimate stability to achieve smoother and more reliable updates. SGD is preferred for large-scale, online learning scenarios due to its rapid updates, while mini-batch methods are widely used for parallel training and improved convergence in deep learning tasks.

Convergence Speed: Which Method Performs Better?

Stochastic Gradient Descent (SGD) often converges faster initially due to its frequent updates based on single samples, which helps escape local minima and explore the loss surface more effectively. Mini-batch Gradient Descent balances convergence speed and stability by averaging gradients over a batch of samples, leading to more reliable updates and smoother convergence. In practice, mini-batch gradient descent with appropriately chosen batch sizes typically outperforms pure SGD in convergence speed for large-scale machine learning models.

Memory Efficiency and Computational Requirements

Stochastic gradient descent (SGD) processes one data point at a time, resulting in low memory usage but noisy gradient estimates that can slow convergence. Mini-batch gradient descent balances memory efficiency and computational overhead by processing small batches of data, improving gradient stability and leveraging parallel hardware acceleration. While SGD requires minimal memory, mini-batch methods demand more resources but achieve faster training through optimized matrix operations and improved cache utilization.

Impact on Model Generalization and Accuracy

Stochastic gradient descent (SGD) introduces high variance in parameter updates, often leading to better generalization due to its inherent noise that helps escape local minima. Mini-batch gradient descent balances this by reducing variance through averaging gradients over batches, which stabilizes convergence and can improve accuracy on training data. However, smaller mini-batches tend to retain some stochasticity, preserving generalization benefits while maintaining faster and more reliable convergence than pure SGD.

Use Cases: When to Use SGD vs Mini-Batch Gradient Descent

Stochastic Gradient Descent (SGD) is ideal for large-scale machine learning problems with massive datasets where faster, noisier updates enhance model generalization and convergence speed. Mini-batch Gradient Descent balances computational efficiency and gradient stability by processing small subsets of data, making it suitable for moderate-sized datasets and parallel hardware optimizations like GPUs. Choosing between them depends on the trade-off between convergence speed, memory constraints, and model accuracy requirements in specific applications.

Practical Tips for Tuning Batch Sizes

Choosing the optimal batch size in stochastic gradient descent (SGD) versus mini-batch gradient descent significantly impacts model convergence and training efficiency. Smaller batch sizes like 32 or 64 improve generalization by introducing noise in gradient estimates, while larger sizes (128 to 512) accelerate computation but risk poorer generalization. Practical tuning involves balancing hardware constraints, convergence speed, and validation accuracy, often requiring empirical testing with adaptive learning rate schedules such as learning rate warm-up or decay for best performance.

Conclusion: Choosing the Right Gradient Descent Approach

Stochastic gradient descent (SGD) excels in faster convergence on large, noisy datasets by updating weights per sample, making it ideal for online learning scenarios. Mini-batch gradient descent balances the computational efficiency of batch processing with the noise reduction of SGD by updating weights on small data batches, enhancing stability and convergence speed. Selecting the right gradient descent method depends on dataset size, memory constraints, and the trade-off between convergence speed and stability.

stochastic gradient descent vs mini-batch gradient descent Infographic

techiny.com

techiny.com