On-device AI in mobile applications enables real-time processing and stronger privacy by analyzing data locally on the device, which reduces latency and dependence on internet connectivity. Cloud AI, in contrast, offers far greater computational resources and can learn continuously from aggregated user data, which allows larger models and more frequent improvements. Combining on-device AI with cloud AI lets mobile applications stay responsive, handle data securely, and personalize the user experience.

Table of Comparison

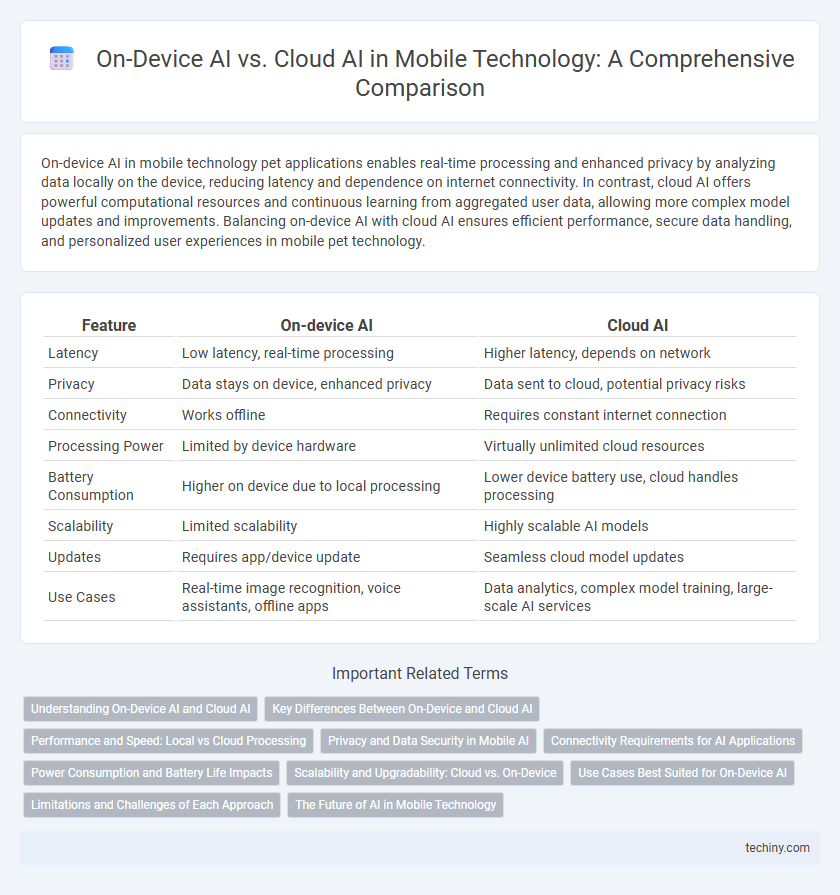

| Feature | On-device AI | Cloud AI |
|---|---|---|
| Latency | Low; real-time processing | Higher; depends on the network |
| Privacy | Data stays on the device | Data is sent to the cloud, with potential privacy risks |
| Connectivity | Works offline | Requires an internet connection |
| Processing Power | Limited by device hardware | Virtually unlimited cloud resources |
| Battery Consumption | Local processing draws device power | Less on-device computation, but network transfers draw power |
| Scalability | Limited by device hardware | Highly scalable |
| Updates | Requires an app or device update | Seamless server-side model updates |
| Use Cases | Real-time image recognition, voice assistants, offline apps | Data analytics, complex model training, large-scale AI services |

Understanding On-Device AI and Cloud AI

On-device AI processes data locally on smartphones, tablets, and other mobile devices, enabling real-time responses and stronger privacy by minimizing data transmission. Cloud AI uses powerful remote servers to perform complex computations, offering scalable resources and continuous updates, but it depends on stable internet connectivity. Weighing on-device AI's low latency and data security against cloud AI's computational strength is the central design decision for mobile AI applications.

Key Differences Between On-Device and Cloud AI

On-device AI processes data directly on smartphones or IoT devices, ensuring faster response times and enhanced privacy by minimizing data transfer. Cloud AI leverages powerful remote servers for extensive data analysis and complex computations, enabling scalable and continuous learning across diverse datasets. The fundamental differences lie in latency, data security, computational capacity, and connectivity dependence, shaping their distinct applications and user experiences.

Performance and Speed: Local vs Cloud Processing

On-device AI delivers faster response times by processing data locally on smartphones or IoT devices, which removes network speed from the critical path. Cloud AI can run far heavier computations on remote servers, but each request pays for data transmission and is exposed to network variability. Optimizing performance therefore means splitting work between local processing and cloud resources so that real-time requirements are met without sacrificing energy efficiency.
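The latency trade-off can be made concrete with a back-of-the-envelope estimate. The sketch below is illustrative only: the function name, parameters, and every number in the example call are assumptions chosen for demonstration, not measurements from any real device or service.

```python
from dataclasses import dataclass


@dataclass
class LatencyEstimate:
    on_device_ms: float
    cloud_ms: float

    @property
    def faster_path(self) -> str:
        return "on-device" if self.on_device_ms <= self.cloud_ms else "cloud"


def estimate_latency(local_inference_ms: float,
                     server_inference_ms: float,
                     round_trip_ms: float,
                     payload_kilobytes: float,
                     uplink_kbps: float) -> LatencyEstimate:
    """Rough end-to-end latency of each path, in milliseconds."""
    # Upload time: kilobytes -> kilobits, divided by kilobits per second,
    # then converted from seconds to milliseconds.
    upload_ms = (payload_kilobytes * 8) / uplink_kbps * 1000
    cloud_ms = round_trip_ms + upload_ms + server_inference_ms
    return LatencyEstimate(on_device_ms=local_inference_ms, cloud_ms=cloud_ms)


# Hypothetical numbers: a 200 KB image, a 5 Mbit/s uplink, an 80 ms round trip.
estimate = estimate_latency(local_inference_ms=40,
                            server_inference_ms=10,
                            round_trip_ms=80,
                            payload_kilobytes=200,
                            uplink_kbps=5000)
print(estimate.faster_path, round(estimate.cloud_ms))  # on-device 410
```

With these placeholder values the server computes four times faster than the device, yet the upload alone (320 ms) dominates the cloud path. A smaller payload or a faster uplink would shift the balance.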

Privacy and Data Security in Mobile AI

On-device AI processes data locally on mobile devices, significantly reducing the risk of data breaches by eliminating the need to transmit sensitive information to external servers. Cloud AI relies on centralized servers for data processing, which increases vulnerability to cyberattacks and potential unauthorized access to personal data. Mobile AI solutions prioritize privacy and data security through encryption, secure hardware enclaves, and strict data handling protocols to protect user information at both local and cloud levels.

Connectivity Requirements for AI Applications

On-device AI significantly reduces dependency on continuous internet connectivity by processing data locally, enabling real-time decision-making even in low or no network conditions. Cloud AI relies heavily on stable, high-speed internet connections to transmit data to remote servers for analysis, which can introduce latency and privacy concerns. Mobile applications that demand fast responsiveness and enhanced data security often benefit from on-device AI's minimal connectivity requirements.

Power Consumption and Battery Life Impacts

On-device AI can reduce power consumption by avoiding continuous data transmission to the cloud, since wireless transfers are energy-intensive on mobile devices. Local inference has its own energy cost, however, and a large model running on the device can drain the battery faster than offloading the same work would. Cloud AI moves the computation to a server but keeps the radio active for every exchange. Which approach uses less battery depends on model size, payload size, and network conditions, so AI workloads should be profiled and optimized for the target device to prolong battery life while keeping performance responsive.
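The battery question comes down to compute energy versus radio energy. The following is a minimal sketch of that comparison, assuming a simple power-times-time model. The function names and all power and timing figures are placeholders for illustration, and real values would have to come from profiling the target device.

```python
def local_energy_mj(compute_power_mw: float, inference_s: float) -> float:
    """Energy spent running inference on the device (mW * s = mJ)."""
    return compute_power_mw * inference_s


def cloud_energy_mj(radio_power_mw: float, transfer_s: float,
                    idle_power_mw: float, wait_s: float) -> float:
    """Energy spent on the device while offloading: radio time plus idle wait."""
    return radio_power_mw * transfer_s + idle_power_mw * wait_s


# Placeholder figures, not measurements.
local = local_energy_mj(compute_power_mw=2000, inference_s=0.05)
cloud = cloud_energy_mj(radio_power_mw=800, transfer_s=0.4,
                        idle_power_mw=50, wait_s=0.1)
cheaper = "on-device" if local < cloud else "cloud"
```

Under these assumed numbers the local path costs about 100 mJ and the offloaded path about 325 mJ. A heavier model or a smaller upload would reverse the result, which is why the comparison has to be made per workload.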

Scalability and Upgradability: Cloud vs. On-Device

On-device AI offers limited scalability due to hardware constraints, restricting its ability to handle growing data and complex models compared to cloud AI, which leverages virtually unlimited cloud infrastructure to scale dynamically. Cloud AI enables seamless upgradability by deploying model updates centrally without user intervention, whereas on-device AI requires local updates that can be slower and fragmented across devices. Enterprises prioritize cloud AI for rapid scaling and continuous improvements, while on-device AI benefits applications demanding low latency and privacy.

Use Cases Best Suited for On-Device AI

On-device AI excels in use cases requiring low latency, enhanced privacy, and offline functionality, such as real-time language translation, facial recognition, and personalized fitness tracking. It processes sensitive data locally, reducing the risk of data breaches and dependence on network connectivity. Applications in autonomous vehicles, augmented reality, and smart wearables benefit significantly from on-device AI due to its immediate response capabilities and energy efficiency.

Limitations and Challenges of Each Approach

On-device AI faces limitations in processing power, memory capacity, and energy consumption, restricting complex computations and continuous learning capabilities. Cloud AI encounters challenges related to latency, data privacy, and dependency on reliable network connectivity, which can hinder real-time responsiveness and secure data handling. Balancing these constraints is critical for optimizing mobile technology performance and user experience.

The Future of AI in Mobile Technology

On-device AI enhances mobile technology by enabling real-time data processing, improved privacy, and reduced latency compared to Cloud AI, which relies on remote servers for computation. Advances in AI chipsets and machine learning models facilitate more powerful and efficient on-device AI capabilities in smartphones and wearables. The future of AI in mobile technology will likely hinge on a hybrid approach, leveraging both on-device AI for speed and security and Cloud AI for complex data analysis and continuous learning.
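A hybrid approach needs some rule for deciding where each request runs. The sketch below shows one possible policy, assuming four yes/no signals per request. The `Request` fields, the `choose_backend` function, and the priority order are all hypothetical and would need tuning for a real application.

```python
from dataclasses import dataclass


@dataclass
class Request:
    contains_sensitive_data: bool  # e.g. biometric or health data
    needs_realtime: bool           # response must not wait on the network
    fits_on_device: bool           # a local model can handle this task
    online: bool                   # network is currently reachable


def choose_backend(req: Request) -> str:
    """Return "on-device", "cloud", or "unavailable" for a request."""
    if not req.fits_on_device:
        # Only the cloud can serve this task, so it needs connectivity.
        return "cloud" if req.online else "unavailable"
    if not req.online:
        return "on-device"
    if req.contains_sensitive_data or req.needs_realtime:
        # Keep private or latency-critical work local.
        return "on-device"
    # Otherwise prefer the larger cloud model.
    return "cloud"


print(choose_backend(Request(True, False, True, True)))    # on-device
print(choose_backend(Request(False, False, True, True)))   # cloud
print(choose_backend(Request(False, True, False, False)))  # unavailable
```

The order of the checks encodes the priorities: capability first, then connectivity, then privacy and latency. An application with different priorities would reorder them.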

techiny.com