Hot standby networking systems maintain a backup connection that activates only when the primary link fails, keeping service running with minimal downtime. Load balancing distributes network traffic across multiple connections, making better use of resources and improving overall performance and reliability. Choosing between hot standby and load balancing depends on whether the priority is fault tolerance or maximum throughput.

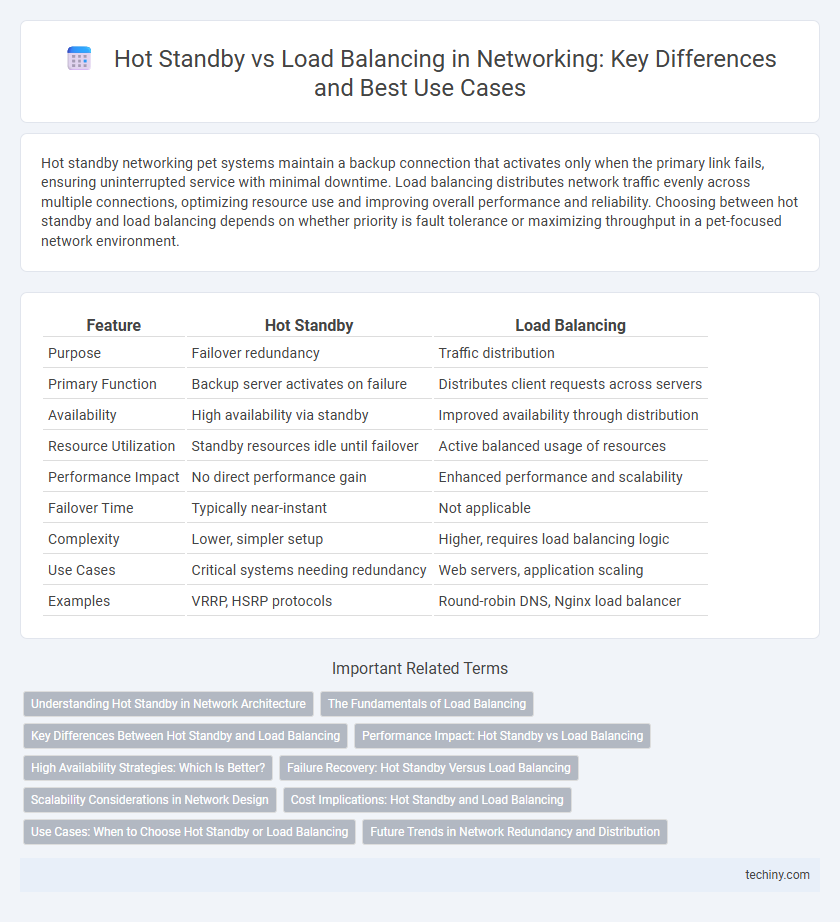

Table of Comparison

| Feature | Hot Standby | Load Balancing |
|---|---|---|
| Purpose | Failover redundancy | Traffic distribution |
| Primary Function | Backup server takes over when the primary fails | Distributes client requests across servers |
| Availability | High availability via a standby node | Improved availability through distribution |
| Resource Utilization | Standby resources idle until failover | All resources actively share the load |
| Performance Impact | No direct performance gain | Higher throughput and scalability |
| Failover Time | Usually seconds, governed by protocol timers | No switchover; remaining servers absorb traffic once a failure is detected |
| Complexity | Lower, simpler setup | Higher, requires load-balancing logic |
| Use Cases | Critical systems needing redundancy | Web servers, application scaling |
| Examples | VRRP, HSRP protocols | Round-robin DNS, Nginx load balancer |

Understanding Hot Standby in Network Architecture

Hot standby in network architecture is a redundancy technique in which a secondary system or device stays fully operational and synchronized with the primary, ready to take over immediately if the primary fails. This gives the network an automatic failover mechanism that keeps critical services available, although the standby carries no traffic during normal operation. Unlike load balancing, which spreads traffic across multiple devices to improve performance, hot standby prioritizes seamless failover and fault tolerance.
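The failover behaviour described above can be sketched in a few lines. This is a minimal illustration, not how VRRP or HSRP actually elect a router: the `Node` and `HotStandbyPair` names are hypothetical, and the `healthy` flag stands in for whatever real health check or heartbeat a deployment would use.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A hypothetical server; `healthy` stands in for a real health check."""
    name: str
    healthy: bool = True


class HotStandbyPair:
    """Minimal active/standby failover: one node serves, the other waits."""

    def __init__(self, primary: Node, standby: Node) -> None:
        self.active = primary
        self.standby = standby

    def route(self) -> str:
        """Return the name of the node that should handle the next request."""
        if not self.active.healthy and self.standby.healthy:
            # Failover: promote the standby and demote the failed node.
            self.active, self.standby = self.standby, self.active
        if not self.active.healthy:
            raise RuntimeError("no healthy node available")
        return self.active.name


if __name__ == "__main__":
    primary, backup = Node("primary"), Node("backup")
    pair = HotStandbyPair(primary, backup)
    print(pair.route())  # primary
    primary.healthy = False
    print(pair.route())  # backup
```

Note that this sketch does not hand traffic back when the original primary recovers; whether a recovered primary preempts the current active node is a configurable choice in real redundancy protocols.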

The Fundamentals of Load Balancing

Load balancing distributes network traffic across multiple servers to optimize resource use, maximize throughput, and minimize response time, ensuring high availability and reliability. It operates by dynamically allocating connections based on predefined algorithms such as round-robin, least connections, or IP hash, adapting to server health and load conditions. Hot standby, in contrast, relies on a passive backup server that activates only upon failure, lacking the real-time traffic distribution and scalability inherent in load balancing frameworks.
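The three algorithms named above can be illustrated with a small, self-contained sketch. The class and function names are hypothetical, server names are plain strings, and health checks are omitted; production load balancers implement these strategies with more detail (Nginx, listed in the table above, uses round-robin by default and offers `least_conn` and `ip_hash` upstream directives).

```python
import hashlib
import itertools


class RoundRobin:
    """Cycle through servers in a fixed order."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each new connection to the server with the fewest open ones."""

    def __init__(self, servers: list[str]) -> None:
        self.open = {s: 0 for s in servers}

    def pick(self) -> str:
        # Ties go to the server listed first.
        server = min(self.open, key=self.open.get)
        self.open[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.open[server] -= 1


def ip_hash(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to a fixed server so the same client keeps landing on it."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


if __name__ == "__main__":
    servers = ["app1", "app2", "app3"]
    rr = RoundRobin(servers)
    print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']
    print(ip_hash("203.0.113.7", servers))
```

Round-robin ignores how busy each server is, least connections reacts to current load, and IP hash trades even distribution for client affinity.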

Key Differences Between Hot Standby and Load Balancing

Hot standby uses one primary server to handle all traffic while a secondary server stays idle, ready to take over if the primary fails, which provides high availability and minimal downtime. Load balancing distributes network or application traffic across multiple servers at the same time to make better use of resources, increase throughput, and prevent overload. The core difference is strategy: hot standby relies on failover, whereas load balancing relies on traffic distribution, so the former suits fault tolerance and the latter performance enhancement.

Performance Impact: Hot Standby vs Load Balancing

Hot standby ensures high availability by maintaining a backup system ready to take over instantly, minimizing downtime but not enhancing overall throughput. Load balancing distributes network traffic across multiple servers, significantly improving performance and resource utilization. While hot standby prioritizes fault tolerance with minimal performance gain, load balancing actively optimizes response times and scalability under heavy loads.
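A quick capacity calculation shows where the throughput difference comes from. This sketch assumes identical servers, ideal distribution with no overhead, and a hot standby setup with a single active node; the `usable_capacity` function and the 1,000 requests-per-second figure are hypothetical.

```python
def usable_capacity(per_server_rps: float, servers: int, mode: str, failed: int = 0) -> float:
    """Requests per second a redundant group can serve.

    Assumes identical servers, overhead-free distribution, and (for hot
    standby) one active node with the remaining servers as standbys.
    """
    if not 0 <= failed <= servers:
        raise ValueError("failed must be between 0 and servers")
    alive = servers - failed
    if mode == "hot_standby":
        # Only one node serves at a time; standbys add no throughput.
        return per_server_rps if alive > 0 else 0.0
    if mode == "load_balancing":
        # Every surviving node serves a share of the traffic.
        return per_server_rps * alive
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    for mode in ("hot_standby", "load_balancing"):
        normal = usable_capacity(1000, 2, mode)
        degraded = usable_capacity(1000, 2, mode, failed=1)
        print(mode, normal, degraded)
```

With two such servers, hot standby serves 1,000 requests/s and load balancing 2,000 in normal operation, but both fall to 1,000 after one failure. A load-balanced pool therefore only stays fault tolerant if its peak load fits on the servers that remain.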

High Availability Strategies: Which Is Better?

When designing for high availability, hot standby and load balancing are often compared. Hot standby provides immediate failover by keeping a duplicate system ready to take over during a failure, ensuring minimal downtime. Load balancing distributes traffic across multiple servers to improve resource use and performance while still offering redundancy, which makes it the better fit for environments that need to scale.
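Both strategies raise availability the same basic way: the service stays up as long as at least one node is up. A common back-of-the-envelope model, sketched below, is A_system = 1 - (1 - A_node)^n. It assumes independent failures and instant, always-successful failover, and for load balancing it also assumes the surviving nodes can carry the full load; the function names are hypothetical.

```python
def parallel_availability(node_availability: float, nodes: int) -> float:
    """Availability of a group that stays up while at least one node is up.

    Assumes independent failures and instant, always-successful failover,
    which real deployments only approximate.
    """
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if nodes < 1:
        raise ValueError("need at least one node")
    return 1.0 - (1.0 - node_availability) ** nodes


def yearly_downtime_minutes(availability: float) -> float:
    """Expected minutes of downtime in a 365-day year."""
    return (1.0 - availability) * 365 * 24 * 60


if __name__ == "__main__":
    pair = parallel_availability(0.99, 2)
    print(f"{pair:.4%} available, ~{yearly_downtime_minutes(pair):.0f} min/year down")
```

Under these assumptions, two nodes at 99 % each give roughly 99.99 %, or about 53 minutes of downtime per year, whether they are arranged as an active/standby pair or a two-server pool.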

Failure Recovery: Hot Standby Versus Load Balancing

Hot standby offers rapid failure recovery by maintaining a fully synchronized backup server that takes over immediately if the primary fails, minimizing downtime. Load balancing distributes traffic across multiple servers, which improves fault tolerance because the remaining servers handle requests if one fails; however, sessions pinned to the failed server must be redistributed, and any session state that was not shared between servers is lost. Both techniques improve network reliability: hot standby favors instant failover, while load balancing provides scalable load distribution with built-in redundancy.
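The session redistribution mentioned above can be sketched as a round-robin pool that skips unhealthy servers and re-homes sticky sessions. The `HealthAwarePool` class is hypothetical, `mark_down`/`mark_up` stand in for real health checks, and only the session-to-server mapping moves, not any session data.

```python
import itertools


class HealthAwarePool:
    """Round-robin pool that skips unhealthy servers and re-homes sticky sessions."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(servers)
        self.sessions: dict[str, str] = {}  # session id -> assigned server
        self._counter = itertools.count()

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        self.healthy.add(server)

    def _next_healthy(self) -> str:
        candidates = [s for s in self.servers if s in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy servers")
        return candidates[next(self._counter) % len(candidates)]

    def route(self, session_id: str) -> str:
        server = self.sessions.get(session_id)
        if server is None or server not in self.healthy:
            # New session, or its server failed: assign a healthy one.
            # Any state held only on the failed server is not carried over.
            server = self._next_healthy()
            self.sessions[session_id] = server
        return server
```

Sessions on healthy servers stay where they are; only those whose server failed are moved, which is why shared or external session storage matters for seamless recovery.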

Scalability Considerations in Network Design

Hot standby provides limited scalability by maintaining a single backup system ready to take over, ensuring high availability but restricting capacity expansion. Load balancing distributes traffic across multiple servers, enhancing scalability by allowing dynamic allocation of resources based on demand. Network designs aiming for robust scalability typically favor load balancing to efficiently manage increased workloads and optimize resource utilization.

Cost Implications: Hot Standby and Load Balancing

Hot standby incurs higher costs due to dedicated hardware resources reserved for failover, leading to underutilized infrastructure during normal operation. Load balancing optimizes resource use by distributing traffic across multiple servers, reducing the need for idle backup units and improving overall cost efficiency. Organizations must weigh the upfront investment and ongoing maintenance expenses of hot standby against the scalable, dynamic allocation benefits of load balancing.
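The idle-hardware cost can be made concrete with a rough utilization model. The sketch below assumes identical servers, that the system must still carry its peak load after any single failure (so a load-balanced pool is sized with one spare server's worth of headroom), and that hot standby means one active node with every other node idle; `peak_utilization` is a hypothetical name.

```python
from fractions import Fraction


def peak_utilization(nodes: int, mode: str) -> Fraction:
    """Share of provisioned capacity busy at peak load.

    Assumes identical servers and that peak load must still fit after
    one node fails.
    """
    if nodes < 2:
        raise ValueError("redundancy needs at least two nodes")
    if mode == "hot_standby":
        # One active node carries all load; the rest idle as standbys.
        return Fraction(1, nodes)
    if mode == "load_balancing":
        # Peak load must fit on nodes - 1 servers, so spread across all
        # nodes it uses (nodes - 1) / nodes of total capacity.
        return Fraction(nodes - 1, nodes)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    for n in (2, 4):
        print(n, peak_utilization(n, "hot_standby"), peak_utilization(n, "load_balancing"))
```

For a two-server pair both designs peak at 50 % utilization; a four-server load-balanced pool sized this way peaks at 75 %, which is where the idle-hardware cost of hot standby becomes visible.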

Use Cases: When to Choose Hot Standby or Load Balancing

Hot standby is ideal for critical systems requiring immediate failover with minimal downtime, such as financial transaction servers or emergency communication systems. Load balancing suits environments with high traffic volume like web servers and cloud services, distributing workloads to optimize resource use and enhance performance. Selecting between hot standby and load balancing depends on prioritizing system availability versus scalability and performance under varying load conditions.

Future Trends in Network Redundancy and Distribution

Future trends in network redundancy emphasize the integration of hot standby configurations with intelligent load balancing algorithms to enhance fault tolerance and resource utilization. Emerging technologies like AI-driven predictive analytics enable dynamic traffic distribution and proactive failover, minimizing downtime and optimizing network performance. The convergence of software-defined networking (SDN) and edge computing further supports scalable, adaptive redundancy models that address increasing demands for low latency and high availability in distributed network environments.

hot standby vs load balancing Infographic

techiny.com