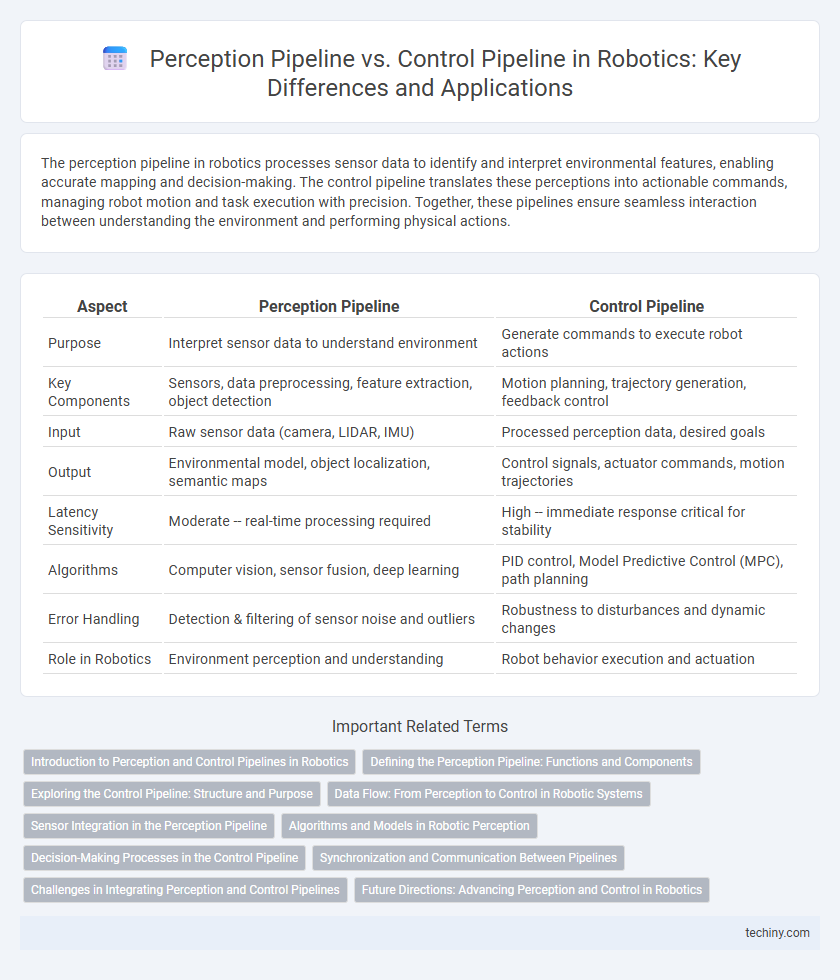

The perception pipeline in robotics processes sensor data to identify and interpret environmental features, which supports mapping and decision-making. The control pipeline turns those perception results, together with task goals, into actuator commands that govern robot motion and task execution. Together, the two pipelines close the loop between sensing the environment and acting on it.

Table of Comparison

| Aspect | Perception Pipeline | Control Pipeline |
|---|---|---|
| Purpose | Interpret sensor data to understand environment | Generate commands to execute robot actions |
| Key Components | Sensors, data preprocessing, feature extraction, object detection | Motion planning, trajectory generation, feedback control |
| Input | Raw sensor data (camera, LiDAR, IMU) | Processed perception data, desired goals |
| Output | Environmental model, object localization, semantic maps | Control signals, actuator commands, motion trajectories |
| Latency Sensitivity | Moderate: real-time processing required | High: immediate response critical for stability |
| Algorithms | Computer vision, sensor fusion, deep learning | PID control, Model Predictive Control (MPC), path planning |
| Error Handling | Detection and filtering of sensor noise and outliers | Robustness to disturbances and dynamic changes |
| Role in Robotics | Environment perception and understanding | Robot behavior execution and actuation |

Introduction to Perception and Control Pipelines in Robotics

Perception pipelines in robotics process sensor data to build an understanding of the environment, enabling tasks such as object detection, localization, and mapping. Control pipelines use this environmental information to generate and execute motor commands that guide a robot's movements and interactions. Integrating robust perception with precise control algorithms ensures effective navigation and manipulation in dynamic and unstructured settings.

Defining the Perception Pipeline: Functions and Components

The perception pipeline in robotics processes sensor data to extract meaningful information, such as recognized objects, the robot's location, and a map of the environment, so the robot can understand its surroundings. Core components include sensors (LiDAR, cameras, IMUs), data preprocessing, feature extraction, and interpretation modules such as object detection that turn features into estimates the rest of the system can act on. The control pipeline, by contrast, consumes these perception outputs to generate movement commands and execute tasks.

Exploring the Control Pipeline: Structure and Purpose

The control pipeline in robotics translates perception outputs and task goals into motor commands, so the robot can execute tasks accurately and adapt as conditions change. It typically consists of modules for decision-making, motion planning, and actuator control, each of which must respond in real time to a dynamic environment. Feedback loops are central: the controller repeatedly compares the measured state against the desired state and adjusts its commands to maintain stability and reduce error.
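The feedback loop at the heart of the control pipeline can be sketched as a discrete PID velocity controller. This is a minimal sketch rather than a tuned implementation: the `PID` class, the gains, and the first-order plant inside `simulate` (time constant 0.5 s) are assumptions introduced purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Discrete PID controller (hypothetical gains, illustration only)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call: there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint: float = 1.0, steps: int = 2000, dt: float = 0.01) -> float:
    """Drive an assumed first-order plant toward the setpoint; return final velocity."""
    pid = PID(kp=2.0, ki=1.0, kd=0.0)
    velocity, tau = 0.0, 0.5
    for _ in range(steps):
        command = pid.step(setpoint, velocity, dt)
        # Forward-Euler update of the assumed plant: dv/dt = (command - v) / tau.
        velocity += (command - velocity) * dt / tau
    return velocity


if __name__ == "__main__":
    print(f"final velocity: {simulate():.3f}")
```

The integral term is what removes the steady-state error here; with `ki=0.0` this particular plant would settle below the setpoint.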

Data Flow: From Perception to Control in Robotic Systems

The perception pipeline in robotic systems processes raw sensor data, converting it into actionable information such as object recognition, localization, and environment mapping. This processed data is then transmitted to the control pipeline, which uses algorithms to generate motor commands and plan movements based on the perceived environment. Efficient data flow between perception and control ensures real-time responsiveness and accuracy in robotic navigation and task execution.
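The hand-off from perception to control can be illustrated with a toy end-to-end example. Everything named here is hypothetical: `PerceptionOutput`, `VelocityCommand`, the validity range for readings, and the gain and stop distance are assumptions chosen only to show the shape of the data flow.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional, Sequence


@dataclass
class PerceptionOutput:
    obstacle_distance_m: Optional[float]  # None when no valid reading exists


@dataclass
class VelocityCommand:
    linear_mps: float


def perceive(ranges_m: Sequence[float]) -> PerceptionOutput:
    """Perception stage: drop implausible readings, then take the median range."""
    valid = [r for r in ranges_m if 0.05 < r < 30.0]  # assumed sensor limits
    return PerceptionOutput(median(valid) if valid else None)


def control(
    p: PerceptionOutput,
    stop_distance_m: float = 0.5,
    gain: float = 0.8,
    max_speed_mps: float = 1.0,
) -> VelocityCommand:
    """Control stage: speed proportional to clearance, clamped to [0, max]."""
    if p.obstacle_distance_m is None:
        return VelocityCommand(0.0)  # fail safe: stop when perception has no estimate
    clearance = p.obstacle_distance_m - stop_distance_m
    return VelocityCommand(max(0.0, min(max_speed_mps, gain * clearance)))


if __name__ == "__main__":
    scan = [0.0, 2.0, 2.1, 1.9, 100.0]  # two outliers, three plausible readings
    print(control(perceive(scan)))
```

Keeping the interface between the stages to a small typed message is a design choice of this sketch; it makes the control stage testable without any sensor attached.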

Sensor Integration in the Perception Pipeline

The perception pipeline in robotics integrates diverse sensors such as LiDAR, cameras, and IMUs to construct an accurate environmental model. Sensor fusion algorithms process heterogeneous data streams to enhance object detection, localization, and mapping accuracy. Robust sensor integration enables real-time situational awareness, which is critical for effective decision-making in autonomous navigation systems.
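One simple form of sensor fusion is inverse-variance weighting of independent measurements of the same quantity. The sketch below assumes unbiased, independent sensors with known variances; the function name `fuse` and the example numbers are illustrative, not taken from any particular system.

```python
from typing import Sequence, Tuple


def fuse(measurements: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs; return the fused value and fused variance.

    Each measurement is weighted by 1 / variance, so more certain sensors
    contribute more. Assumes independent, unbiased measurements.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    lidar = (2.0, 0.04)   # hypothetical range in metres and its variance
    camera = (2.3, 0.25)  # hypothetical, noisier depth estimate
    print(fuse([lidar, camera]))
```

The fused variance is always smaller than the smallest input variance, which is the formal sense in which combining sensors improves accuracy under these assumptions.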

Algorithms and Models in Robotic Perception

The perception pipeline in robotics relies on learned models such as convolutional neural networks (CNNs) and on probabilistic estimators such as Kalman filters to interpret sensor data and build a representation of the environment. In contrast, the control pipeline uses control laws such as PID controllers, and in some systems policies trained with reinforcement learning, to act on that representation. How well these algorithms are integrated largely determines how reliably an autonomous robot navigates and makes decisions in dynamic environments.
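To make the Kalman filter concrete, here is a minimal one-dimensional version that tracks a slowly varying scalar from noisy measurements. It is a sketch under stated assumptions: a constant-state model, known noise variances, and a hypothetical function name `kalman_1d`. Real robotic estimators are usually multi-dimensional and include a motion model.

```python
from typing import List, Sequence


def kalman_1d(
    measurements: Sequence[float],
    meas_var: float,
    process_var: float,
    x0: float = 0.0,
    p0: float = 1.0,
) -> List[float]:
    """Return the filtered estimate after each measurement."""
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the state is assumed constant, so only uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    readings = [5.2, 4.9, 5.1, 5.0, 4.8, 5.3]  # hypothetical noisy readings
    print([round(e, 3) for e in kalman_1d(readings, meas_var=0.5, process_var=0.01)])
```

A larger `process_var` makes the filter trust new measurements more, while a larger `meas_var` makes it smooth more heavily.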

Decision-Making Processes in the Control Pipeline

The control pipeline in robotics is where decisions become motor commands: it combines the current state estimate with task goals and computes commands fast enough to respond to environmental changes in real time. Unlike the perception pipeline, which processes raw sensory inputs to create an environmental model, the control pipeline transforms this model into actionable commands through feedback loops and predictive control strategies. Advanced control systems use techniques such as model predictive control (MPC), which optimizes a short sequence of future commands and applies only the first, and reinforcement learning to improve accuracy and adaptability in dynamic environments.
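The receding-horizon idea behind MPC can be shown with a deliberately small example. This sketch replaces the numerical optimizer a real MPC would use with brute-force enumeration over a discrete set of candidate velocities, and it assumes a one-dimensional single-integrator model; the names `CANDIDATE_VELOCITIES`, `rollout_cost`, `mpc_step`, and `run` are hypothetical.

```python
from itertools import product
from typing import Sequence

CANDIDATE_VELOCITIES = (-1.0, -0.5, 0.0, 0.5, 1.0)  # assumed control set, m/s


def rollout_cost(x: float, controls: Sequence[float], goal: float, dt: float,
                 effort_weight: float = 0.01) -> float:
    """Simulate the model over the horizon and sum tracking and effort costs."""
    cost = 0.0
    for u in controls:
        x = x + u * dt  # single-integrator model: position changes by u * dt
        cost += (x - goal) ** 2 + effort_weight * u ** 2
    return cost


def mpc_step(x: float, goal: float, horizon: int = 3, dt: float = 0.1) -> float:
    """Pick the cheapest control sequence and return only its first command."""
    best = min(
        product(CANDIDATE_VELOCITIES, repeat=horizon),
        key=lambda seq: rollout_cost(x, seq, goal, dt),
    )
    return best[0]


def run(x0: float = 0.0, goal: float = 1.0, steps: int = 30, dt: float = 0.1) -> float:
    """Re-plan at every step (receding horizon) and return the final position."""
    x = x0
    for _ in range(steps):
        x += mpc_step(x, goal, dt=dt) * dt
    return x


if __name__ == "__main__":
    print(f"final position: {run():.3f}")
```

Enumeration scales exponentially with the horizon, so it is only practical for toy problems like this one; the point is the plan, apply first command, re-plan structure.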

Synchronization and Communication Between Pipelines

Synchronization between perception and control pipelines in robotics is crucial for real-time decision-making and accurate task execution. Transport mechanisms such as ROS messages or shared memory buffers carry data between the pipelines; they help keep latency low, but consistency still depends on how the data is stamped and consumed. Time-stamping each measurement, and fusing sensors against a common clock, lets the control side pair a perception result with the robot state at the moment of capture and reject data that is too old, which improves responsiveness and reliability.
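Timestamp-based alignment can be sketched as a nearest-neighbour lookup with a skew tolerance. The function name `match_by_timestamp` and the tolerance values are assumptions for illustration; this is plain Python and does not represent any ROS API.

```python
import bisect
from typing import Optional, Sequence, TypeVar

T = TypeVar("T")


def match_by_timestamp(
    timestamps: Sequence[float],
    samples: Sequence[T],
    query_t: float,
    max_skew_s: float,
) -> Optional[T]:
    """Return the sample closest in time to query_t, or None if none is close enough.

    `timestamps` must be sorted ascending and aligned index-for-index with `samples`.
    """
    i = bisect.bisect_left(timestamps, query_t)
    # Only the neighbours on either side of the insertion point can be nearest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(timestamps[j] - query_t))
    if abs(timestamps[best] - query_t) > max_skew_s:
        return None
    return samples[best]


if __name__ == "__main__":
    stamps = [0.00, 0.10, 0.20]            # hypothetical perception timestamps, seconds
    detections = ["det_a", "det_b", "det_c"]
    print(match_by_timestamp(stamps, detections, query_t=0.12, max_skew_s=0.05))
```

Returning `None` instead of the nearest sample forces the caller to handle the unsynchronized case explicitly, for example by holding the last command or slowing down.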

Challenges in Integrating Perception and Control Pipelines

Integrating perception and control pipelines in robotics presents challenges such as latency in processing sensory data, which impacts real-time decision-making and actuator responsiveness. Discrepancies in data formats and synchronization between perception modules and control algorithms complicate seamless communication and coordination. Ensuring robust performance under uncertain and dynamic environmental conditions further requires sophisticated sensor fusion and adaptive control strategies.
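One common way to soften the latency problem is to project a delayed estimate forward to the current time and to refuse data that is too old. The sketch below assumes constant velocity over the delay; `compensate_latency` and the 0.2 s age limit are hypothetical choices, not recommended values.

```python
from typing import Optional


def compensate_latency(
    position_m: float,
    velocity_mps: float,
    stamp_s: float,
    now_s: float,
    max_age_s: float = 0.2,
) -> Optional[float]:
    """Predict the current position from a delayed measurement.

    Returns None when the measurement is older than max_age_s or is stamped
    in the future, so the caller can fall back to a safe behaviour.
    """
    age = now_s - stamp_s
    if age < 0.0 or age > max_age_s:
        return None
    # Constant-velocity assumption over the (short) delay.
    return position_m + velocity_mps * age


if __name__ == "__main__":
    print(compensate_latency(1.0, 0.5, stamp_s=10.0, now_s=10.1))
```

The constant-velocity assumption only holds for short delays, which is why the age check matters as much as the prediction itself.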

Future Directions: Advancing Perception and Control in Robotics

Future advancements in robotics focus on integrating deep learning with sensor fusion to enhance the perception pipeline's accuracy and real-time environmental understanding. Simultaneously, the control pipeline will benefit from adaptive algorithms and reinforcement learning to improve decision-making and autonomous navigation in complex, dynamic environments. Emerging trends emphasize the synergy between perception and control, enabling robots to execute precise actions based on enriched sensory inputs and predictive modeling.

Perception pipeline vs Control pipeline Infographic

techiny.com
