Simultaneous Localization and Mapping (SLAM) integrates sensor data to build a map of an unknown environment while tracking the robot's position within it, which supports robust navigation in complex terrain. Visual odometry estimates the robot's movement by analyzing changes between successive camera images. It provides real-time pose information but accumulates drift, because it has no loop closure or other global correction. By adding mapping and global error correction, SLAM generally achieves better long-term accuracy and map consistency than visual odometry, at a higher computational cost. This makes SLAM central to autonomous robotic navigation.

Table of Comparison

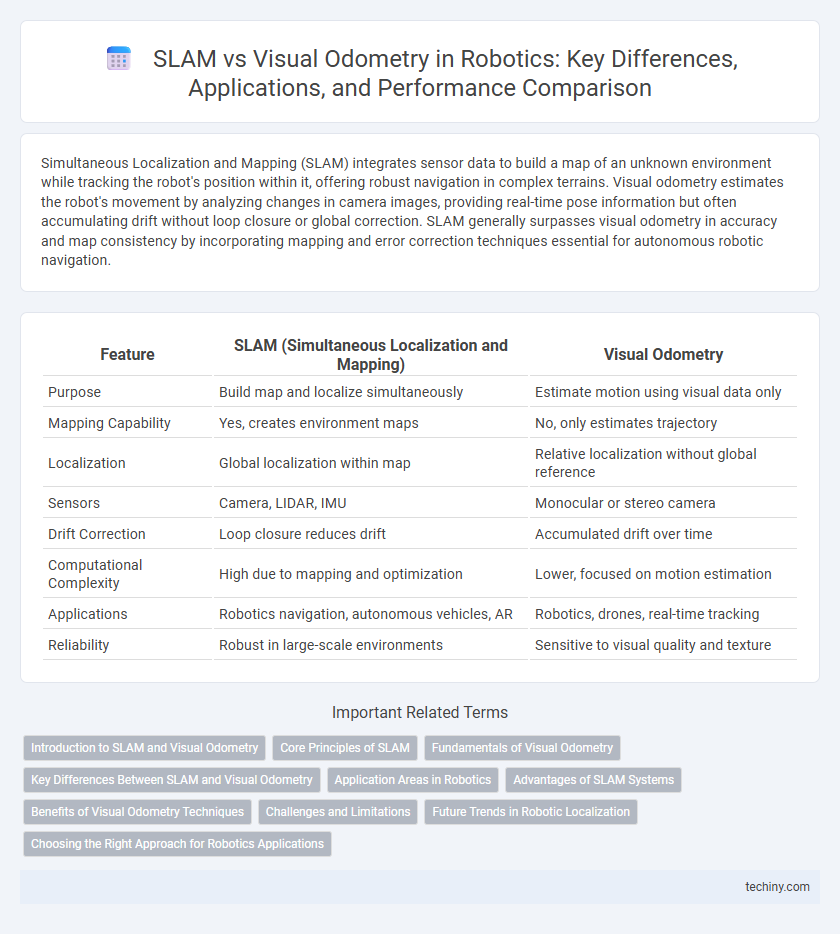

| Feature | SLAM (Simultaneous Localization and Mapping) | Visual Odometry |
|---|---|---|
| Purpose | Build a map and localize within it simultaneously | Estimate motion from visual data only |
| Mapping Capability | Yes, creates environment maps | No, only estimates the trajectory |
| Localization | Global localization within the map | Relative localization without a global reference |
| Sensors | Camera, LiDAR, IMU | Monocular or stereo camera |
| Drift Correction | Loop closure reduces drift | None; drift accumulates over time |
| Computational Complexity | High due to mapping and optimization | Lower, focused on motion estimation |
| Applications | Robot navigation, autonomous vehicles, AR | Robotics, drones, real-time tracking |
| Reliability | Robust in large-scale environments | Sensitive to lighting, image quality, and texture |

Introduction to SLAM and Visual Odometry

Simultaneous Localization and Mapping (SLAM) enables robots to build a map of an unknown environment while tracking their position within it, integrating sensor data for real-time navigation. Visual Odometry estimates a robot's motion by analyzing sequential camera images to determine displacement, providing essential pose information without a prior map. Both techniques are fundamental in autonomous robotics, with SLAM emphasizing environment reconstruction and Visual Odometry focusing on motion estimation.

Core Principles of SLAM

Simultaneous Localization and Mapping (SLAM) constructs a map of an unknown environment while simultaneously determining the robot's position within it. Visual odometry relies on sequential camera images alone. SLAM, by contrast, can run on cameras (visual SLAM) or fuse data from several sensors, such as LiDAR, IMUs, and sonar, to improve accuracy and robustness in dynamic or feature-sparse environments. Its core principles are probabilistic state estimation, loop closure detection to correct accumulated error, and incremental map optimization. Together, these enable reliable navigation in complex, unmapped spaces.
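Loop closure and map optimization can be made concrete with a one-dimensional pose graph. This is a minimal sketch and a deliberate simplification of the 2D/3D graphs that real SLAM back ends optimize. Each edge states that the difference between two poses should equal a measurement. The poses are then found by weighted linear least squares, with the first pose anchored at zero. The odometry bias (+0.1 m per step), the loop-closure weight of 100, and all function names are hypothetical choices for illustration.

```python
def optimize_pose_graph_1d(n_poses, edges):
    """Weighted least-squares solve of a 1D pose graph with pose 0 fixed at 0.

    Each edge (i, j, z, w) states "x_j - x_i should equal z" with weight w.
    """
    n = n_poses - 1  # unknowns are x_1 .. x_{n_poses-1}
    H = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for i, j, z, w in edges:
        # Residual r = x_j - x_i - z has derivative -1 w.r.t. x_i and +1 w.r.t. x_j.
        # Pose 0 is fixed, so it contributes no unknown.
        terms = [(k, sign) for k, sign in ((i, -1.0), (j, 1.0)) if k != 0]
        for a, sa in terms:
            b[a - 1] += w * sa * z
            for c, sc in terms:
                H[a - 1][c - 1] += w * sa * sc
    return [0.0] + solve_linear(H, b)


def solve_linear(H, b):
    """Gaussian elimination with partial pivoting (adequate for tiny systems)."""
    n = len(b)
    A = [row[:] + [b[r]] for r, row in enumerate(H)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= f * A[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = A[r][n] - sum(A[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / A[r][r]
    return x


# The robot drives +1, +1, -1, -1 m and returns to its start, but the
# (hypothetical) odometry adds a +0.1 m bias to every step.
odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -0.9, 1.0), (3, 4, -0.9, 1.0)]
loop_closure = (0, 4, 0.0, 100.0)  # hypothetical place recognition: pose 4 is back at pose 0

dead_reckoned = [0.0]
for _, _, z, _ in odometry:
    dead_reckoned.append(dead_reckoned[-1] + z)

optimized = optimize_pose_graph_1d(5, odometry + [loop_closure])
print(f"final pose, odometry only:      {dead_reckoned[-1]:.3f} m")
print(f"final pose, after loop closure: {optimized[-1]:.4f} m")
```

Odometry alone leaves the robot 0.4 m from where it actually stopped. The heavily weighted loop-closure edge pulls the final pose to about 0.001 m and spreads the correction evenly over the four odometry edges. Real systems use libraries built for sparse nonlinear optimization rather than a dense solver like this one.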

Fundamentals of Visual Odometry

Visual odometry estimates a robot's position and orientation incrementally. It tracks feature points across sequential camera images and computes the motion between frames. Unlike SLAM, which builds a map and localizes the robot within it, visual odometry focuses solely on continuous pose estimation; it does not maintain a global map or correct its accumulated error. The core algorithms are feature detection, feature matching, and motion estimation under geometric constraints, which together provide real-time, frame-to-frame localization.
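The motion-estimation step can be sketched in a simplified planar form. The sketch assumes the features have already been detected, matched, and cleaned of outliers, and that their positions in both frames are known in a common metric plane (for example, points triangulated by a stereo camera and projected onto the ground). It then recovers the rotation and translation between frames with the closed-form 2D least-squares (Kabsch-style) solution. Real monocular pipelines typically estimate an essential matrix from image correspondences and use RANSAC to reject bad matches; that is not shown here. The point coordinates and the name `estimate_rigid_2d` are hypothetical.

```python
import math


def estimate_rigid_2d(prev_pts, curr_pts):
    """Least-squares rotation theta and translation (tx, ty) with curr ≈ R(theta) @ prev + t."""
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n

    # Accumulate the dot and cross terms of the centered correspondences;
    # the optimal angle maximizes cos(theta) * s_cos + sin(theta) * s_sin.
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)

    # The translation maps the rotated previous centroid onto the current one.
    c, s = math.cos(theta), math.sin(theta)
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty


# Hypothetical matched features, moved by a known rigid motion.
prev = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
true_theta, true_t = 0.1, (0.5, -0.2)
c, s = math.cos(true_theta), math.sin(true_theta)
curr = [(c * x - s * y + true_t[0], s * x + c * y + true_t[1]) for x, y in prev]

theta, tx, ty = estimate_rigid_2d(prev, curr)
print(f"theta={theta:.4f} rad, t=({tx:.4f}, {ty:.4f})")
```

With exact correspondences, the estimate recovers the motion that generated the data. Visual odometry chains such frame-to-frame estimates to build the trajectory, which is why each small error carries forward into every later pose.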

Key Differences Between SLAM and Visual Odometry

SLAM (Simultaneous Localization and Mapping) combines mapping an unknown environment with localizing the robot simultaneously, while Visual Odometry focuses solely on estimating the robot's motion by analyzing visual input frames. SLAM integrates sensor data to build a consistent environmental map, correcting cumulative drift errors, whereas Visual Odometry relies on frame-to-frame feature tracking without providing a global map. The computational complexity is higher in SLAM due to loop closure detection and map optimization, making Visual Odometry more lightweight but less robust for long-term navigation.
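The cumulative drift of frame-to-frame tracking can be illustrated by chaining relative motions. This minimal sketch composes planar (x, y, heading) increments for a robot driving a 4 m × 4 m square, one with perfect increments and one with a hypothetical constant heading error of 0.01 rad per step. Without a global correction such as loop closure, the small per-step error compounds into a visible position error at the end of the loop.

```python
import math


def compose(pose, delta):
    """Apply a body-frame motion (dx, dy, dtheta) to a world pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + math.cos(th) * dx - math.sin(th) * dy,
            y + math.sin(th) * dx + math.cos(th) * dy,
            th + dth)


def integrate(deltas):
    """Dead-reckon a trajectory by chaining relative motions from the origin."""
    pose = (0.0, 0.0, 0.0)
    for d in deltas:
        pose = compose(pose, d)
    return pose


def square_path(heading_bias=0.0):
    """Four sides of four 1 m steps; the last step of each side turns 90 degrees.

    heading_bias is a hypothetical error added to every step's rotation.
    """
    deltas = []
    for _ in range(4):
        for step in range(4):
            turn = math.pi / 2 if step == 3 else 0.0
            deltas.append((1.0, 0.0, turn + heading_bias))
    return deltas


ideal = integrate(square_path())
biased = integrate(square_path(heading_bias=0.01))
print(f"end-point error, ideal odometry:  {math.hypot(ideal[0], ideal[1]):.2e} m")
print(f"end-point error, 0.01 rad/step:   {math.hypot(biased[0], biased[1]):.3f} m")
```

The ideal path closes on itself. The biased path ends several decimeters from its start, even though each individual step is only slightly wrong.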

Application Areas in Robotics

SLAM is used extensively in autonomous robotics for real-time mapping and navigation in unknown or changing settings, such as warehouse automation and planetary exploration. Visual odometry estimates motion from camera images. It suits resource-constrained platforms such as small drones, where low weight and computational efficiency are essential, and it also serves as a motion-estimation component in autonomous vehicles. Both techniques improve spatial awareness, and the choice between them depends on the platform's available sensors and operational requirements.

Advantages of SLAM Systems

SLAM systems provide robust localization and mapping simultaneously, enabling autonomous robots to navigate unknown environments with high accuracy. Unlike visual odometry, SLAM incorporates loop closure detection and map optimization, which significantly reduce cumulative drift over time. This capability enhances long-term navigation reliability and situational awareness in complex and dynamic settings.
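Before a loop closure can be optimized, it must be detected. A common approach in visual SLAM treats place recognition as a retrieval problem. Each keyframe is summarized as a histogram of "visual words," and a new frame is compared against older keyframes. The minimal sketch below scores histograms with cosine similarity and skips the most recent keyframes, since consecutive frames always look alike. The histograms, the 0.9 threshold, and the function names are hypothetical. Real systems also verify each candidate geometrically before accepting it.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two visual-word histograms (0 if either is empty)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def loop_closure_candidates(history, query, threshold=0.9, min_gap=2):
    """Indices of past keyframes whose histogram resembles the query.

    The most recent min_gap keyframes are excluded because they are
    neighbors in time, not revisits of an earlier place.
    """
    searchable = history[:max(len(history) - min_gap, 0)]
    return [idx for idx, hist in enumerate(searchable)
            if cosine_similarity(hist, query) >= threshold]


# Hypothetical 4-word histograms for five keyframes along a route.
history = [
    [5, 0, 1, 0],  # keyframe 0: starting area
    [0, 4, 0, 1],
    [1, 0, 5, 2],
    [0, 1, 1, 4],
    [0, 0, 1, 5],  # most recent keyframe
]
query = [5, 0, 1, 1]  # the current view strongly resembles the starting area

print(loop_closure_candidates(history, query))
```

Only keyframe 0 passes the threshold. It would then be checked geometrically and, if confirmed, added as a loop-closure constraint in the pose graph.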

Benefits of Visual Odometry Techniques

Visual odometry techniques provide accurate short-term, real-time motion estimation from image sequences, enabling navigation in GPS-denied environments. Cameras capture rich environmental detail and are cheaper and lighter than the LiDAR sensors used in many SLAM systems. This makes visual odometry suitable for lightweight, resource-constrained robotic platforms. Improved feature detectors and deep-learning-based methods can further increase robustness to changing lighting and texture-poor scenes.

Challenges and Limitations

SLAM (Simultaneous Localization and Mapping) faces challenges such as high computational cost and keeping the map consistent in dynamic environments, both of which can degrade real-time performance. Visual odometry is sensitive to lighting changes and feature-poor scenes. Monocular systems also suffer from scale ambiguity and scale drift, because a single camera cannot measure absolute distance. These issues limit accuracy over long trajectories. Both methods rely on robust sensor fusion and error correction to limit cumulative drift and improve localization reliability in robotics applications.
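The scale ambiguity of a single camera follows directly from pinhole projection: a pixel coordinate depends only on the ratio X/Z, so multiplying every scene point and the camera translation by the same factor leaves every image unchanged. This minimal sketch assumes an ideal pinhole camera with no rotation. The focal length, principal point, scene points, and scale factor are hypothetical values. It projects a scene from two camera positions, then repeats the projection with everything scaled by 2.5.

```python
def project(point, cam_pos, f=500.0, cx=320.0, cy=240.0):
    """Ideal pinhole projection for a camera at cam_pos looking along +Z (no rotation)."""
    X, Y, Z = (p - c for p, c in zip(point, cam_pos))
    return (f * X / Z + cx, f * Y / Z + cy)


def observe(points, cam_positions):
    """Pixel coordinates of every point, as seen from every camera position."""
    return [[project(p, c) for p in points] for c in cam_positions]


# Hypothetical scene and a camera that translates 0.2 units along X.
scene = [(1.0, 0.5, 4.0), (-1.0, 0.2, 6.0), (0.3, -0.4, 5.0)]
cams = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0)]

s = 2.5  # a global scale factor the camera cannot observe
scaled_scene = [tuple(s * v for v in p) for p in scene]
scaled_cams = [tuple(s * v for v in c) for c in cams]

original = observe(scene, cams)
scaled = observe(scaled_scene, scaled_cams)
max_diff = max(
    abs(a - b)
    for frame_a, frame_b in zip(original, scaled)
    for pa, pb in zip(frame_a, frame_b)
    for a, b in zip(pa, pb)
)
print(f"largest pixel difference: {max_diff:.2e}")
```

The difference is at floating-point rounding level. Images alone therefore cannot distinguish a 0.2-unit move in a small scene from a 0.5-unit move in a larger one. A stereo baseline, an IMU, or an object of known size is needed to fix the metric scale.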

Future Trends in Robotic Localization

Future trends in robotic localization emphasize the integration of Simultaneous Localization and Mapping (SLAM) with advanced Visual Odometry techniques to enhance real-time accuracy and environmental understanding. Innovations in machine learning and sensor fusion are driving improvements in robustness, enabling robots to operate reliably in dynamic, GPS-denied environments. The shift towards lightweight, low-power embedded systems and cloud-assisted processing is expected to accelerate the deployment of SLAM and Visual Odometry solutions in autonomous navigation and augmented reality applications.

Choosing the Right Approach for Robotics Applications

Selecting between SLAM and visual odometry for robotics depends on the application's mapping and localization demands. SLAM excels in complex, unknown environments by simultaneously building maps and localizing the robot, making it ideal for autonomous navigation and exploration. Visual odometry offers efficient, real-time motion estimation suitable for scenarios requiring rapid position tracking with limited computational resources.

SLAM vs Visual Odometry Infographic

techiny.com
