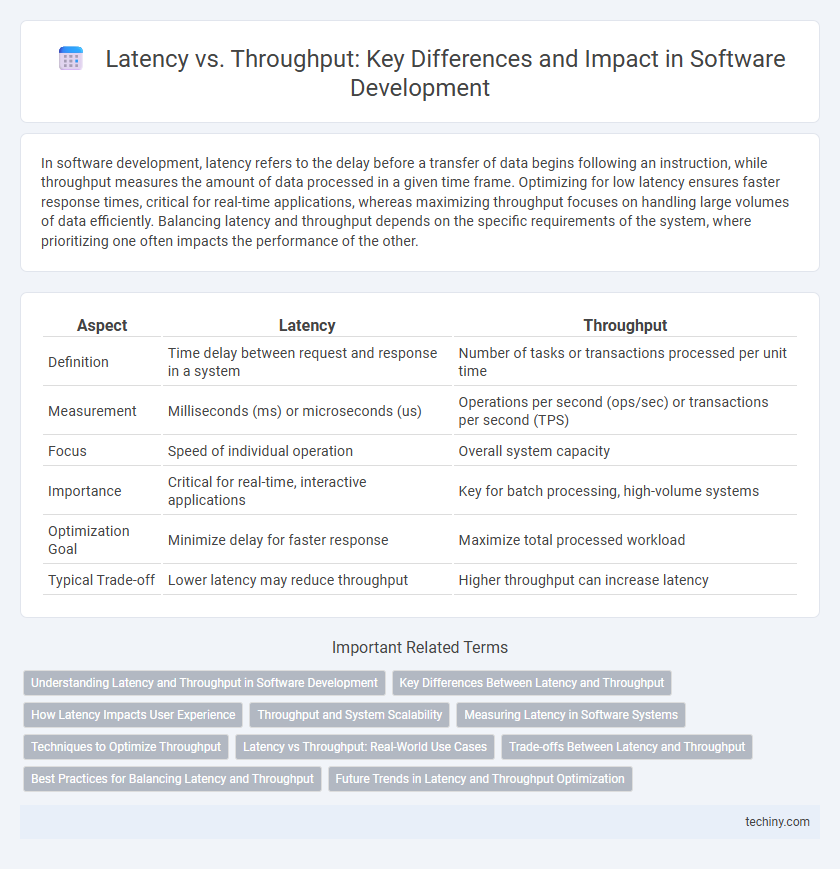

In software development, latency is the delay between issuing a request and receiving its response, while throughput is the amount of work or data a system processes in a given time frame. Optimizing for low latency yields faster response times, which is critical for real-time applications, whereas maximizing throughput focuses on handling large volumes of data efficiently. The right balance depends on the system's requirements, because prioritizing one often comes at the expense of the other.

Table of Comparison

| Aspect | Latency | Throughput |
|---|---|---|
| Definition | Time delay between request and response in a system | Number of tasks or transactions processed per unit time |
| Measurement | Milliseconds (ms) or microseconds (µs) | Operations per second (ops/sec) or transactions per second (TPS) |
| Focus | Speed of individual operation | Overall system capacity |
| Importance | Critical for real-time, interactive applications | Key for batch processing, high-volume systems |
| Optimization Goal | Minimize delay for faster response | Maximize total processed workload |
| Typical Trade-off | Lower latency may reduce throughput | Higher throughput can increase latency |

Understanding Latency and Throughput in Software Development

Latency in software development refers to the time delay between a request and the corresponding response, critically impacting user experience and system responsiveness. Throughput measures the number of transactions or processes completed within a specific time frame, reflecting the system's capacity and efficiency under workload. Optimizing both latency and throughput is essential for achieving balanced performance, where low latency ensures quick interactions and high throughput supports robust processing capabilities.

Key Differences Between Latency and Throughput

Latency measures the time delay between a request and its corresponding response in software systems, while throughput quantifies the number of transactions or data units processed per second. Low latency is critical for real-time applications requiring immediate responsiveness, whereas high throughput is essential for batch processing and data-heavy workloads. Understanding the trade-off between latency and throughput helps optimize system performance based on specific application demands.
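
The two metrics are linked by Little's Law, which states that in a stable system the average number of requests in flight equals throughput multiplied by average latency. The sketch below applies that relationship. The function names and the numbers in the usage note are illustrative assumptions, not taken from any particular system.

```python
def required_concurrency(throughput_per_sec: float, avg_latency_sec: float) -> float:
    """Little's Law: average requests in flight = throughput * average latency."""
    return throughput_per_sec * avg_latency_sec


def max_throughput(concurrency: int, avg_latency_sec: float) -> float:
    """Upper bound on throughput when only `concurrency` requests can be in flight."""
    return concurrency / avg_latency_sec


if __name__ == "__main__":
    # Illustrative numbers only: 200 requests/sec at 50 ms average latency.
    print(required_concurrency(200, 0.05))
    # Illustrative numbers only: 10 workers, each request taking 50 ms.
    print(max_throughput(10, 0.05))
```

For example, sustaining 200 requests per second at 50 ms average latency implies roughly 10 requests in flight at any moment. With concurrency fixed, cutting latency is the only way to raise the throughput ceiling.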

How Latency Impacts User Experience

Latency, the delay between a user's action and the system's response, directly affects user experience by causing noticeable lag in application responsiveness and interaction. High latency results in slower load times and delayed feedback, leading to user frustration and decreased engagement. Reducing latency through efficient code, network improvements, and server placement closer to users improves real-time responsiveness and overall satisfaction.

Throughput and System Scalability

Throughput measures the number of tasks a system can process within a given time, directly impacting system scalability by determining how effectively resources handle increased workloads. High throughput indicates efficient resource utilization and supports horizontal scaling by enabling systems to manage more concurrent requests without degradation. Optimizing throughput involves balancing system components such as CPU, memory, and I/O to maintain steady performance as demand grows.

Measuring Latency in Software Systems

Measuring latency in software systems involves recording the time taken for a request to traverse from initiation to completion, often using high-resolution timers or specialized profiling tools. Key metrics include average latency, percentiles (such as p95 or p99 latency), and maximum latency to identify performance bottlenecks and ensure responsiveness. Accurate latency measurement requires consistent monitoring under realistic workloads to capture temporal variations and system behaviors impacting user experience.
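
As a rough illustration of the approach described above, the following sketch times repeated calls with a high-resolution timer and reports mean, p95, p99, and maximum latency. `sample_operation` is a hypothetical stand-in for a real request handler, and the run count is an arbitrary assumption. A real measurement would run against realistic workloads.

```python
import statistics
import time


def measure_latencies(operation, runs=200):
    """Return per-call latencies in milliseconds for `operation`."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()  # high-resolution monotonic timer
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples):
    """Compute mean, p95, p99, and max from latency samples (ms)."""
    # quantiles(n=100) returns the 99 cut points between percentiles 1..99.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(samples),
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples),
    }


def sample_operation():
    # Hypothetical workload standing in for a real request.
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    stats = summarize(measure_latencies(sample_operation))
    for name, value in stats.items():
        print(f"{name}: {value:.3f} ms")
```

Reporting percentiles alongside the mean matters because a small fraction of slow requests can dominate perceived responsiveness while barely moving the average.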

Techniques to Optimize Throughput

Optimizing throughput in software development involves techniques such as parallel processing, efficient resource allocation, and load balancing across multiple servers. Implementing asynchronous programming and non-blocking I/O operations can significantly increase the number of tasks processed per unit time. Leveraging caching strategies and minimizing context switching also contribute to maximizing system throughput for high-performance applications.
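
To make the effect of concurrency on throughput concrete, here is a minimal sketch that runs the same I/O-bound tasks sequentially and through a thread pool. `fake_io_task` is a hypothetical placeholder in which `time.sleep` stands in for a network or disk wait. The delay and worker count are assumptions chosen for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io_task(task_id, delay=0.02):
    """Hypothetical I/O-bound task; sleep stands in for a network or disk wait."""
    time.sleep(delay)
    return task_id


def run_sequential(n_tasks):
    """Run tasks one after another; return (results, tasks per second)."""
    start = time.perf_counter()
    results = [fake_io_task(i) for i in range(n_tasks)]
    elapsed = time.perf_counter() - start
    return results, n_tasks / elapsed


def run_concurrent(n_tasks, workers=8):
    """Overlap the waits using a thread pool; return (results, tasks per second)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fake_io_task, range(n_tasks)))
    elapsed = time.perf_counter() - start
    return results, n_tasks / elapsed


if __name__ == "__main__":
    _, seq_tps = run_sequential(16)
    _, conc_tps = run_concurrent(16)
    print(f"sequential: {seq_tps:.1f} tasks/sec")
    print(f"concurrent: {conc_tps:.1f} tasks/sec")
```

Each task still takes about the same time to finish, so per-task latency is roughly unchanged. The throughput gain comes from overlapping the waits. For CPU-bound work in Python, a process pool would be the more likely fit than threads.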

Latency vs Throughput: Real-World Use Cases

Latency measures the time taken to process a single request, crucial in real-time applications like gaming and video conferencing where immediate response is essential. Throughput represents the number of processes handled per unit time, vital for batch processing systems such as data analytics and large-scale transactions that prioritize volume over speed. Balancing latency and throughput depends on specific use cases, with low latency preferred in interactive environments and high throughput optimized for bulk data handling.

Trade-offs Between Latency and Throughput

Latency in software development refers to the time delay between a request and its response, while throughput measures the number of tasks processed within a given timeframe. Optimizing for low latency often reduces throughput, because handling each request immediately means smaller, more frequent operations that repeat fixed per-operation overhead. Maximizing throughput may increase latency, because batching spreads that overhead across many tasks but makes each task wait for its batch to fill and complete. Balancing these trade-offs depends on application requirements, such as real-time responsiveness versus bulk data processing efficiency, and requires strategic resource allocation and architectural adjustments.
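
The batching trade-off can be illustrated with a toy model. It assumes items arrive at a fixed interval and that each batch pays a fixed overhead plus a per-item cost. All parameter values are invented for illustration and do not describe any real system.

```python
def batch_metrics(batch_size, arrival_interval_ms=1.0, overhead_ms=5.0, per_item_ms=0.5):
    """Toy model of batching.

    Returns (worst_case_latency_ms, processing_capacity_per_sec).
    All default parameter values are illustrative assumptions.
    """
    # The first item in a batch waits for the remaining items to arrive.
    fill_wait_ms = (batch_size - 1) * arrival_interval_ms
    # One fixed overhead per batch, plus a cost for every item in it.
    processing_ms = overhead_ms + batch_size * per_item_ms
    worst_case_latency_ms = fill_wait_ms + processing_ms
    # Items the processor can complete per second at this batch size.
    capacity_per_sec = batch_size / processing_ms * 1000.0
    return worst_case_latency_ms, capacity_per_sec


if __name__ == "__main__":
    for size in (1, 10, 100):
        latency, capacity = batch_metrics(size)
        print(f"batch={size:>3}  latency={latency:.1f} ms  capacity={capacity:.0f} items/sec")
```

Under these assumptions, larger batches amortize the fixed overhead and raise processing capacity, while the first item in each batch waits longer before its result is available.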

Best Practices for Balancing Latency and Throughput

Optimizing software systems requires balancing latency and throughput to ensure responsive and efficient performance. Techniques such as asynchronous processing, load balancing, and caching can often reduce latency without sacrificing throughput, while parallelism and modest batching can improve throughput with limited latency impact. Monitoring real-time metrics and tuning resource allocation dynamically helps maintain the desired trade-off between fast response times and high data processing rates.
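
Caching is one of the simpler techniques to demonstrate. The sketch below uses Python's standard `functools.lru_cache` to memoize a slow lookup. `load_display_name` is a hypothetical function in which the sleep stands in for a database or network call, and the cache size is an arbitrary assumption.

```python
import time
from functools import lru_cache


@lru_cache(maxsize=1024)
def load_display_name(user_id: int) -> str:
    """Hypothetical slow lookup; sleep stands in for a database or network call."""
    time.sleep(0.05)
    return f"user-{user_id}"


def timed_call(user_id: int) -> float:
    """Return the latency of one lookup in milliseconds."""
    start = time.perf_counter()
    load_display_name(user_id)
    return (time.perf_counter() - start) * 1000.0


if __name__ == "__main__":
    print(f"cold: {timed_call(42):.2f} ms")  # cache miss, pays the full cost
    print(f"warm: {timed_call(42):.2f} ms")  # cache hit, served from memory
    print(load_display_name.cache_info())
```

Repeated lookups return faster and also free the backend to serve other requests, which is why caching can help both metrics at once. The cost is potentially stale data, so a real system would also need an invalidation or expiry policy.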

Future Trends in Latency and Throughput Optimization

Emerging technologies like edge computing and 5G networks are expected to reduce latency: edge computing processes data closer to its source, and 5G shortens network round trips, enabling near real-time responsiveness in software applications. Concurrently, advances in parallel processing and cloud-native architectures should improve throughput through better resource utilization and more efficient scaling. AI-driven performance tuning and adaptive algorithms may further optimize both latency and throughput, helping software systems meet growing demands for speed and capacity.

Latency vs Throughput Infographic

techiny.com