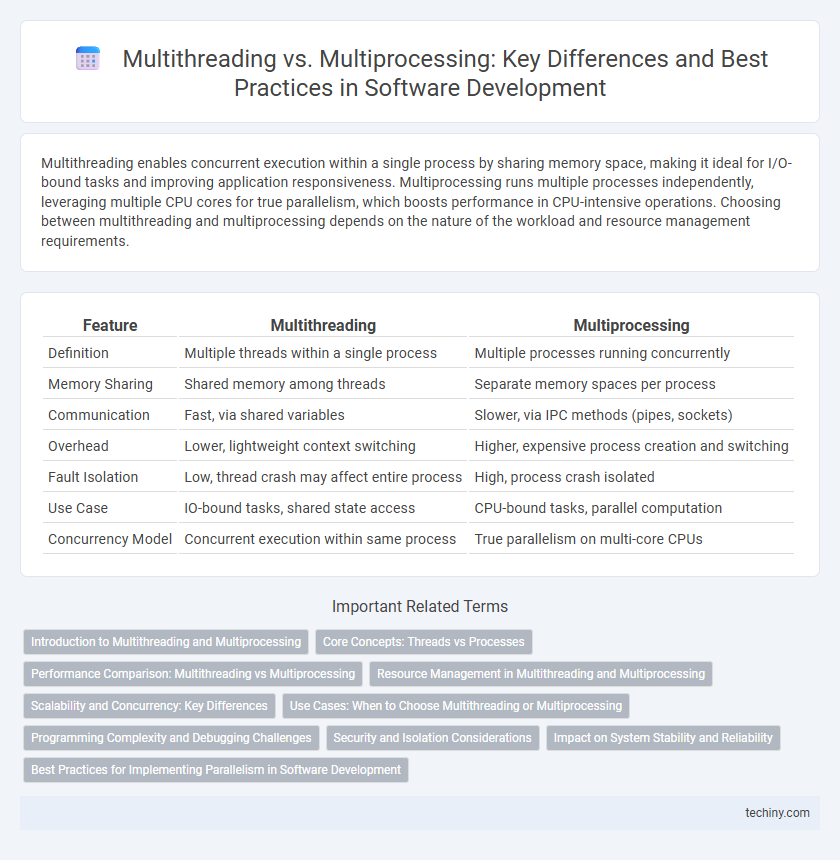

Multithreading enables concurrent execution within a single process by sharing memory space, making it ideal for I/O-bound tasks and improving application responsiveness. Multiprocessing runs multiple processes independently, leveraging multiple CPU cores for true parallelism, which boosts performance in CPU-intensive operations. Choosing between multithreading and multiprocessing depends on the nature of the workload and resource management requirements.

Table of Comparison

| Feature | Multithreading | Multiprocessing |
|---|---|---|
| Definition | Multiple threads within a single process | Multiple processes running concurrently |
| Memory Sharing | Shared memory among threads | Separate memory spaces per process |
| Communication | Fast, via shared variables | Slower, via IPC methods (pipes, sockets) |
| Overhead | Lower, lightweight context switching | Higher, expensive process creation and switching |
| Fault Isolation | Low, thread crash may affect entire process | High, process crash isolated |
| Use Case | I/O-bound tasks, shared state access | CPU-bound tasks, parallel computation |
| Concurrency Model | Concurrent execution within same process | True parallelism on multi-core CPUs |

Introduction to Multithreading and Multiprocessing

Multithreading enables a single process to run multiple threads concurrently, sharing the same memory space for efficient data exchange and reduced overhead. Multiprocessing involves running multiple processes simultaneously, each with its own memory space, improving performance on multi-core CPUs by parallelizing tasks. Both techniques optimize resource utilization but differ in memory management, communication complexity, and fault isolation.

Core Concepts: Threads vs Processes

Threads are the smallest units of execution within a process, sharing the same memory space and enabling efficient communication and resource sharing. Processes operate independently with separate memory spaces, providing isolation and stability but requiring more overhead for communication. Multithreading maximizes CPU utilization by running multiple threads concurrently within a single process, while multiprocessing leverages multiple CPU cores by running separate processes in parallel.
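The memory-sharing difference described above can be seen in a few lines of Python. This is a minimal sketch, not a definitive demonstration: the helper names (`append_marker`, `run_in_thread`, `run_in_process`) are made up for illustration, and it relies only on the standard `threading` and `multiprocessing` modules.

```python
import multiprocessing
import threading


def append_marker(target):
    """Append one item to whatever list this worker was handed."""
    target.append("written by worker")


def run_in_thread():
    data = []
    worker = threading.Thread(target=append_marker, args=(data,))
    worker.start()
    worker.join()
    # The thread mutated the very same list object, so the change is visible.
    return data


def run_in_process():
    data = []
    worker = multiprocessing.Process(target=append_marker, args=(data,))
    worker.start()
    worker.join()
    # The child process changed only its own copy; this list stays empty.
    return data


if __name__ == "__main__":
    print("after thread: ", run_in_thread())
    print("after process:", run_in_process())
```

The thread's write shows up in the caller's list, while the process's write does not, which is why processes need an explicit channel to hand results back.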

Performance Comparison: Multithreading vs Multiprocessing

Multithreading enhances performance by allowing multiple threads to run concurrently within a single process, sharing memory space and reducing overhead. Multiprocessing achieves higher performance gains on multi-core systems by running separate processes simultaneously, each with its own memory, minimizing contention but increasing resource usage. In CPU-bound tasks, multiprocessing typically outperforms multithreading due to true parallelism, whereas multithreading excels in I/O-bound scenarios by efficiently managing waiting times.
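To make the I/O-bound case concrete, the sketch below overlaps several simulated waits with a thread pool. It assumes `time.sleep` is a fair stand-in for a network or disk call; `fake_request`, `fetch_sequential`, and `fetch_threaded` are hypothetical names chosen for this example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(request_id, delay=0.05):
    """Stand-in for an I/O call: sleeps, then returns its id."""
    time.sleep(delay)
    return request_id


def fetch_sequential(count):
    """Issue the simulated requests one after another."""
    return [fake_request(i) for i in range(count)]


def fetch_threaded(count, workers=8):
    """Issue the simulated requests from a pool of threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, even if they finish out of order.
        return list(pool.map(fake_request, range(count)))


if __name__ == "__main__":
    for label, fn in (("sequential", fetch_sequential), ("threaded", fetch_threaded)):
        start = time.perf_counter()
        fn(8)
        print(f"{label}: {time.perf_counter() - start:.2f}s")
```

While one thread sleeps, the others proceed, so the threaded run should take roughly one delay rather than eight. Actual timings depend on the machine and are not shown here.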

Resource Management in Multithreading and Multiprocessing

Multithreading efficiently shares the same memory space, allowing threads to communicate and access resources directly without the overhead of inter-process communication, which results in faster context switching and lower resource consumption. Multiprocessing runs independent processes with separate memory spaces, providing better isolation and fault tolerance but requiring more resources and complex inter-process communication for sharing data. Effective resource management in multithreading involves synchronization primitives like mutexes and semaphores to avoid race conditions, while multiprocessing relies on mechanisms such as pipes, message queues, or shared memory to coordinate processes.
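As an illustration of the synchronization primitives mentioned above, here is a minimal sketch of a counter protected by a mutex (`threading.Lock`). The `Counter` class and `count_with_threads` function are assumptions made for this example, not part of any library.

```python
import threading


class Counter:
    """A counter whose increments are serialized by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Read-modify-write is not atomic; the lock makes it safe.
        with self._lock:
            self.value += 1


def count_with_threads(num_threads=4, increments=10_000):
    """Have several threads increment one shared counter."""
    counter = Counter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(count_with_threads())
```

With the lock in place the final value always equals `num_threads * increments`. Without it, concurrent increments can interleave and lose updates.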

Scalability and Concurrency: Key Differences

Multithreading allows multiple threads within a single process to run concurrently while sharing the same memory space, which scales well for I/O-bound tasks. For CPU-bound work it is limited in standard CPython builds by the Global Interpreter Lock (GIL), which lets only one thread execute Python bytecode at a time. Multiprocessing runs multiple processes independently with separate memory, providing true parallelism and better scalability for CPU-bound tasks by spreading work across cores. Concurrency in multithreading is lightweight and suited to tasks with high I/O wait times, whereas multiprocessing excels in computation-heavy workloads requiring isolated execution and fault tolerance.
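For the CPU-bound case, one possible approach is to split the work into chunks and hand them to a process pool. The sketch below counts primes by trial division purely because it is CPU-heavy; `count_primes`, `split_range`, and `parallel_count_primes` are hypothetical helpers, and the `executor_cls` parameter is an assumption added so the same code can be run on threads for comparison.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(start, stop):
    """Count primes in [start, stop) by trial division (deliberately CPU-heavy)."""
    total = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            total += 1
    return total


def split_range(limit, chunks):
    """Split [0, limit) into at most `chunks` contiguous ranges (limit >= 1)."""
    step = -(-limit // chunks)  # ceiling division
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]


def parallel_count_primes(limit, chunks=4, executor_cls=ProcessPoolExecutor):
    """Count primes below `limit`, one chunk per worker."""
    bounds = split_range(limit, chunks)
    starts = [lo for lo, _ in bounds]
    stops = [hi for _, hi in bounds]
    with executor_cls(max_workers=chunks) as pool:
        return sum(pool.map(count_primes, starts, stops))


if __name__ == "__main__":
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        start = time.perf_counter()
        total = parallel_count_primes(50_000, executor_cls=cls)
        print(f"{cls.__name__}: {total} primes in {time.perf_counter() - start:.2f}s")
```

On a standard CPython build the process pool would be expected to finish faster on a multi-core machine, since the threads contend for the GIL. The gap depends on core count and process start-up cost, so it is worth measuring rather than assuming.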

Use Cases: When to Choose Multithreading or Multiprocessing

Multithreading excels in I/O-bound applications such as web servers and real-time user interfaces, where lightweight task management and shared memory benefit responsiveness. Multiprocessing is optimal for CPU-bound tasks like data analysis, scientific computing, or complex algorithm execution, because it spreads work across multiple cores; in CPython it also sidesteps the Global Interpreter Lock, since each process runs its own interpreter. Choosing between them depends on whether the workload is I/O-intensive or requires parallel computation to scale.

Programming Complexity and Debugging Challenges

Multithreading in software development often results in higher programming complexity due to the need for careful synchronization and shared memory management to avoid race conditions and deadlocks. Multiprocessing, while isolating processes and reducing shared state issues, introduces challenges in managing inter-process communication and higher overhead for context switching. Debugging multithreaded applications is typically more difficult because of nondeterministic thread interactions, whereas multiprocessing debugging is more straightforward but can be complicated by message passing and process coordination.
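The message-passing style that multiprocessing requires might look like the following sketch. It assumes a single worker and a `None` sentinel to signal shutdown; `square_worker` and `square_in_child` are illustrative names, and the queues come from the standard `multiprocessing` module.

```python
import multiprocessing

STOP = None  # sentinel telling the worker to exit


def square_worker(inbox, outbox):
    """Read numbers from inbox until STOP arrives; put each square on outbox."""
    while True:
        item = inbox.get()
        if item is STOP:
            break
        outbox.put(item * item)


def square_in_child(numbers):
    """Send a list of numbers to a child process and collect the squares."""
    inbox = multiprocessing.Queue()
    outbox = multiprocessing.Queue()
    child = multiprocessing.Process(target=square_worker, args=(inbox, outbox))
    child.start()
    for n in numbers:
        inbox.put(n)
    inbox.put(STOP)
    # Drain the results before join() so the child is never blocked on a full pipe.
    results = [outbox.get() for _ in numbers]
    child.join()
    return results


if __name__ == "__main__":
    print(square_in_child([1, 2, 3]))
```

Nothing is shared here, so no locks are needed, but the protocol (what is sent, in which order, and how the worker learns to stop) becomes part of the design that has to be debugged.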

Security and Isolation Considerations

Multithreading allows threads to share the same memory space, so any thread can read or modify any data in the process. A buggy or compromised thread can therefore corrupt or expose data used by the others, and poorly synchronized access leads to data races. Multiprocessing, by running separate processes with independent memory, offers stronger isolation, reducing the risk of data corruption and enforcing clearer security boundaries between concurrent tasks. This isolation makes multiprocessing more suitable for applications requiring robust security and fault tolerance.

Impact on System Stability and Reliability

Multithreading enhances system responsiveness by allowing concurrent execution within a single process but can introduce risks such as race conditions and deadlocks that compromise stability, and an unhandled fault in one thread can bring down the whole process. Multiprocessing isolates processes with separate memory spaces, improving reliability by preventing one process failure from directly affecting others. In systems demanding high fault tolerance and stability, multiprocessing is usually the safer choice, as it reduces the likelihood of an application-wide crash.
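Process-level fault isolation can be sketched with the standard `subprocess` module, used here instead of `multiprocessing` only to keep the example short. The `run_isolated` helper is hypothetical; it launches a fresh interpreter, lets it fail, and reports the exit code while the parent carries on.

```python
import subprocess
import sys


def run_isolated(source):
    """Run a Python snippet in a separate interpreter and return its exit code."""
    completed = subprocess.run(
        [sys.executable, "-c", source],
        stderr=subprocess.DEVNULL,  # hide the child's traceback
    )
    return completed.returncode


if __name__ == "__main__":
    code = run_isolated("raise RuntimeError('worker failed')")
    # The child died with a non-zero exit code, but this process is unaffected.
    print("child exit code:", code)
    print("parent still running")
```

The parent only sees a non-zero exit code and can decide whether to retry, log, or give up. An equivalent unhandled error in a thread would run inside the parent's own address space.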

Best Practices for Implementing Parallelism in Software Development

Effective implementation of parallelism in software development requires choosing between multithreading and multiprocessing based on the application's concurrency needs and resource constraints. Best practices include minimizing thread contention and synchronization overhead in multithreading, while leveraging isolated memory spaces in multiprocessing to avoid shared state issues and enhance fault tolerance. Profiling and benchmarking help identify the optimal parallelism strategy, ensuring efficient CPU utilization and scalable performance in both approaches.
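As a starting point for the benchmarking step, a small harness can time the same task under different strategies. This is only a sketch under stated assumptions: `timed` and `compare_strategies` are made-up helpers, it covers a sequential baseline and a thread pool, and a process pool could be added in the same way provided the task is picklable.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def compare_strategies(task, inputs, workers=4):
    """Run `task` over `inputs` sequentially and on threads; report both."""

    def sequential():
        return [task(x) for x in inputs]

    def threaded():
        with ThreadPoolExecutor(max_workers=workers) as pool:
            return list(pool.map(task, inputs))

    report = {}
    for name, strategy in (("sequential", sequential), ("threads", threaded)):
        result, seconds = timed(strategy)
        report[name] = {"result": result, "seconds": seconds}
    return report


if __name__ == "__main__":
    def slow_double(x):
        time.sleep(0.02)  # pretend to wait on I/O
        return 2 * x

    for name, entry in compare_strategies(slow_double, list(range(8))).items():
        print(f"{name}: {entry['seconds']:.3f}s")
```

Checking that every strategy returns the same result before comparing timings helps catch synchronization bugs that would otherwise make a faster run look better than it is.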

Multithreading vs Multiprocessing Infographic

techiny.com
