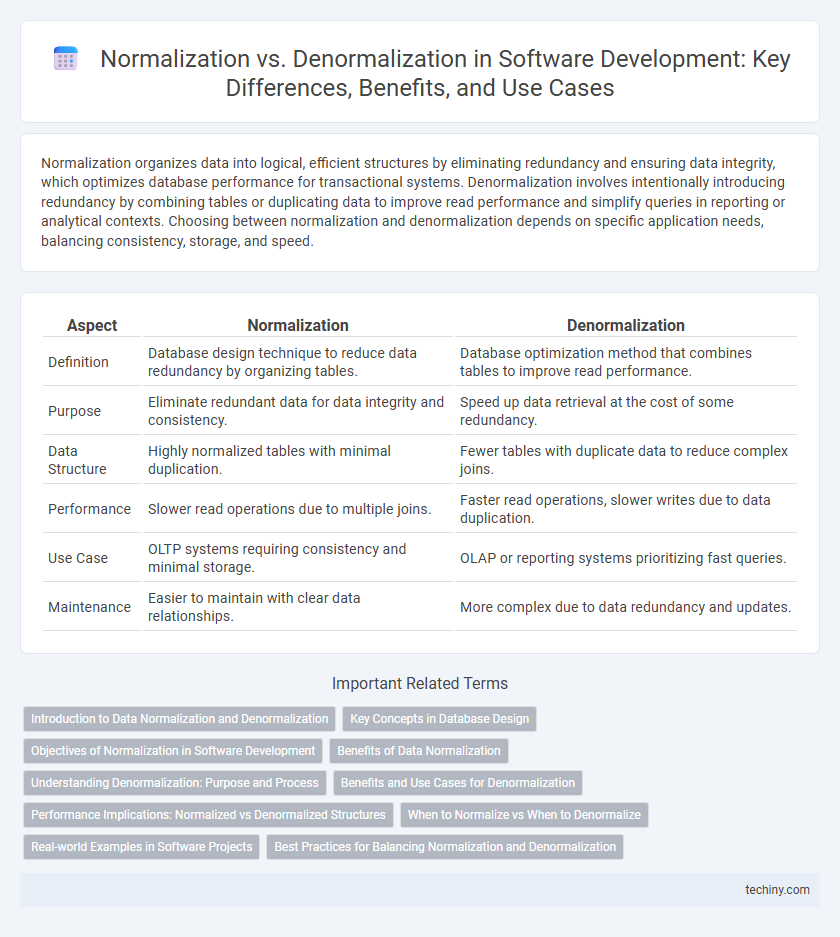

Normalization organizes data into logical, efficient structures by eliminating redundancy and ensuring data integrity, which optimizes database performance for transactional systems. Denormalization involves intentionally introducing redundancy by combining tables or duplicating data to improve read performance and simplify queries in reporting or analytical contexts. Choosing between normalization and denormalization depends on specific application needs, balancing consistency, storage, and speed.

Table of Comparison

| Aspect | Normalization | Denormalization |

|---|---|---|

| Definition | Database design technique to reduce data redundancy by organizing tables. | Database optimization method that combines tables to improve read performance. |

| Purpose | Eliminate redundant data for data integrity and consistency. | Speed up data retrieval at the cost of some redundancy. |

| Data Structure | Highly normalized tables with minimal duplication. | Fewer tables with duplicate data to reduce complex joins. |

| Performance | Slower read operations due to multiple joins. | Faster read operations, slower writes due to data duplication. |

| Use Case | OLTP systems requiring consistency and minimal storage. | OLAP or reporting systems prioritizing fast queries. |

| Maintenance | Easier to maintain with clear data relationships. | More complex due to data redundancy and updates. |

Introduction to Data Normalization and Denormalization

Data normalization organizes database tables to reduce redundancy and improve data integrity by dividing large tables into smaller, related ones using rules like 1NF, 2NF, and 3NF. Denormalization, in contrast, intentionally introduces redundancy by merging tables to optimize read performance and simplify complex queries in high-demand applications. Understanding the balance between normalization and denormalization is crucial for designing efficient database schemas tailored to specific workloads and performance requirements.

Key Concepts in Database Design

Normalization organizes database tables to reduce redundancy and ensure data integrity by dividing data into related tables using primary and foreign keys. Denormalization intentionally introduces redundancy by merging tables to improve query performance and simplify data retrieval in complex applications. Effective database design balances normalization for consistency and denormalization for speed based on specific application requirements and workload patterns.

Objectives of Normalization in Software Development

Normalization in software development aims to eliminate data redundancy and ensure data integrity by organizing database structures into well-defined tables and relationships. This process promotes efficient data management, reduces update anomalies, and enhances consistency across transactional operations. Proper normalization supports scalability and maintainability in complex software systems by enforcing clear data dependencies.

Benefits of Data Normalization

Data normalization in software development enhances database efficiency by minimizing data redundancy, which reduces storage costs and prevents data anomalies. It improves data integrity, enabling consistent, accurate retrieval and updates across relational database systems. Structured normalization fosters scalability and easier maintenance, streamlining query performance and supporting complex data relationships effectively.

Understanding Denormalization: Purpose and Process

Denormalization enhances database performance by intentionally introducing redundancy through the merging of normalized tables to reduce complex joins and speed up data retrieval. This process involves adding duplicate data or aggregating related information into a single table, trading off some data integrity to optimize read-heavy workloads. Understanding the purpose of denormalization is crucial for balancing efficient data access and maintaining acceptable levels of consistency in software development projects.

Benefits and Use Cases for Denormalization

Denormalization improves query performance by reducing the need for complex joins in large-scale databases. It is beneficial in read-heavy applications such as data warehousing, real-time analytics, and reporting systems where fast data retrieval is critical. Use cases for denormalization include optimizing performance for dashboards, aggregating data for business intelligence, and enhancing user experience in high-traffic web applications.

Performance Implications: Normalized vs Denormalized Structures

Normalized database structures enhance data integrity and reduce redundancy, resulting in efficient storage but may cause slower query performance due to multiple table joins. Denormalized structures improve read performance by consolidating data into fewer tables, reducing join operations and accelerating retrieval times at the expense of increased storage and potential data anomalies. Choosing between normalization and denormalization depends on specific application performance requirements, data consistency priorities, and workload characteristics.

When to Normalize vs When to Denormalize

Normalize databases during software development to reduce data redundancy, improve data integrity, and simplify updates in transactional systems. Denormalize when optimizing for read performance in analytical queries or reporting systems, where faster data retrieval outweighs storage efficiency. Choose normalization for consistent, dynamic data environments and denormalization for static or heavily read-focused workloads to balance performance and maintainability.

Real-world Examples in Software Projects

Normalization in software development ensures data integrity and reduces redundancy by structuring databases into related tables, exemplified by an e-commerce platform where customer information, orders, and products are stored separately. Denormalization improves performance by combining tables to minimize complex joins, often used in real-time analytics dashboards where aggregated sales data is accessed rapidly. Selecting between normalization and denormalization depends on the application's need for data consistency versus speed, as seen in financial systems prioritizing normalization and social media feeds favoring denormalization.

Best Practices for Balancing Normalization and Denormalization

Balancing normalization and denormalization in software development requires prioritizing database performance without sacrificing data integrity; normalization minimizes redundancy and enhances consistency, while denormalization improves query speed by introducing controlled redundancy. Best practices include analyzing workload patterns to decide which tables benefit from normalization versus denormalization, implementing indexing strategies, and continuously monitoring query performance to adjust the schema dynamically. Employing a hybrid approach, where core transactional systems are normalized and reporting or analytical databases are denormalized, optimizes both maintenance ease and read efficiency.

Normalization vs Denormalization Infographic

techiny.com

techiny.com