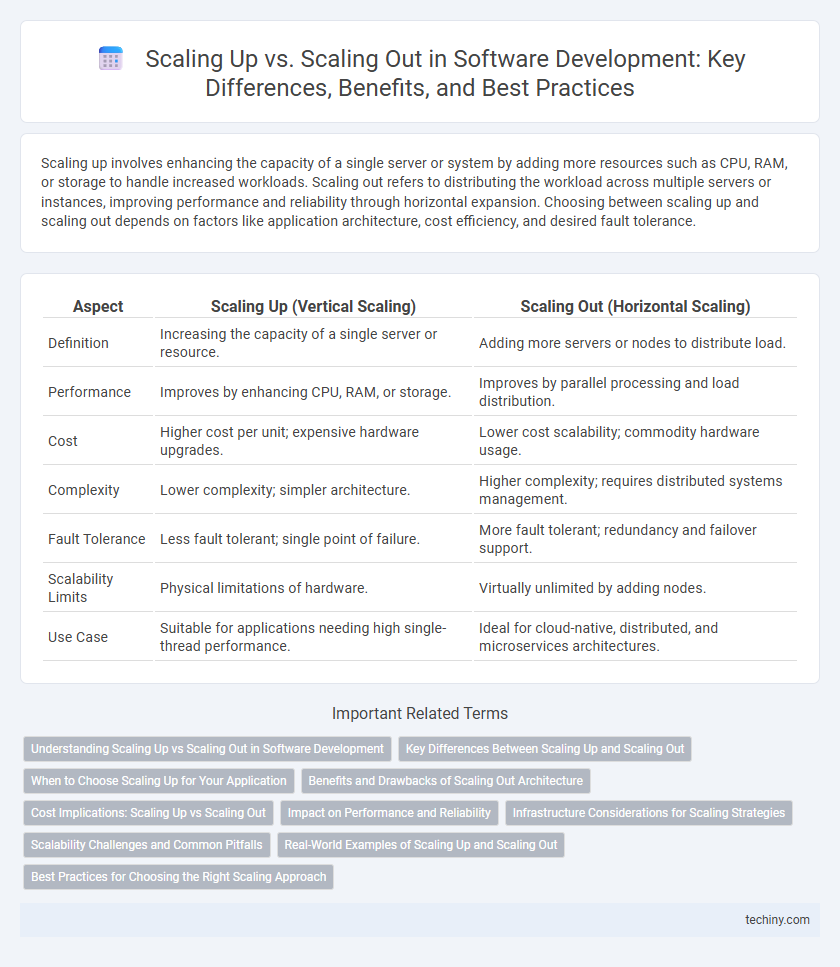

Scaling up involves enhancing the capacity of a single server or system by adding more resources such as CPU, RAM, or storage to handle increased workloads. Scaling out refers to distributing the workload across multiple servers or instances, improving performance and reliability through horizontal expansion. Choosing between scaling up and scaling out depends on factors like application architecture, cost efficiency, and desired fault tolerance.

Table of Comparison

| Aspect | Scaling Up (Vertical Scaling) | Scaling Out (Horizontal Scaling) |
|---|---|---|
| Definition | Increasing the capacity of a single server or resource. | Adding more servers or nodes to distribute the load. |
| Performance | Improves by upgrading CPU, RAM, or storage. | Improves through parallel processing and load distribution. |
| Cost | Higher cost per unit; high-end hardware upgrades are expensive. | Lower incremental cost; runs on commodity hardware. |
| Complexity | Lower complexity; simpler architecture. | Higher complexity; requires managing a distributed system. |
| Fault Tolerance | Less fault tolerant; the single server is a single point of failure. | More fault tolerant; supports redundancy and failover. |
| Scalability Limits | Bounded by the largest available hardware. | Much higher ceiling; bounded mainly by coordination and data-partitioning overhead. |
| Use Case | Suited to applications that need high single-thread performance. | Ideal for cloud-native, distributed, and microservices architectures. |

Understanding Scaling Up vs Scaling Out in Software Development

Scaling up in software development involves increasing the capacity of existing hardware or software resources, such as adding more CPU power or memory to a single server, to handle higher workloads. Scaling out refers to distributing workloads across multiple machines or instances, improving performance and fault tolerance by adding more servers to a system. Understanding the differences between scaling up and scaling out is critical for designing scalable, efficient, and cost-effective software architectures.

Key Differences Between Scaling Up and Scaling Out

Scaling up involves increasing the capacity of a single server by adding more resources such as CPU, RAM, or storage to enhance performance. Scaling out distributes workloads across multiple servers or machines, improving redundancy and fault tolerance while enabling horizontal expansion. The key differences lie in cost, complexity, and resilience: scaling up is often more expensive but simpler to operate, while scaling out offers greater flexibility and resilience in cloud-native and distributed systems.

When to Choose Scaling Up for Your Application

Scaling up is ideal when your application requires enhanced performance on a single server, especially if it benefits from increased CPU power, RAM, or faster storage without the complexity of distributing workloads. This approach suits workloads with tightly coupled processes or legacy systems that aren't designed for horizontal distribution. Choosing scaling up avoids the overhead of multi-node synchronization and is effective when latency and simplicity are critical factors.
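To see why tightly coupled workloads gain little from extra machines, the sketch below applies Amdahl's law, a standard model that is not part of the original discussion: if only a fraction p of the work can run in parallel, n nodes give at most a speedup of 1 / ((1 - p) + p / n). The parallel fractions used here are hypothetical, and the model ignores network and coordination costs, so real scale-out gains are usually lower.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when part of the work is spread over `workers` nodes.

    Amdahl's law: S(n) = 1 / ((1 - p) + p / n). Network and coordination
    overhead are ignored, so this is an optimistic bound.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Hypothetical workloads: a tightly coupled one (50% parallelizable)
# and a loosely coupled one (95% parallelizable).
for p in (0.5, 0.95):
    speedups = [round(amdahl_speedup(p, n), 2) for n in (1, 2, 8, 32)]
    print(f"p={p}: speedup on 1, 2, 8, 32 nodes = {speedups}")
```

With p = 0.5 the speedup can never exceed 2x no matter how many nodes are added, which is one reason a bigger single machine can be the better choice for such workloads.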

Benefits and Drawbacks of Scaling Out Architecture

Scaling out architecture improves system availability and fault tolerance by distributing workloads across multiple servers, reducing the risk of a single point of failure. It offers cost efficiency by enabling incremental resource additions, thus avoiding the large upfront investment typical of scaling up. However, scaling out increases system complexity due to the need for load balancing, data synchronization, and potential network latency challenges.
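Load balancing and failover, two of the mechanisms this approach depends on, can be sketched as a minimal round-robin balancer that stops routing to a node once it is marked unhealthy. This is an illustrative Python sketch, not any particular product's algorithm; the class name and backend names such as `app-1` are hypothetical, and a real balancer would also run periodic health checks and handle concurrent requests.

```python
from itertools import count


class RoundRobinBalancer:
    """Hypothetical minimal balancer: rotates through the backends currently marked healthy."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._counter = count()  # ever-increasing request counter

    def mark_down(self, backend):
        # A failed health check would call this; traffic shifts to the remaining nodes.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Keep the configured order, but rotate only among healthy backends.
        candidates = [b for b in self.backends if b in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        return candidates[next(self._counter) % len(candidates)]


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.next_backend() for _ in range(3)])  # spreads requests over all three nodes
lb.mark_down("app-2")  # simulate a node failure
print([lb.next_backend() for _ in range(4)])  # app-2 no longer receives traffic
```

Because a failed node is simply skipped, the remaining servers absorb its share of requests, which is the redundancy that removes the single point of failure.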

Cost Implications: Scaling Up vs Scaling Out

Scaling up increases the capacity of a single server, which often leads to higher costs because high-end hardware is expensive and upgrades may require downtime. Scaling out distributes workloads across multiple servers and can be more cost-efficient because it relies on commodity hardware, while also improving fault tolerance. Scaling up may require a steep upfront investment; scaling out can reduce long-term expenses by adding resources incrementally and allowing individual nodes to be maintained without taking the whole system offline, although operating more servers adds its own management overhead.
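The difference between a large upfront purchase and incremental additions can be made concrete with a little arithmetic. The sketch below tracks cumulative hardware spend over three periods of growing load; every capacity and price in it is a hypothetical placeholder rather than real hardware pricing, and it deliberately ignores operating costs such as power, licensing, and administration.

```python
import math
from typing import List, Sequence


def scale_out_spend(loads: Sequence[float], node_capacity: float, node_price: float) -> List[float]:
    """Cumulative spend when commodity nodes are bought only as the load requires."""
    nodes, spent, history = 0, 0.0, []
    for load in loads:
        needed = math.ceil(load / node_capacity)
        if needed > nodes:
            spent += (needed - nodes) * node_price  # buy only the missing nodes
            nodes = needed
        history.append(spent)
    return history


def scale_up_spend(loads: Sequence[float], server_capacity: float, server_price: float) -> List[float]:
    """Cumulative spend when one large server sized for the forecast peak is bought up front."""
    if max(loads) > server_capacity:
        raise ValueError("forecast peak exceeds the largest single server")
    return [server_price] * len(loads)


# Hypothetical numbers for illustration only, not real hardware prices.
loads = [100, 200, 400]  # forecast requests/s for three periods
print("scale up: ", scale_up_spend(loads, server_capacity=500, server_price=40_000))
print("scale out:", scale_out_spend(loads, node_capacity=100, node_price=5_000))
```

With these made-up numbers scaling out spends less at every step, but different prices or growth curves can flip the totals; the point is the shape of the spending, which for scaling out follows demand instead of preceding it.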

Impact on Performance and Reliability

Scaling up improves performance by upgrading a single system's CPU, RAM, or storage, which can speed up processing and reduce latency; however, that system remains a single point of failure, which limits overall reliability. Scaling out improves reliability by adding nodes or servers, enabling load balancing and fault tolerance that reduce the risk of downtime and help maintain consistent performance under high demand. While scaling out offers more room for horizontal expansion and greater resilience, it can introduce network latency and coordination overhead that reduce efficiency in some scenarios.
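The reliability argument can be quantified with a standard probability calculation. If each node is independently available a fraction a of the time and any single healthy node can serve the traffic, a group of n nodes is unavailable only when all n fail at once, so its availability is 1 - (1 - a)^n. The 99% per-node figure below is hypothetical, and correlated failures (shared network, power, or a bad deployment) make real systems fall short of this bound.

```python
def parallel_availability(node_availability: float, nodes: int) -> float:
    """Probability that at least one of `nodes` independent replicas is up.

    Assumes independent failures and that one healthy node can carry the load.
    """
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if nodes < 1:
        raise ValueError("need at least one node")
    return 1.0 - (1.0 - node_availability) ** nodes


HOURS_PER_YEAR = 24 * 365
for n in (1, 2, 3):
    a = parallel_availability(0.99, n)  # hypothetical 99% per-node availability
    downtime_hours = (1.0 - a) * HOURS_PER_YEAR
    print(f"{n} node(s): availability {a:.6f}, expected downtime ~{downtime_hours:.2f} h/year")
```

Under these assumptions, going from one node to two cuts expected downtime from about 87.6 hours to under one hour per year.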

Infrastructure Considerations for Scaling Strategies

Scaling up involves enhancing the capacity of existing hardware by upgrading components such as CPUs, memory, and storage to handle increased workloads efficiently. Scaling out requires adding more machines or nodes to distribute the load across multiple servers, improving redundancy and fault tolerance. Infrastructure considerations include evaluating network bandwidth, virtualization capabilities, and the cost implications of hardware upgrades versus maintaining multiple servers for optimal performance.

Scalability Challenges and Common Pitfalls

Scaling up involves enhancing the capacity of a single server by upgrading hardware, which often faces limitations due to physical constraints and escalating costs. Scaling out distributes workloads across multiple machines, introducing challenges in data consistency, load balancing, and network latency. Common pitfalls include underestimating complexity, neglecting horizontal scalability in early architecture, and failing to implement robust monitoring and fault tolerance mechanisms.
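One concrete form of the data-distribution challenge appears when data is sharded with `hash(key) % number_of_nodes`: adding a single node changes the owner of most keys, forcing a large data migration. Consistent hashing, a common mitigation that is not named in the original text, places nodes on a hash ring so that adding a node moves only about 1/n of the keys. The Python sketch below compares the two under illustrative assumptions; the shard names, key format, and 100 virtual nodes per server are hypothetical.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # SHA-256 is stable across processes, unlike Python's randomized hash() for strings.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


def modulo_owner(key: str, nodes: list) -> str:
    # Naive sharding: the owner depends on the node count, so it shifts when nodes are added.
    return nodes[stable_hash(key) % len(nodes)]


class HashRing:
    """Consistent hash ring; virtual nodes spread each server's share more evenly."""

    def __init__(self, nodes, vnodes: int = 100):
        self._ring = sorted(
            (stable_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def owner(self, key: str) -> str:
        # The first ring point clockwise from the key's hash owns it, wrapping past the end.
        idx = bisect.bisect(self._points, stable_hash(key)) % len(self._ring)
        return self._ring[idx][1]


def moved_fraction(old_owner, new_owner, keys) -> float:
    return sum(old_owner(k) != new_owner(k) for k in keys) / len(keys)


keys = [f"user:{i}" for i in range(10_000)]
old_nodes = ["db-1", "db-2", "db-3", "db-4"]
new_nodes = old_nodes + ["db-5"]

modulo_moved = moved_fraction(
    lambda k: modulo_owner(k, old_nodes), lambda k: modulo_owner(k, new_nodes), keys
)
old_ring, new_ring = HashRing(old_nodes), HashRing(new_nodes)
ring_moved = moved_fraction(old_ring.owner, new_ring.owner, keys)
print(f"modulo sharding moved {modulo_moved:.0%} of keys; hash ring moved {ring_moved:.0%}")
```

Growing from four nodes to five, modulo sharding moves roughly 80% of the keys, while the ring moves only around a fifth of them, all onto the new node.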

Real-World Examples of Scaling Up and Scaling Out

Scaling up in software development often involves enhancing the capabilities of a single server or system, for example moving a primary database to a larger machine with more CPU and memory so it can handle more transactions. Scaling out is demonstrated by Amazon Web Services (AWS), which distributes workloads across thousands of commodity servers to ensure high availability and fault tolerance. Both methods address growing user demand: scaling up improves performance on more powerful hardware, while scaling out distributes services across multiple nodes.

Best Practices for Choosing the Right Scaling Approach

Choosing the right scaling approach depends on workload characteristics and system architecture. Scaling up adds CPU and memory to boost performance, which often suits monolithic applications, while scaling out distributes load across multiple servers to increase availability and fault tolerance, which suits microservices and other distributed systems. Best practices include analyzing current bottlenecks, forecasting future demand, and weighing cost-effectiveness against latency requirements. Automation tools for load balancing and monitoring help keep scaling aligned with business objectives.
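Monitoring-driven automation often reduces to a simple proportional rule. The sketch below computes a replica count in the way the Kubernetes Horizontal Pod Autoscaler documentation describes, desired replicas = ceil(current replicas * current metric / target metric), clamped to configured bounds. It is a simplified illustration: the function name, the bounds, and the CPU figures are hypothetical, and it omits the tolerance band and stabilization windows the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional scale-out rule: replicas grow or shrink with current/target metric."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike cannot scale past the budgeted maximum (or below the minimum).
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical: 4 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# Hypothetical: 6 replicas averaging 20% CPU against a 60% target -> scale back in.
print(desired_replicas(6, current_metric=20, target_metric=60))
```

The same rule handles both directions, so pairing it with monitoring lets capacity track demand instead of being sized once for the peak.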

Scaling Up vs Scaling Out Infographic

techiny.com
