Bit Error Rate (BER) measures the ratio of erroneous bits to total transmitted bits, providing a fundamental assessment of data integrity at the physical layer in telecommunications. Packet Error Rate (PER) evaluates the proportion of data packets with errors, reflecting the quality of higher-layer data transmission and influencing overall network performance. Understanding the relationship between BER and PER is crucial for optimizing error correction schemes and ensuring reliable communication in telecommunications systems.

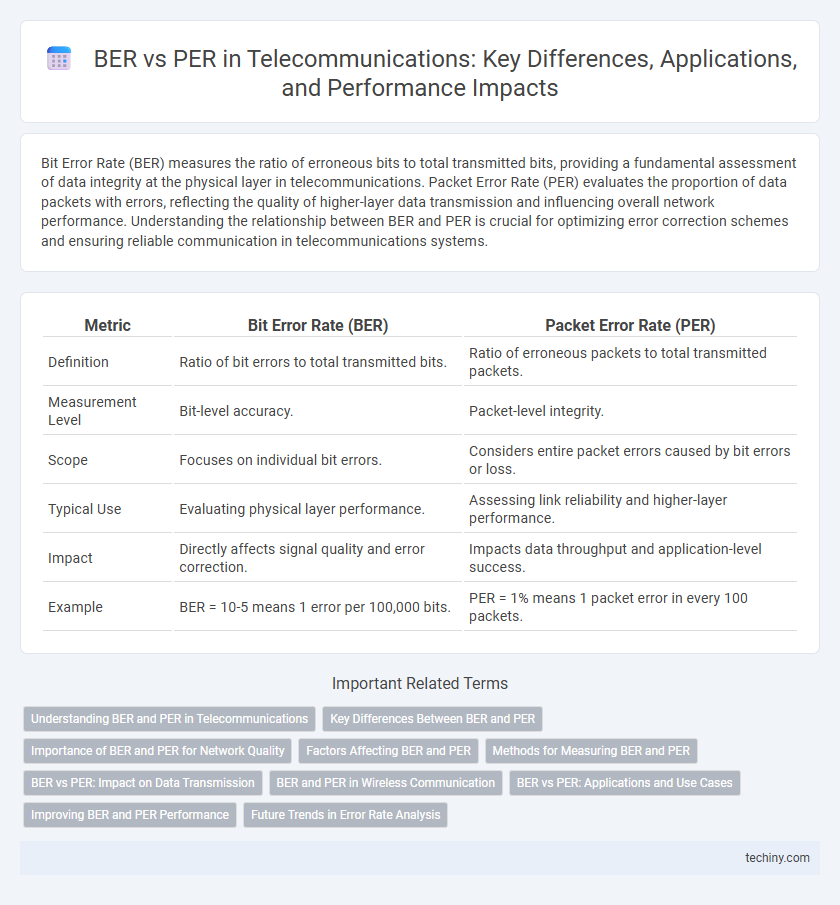

Table of Comparison

| Metric | Bit Error Rate (BER) | Packet Error Rate (PER) |
|---|---|---|
| Definition | Ratio of bit errors to total transmitted bits. | Ratio of erroneous packets to total transmitted packets. |
| Measurement Level | Bit-level accuracy. | Packet-level integrity. |
| Scope | Focuses on individual bit errors. | Considers entire packet errors caused by bit errors or loss. |
| Typical Use | Evaluating physical layer performance. | Assessing link reliability and higher-layer performance. |
| Impact | Directly affects signal quality and error correction. | Impacts data throughput and application-level success. |
| Example | BER = 10⁻⁵ means 1 error per 100,000 bits. | PER = 1% means 1 packet error in every 100 packets. |

Understanding BER and PER in Telecommunications

Bit Error Rate (BER) measures the ratio of incorrectly received bits to the total transmitted bits, serving as a critical indicator of signal integrity in telecommunications. Packet Error Rate (PER) quantifies the number of corrupted or lost packets over the total transmitted packets, reflecting the overall network reliability and quality. Understanding BER and PER enables network engineers to diagnose transmission errors and optimize system performance in various communication protocols.
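Both definitions reduce to simple ratios. The Python sketch below computes them from raw counts and counts bit errors by XOR-ing the sent and received bytes. The function names are illustrative, not taken from any particular test tool.

```python
def count_bit_errors(sent: bytes, received: bytes) -> int:
    """Count bit positions that differ between two equal-length byte strings."""
    if len(sent) != len(received):
        raise ValueError("sequences must have the same length")
    # XOR leaves a 1 wherever the two bytes disagree; count those 1s.
    return sum(bin(a ^ b).count("1") for a, b in zip(sent, received))


def bit_error_rate(bit_errors: int, bits_sent: int) -> float:
    """Fraction of transmitted bits received in error."""
    if bits_sent <= 0:
        raise ValueError("bits_sent must be positive")
    return bit_errors / bits_sent


def packet_error_rate(bad_packets: int, packets_sent: int) -> float:
    """Fraction of transmitted packets that were corrupted or lost."""
    if packets_sent <= 0:
        raise ValueError("packets_sent must be positive")
    return bad_packets / packets_sent


sent = b"\xb0\xff"
received = b"\xa0\xfe"  # one bit flipped in each byte
errors = count_bit_errors(sent, received)
print(errors, bit_error_rate(errors, 8 * len(sent)))  # 2 0.125

# Figures matching the comparison table's examples.
print(bit_error_rate(1, 100_000))  # 1e-05
print(packet_error_rate(1, 100))   # 0.01
```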

Key Differences Between BER and PER

Bit Error Rate (BER) measures the ratio of erroneous bits to the total transmitted bits in a communication system, highlighting the accuracy of individual bit transmission. Packet Error Rate (PER) quantifies the proportion of data packets that contain errors, impacting overall data integrity and retransmission requirements. BER focuses on the low-level signal quality, whereas PER reflects higher-level protocol performance and network reliability in telecommunications.

Importance of BER and PER for Network Quality

Bit Error Rate (BER) and Packet Error Rate (PER) are critical metrics for assessing network quality in telecommunications because they directly reflect data integrity and transmission reliability. BER quantifies the ratio of errored bits to the total number of transmitted bits, making it essential for evaluating physical layer performance and signal quality. PER measures the percentage of incorrectly received data packets, reflecting higher-layer network efficiency and user experience in real-time communication systems.

Factors Affecting BER and PER

Bit Error Rate (BER) and Packet Error Rate (PER) are significantly influenced by the signal-to-noise ratio (SNR), the modulation scheme, and channel conditions such as multipath fading and interference. High noise levels and poor synchronization increase BER by causing bits to be misinterpreted at the receiver, while PER can rise further due to packet collisions and error propagation in packet-based transmissions. Forward Error Correction (FEC) lowers both the post-decoding BER and the PER by adding redundancy that lets the receiver detect and correct errors, thereby enhancing overall communication reliability.
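The dependence of BER on SNR can be made concrete for the simplest case. The sketch below assumes coherent BPSK over an additive white Gaussian noise (AWGN) channel with no fading and no FEC, where the theoretical BER is 0.5 * erfc(sqrt(Eb/N0)), and checks that formula against a small Monte Carlo simulation. The function names, seed, and sample size are illustrative choices.

```python
import math
import random


def bpsk_ber_theory(ebn0_db: float) -> float:
    """Theoretical BER of coherent BPSK in AWGN: 0.5 * erfc(sqrt(Eb/N0))."""
    ebn0 = 10 ** (ebn0_db / 10)  # convert dB to a linear ratio
    return 0.5 * math.erfc(math.sqrt(ebn0))


def bpsk_ber_simulated(ebn0_db: float, n_bits: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo estimate: send +/-1 symbols through Gaussian noise and count errors."""
    rng = random.Random(seed)
    ebn0 = 10 ** (ebn0_db / 10)
    # With unit symbol energy, the noise variance per real dimension is N0/2 = 1/(2*Eb/N0).
    sigma = math.sqrt(1 / (2 * ebn0))
    errors = 0
    for _ in range(n_bits):
        bit = rng.getrandbits(1)
        symbol = 1.0 if bit else -1.0
        received = symbol + rng.gauss(0.0, sigma)
        decided = 1 if received > 0 else 0  # hard decision at the threshold 0
        if decided != bit:
            errors += 1
    return errors / n_bits


for snr_db in (0, 2, 4, 6):
    print(f"Eb/N0 = {snr_db} dB: theory {bpsk_ber_theory(snr_db):.2e}, "
          f"simulated {bpsk_ber_simulated(snr_db):.2e}")
```

At higher SNR the simulated estimate needs many more bits, because only a handful of errors occur per run; this is the same reason real BER tests at very low error rates take a long time.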

Methods for Measuring BER and PER

Bit Error Rate (BER) measurement methods often involve using a pseudo-random binary sequence (PRBS) generator and an error detector to compare transmitted and received data for precise error quantification. Packet Error Rate (PER) testing typically employs protocol-specific test packets transmitted over the network, with error counting performed by monitoring packet loss or corrupted packets using network analyzers or test equipment. Both BER and PER measurement techniques rely on specialized test sets and software tools to ensure accurate simulation of real-world telecommunications conditions.
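A minimal sketch of the PRBS approach follows. It assumes the common PRBS7 pattern (polynomial x^7 + x^6 + 1, period 127) and a receiver whose local reference generator is already aligned with the incoming stream. Real BER testers also handle pattern synchronization, which is omitted here. Errors are injected at fixed, hypothetical positions so the result is easy to check.

```python
def prbs7(n_bits, seed=0x7F):
    """Generate n_bits of the PRBS7 sequence (x^7 + x^6 + 1) from a 7-bit LFSR."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be non-zero or the LFSR locks up")
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1  # feedback from taps 7 and 6
        state = ((state << 1) | new_bit) & 0x7F
        bits.append(new_bit)
    return bits


def inject_errors(bits, positions):
    """Return a copy of bits with the given positions flipped (simulated channel errors)."""
    corrupted = list(bits)
    for pos in positions:
        corrupted[pos] ^= 1
    return corrupted


def measure_ber(received, seed=0x7F):
    """Error detector: regenerate the reference pattern locally and count mismatches."""
    reference = prbs7(len(received), seed)
    errors = sum(1 for r, t in zip(received, reference) if r != t)
    return errors, errors / len(received)


tx = prbs7(127_000)
rx = inject_errors(tx, positions=range(0, len(tx), 1_000))  # hypothetical: 127 flipped bits
errors, ber = measure_ber(rx)
print(errors, ber)  # 127 0.001
```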

BER vs PER: Impact on Data Transmission

Bit Error Rate (BER) measures the rate of bit errors in a transmitted data stream, representing the quality of the physical layer in telecommunications. Packet Error Rate (PER) evaluates the frequency of corrupted or lost packets at the data link layer, directly affecting higher-level protocols and overall communication reliability. High BER often leads to increased PER, causing retransmissions, reduced throughput, and degraded data transmission efficiency in wireless and wired networks.
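The throughput cost of a higher PER can be estimated with a simple model. Assuming every errored packet is retransmitted until it succeeds (an idealized ARQ with independent packet errors and no retry limit), each packet needs on average 1/(1 - PER) transmissions, so useful throughput scales by (1 - PER). The link rate and the retry-limit helper below are hypothetical illustrations, not any specific protocol's behavior.

```python
def expected_transmissions(per: float) -> float:
    """Mean number of sends per packet when every failure is retried (geometric model)."""
    if not 0.0 <= per < 1.0:
        raise ValueError("per must be in [0, 1)")
    return 1.0 / (1.0 - per)


def effective_throughput(link_rate_mbps: float, per: float) -> float:
    """Useful throughput once retransmissions consume part of the link rate."""
    return link_rate_mbps * (1.0 - per)


def residual_loss(per: float, max_attempts: int) -> float:
    """Probability a packet is still lost if the sender gives up after max_attempts."""
    return per ** max_attempts


LINK_RATE_MBPS = 100.0  # hypothetical link rate
for per in (0.001, 0.01, 0.1, 0.3):
    print(f"PER {per:>5}: {expected_transmissions(per):.3f} sends/packet, "
          f"{effective_throughput(LINK_RATE_MBPS, per):.1f} Mbit/s useful, "
          f"{residual_loss(per, 3):.1e} lost after 3 tries")
```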

BER and PER in Wireless Communication

Bit Error Rate (BER) quantifies the ratio of incorrectly received bits to the total number of transmitted bits, serving as a critical metric in evaluating wireless communication system performance. Packet Error Rate (PER) measures the fraction of packets received in error; because a packet counts as errored if any of its bits remains wrong after error correction, PER reflects the cumulative effect of bit errors on data integrity and grows with packet length. Accurate assessment of BER and PER is essential for optimizing modulation schemes, error correction techniques, and the overall reliability of wireless networks.
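If bit errors are assumed independent and no FEC is applied, a packet of N bits survives only when all N bits are correct, which gives PER = 1 - (1 - BER)^N. The sketch below evaluates this (using `log1p`/`expm1` to stay accurate at very small BER) and inverts it to back out an equivalent BER from a measured PER. Real channels often produce burst errors, so treat the result as an approximation; the 1500-byte packet size is just an example.

```python
import math


def per_from_ber(ber: float, packet_bits: int) -> float:
    """PER = 1 - (1 - BER)^N, assuming independent bit errors and no FEC."""
    # -expm1(N * log1p(-ber)) is the same quantity, computed without
    # losing precision when ber is tiny.
    return -math.expm1(packet_bits * math.log1p(-ber))


def ber_from_per(per: float, packet_bits: int) -> float:
    """Invert the formula above: BER = 1 - (1 - PER)^(1/N)."""
    return -math.expm1(math.log1p(-per) / packet_bits)


PACKET_BITS = 1500 * 8  # example: a 1500-byte packet
for ber in (1e-7, 1e-6, 1e-5, 1e-4):
    print(f"BER {ber:.0e} -> PER {per_from_ber(ber, PACKET_BITS):.3f}")
```

Under these assumptions a BER of 10⁻⁵ already corrupts roughly 11% of 1500-byte packets, which illustrates why long packets are sensitive to even a modest BER.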

BER vs PER: Applications and Use Cases

BER (Bit Error Rate) and PER (Packet Error Rate) serve distinct roles in telecommunications. BER is critical for assessing the quality of physical layer transmission in applications such as fiber optics and wireless communication, where individual bit accuracy determines signal integrity. PER matters most at higher protocol layers and directly affects real-time services such as VoIP and video streaming, where lost or corrupted packets degrade user experience. The choice between the two depends on system design priorities: BER is the key metric for hardware performance tuning, while PER is essential for evaluating end-to-end network reliability and application-level error handling.

Improving BER and PER Performance

Improving Bit Error Rate (BER) and Packet Error Rate (PER) performance in telecommunications relies on error correction techniques such as Forward Error Correction (FEC) and on adaptive modulation schemes. Strong channel codes such as Turbo codes and Low-Density Parity-Check (LDPC) codes significantly reduce residual transmission errors, preserving data integrity in noisy environments. Improving receiver sensitivity also helps, and link adaptation mechanisms dynamically adjust parameters such as modulation order and coding rate to keep BER and PER low under varying channel conditions.
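Turbo and LDPC codes are too involved for a short example, so the sketch below swaps in the much simpler Hamming(7,4) block code to illustrate the FEC principle: three parity bits let the decoder locate and correct any single bit error in a 7-bit codeword. The Monte Carlo comparison assumes a binary symmetric channel with independent bit flips; the function names, seed, and trial count are illustrative.

```python
import random


def hamming74_encode(data):
    """Encode 4 data bits as the codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over codeword positions 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code):
    """Correct up to one flipped bit; return (data_bits, syndrome)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # recheck positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # recheck positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # recheck positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based index of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome


def block_error_rate(p, coded, trials=50_000, seed=7):
    """Fraction of 4-bit data blocks delivered wrong over a binary symmetric channel."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        data = [rng.getrandbits(1) for _ in range(4)]
        sent = hamming74_encode(data) if coded else data
        # Flip each transmitted bit independently with probability p.
        received = [bit ^ int(rng.random() < p) for bit in sent]
        decoded = hamming74_decode(received)[0] if coded else received
        if decoded != data:
            failures += 1
    return failures / trials


for p in (0.001, 0.01, 0.05):
    print(f"bit flip prob {p}: uncoded {block_error_rate(p, coded=False):.4f}, "
          f"Hamming(7,4) {block_error_rate(p, coded=True):.4f}")
```

The gain is paid for in overhead: only 4 of every 7 transmitted bits carry data, and this comparison ignores the extra energy and bandwidth those parity bits consume. Balancing that overhead against channel quality is exactly the trade-off link adaptation makes when it switches coding rates.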

Future Trends in Error Rate Analysis

Emerging trends in telecommunications include integrating artificial intelligence and machine learning into Bit Error Rate (BER) and Packet Error Rate (PER) analysis, enabling predictive error correction and adaptive modulation. 5G and upcoming 6G networks prioritize ultra-reliable low-latency communications (URLLC), where precise BER and PER measurements are critical for maintaining quality of service in real-time applications. Edge analytics and, more speculatively, quantum computing are being explored for advanced error detection, with the goal of reducing retransmissions and improving overall network efficiency.

BER vs PER Infographic

techiny.com
