Gaze-based interaction in virtual reality enables users to navigate and select objects simply by looking at them, offering a hands-free experience that enhances accessibility and reduces physical fatigue. Gesture-based interaction relies on hand movements and gestures to manipulate virtual environments, providing intuitive and natural control that mimics real-world actions. Combining both methods can create immersive and versatile VR experiences by pairing the speed of gaze targeting with the expressiveness of gestures.

Table of Comparison

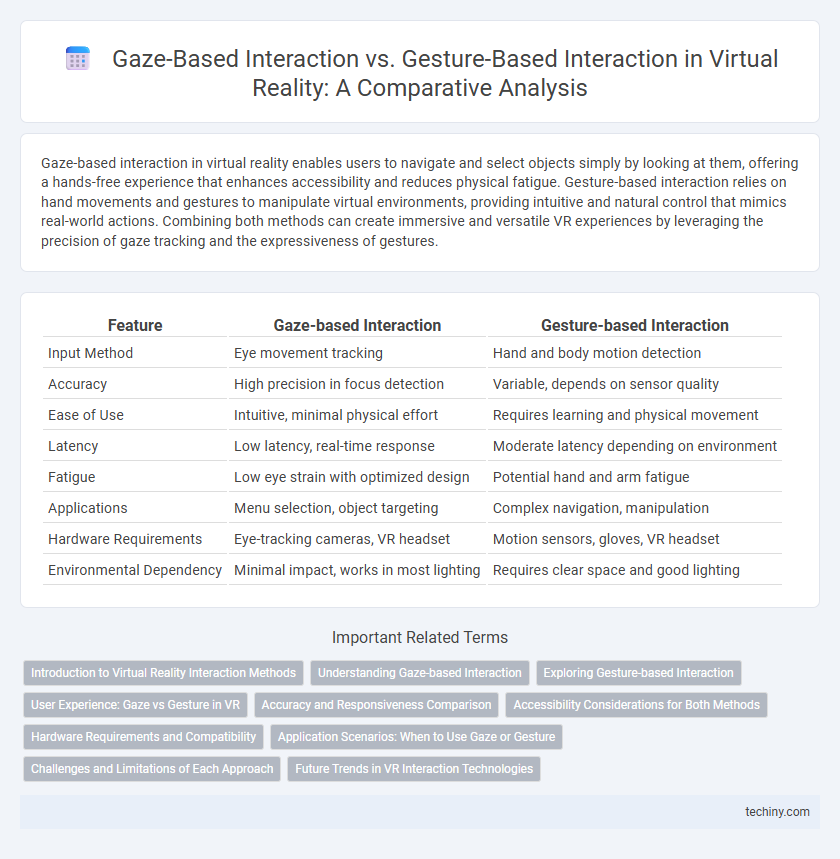

| Feature | Gaze-based Interaction | Gesture-based Interaction |
|---|---|---|
| Input Method | Eye movement tracking | Hand and body motion detection |
| Accuracy | High after calibration; degrades if the headset shifts | Variable, depends on sensor quality |
| Ease of Use | Intuitive, minimal physical effort | Requires learning and physical movement |
| Latency | Low latency, real-time response | Moderate latency depending on environment |
| Fatigue | Low eye strain with optimized design | Potential hand and arm fatigue |
| Applications | Menu selection, object targeting | Complex navigation, manipulation |
| Hardware Requirements | Eye-tracking cameras, VR headset | Built-in or external cameras, motion sensors or gloves, VR headset |
| Environmental Dependency | Minimal impact, works in most lighting | Requires clear space and good lighting |

Introduction to Virtual Reality Interaction Methods

Gaze-based interaction uses eye-tracking technology to let users select virtual objects by looking at them, offering hands-free navigation in immersive environments. Gesture-based interaction detects hand and body movements through sensors or cameras, allowing users to control content with natural motions. Each method engages users in a different way, and the better choice depends on the application and the user's needs.

Understanding Gaze-based Interaction

Gaze-based interaction in virtual reality uses eye-tracking technology to let users navigate and select objects by looking at them, which deepens immersion and reduces physical effort. Because the eyes reach a target before a hand can, gaze can speed up target acquisition compared with gesture-based pointing, making it well suited to applications that call for quick decisions and subtle control, provided the eye tracker is well calibrated. Gaze input can also improve accessibility and streamline common tasks by reducing the need for complex hand movements.
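To make "looking at an object" concrete, a system can compare the gaze ray with the direction to each candidate object and pick the closest one that falls inside a small tolerance cone. The Python sketch below assumes the eye tracker reports a gaze origin and direction in world coordinates and approximates each object by its center point; the names `Target` and `gazed_target` and the 2° cone are hypothetical, illustrative choices.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Target:
    name: str
    center: Vec3  # world-space center point used as a stand-in for the object


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def angular_offset_deg(origin: Vec3, direction: Vec3, point: Vec3) -> float:
    """Angle in degrees between the gaze ray and the line from origin to point."""
    to_point = _sub(point, origin)
    denom = math.sqrt(_dot(direction, direction)) * math.sqrt(_dot(to_point, to_point))
    if denom == 0.0:
        return math.inf  # degenerate ray, or target at the eye position
    # Clamp to guard against rounding pushing the cosine slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, _dot(direction, to_point) / denom))
    return math.degrees(math.acos(cos_angle))


def gazed_target(origin: Vec3, direction: Vec3, targets: Sequence[Target],
                 cone_deg: float = 2.0) -> Optional[Target]:
    """Return the target nearest the gaze ray, or None if none lies in the cone."""
    best: Optional[Target] = None
    best_angle = math.inf
    for t in targets:
        angle = angular_offset_deg(origin, direction, t.center)
        # Keep only targets inside the tolerance cone, preferring the closest one.
        if angle <= cone_deg and angle < best_angle:
            best, best_angle = t, angle
    return best
```

With a menu straight ahead at `(0, 0, 2)` and a door at `(1, 0, 2)`, a forward gaze `(0, 0, 1)` selects the menu, while the door sits about 27° off-axis and is ignored. Many engines ray-cast against colliders instead; the cone test is shown here because it tolerates small eye-tracking errors.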

Exploring Gesture-based Interaction

Gesture-based interaction in virtual reality uses hand and body movements to create immersive, intuitive experiences that work without handheld controllers. It is often credited with improving spatial awareness and reducing cognitive load compared with gaze-based interaction, which relies primarily on eye tracking for selection and navigation. Advances in hand-tracking sensors and machine-learning models have significantly improved gesture recognition accuracy, making gestures a common choice for complex VR applications in gaming, training, and design.
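Not every gesture needs a learned model: many hand-tracking systems expose 3D joint positions, and a pinch can be recognized directly from them. The Python sketch below assumes the tracker reports thumb-tip and index-tip positions in meters each frame; the `PinchDetector` class and its thresholds are hypothetical. Using two thresholds (hysteresis) is one common way to keep sensor jitter from toggling the gesture on and off.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


class PinchDetector:
    """Detects a pinch from thumb-tip and index-tip positions (meters).

    The pinch engages when the fingertips come closer than `engage_m` and only
    releases once they separate beyond `release_m`, so noise around a single
    threshold cannot make the gesture flicker. Default values are illustrative.
    """

    def __init__(self, engage_m: float = 0.02, release_m: float = 0.04) -> None:
        if release_m <= engage_m:
            raise ValueError("release_m must exceed engage_m")
        self.engage_m = engage_m
        self.release_m = release_m
        self.pinching = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        """Feed one tracking frame; return True only on the frame a pinch begins."""
        distance = math.dist(thumb_tip, index_tip)
        if not self.pinching and distance < self.engage_m:
            self.pinching = True
            return True
        if self.pinching and distance > self.release_m:
            self.pinching = False
        return False
```

Returning `True` only on the onset frame lets the application treat a pinch as a discrete "click" while still reading `pinching` for press-and-hold actions.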

User Experience: Gaze vs Gesture in VR

Gaze-based interaction in VR offers intuitive navigation by allowing users to select objects simply by looking at them, enhancing precision and reducing physical strain during extended sessions. Gesture-based interaction provides a more immersive and natural experience by mimicking real-world hand movements, increasing user engagement and expressive control. Both methods impact user experience uniquely, with gaze excelling in accuracy and ease of use, while gestures deliver richer interactivity and embodiment.

Accuracy and Responsiveness Comparison

Gaze-based interaction in virtual reality can locate the user's focus quickly, but its accuracy is bounded by the eye tracker, which typically resolves gaze to within roughly one degree of visual angle, so small or tightly packed targets need to be enlarged or spaced apart. Gesture-based interaction, while intuitive and natural, often faces responsiveness challenges because hand movements vary and sensors have limitations, which can cause delays or misinterpretations. Overall, gaze-based systems often provide faster target acquisition and suit selection-heavy tasks, while gestures remain better suited to continuous manipulation.
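The practical effect of angular accuracy is easy to estimate: an error of θ at viewing distance d shifts the estimated gaze point sideways by about d·tan θ. The Python sketch below computes that offset and the smallest target width that still contains it on both sides; the function names are hypothetical, and the 1° figure used in the note afterward is an assumed accuracy, not a measured value for any particular headset.

```python
import math


def gaze_error_m(distance_m: float, accuracy_deg: float) -> float:
    """Lateral offset (meters) caused by an angular gaze error at a given distance."""
    return distance_m * math.tan(math.radians(accuracy_deg))


def min_target_width_m(distance_m: float, accuracy_deg: float) -> float:
    """Smallest target width that contains the error on both sides of its center."""
    return 2.0 * gaze_error_m(distance_m, accuracy_deg)
```

With an assumed 1° error at 2 m, `gaze_error_m(2.0, 1.0)` is about 0.035 m, so a target would need to be roughly 7 cm wide to fully contain that error. The same arithmetic shows why gaze works well for large menu buttons but struggles with fine detail.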

Accessibility Considerations for Both Methods

Gaze-based interaction in virtual reality offers hands-free control, enhancing accessibility for users with limited motor skills or mobility impairments by relying on eye movement tracking. Gesture-based interaction provides intuitive control but may pose challenges for users with restricted arm or hand mobility, requiring adaptable calibration and customizable gesture sets. Both methods necessitate inclusive design strategies, such as adjustable sensitivity settings and alternative input options, to ensure broad usability across diverse user abilities.
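Adjustable sensitivity and alternative inputs can be modeled as a per-user profile that the interaction layer reads. In this Python sketch, the `AccessibilityProfile` fields, their clamping ranges, and the `input_modes` helper are all hypothetical; it only shows settings being kept within safe bounds and a fallback input always remaining available.

```python
from dataclasses import dataclass
from typing import List


def _clamp(value: float, low: float, high: float) -> float:
    return min(max(value, low), high)


@dataclass
class AccessibilityProfile:
    """Hypothetical per-user settings; names, defaults, and ranges are illustrative."""
    eye_tracking_ok: bool = True
    hand_tracking_ok: bool = True
    dwell_time_s: float = 0.8      # longer dwell -> fewer accidental gaze selections
    pinch_engage_m: float = 0.02   # larger gap -> pinch triggers with less finger travel

    def __post_init__(self) -> None:
        # Keep user-entered values inside a usable range instead of rejecting them.
        self.dwell_time_s = _clamp(self.dwell_time_s, 0.2, 3.0)
        self.pinch_engage_m = _clamp(self.pinch_engage_m, 0.01, 0.06)


def input_modes(profile: AccessibilityProfile) -> List[str]:
    """List usable input methods in preference order, always ending with a fallback."""
    modes: List[str] = []
    if profile.eye_tracking_ok:
        modes.append("gaze")
    if profile.hand_tracking_ok:
        modes.append("gesture")
    modes.append("alternative")  # e.g. controller, voice, or switch access
    return modes
```

A user with limited hand mobility might set `hand_tracking_ok=False`, leaving gaze as the primary input and the fallback always available.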

Hardware Requirements and Compatibility

Gaze-based interaction in virtual reality relies on eye-tracking hardware integrated into the headset; these sensors must be calibrated to detect the user's focus accurately, which can add cost and complexity. Gesture-based interaction needs cameras or motion sensors to capture hand and body movements: some headsets track hands with built-in cameras, while others depend on add-on or external devices such as Leap Motion or Kinect, which can require extra peripherals and limit compatibility with existing VR systems. Because hardware-specific drivers and software protocols differ between vendors, integrating gaze and gesture controls smoothly across VR platforms and applications remains a compatibility challenge.
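Cross-vendor standards such as Khronos's OpenXR, with extensions like `XR_EXT_eye_gaze_interaction` and `XR_EXT_hand_tracking`, aim to reduce this fragmentation. Within an application, a common way to contain driver differences is to make interaction code depend on small device-neutral interfaces and write one adapter per device. The Python sketch below shows that pattern; `GazeSource`, `HandSource`, `HeadGazeFallback`, and `InteractionInputs` are hypothetical names, not part of OpenXR or any vendor SDK. The fallback adapter uses head orientation in place of eye gaze, a common substitute on headsets without eye tracking.

```python
from typing import Optional, Protocol, Set, Tuple

Vec3 = Tuple[float, float, float]


class GazeSource(Protocol):
    def gaze_direction(self) -> Optional[Vec3]:
        """Gaze direction in world space, or None when tracking is lost."""
        ...


class HandSource(Protocol):
    def fingertips(self) -> Optional[Tuple[Vec3, Vec3]]:
        """(thumb tip, index tip) in meters, or None when no hand is visible."""
        ...


class HeadGazeFallback:
    """Adapter for headsets without eye tracking: reports head direction as gaze."""

    def __init__(self, head_forward: Vec3) -> None:
        self.head_forward = head_forward

    def gaze_direction(self) -> Optional[Vec3]:
        return self.head_forward


class InteractionInputs:
    """Application code talks to these interfaces, never to vendor drivers."""

    def __init__(self, gaze: Optional[GazeSource] = None,
                 hands: Optional[HandSource] = None) -> None:
        self.gaze = gaze
        self.hands = hands

    def capabilities(self) -> Set[str]:
        """Report which interaction styles the current hardware can support."""
        caps: Set[str] = set()
        if self.gaze is not None:
            caps.add("gaze")
        if self.hands is not None:
            caps.add("gesture")
        return caps
```

Supporting a new headset then means writing one more adapter rather than touching selection or gesture logic.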

Application Scenarios: When to Use Gaze or Gesture

Gaze-based interaction excels in scenarios requiring precision and minimal physical effort, such as menu navigation or selection tasks in VR training simulations and medical applications. Gesture-based interaction is preferable for immersive experiences demanding natural, expressive control, including gaming, virtual fitness, and collaborative design environments. Combining gaze for targeting and gestures for action execution enhances usability in complex VR workflows.
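The gaze-for-targeting, gesture-for-action pattern can be expressed as a small per-frame state machine: the object being looked at when a pinch begins is locked in, and it stays held until the pinch ends even if the eyes move elsewhere. This Python sketch assumes upstream trackers supply the gazed object id (or `None`) and a boolean pinch state each frame; the class name and the `"grab"`/`"release"` event labels are hypothetical.

```python
from typing import Optional, Tuple


class GazeTargetGestureAction:
    """Gaze picks the target; a pinch acts on it."""

    def __init__(self) -> None:
        self.held: Optional[str] = None  # target locked in while the pinch lasts
        self._was_pinching = False

    def update(self, gazed_id: Optional[str],
               pinching: bool) -> Optional[Tuple[str, str]]:
        """Process one frame and return an (event, target_id) pair, or None."""
        event = None
        if pinching and not self._was_pinching and gazed_id is not None:
            # Pinch onset: lock onto whatever the user is looking at right now.
            self.held = gazed_id
            event = ("grab", gazed_id)
        elif not pinching and self._was_pinching and self.held is not None:
            # Pinch released: let go of the locked target.
            event = ("release", self.held)
            self.held = None
        self._was_pinching = pinching
        return event
```

Locking the target at pinch onset lets the user look away, for example toward a drop location, without losing the object; a pinch made while looking at nothing produces no event.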

Challenges and Limitations of Each Approach

Gaze-based interaction in virtual reality faces challenges such as limited precision due to eye-tracking calibration errors and difficulty distinguishing intentional focus from natural eye movement, which can lead to unintended selections. Gesture-based interaction struggles with occlusion issues, varying user ergonomics, and the need for high-fidelity motion tracking to accurately interpret complex gestures, often resulting in latency and recognition errors. Both approaches demand significant hardware accuracy and user adaptability, impacting overall system usability and immersive experience quality.
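A widely used mitigation for unintended gaze selections (often called the Midas touch problem) is dwell time: an object is selected only after the user's gaze rests on it for a set duration. The Python sketch below assumes a per-frame id of the currently gazed object and the frame time in seconds; `DwellSelector` and its 0.8 s default are illustrative, not taken from any particular toolkit.

```python
from typing import Optional


class DwellSelector:
    """Selects a target only after gaze rests on it for `dwell_s` seconds."""

    def __init__(self, dwell_s: float = 0.8) -> None:
        self.dwell_s = dwell_s
        self._current: Optional[str] = None
        self._elapsed = 0.0
        self._fired = False

    def update(self, gazed_id: Optional[str], dt: float) -> Optional[str]:
        """Advance by dt seconds; return the target id once per completed dwell."""
        if gazed_id != self._current:
            # Gaze moved to a different object (or to nothing): restart the timer.
            self._current = gazed_id
            self._elapsed = 0.0
            self._fired = False
            return None
        if gazed_id is None or self._fired:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_s:
            self._fired = True  # no repeat until gaze leaves and comes back
            return gazed_id
        return None
```

Brief glances reset the timer, which filters out much natural eye movement; the trade-off is that every gaze selection now costs at least `dwell_s`, which is why dwell is often replaced by an explicit confirming gesture.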

Future Trends in VR Interaction Technologies

Gaze-based interaction in virtual reality utilizes eye-tracking to enable seamless and intuitive control, enhancing user immersion with minimal physical effort. Gesture-based interaction leverages advanced motion sensors and AI to recognize complex hand and body movements, offering natural and expressive communication within VR environments. Future trends indicate a hybrid approach combining gaze and gesture inputs, augmented by machine learning algorithms, to create highly responsive and adaptive VR interfaces that improve accessibility and user experience.

Gaze-based Interaction vs Gesture-based Interaction Infographic

techiny.com