Gaze input in virtual reality offers hands-free interaction by tracking where the user is looking, enabling intuitive selection and navigation within VR environments. Gesture input relies on hand movements and poses to control actions, providing a more natural and immersive way to manipulate virtual objects. Combining gaze and gesture inputs enhances precision and usability, creating a seamless VR experience adaptable to various applications.

Table of Comparison

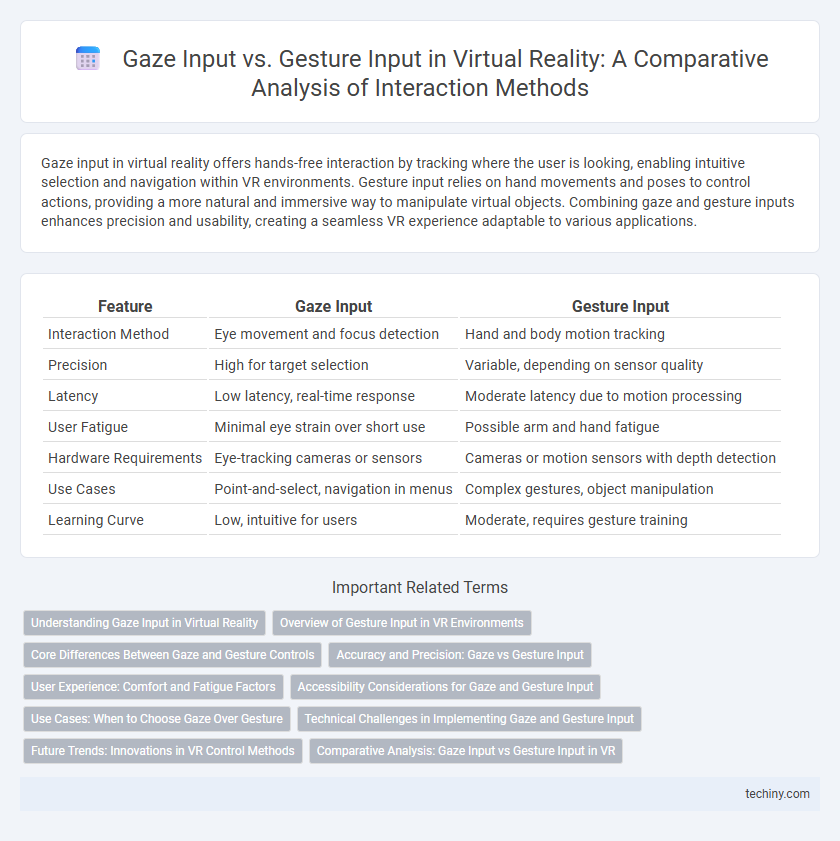

| Feature | Gaze Input | Gesture Input |
|---|---|---|
| Interaction Method | Eye movement and focus detection | Hand and body motion tracking |
| Precision | High for target selection | Variable, depending on sensor quality |
| Latency | Low, real-time response | Moderate, due to motion processing |
| User Fatigue | Minimal eye strain over short use | Possible arm and hand fatigue |
| Hardware Requirements | Eye-tracking cameras or sensors | Cameras or motion sensors with depth detection |
| Use Cases | Point-and-select, menu navigation | Complex gestures, object manipulation |
| Learning Curve | Low, intuitive for users | Moderate, requires gesture training |

Understanding Gaze Input in Virtual Reality

Gaze input in virtual reality leverages eye-tracking technology to enable intuitive user interactions by detecting where a user's attention is focused within a VR environment. This method offers precise, hands-free navigation and selection capabilities, enhancing immersion and reducing physical strain compared to gesture input, which relies on hand movements detected by sensors. Understanding gaze input's responsiveness and accuracy is crucial for developing seamless VR experiences that prioritize user comfort and interaction efficiency.
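A common way to turn an eye-tracking sample into a selection is to cast a gaze ray and pick the object closest to it by angle. The sketch below assumes the headset runtime already supplies an eye origin and a gaze direction in world space; the `Target` type, the function names, and the 2° tolerance cone are illustrative assumptions, not values from any particular SDK.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Target:
    name: str
    position: Vec3  # hypothetical: world-space centre of the object, in metres


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two 3D vectors (need not be unit length)."""
    a, b = _normalize(a), _normalize(b)
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))


def pick_gazed_target(eye_origin: Vec3, gaze_dir: Vec3,
                      targets: Sequence[Target],
                      max_angle_deg: float = 2.0) -> Optional[Target]:
    """Return the target angularly closest to the gaze ray, or None if no
    target lies within max_angle_deg (an assumed tolerance cone)."""
    best, best_angle = None, max_angle_deg
    for t in targets:
        to_target = tuple(p - o for p, o in zip(t.position, eye_origin))
        angle = angle_between_deg(gaze_dir, to_target)
        if angle <= best_angle:
            best, best_angle = t, angle
    return best
```

Using an angular cone rather than an exact ray hit gives the tracker some tolerance for small measurement errors, at the cost of ambiguity when targets sit close together.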

Overview of Gesture Input in VR Environments

Gesture input in VR environments enables intuitive interaction by tracking hand and body movements to manipulate virtual objects and navigate interfaces. Advanced sensors and machine learning algorithms interpret complex gestures, enhancing immersion and reducing reliance on controllers. This makes interaction feel more natural and, for many users, more accessible than handheld controllers, which is critical for applications in gaming, training, and remote collaboration.
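Many hand-tracking runtimes expose per-joint positions, and a pinch is one of the simplest gestures to derive from them. The sketch below assumes thumb-tip and index-tip positions in metres are available every frame; the class name and the 2 cm / 3.5 cm thresholds are hypothetical and would need tuning per device.

```python
import math


class PinchDetector:
    """Detects a pinch from thumb-tip and index-tip positions (metres).

    Hypothetical thresholds: a pinch starts when the fingertips come closer
    than start_dist and ends only when they move farther apart than end_dist.
    The gap between the two (hysteresis) keeps the state from flickering when
    the distance hovers around a single threshold.
    """

    def __init__(self, start_dist: float = 0.02, end_dist: float = 0.035):
        if start_dist >= end_dist:
            raise ValueError("start_dist must be smaller than end_dist")
        self.start_dist = start_dist
        self.end_dist = end_dist
        self.pinching = False

    def update(self, thumb_tip, index_tip) -> bool:
        """Feed one frame of fingertip positions; returns True while pinching."""
        d = math.dist(thumb_tip, index_tip)
        if not self.pinching and d < self.start_dist:
            self.pinching = True
        elif self.pinching and d > self.end_dist:
            self.pinching = False
        return self.pinching
```

Real gesture recognizers handle far richer poses, often with learned models, but the same idea of turning noisy joint data into stable discrete events applies.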

Core Differences Between Gaze and Gesture Controls

Gaze input in virtual reality uses eye-tracking technology to detect where a user is looking, enabling intuitive and hands-free interaction by focusing on specific objects or UI elements. Gesture input relies on hand movements and body gestures captured by sensors or cameras, allowing more expressive and deliberate commands but requiring physical space and clear visibility. Core differences include the speed and subtlety of gaze control versus the physical expressiveness and complexity of gesture control, with each method suited to different interaction contexts and user preferences.

Accuracy and Precision: Gaze vs Gesture Input

Gaze input in virtual reality can offer high precision: eye trackers sample eye movements many times per second with low delay, enabling fine control in interactions. Gesture input relies on hand and body movements, which can vary in consistency and often introduce latency, affecting accuracy. For tasks demanding exact selection or targeting, gaze input typically outperforms gesture input in both accuracy and precision.
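Eye-tracker accuracy is usually quoted as an angular error, so the precision a user actually gets depends on how far away the target is. The sketch below converts an assumed angular error into a linear offset, e = d · tan(θ), and from that into a minimum target width; the function names and the 1° figure in the example are illustrative assumptions, not specifications of any headset.

```python
import math


def linear_error_m(angular_error_deg: float, distance_m: float) -> float:
    """Linear offset on a surface at distance_m caused by an angular error."""
    return distance_m * math.tan(math.radians(angular_error_deg))


def min_target_width_m(angular_error_deg: float, distance_m: float) -> float:
    """Smallest target width that still contains the gaze point when the error
    can fall up to angular_error_deg on either side of the true gaze direction."""
    return 2 * linear_error_m(angular_error_deg, distance_m)
```

For example, with an assumed 1° error, a target 2 m away would need to be roughly 7 cm wide (`min_target_width_m(1.0, 2.0)` is about 0.0698) before gaze alone can hit it reliably.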

User Experience: Comfort and Fatigue Factors

Gaze input in virtual reality reduces physical exertion by allowing users to navigate and select options through eye movement, minimizing arm and hand fatigue during extended sessions. Gesture input offers intuitive interaction but can lead to discomfort and muscle strain, especially with repetitive or prolonged use. Combining gaze input with subtle gestures optimizes user comfort and reduces fatigue, making longer VR sessions more sustainable.
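One way to combine the two is to let the eyes pick the target and a small pinch confirm it, so the arm can rest and the user does not select everything they merely look at. The sketch below assumes an upstream gaze picker and pinch detector already exist and report their results each frame; the class and method names are hypothetical.

```python
from typing import Optional


class GazePinchSelector:
    """Gaze chooses *what* to select; a pinch confirms *when*.

    Hypothetical sketch: each frame the caller passes the id of the object
    currently under the user's gaze (or None) and whether a pinch is held.
    A selection fires only on the frame the pinch starts, so holding the pinch
    while looking around does not trigger repeated selections.
    """

    def __init__(self) -> None:
        self._was_pinching = False

    def update(self, gazed_id: Optional[str], pinching: bool) -> Optional[str]:
        pinch_started = pinching and not self._was_pinching
        self._was_pinching = pinching
        if pinch_started and gazed_id is not None:
            return gazed_id
        return None
```

Triggering on the start of the pinch rather than its duration keeps the hand gesture small and brief, which is what keeps fatigue low.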

Accessibility Considerations for Gaze and Gesture Input

Gaze input in virtual reality offers hands-free interaction, enhancing accessibility for users with limited motor skills or mobility impairments by enabling control through eye movement alone. Gesture input requires precise physical movements, which may pose challenges for individuals with motor function limitations, potentially restricting their ability to fully engage with VR environments. Designing VR interfaces with customizable gaze sensitivity and simplified gestures can improve accessibility, ensuring inclusive and user-friendly experiences for diverse user abilities.
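Dwell-time selection is a common way to make gaze usable with no hand action at all, and its dwell threshold is a natural place to expose the customizable gaze sensitivity mentioned above. The sketch below is a minimal, hypothetical implementation; the class name and the default dwell time are assumptions, not recommended settings.

```python
from typing import Optional


class DwellSelector:
    """Selects an object after the gaze has rested on it for dwell_s seconds.

    dwell_s is the user-adjustable "sensitivity" setting: longer values reduce
    accidental selections, shorter values speed up interaction. The default
    is an illustrative assumption.
    """

    def __init__(self, dwell_s: float = 0.8) -> None:
        self.dwell_s = dwell_s
        self._current: Optional[str] = None
        self._elapsed = 0.0
        self._fired = False

    def update(self, gazed_id: Optional[str], dt: float) -> Optional[str]:
        """Advance by dt seconds; returns the id once per continuous dwell, else None."""
        if gazed_id != self._current:
            # Gaze moved to a different object (or away): restart the timer.
            self._current = gazed_id
            self._elapsed = 0.0
            self._fired = False
            return None
        if gazed_id is None or self._fired:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_s:
            self._fired = True
            return gazed_id
        return None
```

A user with tremor-free but slow eye control might prefer a longer `dwell_s`, while an experienced user might shorten it; storing it per user profile is one simple way to support both.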

Use Cases: When to Choose Gaze Over Gesture

Gaze input excels in hands-free scenarios where the user's hands are busy or mobility is limited and precision is crucial, such as surgery or operating complex machinery. Gesture input proves more effective in immersive gaming or creative design tasks that require expressive, intuitive controls. Choose gaze over gesture when minimizing physical effort and reducing input latency matter most to the user experience.

Technical Challenges in Implementing Gaze and Gesture Input

Gaze input systems face technical challenges such as accurately tracking eye movement despite variable lighting conditions and user calibration differences, which can lead to imprecise interactions. Gesture input encounters difficulties in recognizing diverse hand shapes and movements in real time, compounded by occlusions and environmental noise interfering with sensor accuracy. Both input methods typically rely on machine learning algorithms and high-fidelity sensors to stay responsive and keep latency low in immersive virtual reality environments.
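Raw gaze directions are noisy, and smoothing them illustrates the trade-off between jitter and latency described here. The sketch below uses a plain exponential moving average, a deliberately simple stand-in; production systems often use adaptive filters such as the 1€ (One Euro) filter instead. The class name and default `alpha` are hypothetical.

```python
import math


class GazeSmoother:
    """Exponential moving average over gaze direction vectors.

    alpha in (0, 1]: higher values follow the raw signal more closely (less lag,
    more jitter); lower values smooth more but add latency. The default alpha
    is a hypothetical starting point.
    """

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state = None

    def update(self, direction):
        """Blend a new raw direction into the running average; returns a unit vector."""
        if self._state is None:
            self._state = tuple(direction)
        else:
            self._state = tuple(
                self.alpha * new + (1.0 - self.alpha) * old
                for new, old in zip(direction, self._state)
            )
        # Re-normalize so the output is still a unit direction.
        length = math.sqrt(sum(c * c for c in self._state))
        return tuple(c / length for c in self._state)
```

A fixed `alpha` smooths fast, intentional eye movements as much as jitter, which is exactly the added latency the paragraph warns about; adaptive filters reduce smoothing when the signal moves quickly.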

Future Trends: Innovations in VR Control Methods

Gaze input is evolving with advanced eye-tracking technology enabling hands-free interactions and precise user intent detection, enhancing immersive experiences in VR environments. Gesture input is advancing through improved machine learning algorithms and wearable sensors that capture nuanced hand movements, expanding intuitive control capabilities. Emerging trends indicate hybrid control systems combining gaze and gesture inputs will drive future VR interfaces, offering seamless, natural user engagement and increased accessibility.

Comparative Analysis: Gaze Input vs Gesture Input in VR

Gaze input in VR leverages eye-tracking technology to enable hands-free interaction, offering faster target selection and reducing user fatigue compared to gesture input, which relies on hand movements and can be less precise and more physically demanding. Gesture input provides intuitive and expressive control, ideal for complex commands and immersive experiences, but may suffer from occlusion issues and require extensive calibration. Evaluating usability metrics such as accuracy, response time, and user comfort reveals gaze input excels in efficiency and minimal effort, while gesture input offers richer interaction modalities suited for creative and social VR applications.
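Accuracy and response time can be computed directly from logged selection trials. The sketch below assumes a hypothetical `Trial` record per selection attempt and reports accuracy and median response time per input method; user comfort is not covered, since it is typically gathered through questionnaires rather than logs.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, Iterable


@dataclass
class Trial:
    modality: str      # hypothetical label, e.g. "gaze" or "gesture"
    correct: bool      # did the user select the intended target?
    response_s: float  # time from target onset to selection, in seconds


def summarize(trials: Iterable[Trial]) -> Dict[str, dict]:
    """Per-modality accuracy (fraction correct), median response time, and count."""
    by_modality: Dict[str, list] = {}
    for t in trials:
        by_modality.setdefault(t.modality, []).append(t)
    summary = {}
    for modality, ts in by_modality.items():
        summary[modality] = {
            "accuracy": sum(t.correct for t in ts) / len(ts),
            "median_response_s": median(t.response_s for t in ts),
            "n": len(ts),
        }
    return summary
```

The median is used for response time because selection times tend to have a long tail of slow trials that would pull a mean upward.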

Gaze input vs Gesture input Infographic

techiny.com
