Gaze interaction in virtual reality enables users to navigate and select objects simply by looking at them, offering a hands-free and intuitive experience that reduces physical fatigue. Gesture interaction allows more expressive control through hand movements, providing a natural and immersive way to manipulate virtual environments, though it may require more space and can be less precise. Combining gaze and gesture interaction enhances user engagement by drawing on the strengths of each method for seamless and versatile VR experiences.

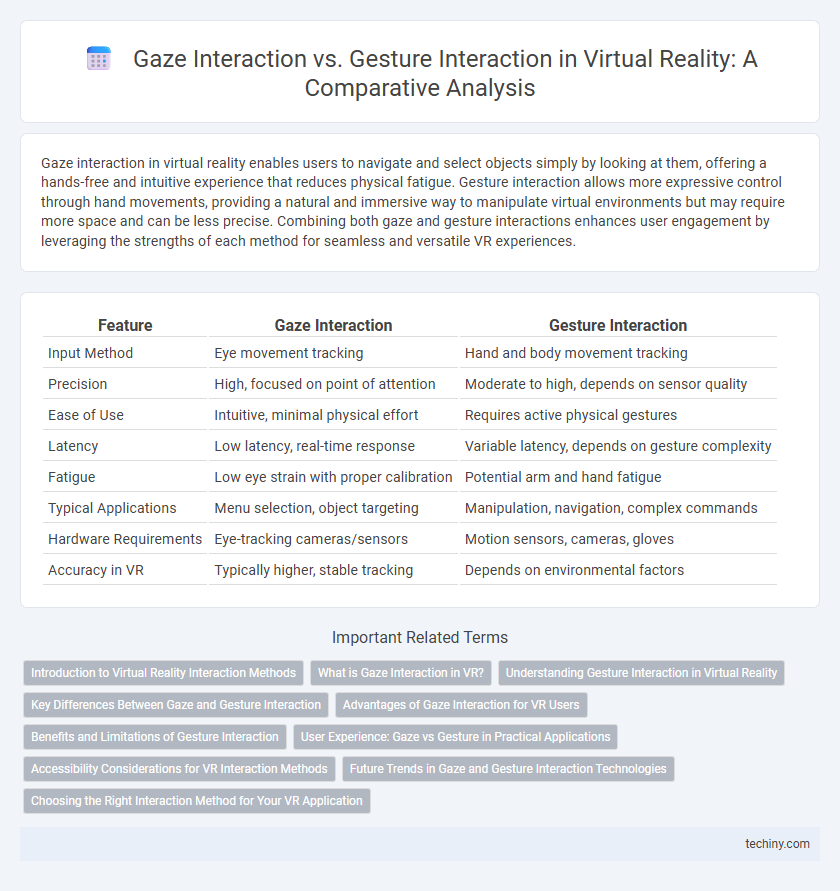

Table of Comparison

| Feature | Gaze Interaction | Gesture Interaction |
|---|---|---|
| Input Method | Eye movement tracking | Hand and body movement tracking |
| Precision | High, focused on point of attention | Moderate to high, depends on sensor quality |
| Ease of Use | Intuitive, minimal physical effort | Requires active physical gestures |
| Latency | Low latency, real-time response | Variable latency, depends on gesture complexity |
| Fatigue | Low eye strain with proper calibration | Potential arm and hand fatigue |
| Typical Applications | Menu selection, object targeting | Manipulation, navigation, complex commands |
| Hardware Requirements | Eye-tracking cameras/sensors | Motion sensors, cameras, gloves |
| Accuracy in VR | Typically higher, stable tracking | Depends on environmental factors |

Introduction to Virtual Reality Interaction Methods

Gaze interaction in virtual reality uses eye-tracking technology to let users select or manipulate objects simply by looking at them, offering intuitive, hands-free control. Gesture interaction uses motion sensors and cameras to recognize hand and body movements, allowing users to interact naturally within the VR environment. Together, these methods enhance immersion by providing input options suited to different use cases and user preferences.

What is Gaze Interaction in VR?

Gaze interaction in VR tracks the user's eye movements to enable hands-free navigation and selection within virtual environments. Eye-tracking sensors estimate where the user is looking, so the application can respond without physical controllers. Gaze interaction enhances immersion and accessibility by simplifying user input and reducing fatigue during extended VR sessions.
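One common way to turn "where the user is looking" into a selection is a dwell timer: an object is selected once the gaze ray has rested on it for a set time. The following is a minimal sketch of that idea, not any headset's actual API. The `Target` class, the spherical hit test, the `DwellSelector` name, and the 0.8-second default are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    """Hypothetical selectable object, approximated by a sphere."""
    name: str
    center: tuple  # (x, y, z) in metres, in the same space as the gaze ray
    radius: float


def gaze_hits(origin, direction, target):
    """Return True if the gaze ray passes within target.radius of its center."""
    # Normalise the direction so projections are distances in metres.
    length = math.sqrt(sum(c * c for c in direction))
    unit = [c / length for c in direction]
    to_center = [c - o for c, o in zip(target.center, origin)]
    # Distance along the ray to the point closest to the target center.
    along = sum(a * b for a, b in zip(to_center, unit))
    if along < 0:  # the target is behind the viewer
        return False
    closest = [o + along * u for o, u in zip(origin, unit)]
    gap_sq = sum((p - c) ** 2 for p, c in zip(closest, target.center))
    return gap_sq <= target.radius ** 2


class DwellSelector:
    """Fires one selection after the gaze rests on a target long enough."""

    def __init__(self, dwell_seconds=0.8):
        self.dwell_seconds = dwell_seconds
        self._current = None   # target currently under the gaze, if any
        self._since = 0.0      # timestamp when the gaze arrived on it
        self._fired = False    # prevents repeated selections while staring

    def update(self, timestamp, origin, direction, targets):
        """Feed one gaze sample; return a target name when it is selected."""
        # Takes the first target hit in list order, not the nearest one.
        hit = next((t for t in targets if gaze_hits(origin, direction, t)), None)
        if hit is not self._current:
            # Gaze moved to a different target (or to empty space): restart.
            self._current = hit
            self._since = timestamp
            self._fired = False
            return None
        dwelled = timestamp - self._since >= self.dwell_seconds
        if hit is not None and not self._fired and dwelled:
            self._fired = True
            return hit.name
        return None
```

In use, an application would call `update` once per frame with the latest gaze ray. A longer dwell time reduces accidental selections at the cost of slower interaction, so the value is usually worth exposing as a setting.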

Understanding Gesture Interaction in Virtual Reality

Gesture interaction in virtual reality enables users to manipulate digital environments through natural hand and body movements, enhancing immersion without relying on external controllers. Advanced sensors and machine learning algorithms decode precise gestures, allowing intuitive commands such as grabbing, pointing, and swiping. This interaction method reduces cognitive load by leveraging innate motor skills, improving user engagement and accessibility in VR applications.
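As an illustration of how a "grab" gesture might be decoded, the sketch below detects a pinch from the distance between the thumb tip and index fingertip. It assumes a hand tracker that already reports fingertip positions in metres. The `PinchDetector` name and the 2 cm and 4 cm thresholds are hypothetical, and production systems typically rely on learned models rather than a single distance check.

```python
import math


class PinchDetector:
    """Detects pinch start and end from two fingertip positions.

    Two thresholds (hysteresis) are used so that small tracking jitter
    around a single cut-off does not toggle the grab on and off.
    """

    def __init__(self, press_below=0.02, release_above=0.04):
        self.press_below = press_below      # metres; assumed value
        self.release_above = release_above  # metres; assumed value
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Feed one hand sample; return "grab", "release", or None."""
        gap = math.sqrt(sum((a - b) ** 2 for a, b in zip(thumb_tip, index_tip)))
        if not self.pinching and gap < self.press_below:
            self.pinching = True
            return "grab"
        if self.pinching and gap > self.release_above:
            self.pinching = False
            return "release"
        return None
```

The gap between the two thresholds is a design choice: a wider gap gives a steadier grab but requires the user to open the hand further to let go.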

Key Differences Between Gaze and Gesture Interaction

Gaze interaction uses eye-tracking technology to select and manipulate objects by focusing the user's gaze, offering hands-free control and reducing physical effort. Gesture interaction relies on hand and body movements detected by sensors to execute commands, providing more expressive and intuitive input. Key differences include the input modality (eye movement versus physical gestures) and the levels of precision, fatigue, and contextual applicability each method offers in virtual reality environments.

Advantages of Gaze Interaction for VR Users

Gaze interaction in virtual reality offers precise and intuitive control by tracking eye movement, allowing users to select objects with minimal physical effort and reducing hand fatigue during extended sessions. This method enhances accessibility for users with limited mobility or dexterity, providing a natural interface that improves immersion and comfort. Gaze interaction can also speed up selection and smooth navigation within VR environments because it removes the need for complex hand gestures.

Benefits and Limitations of Gesture Interaction

Gesture interaction in virtual reality offers intuitive control and natural communication by allowing users to manipulate objects and navigate environments without physical controllers. Benefits include enhanced immersion and reduced learning curves for new users since gestures mimic real-world actions. Limitations involve potential fatigue from prolonged arm movements, variability in gesture recognition accuracy, and constraints in complex command execution compared to more precise input devices.

User Experience: Gaze vs Gesture in Practical Applications

Gaze interaction offers intuitive, hands-free control in virtual reality by tracking users' eye movements for seamless selection and navigation, enhancing comfort during prolonged use. Gesture interaction enables natural, expressive communication through hand and body movements and provides immersive engagement in VR environments, but it may cause fatigue over extended sessions. In practical applications, gaze interaction excels in precision tasks and accessibility, while gesture interaction thrives in interactive gaming and creative design scenarios.

Accessibility Considerations for VR Interaction Methods

Gaze interaction in VR offers significant accessibility benefits by enabling users with limited hand mobility to navigate and select objects using eye movement, reducing physical strain. Gesture interaction, while intuitive for many, can pose challenges for individuals with motor impairments or limited dexterity, restricting their ability to engage fully. Incorporating customizable sensitivity settings and multimodal input options enhances accessibility, ensuring VR environments are usable for diverse user needs.
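A rough sketch of what customizable sensitivity and multimodal input options could look like in application code follows. Every name here (`AccessibilitySettings`, `available_inputs`, the default values, and the `"controller"` fallback) is a hypothetical illustration rather than part of any particular VR SDK.

```python
from dataclasses import dataclass


@dataclass
class AccessibilitySettings:
    """Per-user preferences; all fields and defaults are assumed examples."""
    dwell_seconds: float = 0.8        # longer values reduce accidental gaze selections
    pinch_threshold_m: float = 0.02   # larger values make pinches easier to trigger
    allow_gaze: bool = True
    allow_gesture: bool = True


def available_inputs(settings, has_eye_tracker, has_hand_tracker):
    """List the input modes that are both enabled by the user and supported."""
    modes = []
    if settings.allow_gaze and has_eye_tracker:
        modes.append("gaze")
    if settings.allow_gesture and has_hand_tracker:
        modes.append("gesture")
    # Assumed fallback so the user is never left without any input method.
    return modes or ["controller"]
```

For example, a user who finds gestures tiring could set `allow_gesture=False` and raise `dwell_seconds`, leaving the application to run on gaze alone where an eye tracker is present.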

Future Trends in Gaze and Gesture Interaction Technologies

Gaze interaction technology in virtual reality is advancing with enhanced eye-tracking accuracy and predictive algorithms, enabling more intuitive and seamless user experiences. Gesture interaction is evolving through improved sensor precision and machine learning models that support complex, natural hand movements for immersive control. Future trends indicate a convergence of gaze and gesture inputs, creating multi-modal interfaces that increase interaction efficiency and accessibility in virtual environments.
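One way such a multi-modal interface might combine the two inputs is "look to target, pinch to confirm": gaze decides which object is meant, and a hand gesture decides when to act. The sketch below assumes that upstream components already report the name of the gazed object and any pinch event each frame. The `Frame` and `GazePinchController` names and the event strings are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    """One frame of already-processed input (assumed format)."""
    gazed_target: Optional[str]  # name of the object under the gaze, or None
    pinch_event: Optional[str]   # "grab", "release", or None


class GazePinchController:
    """Gaze chooses the object; a pinch picks it up and a release drops it."""

    def __init__(self):
        self.held = None  # name of the object currently held, if any

    def update(self, frame):
        """Return ("select", name), ("drop", name), or None for this frame."""
        if frame.pinch_event == "grab" and self.held is None and frame.gazed_target:
            self.held = frame.gazed_target
            return ("select", self.held)
        if frame.pinch_event == "release" and self.held is not None:
            released, self.held = self.held, None
            return ("drop", released)
        return None
```

Splitting the roles this way avoids dwell delays for confirmation and keeps hand movements small, since the hand only signals intent rather than pointing.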

Choosing the Right Interaction Method for Your VR Application

Selecting the appropriate interaction method for a VR application hinges on user experience goals and technical requirements. Gaze interaction offers intuitive control through eye movements with reduced physical effort, while gesture interaction provides more natural, expressive input through hand motions. Gaze interaction excels in scenarios that demand minimal user fatigue and precise targeting and depends on eye-tracking hardware, whereas gesture interaction suits applications that require rich, dynamic input and haptic feedback integration. Evaluating factors such as latency, accuracy, hardware compatibility, and user accessibility is crucial for optimizing immersion and engagement in virtual environments.

techiny.com