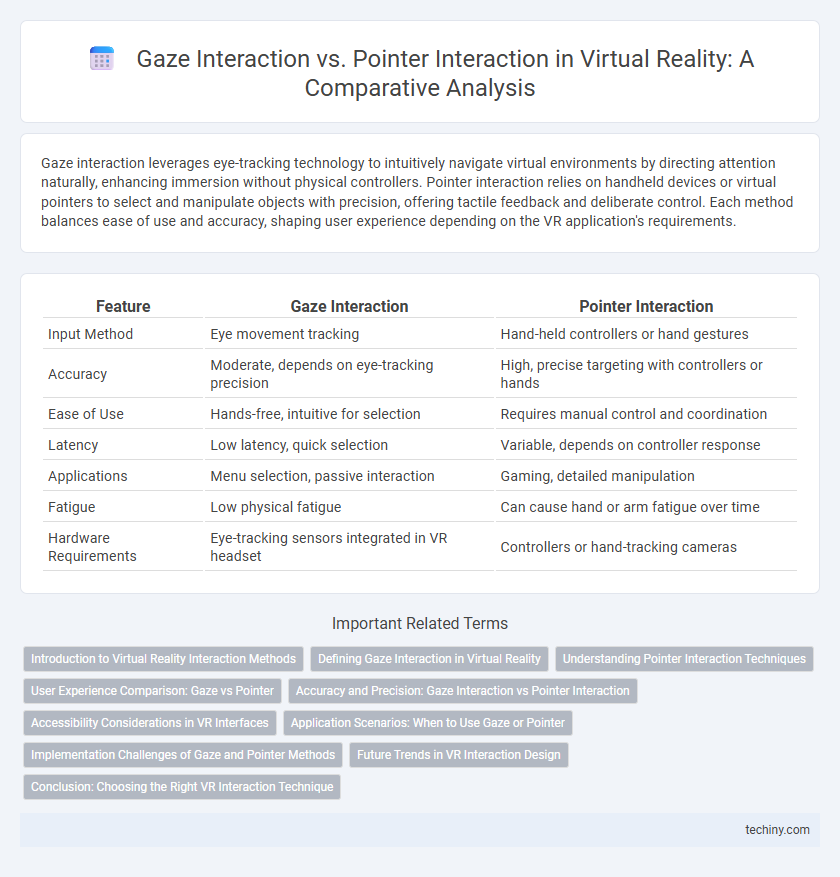

Gaze interaction uses eye tracking to let users target objects in a virtual environment simply by looking at them, which supports immersion and frees the hands from controllers. Pointer interaction uses handheld controllers or tracked hands to aim a virtual ray or cursor at objects, offering precise, deliberate selection and, with controllers, haptic feedback. Each method trades off ease of use against accuracy, so the better choice depends on the requirements of the VR application.

Comparison Table

| Feature | Gaze Interaction | Pointer Interaction |
|---|---|---|
| Input Method | Eye-movement tracking | Handheld controllers or hand gestures |
| Accuracy | Moderate; depends on eye-tracking precision | High; precise targeting with controllers or hands |
| Ease of Use | Hands-free and intuitive for selection | Requires manual control and coordination |
| Latency | Fast targeting, though dwell-based confirmation adds deliberate delay | Variable; depends on controller response |
| Applications | Menu selection, passive interaction | Gaming, detailed manipulation |
| Fatigue | Low physical fatigue; prolonged use may cause eye strain | Can cause hand or arm fatigue over time |
| Hardware Requirements | Eye-tracking sensors integrated into the VR headset | Controllers or hand-tracking cameras |

Introduction to Virtual Reality Interaction Methods

Gaze interaction uses eye tracking to let users control a virtual environment by looking at objects, reducing the need for physical controllers and enhancing immersion. Pointer interaction uses handheld devices or motion controllers to direct a virtual ray or cursor, offering precise selection but potentially limiting natural movement. Both methods play important roles in virtual reality, and the right balance between ease of use and accuracy depends on the application.

Defining Gaze Interaction in Virtual Reality

Gaze interaction in virtual reality uses the user's eye movements to control and navigate the virtual environment, enabling hands-free interaction. Eye-tracking sensors detect where the user is looking, so objects can be selected or activated by fixating on them; because the eyes move constantly, systems usually add a confirmation step such as a dwell timer or a separate button press. Compared with pointer interaction, which relies on handheld controllers or tracked hands to point and click, gaze interaction can feel more natural and intuitive, especially in scenarios that call for minimal physical input.
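Because the eyes move constantly, merely looking at an object should not always trigger it (a difficulty often called the Midas touch problem). A common remedy is dwell-time selection: an object is selected only after the gaze rests on it for a set duration. The sketch below assumes that an upstream eye-tracking hit test reports which target, if any, the gaze ray hits on each frame; `DwellSelector` and the 800 ms default are hypothetical illustrations, not any SDK's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellSelector:
    """Select a target once the gaze has rested on it for `dwell_ms`.

    Hypothetical helper for illustration; not part of any VR SDK.
    """

    dwell_ms: float = 800.0        # assumed threshold; tune per application
    _target: Optional[str] = None  # target currently under the gaze
    _elapsed: float = 0.0          # time the gaze has spent on that target

    def update(self, hit_target: Optional[str], dt_ms: float) -> Optional[str]:
        """Feed one frame of gaze hit-testing; return the target id on selection."""
        if hit_target != self._target:
            # Gaze moved to a different target (or to empty space): restart the timer.
            self._target = hit_target
            self._elapsed = 0.0
        if hit_target is None:
            return None
        self._elapsed += dt_ms
        if self._elapsed >= self.dwell_ms:
            # Restart so the same target is not re-selected on every later frame.
            self._elapsed = 0.0
            return hit_target
        return None


selector = DwellSelector(dwell_ms=800.0)
# Simulate ten 100 ms frames with the gaze resting on the same button.
events = [selector.update("play_button", 100.0) for _ in range(10)]
print(events)  # "play_button" appears once, on the eighth frame
```

Shorter dwell times speed up selection but increase accidental activations; longer ones are safer but slower, so the threshold is usually tuned per application.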

Understanding Pointer Interaction Techniques

Pointer interaction techniques in virtual reality use handheld controllers or tracked hands to aim a virtual ray or cursor, enabling precise selection and manipulation of objects in the 3D environment. Compared with gaze interaction, these methods offer greater accuracy and, with controllers, haptic feedback, making them well suited to complex tasks that require fine control. Understanding how pointer and gaze interaction differ in latency, ease of use, and user fatigue is critical for optimizing the VR user experience.
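Pointer selection is commonly implemented by ray casting: a ray is cast from the controller or tracked hand along its forward direction, and the nearest object it intersects becomes the target. The sketch below approximates each object with a bounding sphere, which is a simplifying assumption; game engines typically test the ray against colliders or meshes through their own physics APIs. The function names and scene objects are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
SceneObject = Tuple[str, Vec3, float]  # (name, bounding-sphere center, radius)


def ray_sphere_distance(origin: Vec3, direction: Vec3,
                        center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to its first hit on a sphere, or None."""
    oc = [c - o for c, o in zip(center, origin)]           # origin -> sphere center
    t_closest = sum(a * b for a, b in zip(oc, direction))  # closest approach along ray
    d2 = sum(a * a for a in oc) - t_closest ** 2           # squared miss distance
    r2 = radius * radius
    if d2 > r2:
        return None  # the ray passes outside the sphere
    half_chord = math.sqrt(r2 - d2)
    t_hit = t_closest - half_chord      # near intersection
    if t_hit < 0:
        t_hit = t_closest + half_chord  # origin is inside the sphere
    return t_hit if t_hit >= 0 else None  # None if the sphere is behind the ray


def pick(origin: Vec3, direction: Vec3,
         objects: Sequence[SceneObject]) -> Optional[str]:
    """Return the name of the nearest object hit by the pointer ray, if any."""
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0:
        raise ValueError("pointer direction must be non-zero")
    unit = tuple(d / length for d in direction)  # normalize the controller's forward vector
    best_name, best_t = None, math.inf
    for name, center, radius in objects:
        t = ray_sphere_distance(origin, unit, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name


scene = [
    ("near_cube", (0.0, 0.0, -2.0), 0.5),
    ("far_cube", (0.0, 0.0, -5.0), 0.5),
    ("side_cube", (3.0, 0.0, -2.0), 0.5),
]
# Controller at the origin pointing straight ahead (-Z in this convention).
print(pick((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene))  # near_cube occludes far_cube
```

Keeping only the nearest hit is what lets the pointer respect occlusion: an object hidden behind another cannot be selected through it.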

User Experience Comparison: Gaze vs Pointer

Gaze interaction in virtual reality offers an intuitive, hands-free user experience, using eye tracking to reduce physical effort. Pointer interaction, typically using handheld controllers, provides greater accuracy and haptic feedback, which improves precision in complex VR applications. In user-experience comparisons, gaze interaction tends to excel in speed and natural engagement, while pointer interaction delivers superior control and versatility for detailed manipulation.

Accuracy and Precision: Gaze Interaction vs Pointer Interaction

Gaze interaction in virtual reality uses eye tracking to enable hands-free control, offering rapid targeting but often struggling with precision because of small involuntary eye movements such as microsaccades and drift. Pointer interaction, using handheld controllers or tracked devices, typically delivers higher accuracy and steadier input through physical feedback and fine motor control. Studies report that pointer-based systems can achieve sub-degree precision, whereas gaze-based methods typically range from about one to three degrees of visual angle, which affects task performance in detailed virtual environments.
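The practical impact of these angular figures can be estimated with basic trigonometry: if a pointing estimate may be off by up to θ degrees at viewing distance d, a target needs a width of at least 2·d·tan(θ) to contain the estimate in every direction. The sketch below applies that formula; it ignores the statistical spread of tracker noise and head motion, and the error levels are illustrative rather than measured.

```python
import math


def min_target_width(angular_error_deg: float, distance_m: float) -> float:
    """Smallest target width (meters) that contains a pointing estimate that may
    be off by up to `angular_error_deg` in any direction at `distance_m`."""
    # The estimate can land anywhere within the error angle of the true line of
    # sight, so the target's half-width must span distance * tan(error).
    return 2.0 * distance_m * math.tan(math.radians(angular_error_deg))


# Illustrative error levels: sub-degree (pointer-like) versus 1-3 degrees (gaze-like).
for err in (0.5, 1.0, 3.0):
    width_cm = min_target_width(err, 1.0) * 100
    print(f"{err:.1f} deg error at 1 m -> {width_cm:.1f} cm")
```

At 1 m this gives roughly 1.7 cm for 0.5°, 3.5 cm for 1°, and 10.5 cm for 3°, which is one reason gaze-driven interfaces tend to use larger targets than controller-driven ones.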

Accessibility Considerations in VR Interfaces

Gaze interaction in VR offers hands-free control, improving accessibility for users with limited motor skills by letting them select objects with their eyes. Pointer interaction, while precise, can be difficult for people with fine motor impairments because it relies on handheld controllers or hand tracking. Offering gaze input alongside adjustable pointer settings, such as jitter smoothing or larger selection targets, makes VR interfaces more inclusive and reduces physical strain for users with diverse abilities.
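One example of an adjustable pointer setting is jitter smoothing, which can steady the ray for users with hand tremor at the cost of some lag. The sketch below applies a simple exponential moving average to a stream of pointer directions; the `alpha` parameter is an assumed, user-adjustable setting, and production systems often use adaptive filters such as the 1€ filter instead.

```python
import math
from typing import Iterable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def smooth_directions(samples: Iterable[Vec3], alpha: float = 0.2) -> List[Vec3]:
    """Exponentially smooth a stream of pointer directions.

    `alpha` is a hypothetical user setting: values near 0 give a steadier but
    laggier ray, and alpha = 1 disables smoothing.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[Vec3] = []
    state: Optional[Vec3] = None
    for sample in samples:
        if state is None:
            blended = sample  # start from the first raw sample
        else:
            # Weighted blend of the new sample and the previous smoothed value.
            blended = tuple(alpha * s + (1.0 - alpha) * p for s, p in zip(sample, state))
        # Re-normalize so the result stays a unit direction for ray casting.
        norm = math.sqrt(sum(c * c for c in blended))
        state = tuple(c / norm for c in blended)
        smoothed.append(state)
    return smoothed


# Simulated tremor: the ray flicks 0.05 units left and right every frame.
raw = [(0.05 if i % 2 == 0 else -0.05, 0.0, -1.0) for i in range(20)]
steady = smooth_directions(raw, alpha=0.2)
print(max(abs(d[0]) for d in steady[-10:]))  # well below the raw 0.05 swing
```

Exposing `alpha` as a user preference lets each person choose their own balance between steadiness and responsiveness.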

Application Scenarios: When to Use Gaze or Pointer

Gaze interaction excels in hands-free scenarios such as medical simulations or industrial training, where users need seamless control without handling physical devices; this enhances immersion and reduces fatigue. Pointer interaction is better suited to precision tasks such as 3D modeling or virtual object manipulation, where controllers or hand tracking provide exact targeting. The choice between gaze and pointer depends on how much the application values accuracy versus ease of use and user mobility.

Implementation Challenges of Gaze and Pointer Methods

Gaze interaction in virtual reality faces implementation challenges such as achieving precise eye-tracking calibration, minimizing latency, and accounting for the natural variability of eye movements. Pointer interaction requires robust controller tracking, ergonomic design, and accurate spatial mapping to prevent user fatigue and interaction errors. Both methods must cope with sensor inaccuracy and environmental interference while delivering a consistent user experience to achieve reliable interactivity in VR environments.
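One common way to handle natural eye-movement variability is to group raw gaze samples into fixations and act only on those, rather than on every noisy sample. The sketch below implements the classic dispersion-threshold identification (I-DT) algorithm; the `(time_ms, x_deg, y_deg)` sample format and the 1° / 100 ms defaults are illustrative assumptions, not values from any particular headset SDK.

```python
from typing import List, Tuple

Sample = Tuple[float, float, float]           # (time_ms, x_deg, y_deg)
Fixation = Tuple[float, float, float, float]  # (start_ms, end_ms, x_deg, y_deg)


def _dispersion(window: List[Sample]) -> float:
    """Horizontal plus vertical spread of the samples, in degrees."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: List[Sample], max_dispersion_deg: float = 1.0,
                     min_duration_ms: float = 100.0) -> List[Fixation]:
    """Dispersion-threshold (I-DT) fixation detection over time-ordered samples."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Grow an initial window that spans at least the minimum duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # too few samples left to form another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion_deg:
            # Extend the window while the points stay tightly clustered.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion_deg:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1
        else:
            i += 1  # drop the first sample (likely part of a saccade) and retry
    return fixations


# Synthetic 100 Hz gaze trace: 200 ms on one spot, then a jump to another.
trace = [(t * 10.0, 0.1 * (t % 2), 0.0) for t in range(20)]
trace += [(t * 10.0, 10.0 + 0.1 * (t % 2), 5.0) for t in range(20, 40)]
for start, end, x, y in detect_fixations(trace):
    print(f"fixation {start:.0f}-{end:.0f} ms at ({x:.2f}, {y:.2f}) deg")
```

The dispersion threshold trades robustness against responsiveness: a larger value tolerates more tracker noise but can merge nearby targets into one fixation.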

Future Trends in VR Interaction Design

Gaze interaction in virtual reality supports intuitive, hands-free navigation through eye tracking, while pointer interaction relies on controllers or hand tracking for precise object manipulation. Current trends point toward a convergence of these methods, pairing AI-powered gaze prediction with advanced haptic feedback to increase immersion and reduce user fatigue. Emerging VR systems are likely to favor multimodal interaction frameworks that combine gaze, gestures, and voice commands into seamless, context-aware environments.

Conclusion: Choosing the Right VR Interaction Technique

Gaze interaction offers an intuitive, hands-free experience well suited to applications that require minimal physical input and quick selection, improving user comfort in VR environments. Pointer interaction provides precise control for complex tasks that demand accuracy and detailed manipulation, such as VR design and gaming. The choice between gaze and pointer interaction depends on the application's complexity, user preferences, and required precision; matching the technique to these factors produces an optimized, immersive user experience.

Gaze Interaction vs Pointer Interaction Infographic

techiny.com
