Activity recognition in wearable technology for pets focuses on identifying broad patterns such as walking, running, or resting to monitor overall health and fitness. Gesture recognition, on the other hand, detects specific movements like paw lifts or tail wags, enabling more precise interaction and behavior analysis. Both technologies enhance pet care by providing detailed insights into physical activity and emotional states.

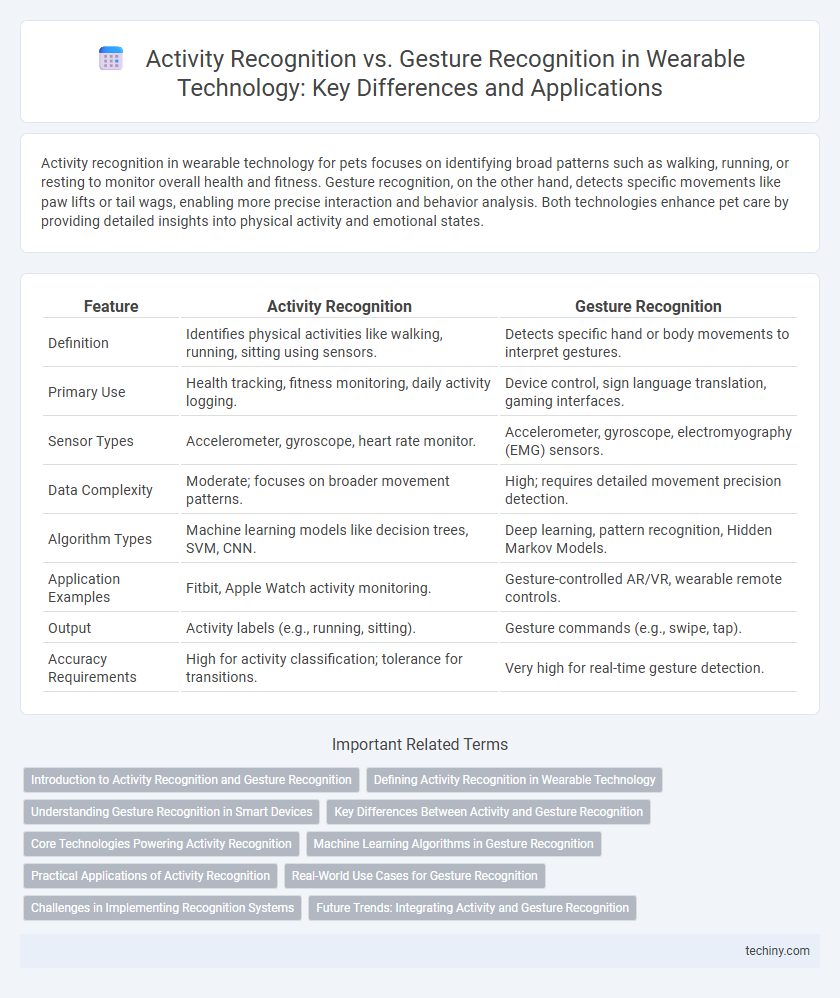

Table of Comparison

| Feature | Activity Recognition | Gesture Recognition |
|---|---|---|
| Definition | Identifies physical activities such as walking, running, or sitting from sensor data. | Detects specific hand or body movements and interprets them as gestures. |
| Primary Use | Health tracking, fitness monitoring, daily activity logging. | Device control, sign language translation, gaming interfaces. |
| Sensor Types | Accelerometer, gyroscope, heart rate monitor. | Accelerometer, gyroscope, electromyography (EMG) sensors. |
| Data Complexity | Moderate; focuses on broader movement patterns. | High; requires precise detection of fine-grained movements. |
| Algorithm Types | Machine learning models such as decision trees, SVMs, and CNNs. | Deep learning, pattern recognition, Hidden Markov Models (HMMs). |
| Application Examples | Fitbit and Apple Watch activity monitoring. | Gesture-controlled AR/VR, wearable remote controls. |
| Output | Activity labels (e.g., running, sitting). | Gesture commands (e.g., swipe, tap). |
| Accuracy Requirements | High for activity classification, with some tolerance at transitions between activities. | Very high, since gestures must be detected in real time. |

Introduction to Activity Recognition and Gesture Recognition

Activity recognition involves identifying physical movements and patterns using wearable sensors to monitor daily activities such as walking, running, or sitting, enabling comprehensive health and fitness tracking. Gesture recognition focuses on interpreting specific hand or body gestures, often relying on accelerometers and gyroscopes within wearables to facilitate intuitive human-computer interaction. Both technologies leverage machine learning algorithms to enhance accuracy and user experience in real-time applications.

Defining Activity Recognition in Wearable Technology

Activity recognition in wearable technology involves the continuous monitoring and analysis of sensor data to identify specific physical actions or movements, such as walking, running, or cycling. This technology leverages accelerometers, gyroscopes, and other sensors embedded in devices like smartwatches and fitness trackers to provide real-time activity classification. Accurate activity recognition enhances personalized fitness tracking, health monitoring, and context-aware applications by understanding user behavior patterns.
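The continuous classification step described above can be sketched in Python. This is a minimal illustration, assuming a 3-axis accelerometer stream in units of g; the window size, step, and the `REST_MAX_STD` / `WALK_MAX_STD` thresholds are hypothetical values chosen for the example, and a real tracker would learn a classifier from labelled data rather than rely on fixed rules.

```python
import math
from statistics import mean, pstdev


def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer sample, in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def sliding_windows(samples, size, step):
    """Yield overlapping fixed-length windows over a sample stream."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def window_features(window):
    """Mean and population standard deviation of acceleration magnitude."""
    mags = [magnitude(s) for s in window]
    return mean(mags), pstdev(mags)


# Hypothetical thresholds on magnitude variability (in g), for illustration only.
REST_MAX_STD = 0.05
WALK_MAX_STD = 0.5


def classify_window(window):
    """Rule-based stand-in for a trained activity classifier."""
    _, std = window_features(window)
    if std < REST_MAX_STD:
        return "resting"
    if std < WALK_MAX_STD:
        return "walking"
    return "running"


def recognise_activity(samples, size=50, step=25):
    """Label every window of a continuous stream with an activity."""
    return [classify_window(w) for w in sliding_windows(samples, size, step)]
```

Overlapping windows keep the output continuous: every window receives a label, which matches the ongoing-behavior framing of activity recognition.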

Understanding Gesture Recognition in Smart Devices

Gesture recognition in smart devices enables intuitive user interaction by interpreting specific hand or body movements through sensors like accelerometers and gyroscopes. Unlike general activity recognition that monitors broad patterns such as walking or running, gesture recognition focuses on detecting precise motions to execute commands or control applications. This technology enhances user experience in wearable devices by providing seamless, hands-free operation tailored to individual gestures.
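One simple way to match a precise motion against known gestures is template matching with dynamic time warping (DTW), which tolerates the same gesture being performed faster or slower. The sketch below assumes a single 1-D motion channel (for example, one gyroscope axis); the gesture names, the values in `TEMPLATES`, and the `max_distance` cutoff are hypothetical.

```python
import math

# Hypothetical gesture templates on one motion axis (illustrative values only).
TEMPLATES = {
    "flick": [0, 1, 3, 1, 0],
    "double_tap": [0, 3, 0, 3, 0],
}


def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sequences."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: stretch a, stretch b, or step both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognise_gesture(signal, templates, max_distance):
    """Return the closest template's name, or None if nothing is close enough."""
    best_name, best_dist = None, math.inf
    for name, template in templates.items():
        dist = dtw_distance(signal, template)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None
```

Returning `None` when no template is close enough matters for gesture input: an unrecognized movement should trigger nothing rather than the least-bad match.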

Key Differences Between Activity and Gesture Recognition

Activity recognition in wearable technology involves identifying ongoing physical activities such as walking, running, or cycling using sensors like accelerometers and gyroscopes, while gesture recognition focuses on interpreting specific hand or body movements to control devices or input commands. Activity recognition processes continuous data patterns over time to classify behaviors, whereas gesture recognition detects discrete, intentional movements with precise start and end points. Key differences include the scope of motion detected, data granularity, and application purposes, with activity recognition targeting broader behavior monitoring and gesture recognition emphasizing interaction and control.
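The contrast between continuous windows and discrete start and end points can be made concrete with a segmentation step. This sketch assumes a precomputed per-sample motion energy (for instance, how far acceleration magnitude deviates from 1 g); `threshold` and `min_length` are hypothetical tuning parameters.

```python
def segment_gestures(energy, threshold, min_length):
    """Return (start, end) index pairs where energy stays above threshold.

    `end` is exclusive. Bursts shorter than `min_length` samples are
    discarded as noise rather than reported as gestures.
    """
    segments = []
    start = None
    for i, value in enumerate(energy):
        if value > threshold and start is None:
            start = i  # candidate gesture begins
        elif value <= threshold and start is not None:
            if i - start >= min_length:
                segments.append((start, i))
            start = None  # gesture (or noise burst) ended
    # Close a gesture that is still active at the end of the buffer.
    if start is not None and len(energy) - start >= min_length:
        segments.append((start, len(energy)))
    return segments
```

Each returned segment can then be passed to a gesture classifier on its own, whereas an activity recognizer labels every window regardless of where movements begin or end.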

Core Technologies Powering Activity Recognition

Activity recognition in wearable technology relies heavily on core technologies such as accelerometers, gyroscopes, and magnetometers embedded in devices to capture motion data with high precision. Advanced machine learning algorithms, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs), process sensor data to accurately distinguish between activities like walking, running, and cycling. These technologies enable continuous, real-time monitoring of physical activities, enhancing fitness tracking and healthcare applications.
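To show what a CNN layer does with raw sensor data, here is a minimal pure-Python sketch of a single 1-D convolution filter followed by ReLU and global max pooling. As in most deep learning libraries, the "convolution" is computed as a cross-correlation. The kernel `[-1, 1]` is a hand-picked step detector used only for illustration; in a trained network the kernel weights are learned from data.

```python
def conv1d(signal, kernel, bias=0.0):
    """Valid (no padding) 1-D cross-correlation, as computed by a CNN layer."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def conv_feature(signal, kernel, bias=0.0):
    """Convolution -> ReLU -> global max pooling: one feature per kernel."""
    return max(relu(conv1d(signal, kernel, bias)))
```

A real network stacks many such kernels over several sensor channels, so each layer outputs a vector of features rather than the single value computed here.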

Machine Learning Algorithms in Gesture Recognition

Gesture recognition in wearable technology relies heavily on machine learning algorithms such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to interpret complex hand and body movements accurately. These algorithms analyze data from accelerometers, gyroscopes, and electromyography (EMG) sensors to classify gestures in real time, enabling intuitive user interaction. Support vector machines (SVMs) and long short-term memory (LSTM) networks, an RNN variant suited to longer motion sequences, are also commonly used to improve recognition accuracy and adapt to individual user patterns.
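As an illustration of the SVM approach, the sketch below trains a linear SVM by full-batch subgradient descent on the L2-regularized hinge loss. It is a deliberately simple stand-in for the kernel SVM solvers found in libraries such as LIBSVM or scikit-learn, and it assumes gesture feature vectors have already been extracted and labelled +1 or -1; the hyperparameter defaults are hypothetical.

```python
def train_linear_svm(samples, labels, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))).

    `labels` must contain only +1 and -1.
    """
    dim = len(samples[0])
    n = len(samples)
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularizer
        grad_b = 0.0
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # hinge loss is active for this sample
                for k in range(dim):
                    grad_w[k] -= y * x[k] / n
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1
```

A linear model keeps inference to one dot product per gesture class, which is attractive on a wearable; kernel SVMs and LSTMs trade that simplicity for the ability to capture more complex patterns.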

Practical Applications of Activity Recognition

Activity recognition in wearable technology enables precise monitoring of daily physical activities such as walking, running, and cycling, providing valuable data for fitness tracking and health management. It supports personalized workout recommendations and real-time health alerts by detecting patterns and anomalies in user movement. Practical applications extend to rehabilitation monitoring, elder care fall detection, and workplace ergonomics optimization, enhancing safety and well-being.
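Fall detection is often described as a free-fall phase (acceleration magnitude well below 1 g) followed shortly by an impact spike. The sketch below implements that two-stage rule over (x, y, z) accelerometer samples in g; `FREE_FALL_G`, `IMPACT_G`, and `MAX_GAP` are hypothetical thresholds, not values from any particular product.

```python
import math

FREE_FALL_G = 0.4  # hypothetical: magnitude below this suggests free fall
IMPACT_G = 2.5     # hypothetical: magnitude above this suggests an impact
MAX_GAP = 20       # hypothetical: max samples between free fall and impact


def detect_fall(samples):
    """Return the index of the impact sample if a free-fall phase is
    followed by an impact within MAX_GAP samples, else None."""
    last_free_fall = None
    for i, (x, y, z) in enumerate(samples):
        mag = math.sqrt(x * x + y * y + z * z)
        if mag < FREE_FALL_G:
            last_free_fall = i
        elif (mag > IMPACT_G and last_free_fall is not None
              and i - last_free_fall <= MAX_GAP):
            return i
    return None
```

Requiring the free-fall phase first keeps an ordinary jolt, such as bumping the wrist on a table, from raising an alert; a deployed system could add further checks, for example for inactivity after the impact.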

Real-World Use Cases for Gesture Recognition

Gesture recognition in wearable technology enables intuitive control of devices in real-world scenarios such as hands-free navigation of smart glasses, touchless operation of medical equipment by surgeons, and seamless interaction with augmented reality applications during sports or gaming. Unlike activity recognition, which classifies broader movements or behaviors, gesture recognition detects specific hand or finger movements, facilitating precise command inputs even in dynamic environments. Real-world deployment of gesture recognition enhances accessibility, safety, and user experience by translating natural gestures into digital commands without the need for external controllers or complex interfaces.
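Translating gestures into commands usually needs some guarding so that uncertain or repeated detections do not fire actions. The sketch below assumes an upstream recognizer that emits a gesture name, a confidence score, and a timestamp in seconds; the `GestureDispatcher` class, its default thresholds, and any gesture or command names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class GestureDispatcher:
    """Map recognized gestures to commands, ignoring low-confidence
    detections and repeats of the same gesture within a cooldown."""
    min_confidence: float = 0.8  # hypothetical acceptance threshold
    cooldown_s: float = 0.5      # hypothetical debounce interval
    commands: Dict[str, Callable[[], str]] = field(default_factory=dict)
    _last_fired: Dict[str, float] = field(default_factory=dict, init=False, repr=False)

    def register(self, gesture: str, command: Callable[[], str]) -> None:
        self.commands[gesture] = command

    def handle(self, gesture: str, confidence: float, timestamp: float) -> Optional[str]:
        if confidence < self.min_confidence or gesture not in self.commands:
            return None
        last = self._last_fired.get(gesture)
        if last is not None and timestamp - last < self.cooldown_s:
            return None  # same gesture seen too recently: treat as a repeat
        self._last_fired[gesture] = timestamp
        return self.commands[gesture]()
```

For example, after `register("swipe_left", lambda: "previous_slide")`, a confident swipe returns `"previous_slide"`, while a second swipe 0.2 s later is ignored.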

Challenges in Implementing Recognition Systems

Activity recognition and gesture recognition in wearable technology face challenges such as varying user behaviors, sensor noise, and limited computing power. Distinguishing complex activities or subtle gestures requires machine learning algorithms capable of processing multimodal sensor data in real time. Ensuring accuracy and reliability while keeping power consumption low and the user experience seamless remains a critical obstacle in implementation.
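Sensor noise is commonly reduced with a low-pass filter before features are extracted. An exponential moving average is one of the cheapest options, keeping a single state value per channel, which suits power-constrained devices. The smoothing factor `alpha` is an assumption to be tuned: smaller values suppress more noise but respond more slowly to genuine movement.

```python
def exponential_smoothing(samples, alpha):
    """First-order low-pass filter: y[n] = alpha * x[n] + (1 - alpha) * y[n-1].

    The first output equals the first input, so the filter starts
    without a warm-up transient from zero.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    prev = None
    for x in samples:
        prev = x if prev is None else alpha * x + (1.0 - alpha) * prev
        smoothed.append(prev)
    return smoothed
```

With `alpha=0.2`, a single 10-unit spike in an otherwise flat signal is reduced to a peak of 2.0, which illustrates the trade-off: brief noise is damped, but so is the onset of a quick gesture.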

Future Trends: Integrating Activity and Gesture Recognition

Future trends in wearable technology emphasize the integration of activity recognition and gesture recognition to create more intuitive and responsive devices. Combining these capabilities enables seamless interaction by accurately interpreting complex user movements and contextual behaviors in real time. Advanced machine learning algorithms and sensor fusion techniques drive this integration, enhancing personalization and expanding applications in healthcare, sports, and augmented reality.
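Sensor fusion can be illustrated with a complementary filter, a widely used lightweight technique (Kalman filters are a heavier alternative) for combining a gyroscope and an accelerometer into one orientation estimate. This sketch assumes the accelerometer reports (x, y, z) in g and the gyroscope reports pitch rate in rad/s, and it uses one common pitch convention; the blend factor `alpha=0.98` is a typical but hypothetical choice.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle (radians) implied by the gravity vector in an accelerometer reading."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(accel, gyro_rate, dt, alpha=0.98):
    """Fuse accelerometer pitch with integrated gyroscope pitch rate.

    The gyroscope term gives smooth short-term tracking; the small
    accelerometer term pulls the estimate back toward gravity, which
    bounds the drift that pure integration would accumulate.
    """
    pitch = accel_pitch(*accel[0])  # initialize from the first reading
    estimates = []
    for (ax, ay, az), rate in zip(accel, gyro_rate):
        gyro_estimate = pitch + rate * dt
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, ay, az)
        estimates.append(pitch)
    return estimates
```

With a stationary, level device and a constant gyroscope bias of 0.01 rad/s sampled at 100 Hz, pure integration would drift by 0.1 rad over 10 s, while this filter settles at roughly 0.005 rad.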

Activity Recognition vs Gesture Recognition Infographic

techiny.com