Gesture control in wearable technology offers hands-free interaction, improving convenience and hygiene while reducing physical strain. Touchscreen control provides precise input and immediate feedback, making it well suited to detailed commands and customizable settings. Both methods improve the user experience, but gesture control excels in dynamic environments where quick, contactless responses are essential.

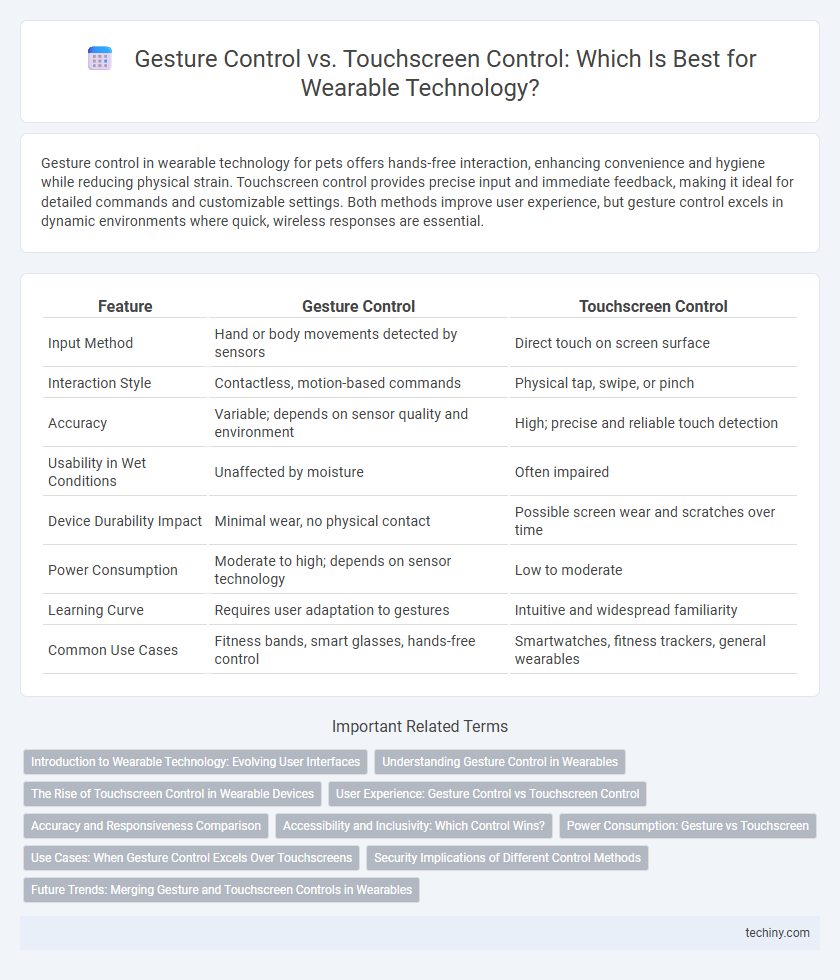

Table of Comparison

| Feature | Gesture Control | Touchscreen Control |
|---|---|---|
| Input Method | Hand or body movements detected by sensors | Direct touch on screen surface |
| Interaction Style | Contactless, motion-based commands | Physical tap, swipe, or pinch |
| Accuracy | Variable; depends on sensor quality and environment | High; precise and reliable touch detection |
| Usability in Wet Conditions | Unaffected by moisture | Often impaired |
| Device Durability Impact | Minimal wear, no physical contact | Possible screen wear and scratches over time |
| Power Consumption | Moderate to high; depends on sensor technology | Low to moderate |
| Learning Curve | Requires user adaptation to gestures | Intuitive and widespread familiarity |
| Common Use Cases | Fitness bands, smart glasses, hands-free control | Smartwatches, fitness trackers, general wearables |

Introduction to Wearable Technology: Evolving User Interfaces

Gesture control and touchscreen control represent two pivotal user interface technologies in wearable devices, enhancing hands-free interaction and precision input, respectively. Gesture control leverages motion sensors and cameras to detect user movements, offering an intuitive and seamless experience, particularly in fitness trackers and smart glasses. Touchscreen control remains a dominant method, utilizing capacitive or resistive screens to provide direct tactile feedback, crucial for smartwatches and health monitors.

Understanding Gesture Control in Wearables

Gesture control in wearable technology relies on advanced sensors like accelerometers, gyroscopes, and cameras to interpret user movements, enabling hands-free interaction. This method enhances user experience by providing intuitive navigation and reducing physical contact with devices, particularly beneficial in hygiene-sensitive or active environments. Compared to touchscreen control, gesture recognition offers greater accessibility and freedom of motion, making it integral to the future of wearable interfaces.
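To make the sensor-based approach concrete, here is a minimal sketch of how a wearable might recognize a simple "shake" gesture from accelerometer readings. It is an illustration under stated assumptions, not how any particular device works: the function names, the threshold of 1.8 times gravity, and the two-peak rule are all hypothetical choices, and real products typically add filtering and trained classifiers on top.

```python
import math

GRAVITY = 9.81  # m/s^2, roughly what a resting accelerometer reports

def acceleration_magnitude(sample):
    """Return the length of an (x, y, z) acceleration vector."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)

def count_motion_peaks(samples, threshold=1.8 * GRAVITY):
    """Count how many times the magnitude rises above the threshold.

    Only rising edges are counted, so one sustained burst of motion
    counts as a single peak. The threshold is an assumed value.
    """
    peaks = 0
    above = False
    for sample in samples:
        is_above = acceleration_magnitude(sample) > threshold
        if is_above and not above:
            peaks += 1
        above = is_above
    return peaks

def is_shake_gesture(samples, min_peaks=2):
    """Treat two or more separate peaks as a shake (hypothetical rule)."""
    return count_motion_peaks(samples) >= min_peaks

if __name__ == "__main__":
    resting = [(0.0, 0.0, 9.81)] * 5
    shaking = [
        (0.0, 0.0, 9.81),
        (20.0, 0.0, 9.81),
        (0.0, 0.0, 9.81),
        (-20.0, 0.0, 9.81),
        (0.0, 0.0, 9.81),
    ]
    print("resting:", is_shake_gesture(resting))
    print("shaking:", is_shake_gesture(shaking))
```

Counting rising edges rather than individual samples keeps a single long movement from being mistaken for repeated gestures, which is one simple way to reduce false triggers.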

The Rise of Touchscreen Control in Wearable Devices

Touchscreen control has rapidly become the dominant interface in wearable technology due to its intuitive design and direct manipulation capabilities. Advances in capacitive touch sensors and high-resolution displays enable precise input and responsive feedback on compact, power-efficient devices such as smartwatches and fitness trackers. This rise is further driven by user preference for seamless interaction and the integration of multi-touch gestures, which enhance functionality and accessibility in wearables.

User Experience: Gesture Control vs Touchscreen Control

Gesture control in wearable technology offers a hands-free, intuitive user experience by recognizing natural hand movements, enhancing accessibility during physical activities or when touch is impractical. Touchscreen control provides precise input and immediate feedback, favored for detailed navigation and direct interaction on small wearable displays. User preference often depends on context: gesture control excels in convenience and accessibility, while touchscreen control ensures accuracy and tactile responsiveness.

Accuracy and Responsiveness Comparison

Gesture control in wearable technology offers intuitive interaction but often lags behind touchscreen control in accuracy due to sensor limitations and environmental interference. Touchscreen control delivers higher precision and faster responsiveness, benefiting from direct contact and refined haptic feedback systems. Advanced wearables integrate both methods to balance seamless user experience with reliable input accuracy and responsiveness.

Accessibility and Inclusivity: Which Control Wins?

Gesture control in wearable technology enhances accessibility for users with limited dexterity or visual impairments by enabling hands-free operation through motion sensing. Touchscreen control, while intuitive for many, may exclude individuals with fine motor skill challenges or those who rely on assistive tools. Overall, gesture control provides a more inclusive interface, accommodating diverse abilities and creating broader access to wearable devices.

Power Consumption: Gesture vs Touchscreen

Gesture control can consume less power than touchscreen control when it relies on low-power motion sensors, because it avoids keeping the display lit and continuously scanning the touch panel. Touchscreens require display illumination and frequent capacitive sensing, which drains battery life quickly in compact devices. Camera-based or always-on gesture recognition, by contrast, can draw more power than a touchscreen, which is why overall consumption depends heavily on the sensor technology. Efficient infrared or motion-sensor-based systems limit this cost by activating only on specific movements, extending wearable battery life.
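The effect of activating a component only on specific movements can be estimated with a simple duty-cycle calculation. The sketch below is a rough model, not a measurement: the function names are hypothetical, and the current and capacity figures in the example are placeholder values chosen only to show the arithmetic.

```python
def average_current_ma(active_ma, idle_ma, active_fraction):
    """Average current draw for a component that is active part of the time.

    active_fraction is the share of time spent active, from 0.0 to 1.0.
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0.0 and 1.0")
    return active_ma * active_fraction + idle_ma * (1.0 - active_fraction)

def battery_life_hours(capacity_mah, avg_ma):
    """Estimated runtime, ignoring battery aging and other loads."""
    if avg_ma <= 0:
        raise ValueError("avg_ma must be positive")
    return capacity_mah / avg_ma

if __name__ == "__main__":
    # Placeholder numbers for illustration only, not measured values.
    capacity_mah = 300.0
    for active_fraction in (1.0, 0.5, 0.05):
        avg = average_current_ma(
            active_ma=10.0, idle_ma=0.1, active_fraction=active_fraction
        )
        hours = battery_life_hours(capacity_mah, avg)
        print(f"active {active_fraction:.0%}: {avg:.2f} mA, {hours:.0f} h")
```

The same formula applies to a display or a gesture sensor. What matters is how large the active current is and how small the active fraction can be kept.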

Use Cases: When Gesture Control Excels Over Touchscreens

Gesture control excels in wearable technology applications requiring hands-free operation, such as fitness tracking during intense workouts or medical monitoring where hygiene is critical. It enables users to navigate interfaces without physical contact, enhancing usability in environments where touchscreens may be impractical or unresponsive due to sweat, gloves, or dirt. Gesture-based controls improve safety and convenience in scenarios like cycling or industrial work, where maintaining physical interaction with devices is challenging.

Security Implications of Different Control Methods

Gesture control reduces physical contact with the device, so it leaves no fingerprint residue on the screen for an attacker to exploit. However, gesture recognition systems may be vulnerable to spoofing attacks if they are not paired with stronger verification such as biometrics. Touchscreen controls support familiar protected input such as PINs and unlock patterns but remain susceptible to shoulder surfing and smudge attacks that can compromise user security.

Future Trends: Merging Gesture and Touchscreen Controls in Wearables

Gesture control and touchscreen control are increasingly merging in wearable technology, enhancing user interaction by combining intuitive touch sensitivity with dynamic gesture recognition. Advances in AI-driven sensors and machine learning algorithms enable wearables to interpret complex hand movements alongside precise touch inputs, creating more seamless and adaptive user experiences. Future trends point toward hybrid interfaces that leverage contextual awareness and haptic feedback, enabling wearables to offer more natural, efficient control methods in health monitoring, augmented reality, and smart device integration.
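One way to picture a context-aware hybrid interface is as a small rule set that picks the preferred input method from the current situation. The sketch below is purely illustrative: the `Context` fields and the rule order are assumptions drawn from the trade-offs described in this article, not a description of any shipping product, which would more likely rely on sensor fusion and learned models.

```python
from dataclasses import dataclass

@dataclass
class Context:
    """Hypothetical snapshot of the conditions a wearable has detected."""
    screen_wet: bool = False
    wearing_gloves: bool = False
    user_moving: bool = False
    needs_precise_input: bool = False

def choose_input_mode(ctx):
    """Return "gesture" or "touch" for the given context.

    Rule order (an assumption): conditions that impair touch win first,
    then the need for precision, then physical activity.
    """
    if ctx.screen_wet or ctx.wearing_gloves:
        return "gesture"  # touch is often impaired by moisture or gloves
    if ctx.needs_precise_input:
        return "touch"    # touch gives more accurate, detailed input
    if ctx.user_moving:
        return "gesture"  # hands-free control suits active use
    return "touch"        # default to the more familiar method

if __name__ == "__main__":
    print(choose_input_mode(Context()))
    print(choose_input_mode(Context(user_moving=True)))
    print(choose_input_mode(Context(screen_wet=True, needs_precise_input=True)))
```

Keeping the rules in one function makes the priority order explicit, so it is easy to see what happens when two conditions conflict.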

Gesture Control vs Touchscreen Control Infographic

techiny.com
