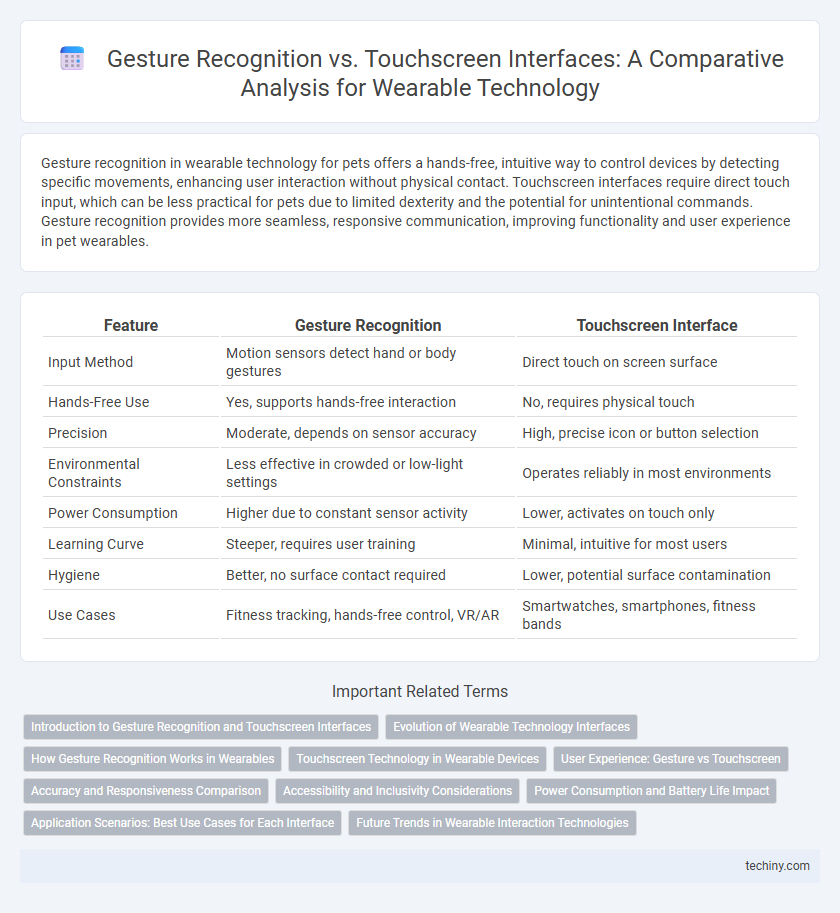

Gesture recognition in wearable technology offers a hands-free, intuitive way to control devices by detecting specific movements, so users can interact without touching the device. Touchscreen interfaces require direct touch input, which can be less practical on small wearable screens or when the user's hands are busy, and which can register unintended commands. In these situations gesture recognition can provide more seamless, responsive communication, improving functionality and user experience in wearables.

Comparison Table

| Feature | Gesture Recognition | Touchscreen Interface |
|---|---|---|
| Input Method | Motion sensors detect hand or body gestures | Direct touch on the screen surface |
| Hands-Free Use | Yes, supports hands-free interaction | No, requires physical touch |
| Precision | Moderate, depends on sensor accuracy | High, precise icon or button selection |
| Environmental Constraints | Camera-based systems are less effective in crowded or low-light settings | Reliable in most environments, though water or gloves can interfere |
| Power Consumption | Often higher, since motion sensing runs continuously | Lower for input sensing, mostly idle until contact |
| Learning Curve | Steeper, users must learn the gestures | Minimal, intuitive for most users |
| Hygiene | Higher, no surface contact required | Lower, potential surface contamination |
| Use Cases | Fitness tracking, hands-free control, VR/AR | Smartwatches, smartphones, fitness bands |

Introduction to Gesture Recognition and Touchscreen Interfaces

Gesture recognition enables devices to interpret human motion as input, using sensors such as accelerometers, gyroscopes, and cameras to track hand or body movements. Touchscreen interfaces rely on capacitive or resistive sensing to detect finger or stylus contact, allowing direct manipulation of on-screen elements through taps, swipes, and pinches. Both technologies improve interaction with wearable devices: gesture recognition offers hands-free control, while touchscreens offer direct manipulation with immediate visual feedback.

Evolution of Wearable Technology Interfaces

Gesture recognition and touchscreen interfaces are two significant milestones in the evolution of wearable interfaces. Gesture recognition uses sensors and machine learning algorithms to interpret user movements, offering hands-free control and improving accessibility in smartwatches and AR glasses. Touchscreen interfaces, built on capacitive or resistive sensing, provide direct interaction, enabling intuitive navigation and quick input on devices such as smartwatches and fitness trackers.

How Gesture Recognition Works in Wearables

Gesture recognition in wearable technology uses motion sensors such as accelerometers and gyroscopes, and in some devices infrared or optical sensors, to detect and interpret user movements. These sensors stream real-time data about hand or body motion, which is typically split into short time windows and processed by machine learning algorithms that map each movement to a specific command. Unlike touchscreen interfaces, gesture recognition enables hands-free control, which helps in situations where touching the device is impractical.
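As a minimal sketch of that pipeline, the Python below splits a stream of accelerometer samples into overlapping windows, reduces each window to two simple features, and labels it with a nearest-centroid classifier. The gesture labels, features, window sizes and centroid values are hypothetical stand-ins for what a trained model would learn; real systems usually add gyroscope data and richer models.

```python
import math
from statistics import mean, pstdev
from typing import Dict, Iterator, List, Tuple

# One 3-axis accelerometer sample (x, y, z), in units of g.
Sample = Tuple[float, float, float]
Features = Tuple[float, float]

# Hypothetical centroids, standing in for values learned offline from
# labeled recordings: (mean magnitude, std of magnitude).
CENTROIDS: Dict[str, Features] = {
    "rest": (1.0, 0.02),   # gravity only, almost no variation
    "shake": (1.6, 0.60),  # large, rapidly changing acceleration
}


def magnitude(s: Sample) -> float:
    """Euclidean norm of one sample, independent of device orientation."""
    return math.sqrt(s[0] ** 2 + s[1] ** 2 + s[2] ** 2)


def sliding_windows(stream: List[Sample], size: int, step: int) -> Iterator[List[Sample]]:
    """Yield fixed-size, possibly overlapping windows over the sample stream."""
    for start in range(0, len(stream) - size + 1, step):
        yield stream[start:start + size]


def features(window: List[Sample]) -> Features:
    """Summarize a window as (mean magnitude, population std of magnitude)."""
    mags = [magnitude(s) for s in window]
    return (mean(mags), pstdev(mags))


def classify(feat: Features, centroids: Dict[str, Features]) -> str:
    """Nearest-centroid classifier: return the label with the closest centroid."""
    return min(centroids, key=lambda label: math.dist(feat, centroids[label]))


def recognize(stream: List[Sample], size: int = 4, step: int = 2) -> List[str]:
    """Label every window in the stream with a gesture name."""
    return [classify(features(w), CENTROIDS) for w in sliding_windows(stream, size, step)]


still = [(0.0, 0.0, 1.0)] * 4
shaking = [(0.0, 0.0, 1.0), (2.0, 0.0, 1.0)] * 2
print(recognize(still + shaking))  # ['rest', 'shake', 'shake']
```

Overlapping windows (a step smaller than the window size) let a gesture that starts mid-window still be caught by the next one, at the cost of classifying each sample more than once.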

Touchscreen Technology in Wearable Devices

Touchscreen technology in wearable devices enables intuitive interaction through direct contact. Capacitive touch sensors layered over compact OLED displays offer high sensitivity and vibrant output suited to small wearable form factors. Haptic feedback, such as a short vibration when a touch is registered, further improves the experience by simulating tactile sensations, which helps make touchscreens a common interface on smartwatches and fitness trackers.
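A touch sensor reports raw contact positions and timestamps; software then turns them into taps, swipes and long presses. A minimal Python sketch, assuming the controller delivers one touch-down and one touch-up event per contact. The `TouchEvent` type, gesture names and both thresholds are hypothetical and not taken from any particular wearable platform.

```python
import math
from dataclasses import dataclass


@dataclass
class TouchEvent:
    x: float     # horizontal position in pixels
    y: float     # vertical position in pixels (grows downward)
    t_ms: float  # timestamp in milliseconds


# Hypothetical thresholds; real platforms tune these per device and screen density.
SWIPE_MIN_DISTANCE_PX = 40.0
LONG_PRESS_MIN_MS = 500.0


def classify_touch(down: TouchEvent, up: TouchEvent) -> str:
    """Label one touch-down/touch-up pair as a tap, long press, or directional swipe."""
    dx, dy = up.x - down.x, up.y - down.y
    if math.hypot(dx, dy) >= SWIPE_MIN_DISTANCE_PX:
        # The finger moved far enough to count as a swipe; use the dominant axis.
        if abs(dx) >= abs(dy):
            return "swipe_right" if dx > 0 else "swipe_left"
        return "swipe_down" if dy > 0 else "swipe_up"
    if up.t_ms - down.t_ms >= LONG_PRESS_MIN_MS:
        return "long_press"
    return "tap"
```

For example, `classify_touch(TouchEvent(200, 100, 0), TouchEvent(120, 105, 150))` returns `"swipe_left"`. Checking distance before duration means a slow drag is still treated as a swipe rather than a long press.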

User Experience: Gesture vs Touchscreen

Gesture recognition offers a natural user experience by enabling hands-free control through everyday movements, which can improve accessibility and reduce physical contact with the device. Touchscreen interfaces provide precise, direct interaction, with immediate visual confirmation and, on devices that support it, haptic feedback. User preference often depends on context: gesture controls tend to be favored when the hands are busy or the user is on the move, while touchscreens are preferred for tasks that require accuracy and frequent input.

Accuracy and Responsiveness Comparison

Gesture recognition in wearable technology offers hands-free interaction but currently lags behind touchscreen interfaces in accuracy because of sensor noise, drift, and environmental interference. Touchscreen interfaces provide more precise input with fast response, benefiting from direct contact and mature technology. Advances in machine learning algorithms and sensor fusion, which combines readings from several sensors so their individual errors offset each other, are gradually making gesture recognition more reliable and narrowing the gap with touch input.
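One simple, widely used form of sensor fusion is the complementary filter. The minimal Python sketch below estimates a single tilt (pitch) angle: the integrated gyroscope reading is smooth but drifts when the gyro has a bias, while the angle derived from the accelerometer's gravity vector is noisy but does not drift. The axis convention, sample format and `alpha` value are assumptions for illustration; production wearables often use Kalman-style filters instead.

```python
import math
from typing import Iterable, Tuple

# (gyro pitch rate in deg/s, ax, ay, az in g); the axis convention is an assumption.
ImuSample = Tuple[float, float, float, float]


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch from the gravity vector: noisy under motion, but does not drift."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(samples: Iterable[ImuSample], dt: float, alpha: float = 0.98) -> float:
    """Fuse gyro and accelerometer data into one pitch estimate in degrees.

    alpha weights the integrated gyro angle (smooth, but drifts with bias);
    1 - alpha weights the accelerometer angle (noisy, but drift-free).
    """
    pitch = 0.0
    for rate_dps, ax, ay, az in samples:
        gyro_estimate = pitch + rate_dps * dt
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_pitch_deg(ax, ay, az)
    return pitch


# Device lying flat and still, but the gyro reports a 1 deg/s bias.
still_with_bias = [(1.0, 0.0, 0.0, 1.0)] * 1000  # 10 s at 100 Hz
print(round(complementary_filter(still_with_bias, dt=0.01), 2))  # 0.49
```

Integrating the biased gyro alone would report a 10° tilt after these ten seconds; the filter instead settles near alpha · bias · dt / (1 − alpha) = 0.98 · 1 · 0.01 / 0.02 = 0.49°, because the accelerometer term keeps pulling the estimate back toward the true angle.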

Accessibility and Inclusivity Considerations

Gesture recognition in wearable devices can improve accessibility by letting some users with limited dexterity or visual impairments interact through natural movements instead of locating small on-screen targets. Touchscreen interfaces can pose challenges for people with fine motor difficulties or those who use assistive devices, which limits inclusivity. Offering multimodal interaction, in which gesture and touchscreen input can both trigger the same actions, broadens usability and accommodates more diverse user needs.
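One way to structure multimodal interaction is to route both input paths into a single command layer, so every action stays reachable by touch or by gesture and each user can remap inputs they find hard to perform. The Python sketch below assumes that design; the `InputDispatcher` class, command names and event names are all hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical bindings from (modality, event name) to an app-level command.
DEFAULT_BINDINGS: Dict[Tuple[str, str], str] = {
    ("touch", "tap"): "select",
    ("gesture", "pinch"): "select",
    ("touch", "swipe_left"): "next",
    ("gesture", "wrist_flick"): "next",
}


class InputDispatcher:
    """Route touch and gesture events to one shared set of command handlers."""

    def __init__(self, bindings: Dict[Tuple[str, str], str]) -> None:
        self.bindings = dict(bindings)  # copy so per-user changes stay local
        self.handlers: Dict[str, Callable[[], str]] = {}

    def on(self, command: str, handler: Callable[[], str]) -> None:
        """Register the action that runs for a command, whatever triggered it."""
        self.handlers[command] = handler

    def rebind(self, modality: str, event: str, command: str) -> None:
        """Let a user map an input they can perform reliably to a command."""
        self.bindings[(modality, event)] = command

    def dispatch(self, modality: str, event: str) -> Optional[str]:
        """Run the bound handler; unknown inputs are ignored rather than guessed."""
        command = self.bindings.get((modality, event))
        handler = self.handlers.get(command) if command is not None else None
        return handler() if handler is not None else None


dispatcher = InputDispatcher(DEFAULT_BINDINGS)
dispatcher.on("select", lambda: "item selected")
dispatcher.on("next", lambda: "moved to next item")
print(dispatcher.dispatch("gesture", "pinch"))  # item selected
```

Because handlers are keyed by command rather than by input, adding a new gesture or remapping one for a particular user only touches the bindings table, not the application logic.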

Power Consumption and Battery Life Impact

The power cost of gesture recognition depends on how it is implemented. Continuous motion sensing and on-device classification add a steady load, but event-driven designs that keep the main processor asleep and wake it only when the motion sensor detects activity, combined with low-power processors and tuned sensor sensitivity, can keep that overhead small. Touchscreen interaction, by contrast, usually means turning on the display, which is typically one of the largest power consumers in a wearable, while the touch controller itself scans for contact in the background.
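The effect of event-driven activation can be estimated with a duty-cycle calculation: a component's average current is its active current weighted by the fraction of time it runs, plus its sleep current for the remaining time. A minimal Python sketch; the current and battery figures are made-up illustrations, not measurements from any real device.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current of a part that is active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_hours(capacity_mah: float, load_ma: float) -> float:
    """Idealized runtime; ignores temperature, aging, and regulator losses."""
    return capacity_mah / load_ma


# Hypothetical figures for a gesture-classification processor, not measurements.
ACTIVE_MA, SLEEP_MA, BATTERY_MAH = 5.0, 0.05, 300.0

always_on = average_current_ma(ACTIVE_MA, SLEEP_MA, duty_cycle=1.0)
wake_on_motion = average_current_ma(ACTIVE_MA, SLEEP_MA, duty_cycle=0.05)
print(f"always on: {always_on:.2f} mA, {battery_hours(BATTERY_MAH, always_on):.0f} h")
print(f"wake on motion: {wake_on_motion:.2f} mA, {battery_hours(BATTERY_MAH, wake_on_motion):.0f} h")
```

With these hypothetical values, running the classifier continuously averages 5 mA (60 hours on an assumed 300 mAh battery if nothing else drew power), while waking it 5% of the time averages about 0.30 mA (roughly 1,000 hours). The display, radio and other subsystems are left out, which is why real-world battery life is far shorter.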

Application Scenarios: Best Use Cases for Each Interface

Gesture recognition excels in hands-free environments such as operating rooms, manufacturing floors, and virtual reality setups, where users need to interact without touching a device. Touchscreen interfaces suit precise control on mobile devices, kiosks, and point-of-sale systems thanks to their responsiveness and familiarity. The better choice depends on context: gesture recognition supports mobility and hygiene, while touchscreens support detailed, accurate input.

Future Trends in Wearable Interaction Technologies

Gesture recognition in wearable technology is advancing rapidly as improved motion sensors are paired with machine learning algorithms, enabling more intuitive, hands-free interaction than traditional touchscreen input. Emerging trends include combining gestures with haptic feedback and adaptive gesture systems that personalize recognition based on user behavior and environmental context. These developments could improve accessibility, reduce reliance on screen-based input, and make communication between users and devices more natural.

Gesture Recognition vs Touchscreen Interface Infographic

techiny.com