Voice command enhances wearable technology by enabling hands-free control, allowing users to issue commands or check device status without touching the screen. Touch navigation offers intuitive, direct interaction through screens or sensors, providing precise control but requiring manual input. Both methods improve user experience: voice commands suit convenience, while touch navigation is better for detailed adjustments.

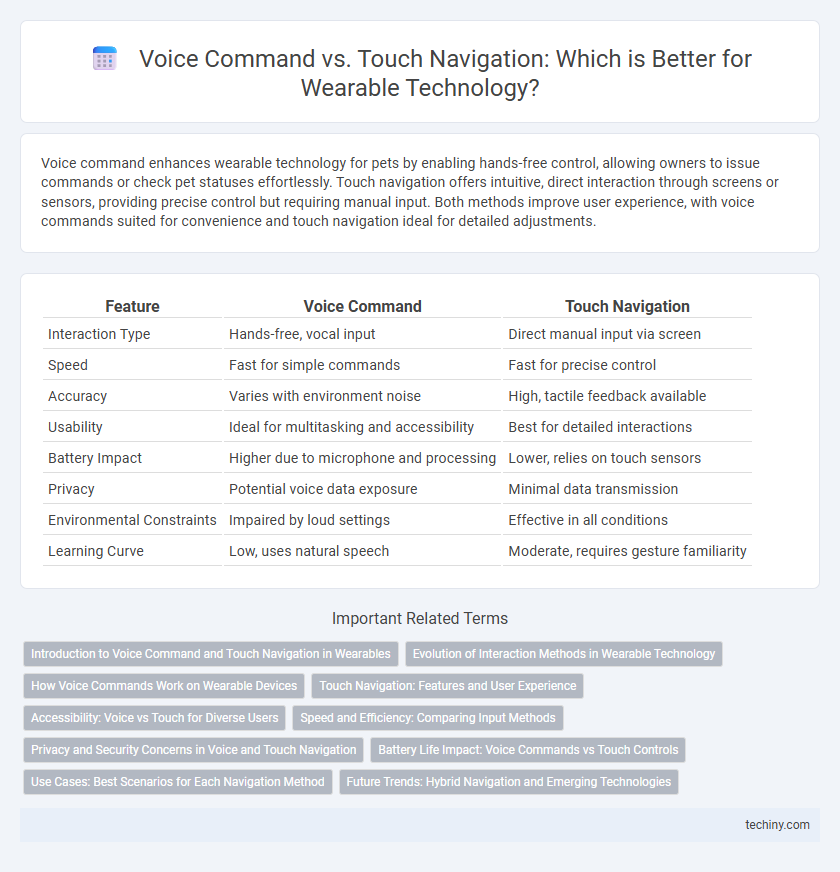

Table of Comparison

| Feature | Voice Command | Touch Navigation |
|---|---|---|
| Interaction Type | Hands-free, vocal input | Direct manual input via screen |
| Speed | Fast for simple commands | Fast for precise control |
| Accuracy | Varies with environment noise | High, tactile feedback available |
| Usability | Ideal for multitasking and accessibility | Best for detailed interactions |
| Battery Impact | Higher due to microphone and processing | Lower, relies on touch sensors |
| Privacy | Potential voice data exposure | Minimal data transmission |
| Environmental Constraints | Impaired by loud settings | Effective in all conditions |
| Learning Curve | Low, uses natural speech | Moderate, requires gesture familiarity |

Introduction to Voice Command and Touch Navigation in Wearables

Voice command and touch navigation serve as the primary input methods in wearable technology. Voice commands use speech recognition algorithms to interpret spoken instructions, enabling hands-free use and improving accessibility for tasks like messaging and media control. Touch navigation relies on capacitive sensors in wearable displays, allowing precise gesture-based control for app navigation with immediate on-screen feedback.

Evolution of Interaction Methods in Wearable Technology

Voice command and touch navigation represent pivotal advancements in the evolution of interaction methods in wearable technology, enhancing user convenience and accessibility. Voice recognition systems, powered by AI and natural language processing, facilitate hands-free control, allowing seamless multitasking and faster command execution. Touch navigation, evolving from basic taps to advanced gestures and haptic feedback, provides intuitive and precise control over wearable devices, adapting to diverse user needs and environments.

How Voice Commands Work on Wearable Devices

Voice commands on wearable devices operate through advanced speech recognition algorithms that convert spoken words into digital commands, enabling hands-free control and increased user convenience. These devices integrate microphones and natural language processing (NLP) systems to accurately interpret voice inputs in various environments. Continuous machine learning enhances the system's ability to understand accents and contextual speech, optimizing responsiveness and functionality across diverse use cases.
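The last step of that pipeline, turning recognized text into a device command, can be sketched roughly as follows. This is a minimal illustration that assumes a speech recognizer has already produced a transcript and a confidence score; the command table, intent names, and `min_confidence` threshold are hypothetical and do not come from any real wearable SDK.

```python
import re

# Hypothetical command table: phrase patterns mapped to intent names.
# These names are illustrative only, not part of any real wearable SDK.
COMMAND_PATTERNS = [
    (re.compile(r"\b(start|begin)\b.*\bworkout\b"), "START_WORKOUT"),
    (re.compile(r"\b(stop|end)\b.*\bworkout\b"), "STOP_WORKOUT"),
    (re.compile(r"\b(play|resume)\b.*\bmusic\b"), "PLAY_MEDIA"),
    (re.compile(r"\bpause\b"), "PAUSE_MEDIA"),
]


def normalize(transcript: str) -> str:
    """Lowercase the transcript and strip punctuation so matching is stable."""
    return re.sub(r"[^a-z0-9\s]", "", transcript.lower()).strip()


def parse_command(transcript: str, confidence: float, min_confidence: float = 0.6):
    """Return an intent name, or None if recognition is unsure or unmatched."""
    if confidence < min_confidence:
        # Low confidence (for example, a noisy environment): the caller can
        # ask the user to repeat the command or fall back to touch input.
        return None
    text = normalize(transcript)
    for pattern, intent in COMMAND_PATTERNS:
        if pattern.search(text):
            return intent
    return None


print(parse_command("Start my workout!", confidence=0.92))
print(parse_command("Play music", confidence=0.30))
```

Rejecting low-confidence transcripts rather than guessing is one simple way to keep noisy environments from triggering the wrong action.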

Touch Navigation: Features and User Experience

Touch navigation in wearable technology offers intuitive control through gestures like swiping, tapping, and pinching on compact screens, enhancing user accessibility. Features such as haptic feedback, customizable gesture controls, and multi-touch capabilities improve operational precision and responsiveness. This method supports seamless interaction in various environments, making it ideal for users seeking quick, tactile input without voice dependency.
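As a rough sketch of how single-finger gestures such as taps and swipes can be told apart, the example below classifies a gesture from the touch-down and touch-up samples alone. The `TouchPoint` type and the distance and duration thresholds are assumptions chosen for illustration; real devices tune these values and also handle multi-touch gestures like pinching.

```python
import math
from dataclasses import dataclass


@dataclass
class TouchPoint:
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels (grows downward on screen)
    t: float  # timestamp in seconds


def classify_gesture(down: TouchPoint, up: TouchPoint,
                     swipe_min_px: float = 30.0,
                     long_press_s: float = 0.5) -> str:
    """Classify a single-finger gesture from its first and last samples."""
    dx = up.x - down.x
    dy = up.y - down.y
    distance = math.hypot(dx, dy)
    duration = up.t - down.t

    # Little movement: a tap or a long press, depending on how long it lasted.
    if distance < swipe_min_px:
        return "long_press" if duration >= long_press_s else "tap"

    # Otherwise a swipe along whichever axis moved more.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"


print(classify_gesture(TouchPoint(200, 100, 0.0), TouchPoint(80, 110, 0.2)))
```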

Accessibility: Voice vs Touch for Diverse Users

Voice command offers enhanced accessibility for users with limited mobility or visual impairments by enabling hands-free interaction with wearable technology. Touch navigation relies heavily on precise gestures, which can be challenging for individuals with motor skill difficulties or sensory sensitivities. Integrating both voice and touch interfaces maximizes usability, ensuring diverse users can effectively control wearable devices according to their specific abilities.

Speed and Efficiency: Comparing Input Methods

Voice command can be faster for short, simple tasks because users act hands-free and without looking at the screen, which helps in dynamic environments. Touch navigation is precise and intuitive but requires deliberate manual input, which can slow rapid task execution. Voice input is sometimes reported to cut command input time by up to 50% compared with touch interfaces, although the gain depends on the task and on recognition accuracy.

Privacy and Security Concerns in Voice and Touch Navigation

Voice command interfaces in wearable technology pose privacy risks due to continuous listening and potential data interception during voice transmission, increasing vulnerability to unauthorized access. Touch navigation offers enhanced security by limiting interaction to physical contact, reducing the risk of remote eavesdropping and data breaches. However, touch interfaces may be compromised through smudge attacks or physical device theft, requiring robust encryption and authentication measures for comprehensive protection.

Battery Life Impact: Voice Commands vs Touch Controls

Battery impact depends on which components each method keeps active. Voice commands can avoid waking the display and touch sensors, but the microphone and speech processing draw power of their own, especially when the device listens continuously for a wake word. Touch navigation requires the screen to be lit during interaction, though the touch sensors themselves consume comparatively little. Optimizing voice recognition algorithms to reduce processing during command execution helps limit the battery cost of voice control.
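One rough way to compare the two methods is to estimate the energy of a single interaction as component power multiplied by duration. The sketch below does only that arithmetic; every power and duration figure in it is a placeholder chosen for illustration, not a measurement of any real device, so the outcome says nothing about which method wins in practice.

```python
def interaction_energy_mj(components_mw: dict, duration_s: float) -> float:
    """Energy in millijoules: total component power (mW) times duration (s)."""
    return sum(components_mw.values()) * duration_s


# Placeholder figures for illustration only; measure your own device.
touch_energy = interaction_energy_mj(
    {"display": 100.0, "touch_controller": 5.0}, duration_s=4.0
)
voice_energy = interaction_energy_mj(
    {"microphone": 2.0, "speech_processing": 150.0}, duration_s=2.0
)

print(f"touch: {touch_energy:.0f} mJ, voice: {voice_energy:.0f} mJ")
```

Changing either the assumed power draw or the assumed interaction time can flip the comparison, which is why measured values matter more than general rules here.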

Use Cases: Best Scenarios for Each Navigation Method

Voice command navigation is ideal for hands-free scenarios such as fitness workouts, driving, or situations requiring multitasking where touch interaction is impractical. Touch navigation excels in environments demanding precision, like adjusting settings on smartwatches or browsing detailed menus when voice recognition may struggle due to noise or privacy concerns. Combining both methods enhances usability, allowing users to switch based on context for optimal control in wearable technology.
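Switching between methods based on context could be sketched as a small rule set like the one below. The `Context` fields, the 70 dB noise limit, and the rule ordering are all assumptions made for illustration; an actual device would derive these signals from its own sensors and user settings.

```python
from dataclasses import dataclass


@dataclass
class Context:
    noise_db: float        # estimated ambient noise level
    hands_busy: bool       # e.g. during a workout or while driving
    in_public: bool        # privacy-sensitive setting
    needs_precision: bool  # e.g. fine-grained settings adjustment


def choose_input(ctx: Context, noise_limit_db: float = 70.0) -> str:
    """Pick "voice" or "touch" for the current context using simple rules."""
    voice_usable = ctx.noise_db < noise_limit_db

    # Hands-free scenarios favor voice whenever recognition is likely to work.
    if ctx.hands_busy and voice_usable:
        return "voice"

    # Precision tasks, privacy concerns, or heavy noise favor touch.
    if ctx.needs_precision or ctx.in_public or not voice_usable:
        return "touch"

    return "voice"


workout = Context(noise_db=50.0, hands_busy=True, in_public=False, needs_precision=False)
print(choose_input(workout))
```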

Future Trends: Hybrid Navigation and Emerging Technologies

Future trends in wearable technology emphasize hybrid navigation systems that combine voice command with touch navigation for enhanced user experience and accessibility. Emerging technologies like AI-powered voice recognition and haptic feedback are driving seamless integration, enabling intuitive control in varying environments. These advancements improve efficiency and offer personalized interaction by adapting to user preferences and contextual factors.

Voice Command vs Touch Navigation Infographic

techiny.com