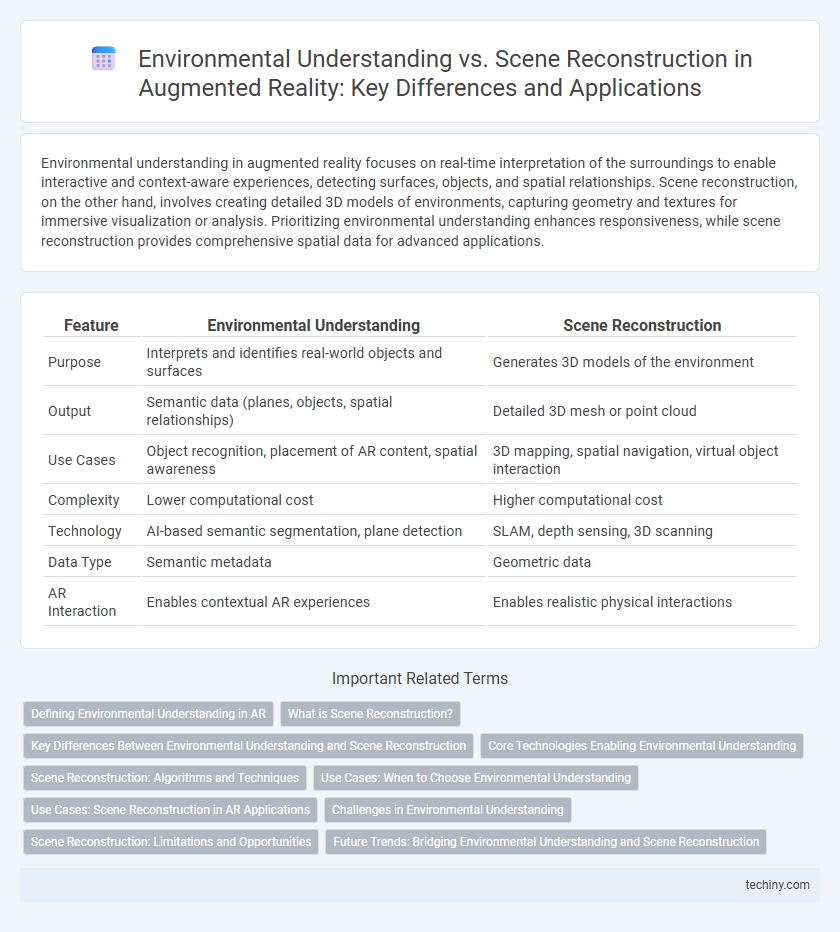

Environmental understanding in augmented reality (AR) is the real-time interpretation of the user's surroundings: detecting surfaces, objects, and spatial relationships so that interactive, context-aware experiences become possible. Scene reconstruction, by contrast, builds detailed 3D models of an environment, capturing geometry and textures for immersive visualization or analysis. Prioritizing environmental understanding favors responsiveness, while scene reconstruction provides comprehensive spatial data for advanced applications.

Table of Comparison

| Feature | Environmental Understanding | Scene Reconstruction |
|---|---|---|
| Purpose | Interprets and identifies real-world objects and surfaces | Generates 3D models of the environment |
| Output | Semantic data (planes, objects, spatial relationships) | Detailed 3D mesh or point cloud |
| Use Cases | Object recognition, placement of AR content, spatial awareness | 3D mapping, spatial navigation, virtual object interaction |
| Computational Cost | Lower | Higher |
| Technology | AI-based semantic segmentation, plane detection | SLAM, depth sensing, 3D scanning |
| Data Type | Semantic metadata | Geometric data |
| AR Interaction | Enables contextual AR experiences | Enables realistic physical interactions |

Defining Environmental Understanding in AR

Environmental understanding in augmented reality (AR) is the real-time recognition and interpretation of physical surroundings that enables context-aware digital overlays. Unlike scene reconstruction, which creates detailed 3D models of environments, environmental understanding focuses on identifying the key elements that interactive AR experiences depend on, such as surfaces, objects, and spatial relationships. It combines sensor data with computer vision algorithms so that virtual content can be placed precisely and can interact with the real world.
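Placing content on a detected surface is commonly done with a hit test: a ray is cast from the camera through the point the user taps and intersected with a detected plane. The sketch below shows only that geometric step in plain Python. The helper `ray_plane_hit` and the point-plus-normal plane representation are illustrative assumptions, not the API of ARKit, ARCore, or any other SDK, which provide their own raycasting calls.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_plane_hit(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3,
                  eps: float = 1e-9) -> Optional[Vec3]:
    """Return where a camera ray meets a detected plane, or None if it misses.

    Hypothetical helper: the plane is described by any point on it and its normal.
    """
    denom = dot(plane_normal, direction)
    if abs(denom) < eps:
        return None  # ray runs parallel to the plane
    diff = (plane_point[0] - origin[0],
            plane_point[1] - origin[1],
            plane_point[2] - origin[2])
    t = dot(plane_normal, diff) / denom
    if t < 0:
        return None  # the plane is behind the camera
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# Camera 1.5 m above a detected floor (y = 0), looking down and forward.
hit = ray_plane_hit(origin=(0.0, 1.5, 0.0), direction=(0.0, -1.0, -1.0),
                    plane_point=(0.0, 0.0, 0.0), plane_normal=(0.0, 1.0, 0.0))
print(hit)  # (0.0, 0.0, -1.5)
```

The returned point is where a virtual object would be anchored; a tap that misses every plane simply places nothing.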

What is Scene Reconstruction?

Scene reconstruction in augmented reality creates a detailed three-dimensional digital model of the physical environment, capturing spatial geometry and surface textures. It uses data from sensors such as LiDAR, depth cameras, or stereo cameras to map the environment's structure accurately. Unlike environmental understanding alone, scene reconstruction supports precise interaction and realistic placement of virtual objects because it provides a dense model of the surrounding geometry.
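Depth cameras and LiDAR typically deliver a per-pixel depth image, which is turned into 3D points with the pinhole camera model before any surface is built. A minimal sketch, assuming known intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels), depth in metres, and camera coordinates with y pointing down as in the usual computer-vision convention; `depth_to_points` is a hypothetical helper.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (metres) into camera-space 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth <= 0 are treated as missing and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):        # v: image row
        for u, z in enumerate(row):        # u: image column
            if z <= 0.0:
                continue  # no depth reading at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A toy 2 x 2 depth image with one missing reading.
depth = [[2.0, 2.0],
         [0.0, 4.0]]
cloud = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)  # [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (2.0, 2.0, 4.0)]
```

Point clouds from successive frames are then aligned (for example by SLAM) and fused into a mesh or volume.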

Key Differences Between Environmental Understanding and Scene Reconstruction

Environmental understanding in augmented reality interprets and recognizes real-world elements such as surfaces, objects, and spatial boundaries to enable context-aware interactions. Scene reconstruction focuses on building a detailed 3D model of the physical environment, capturing geometry, texture, and spatial relationships for immersive visualization or navigation. The key difference is one of emphasis: environmental understanding prioritizes semantic interpretation for interaction, while scene reconstruction prioritizes precise geometric mapping and detailed spatial representation.
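The difference in emphasis shows up in the data each approach produces. The hypothetical Python types below are a simplified illustration, not the data model of any particular SDK: environmental understanding yields a few labelled primitives, while reconstruction yields dense geometry with no labels attached.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class DetectedPlane:
    """Environmental understanding: a compact, labelled description of a surface."""
    label: str                    # semantic class, e.g. "floor" or "table"
    center: Vec3                  # centre of the plane in world space (metres)
    normal: Vec3                  # unit normal
    extent: Tuple[float, float]   # width and depth of the bounded region (metres)


@dataclass
class ReconstructedMesh:
    """Scene reconstruction: dense geometry with no built-in meaning."""
    vertices: List[Vec3] = field(default_factory=list)
    triangles: List[Tuple[int, int, int]] = field(default_factory=list)  # vertex indices


# The same tabletop as each approach might describe it.
table_plane = DetectedPlane("table", (0.0, 0.75, -1.0), (0.0, 1.0, 0.0), (1.2, 0.8))
table_mesh = ReconstructedMesh(
    vertices=[(-0.6, 0.75, -1.4), (0.6, 0.75, -1.4), (0.6, 0.75, -0.6), (-0.6, 0.75, -0.6)],
    triangles=[(0, 1, 2), (0, 2, 3)],
)
print(table_plane.label, len(table_mesh.triangles))  # table 2
```

A real reconstructed tabletop would usually contain far more triangles than the two shown here, while the plane description stays the same size however much detail is captured.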

Core Technologies Enabling Environmental Understanding

Environmental understanding in augmented reality relies on core technologies such as simultaneous localization and mapping (SLAM), depth sensing, and semantic segmentation to interpret and classify real-world environments accurately. These technologies let devices detect surfaces, recognize objects, and comprehend spatial relationships without requiring full scene reconstruction, which keeps AR interactions efficient and responsive. Depth sensors based on LiDAR or structured light supply 3D measurements, while machine learning models classify what the detected surfaces and objects are, making AR experiences more robust in dynamic scenes.
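Plane detection is often approached with RANSAC: repeatedly fit a plane to three randomly chosen points and keep the candidate that the most points agree with. This is a minimal sketch of that general technique, not a description of how any specific AR framework detects planes; `ransac_plane`, the 2 cm inlier threshold, and the iteration count are illustrative choices.

```python
import math
import random
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) for the plane n . p + d = 0


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ransac_plane(points: List[Vec3], threshold: float = 0.02,
                 iterations: int = 200, seed: int = 0
                 ) -> Tuple[Optional[Plane], List[int]]:
    """Find the plane supported by the most points (the dominant plane).

    Returns the plane and the indices of its inliers (points closer than
    `threshold` metres), or (None, []) if every sample was degenerate.
    """
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(points, 3)          # minimal sample for a plane
        n = cross(sub(p2, p1), sub(p3, p1))
        length = math.sqrt(dot(n, n))
        if length < 1e-9:
            continue                                 # collinear sample: no unique plane
        n = (n[0] / length, n[1] / length, n[2] / length)
        d = -dot(n, p1)
        inliers = [i for i, p in enumerate(points) if abs(dot(n, p) + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = (n, d), inliers
    return best_plane, best_inliers


# Toy scene: a 5 x 5 grid of floor points (y = 0) plus a few points on clutter.
floor = [(0.2 * i, 0.0, 0.2 * j) for i in range(5) for j in range(5)]
clutter = [(0.1, 0.5, 0.3), (0.3, 0.8, 0.1), (0.5, 0.3, 0.7), (0.7, 1.0, 0.5), (0.2, 0.6, 0.6)]
plane, inliers = ransac_plane(floor + clutter)
print(plane, len(inliers))
```

In the toy scene the floor should win with all 25 floor points as inliers; the normal's sign depends on which points were sampled, so downstream code should not assume it points up.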

Scene Reconstruction: Algorithms and Techniques

Scene reconstruction in augmented reality relies on simultaneous localization and mapping (SLAM), stereo vision, and depth sensing to create accurate 3D models of environments. Techniques such as point cloud generation, mesh reconstruction, and volumetric integration turn these measurements into the detailed spatial maps that realistic AR experiences require. Running these algorithms efficiently in real time is what allows virtual objects to interact seamlessly with their physical surroundings.
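Volumetric integration is often implemented with a truncated signed distance field (TSDF), a representation popularized for real-time use by KinectFusion: each voxel keeps a running average of its signed distance to the nearest observed surface, and the surface lies where that value changes sign. The sketch below makes strong simplifying assumptions, also stated in the code: a fixed camera at the origin looking down +z, unit observation weights, and distance measured along z. `TSDFVolume` is a hypothetical class, not a library API.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Index = Tuple[int, int, int]


class TSDFVolume:
    """Minimal projective TSDF volume (illustrative, not production code).

    Simplifying assumptions: the camera sits at the origin looking down +z
    (no camera pose), every observation has weight 1, and the signed distance
    is measured along z (observed depth minus voxel depth) instead of along
    the viewing ray.
    """

    def __init__(self, origin: Vec3, voxel_size: float, dims: Index, trunc: float):
        self.origin = origin          # corner of voxel (0, 0, 0), metres
        self.voxel_size = voxel_size  # edge length of one voxel, metres
        self.dims = dims              # number of voxels along x, y, z
        self.trunc = trunc            # truncation distance, metres
        self.tsdf: Dict[Index, float] = {}    # normalised distance in [-1, 1]
        self.weight: Dict[Index, float] = {}  # number of fused observations

    def center(self, idx: Index) -> Vec3:
        ox, oy, oz = self.origin
        s = self.voxel_size
        return (ox + (idx[0] + 0.5) * s, oy + (idx[1] + 0.5) * s, oz + (idx[2] + 0.5) * s)

    def integrate(self, depth: List[List[float]], fx: float, fy: float,
                  cx: float, cy: float) -> None:
        """Fuse one depth image (metres, row-major) into the volume."""
        height, width = len(depth), len(depth[0])
        nx, ny, nz = self.dims
        for idx in ((i, j, k) for i in range(nx) for j in range(ny) for k in range(nz)):
            x, y, z = self.center(idx)
            if z <= 0.0:
                continue  # voxel is behind the camera
            u, v = round(fx * x / z + cx), round(fy * y / z + cy)
            if not (0 <= u < width and 0 <= v < height):
                continue  # voxel projects outside the image
            d = depth[v][u]
            if d <= 0.0:
                continue  # no depth reading for this pixel
            sdf = d - z  # positive in front of the surface, negative behind it
            if sdf < -self.trunc:
                continue  # far behind the surface: occluded, leave it unobserved
            value = min(1.0, sdf / self.trunc)
            w = self.weight.get(idx, 0.0)
            self.tsdf[idx] = (self.tsdf.get(idx, 0.0) * w + value) / (w + 1.0)
            self.weight[idx] = w + 1.0


# A flat wall 1 m in front of a tiny 4 x 4 depth camera, fused twice.
wall = [[1.0] * 4 for _ in range(4)]
volume = TSDFVolume(origin=(-0.1, -0.1, 0.0), voxel_size=0.2, dims=(1, 1, 10), trunc=0.3)
for _ in range(2):
    volume.integrate(wall, fx=2.0, fy=2.0, cx=1.5, cy=1.5)

# The wall lies where the fused values change sign:
# voxel 4 (centre z = 0.9 m) is in front of it, voxel 5 (z = 1.1 m) behind it.
print(volume.tsdf[(0, 0, 4)] > 0, volume.tsdf[(0, 0, 5)] < 0)  # True True
```

Averaging over many frames is what smooths out sensor noise; a mesh is then typically extracted from the zero crossing, for example with marching cubes.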

Use Cases: When to Choose Environmental Understanding

Environmental understanding in augmented reality excels in use cases that require real-time object recognition and interaction within dynamic spaces, such as navigation assistance and gaming. It lets AR applications interpret semantic information about their surroundings, such as identifying walls, floors, and furniture, without building detailed 3D models, which makes it well suited to lightweight devices and to scenarios that demand quick adaptation. This approach is the better choice when rapid context awareness and user interaction matter more than precise spatial reconstruction.
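Telling walls, floors, and furniture apart can start from geometry alone, because a plane's orientation and height already separate most of these classes. The heuristic below is a hypothetical sketch with illustrative thresholds, assuming a y-up world frame, unit normals, and a known floor height; production systems typically combine such cues with learned classifiers.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def classify_plane(normal: Vec3, height: float, floor_height: float = 0.0,
                   angle_tol_deg: float = 10.0) -> str:
    """Assign a coarse semantic label to a detected plane.

    Assumes a y-up world frame, a unit normal, and heights in metres.
    The thresholds are illustrative, not taken from any AR SDK.
    """
    # Angle between the plane normal and the world up axis (0, 1, 0).
    up_angle = math.degrees(math.acos(max(-1.0, min(1.0, normal[1]))))
    if up_angle <= angle_tol_deg:
        # Upward-facing horizontal surface: floor if at floor level, else furniture.
        return "floor" if abs(height - floor_height) < 0.1 else "table"
    if up_angle >= 180.0 - angle_tol_deg:
        return "ceiling"  # downward-facing horizontal surface
    if abs(up_angle - 90.0) <= angle_tol_deg:
        return "wall"     # roughly vertical surface
    return "unknown"      # sloped surfaces are left unlabelled


print(classify_plane((0.0, 1.0, 0.0), height=0.74))  # table
```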

Use Cases: Scene Reconstruction in AR Applications

Scene reconstruction in augmented reality (AR) produces detailed 3D models of an environment, supporting applications such as interior design, remote collaboration, and cultural heritage preservation. By capturing spatial data and accurately mapping surfaces, these applications let users interact with real-world spaces in an immersive way, improving spatial awareness and decision-making. Reconstruction also improves navigation in complex environments, allows virtual objects to be placed with high precision, and can be updated in real time as new sensor data arrives.
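An interior-design tool, for instance, might estimate floor or wall area directly from a reconstructed triangle mesh. A minimal sketch, assuming the mesh is given as a vertex list in metres plus index triples; `mesh_area` is a hypothetical helper.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def mesh_area(vertices: List[Vec3], triangles: List[Tuple[int, int, int]]) -> float:
    """Total surface area of a triangle mesh (square metres if vertices are in metres)."""
    total = 0.0
    for a, b, c in triangles:
        (ax, ay, az), (bx, by, bz), (cx, cy, cz) = vertices[a], vertices[b], vertices[c]
        # Each triangle's area is half the length of the cross product of two edges.
        ux, uy, uz = bx - ax, by - ay, bz - az
        vx, vy, vz = cx - ax, cy - ay, cz - az
        nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
        total += 0.5 * math.sqrt(nx * nx + ny * ny + nz * nz)
    return total


# A 4 m x 3 m floor patch split into two triangles.
floor_vertices = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (4.0, 0.0, 3.0), (0.0, 0.0, 3.0)]
floor_triangles = [(0, 1, 2), (0, 2, 3)]
print(mesh_area(floor_vertices, floor_triangles))  # 12.0
```

On a real scan, the triangles would first be filtered to the surface of interest (for example, those with upward-facing normals near floor level).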

Challenges in Environmental Understanding

Environmental understanding in augmented reality faces challenges such as accurately detecting and classifying diverse real-world surfaces and objects in dynamic, cluttered environments. Limited sensor resolution and computational constraints hinder real-time processing, making it difficult to maintain spatial consistency and depth accuracy. Variations in lighting and occlusions further complicate robust semantic mapping and interaction within complex scenes.
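Depth accuracy for stereo-based sensors degrades quickly with distance. In the standard stereo model, depth is z = f·b/d for focal length f (pixels), baseline b (metres), and disparity d (pixels), so a disparity error σ_d produces a depth error of roughly σ_z ≈ z²·σ_d/(f·b). The sensor values below are illustrative assumptions, not the specifications of any real device, and LiDAR or time-of-flight sensors follow different error models.

```python
def stereo_depth_error(z: float, focal_px: float, baseline_m: float,
                       disparity_err_px: float) -> float:
    """Approximate depth uncertainty (m) of a stereo sensor at depth z (m).

    From z = f * b / d, a disparity error of sigma_d pixels gives
    sigma_z ~= z**2 * sigma_d / (f * b).
    """
    return z * z * disparity_err_px / (focal_px * baseline_m)


# Hypothetical sensor: 500 px focal length, 5 cm baseline, 0.25 px matching error.
for z in (1.0, 2.0, 4.0):
    print(z, round(stereo_depth_error(z, 500.0, 0.05, 0.25), 3))
# prints: 1.0 0.01, then 2.0 0.04, then 4.0 0.16
```

Doubling the distance quadruples the expected error, which is why surfaces a few metres away are much harder to detect and place content on reliably.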

Scene Reconstruction: Limitations and Opportunities

Scene reconstruction in augmented reality faces challenges such as computational complexity, limited real-time processing capabilities, and difficulties in accurately capturing dynamic environments. Advances in machine learning and sensor fusion offer opportunities to enhance reconstruction accuracy, enable richer environmental interactions, and improve user immersion. Addressing these limitations can lead to more robust AR applications in fields like gaming, navigation, and industrial design.
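The computational cost is easy to underestimate: a dense voxel grid grows with the cube of its resolution. The back-of-the-envelope estimate below assumes 8 bytes per voxel (a 4-byte float distance and a 4-byte float weight, a layout typical of TSDF-style volumetric fusion); the room size and voxel sizes are illustrative, and `dense_grid_bytes` is a hypothetical helper.

```python
import math
from typing import Tuple


def dense_grid_bytes(room_m: Tuple[float, float, float], voxel_m: float,
                     bytes_per_voxel: int = 8) -> int:
    """Memory needed by a dense voxel grid covering a box-shaped room.

    The default of 8 bytes per voxel assumes a float32 distance plus a
    float32 weight; adjust it for other layouts.
    """
    # round() strips float noise such as 299.99999999999994 before ceil().
    counts = [math.ceil(round(side / voxel_m, 6)) for side in room_m]
    return counts[0] * counts[1] * counts[2] * bytes_per_voxel


room = (5.0, 5.0, 3.0)  # a 5 m x 5 m room with a 3 m ceiling
for voxel in (0.04, 0.02, 0.01):
    print(f"{voxel * 100:.0f} cm voxels: {dense_grid_bytes(room, voxel) / 1e6:.0f} MB")
# 4 cm voxels: 9 MB
# 2 cm voxels: 75 MB
# 1 cm voxels: 600 MB
```

Halving the voxel size multiplies memory by eight, which is one reason practical systems often allocate voxels only near observed surfaces instead of filling the whole room.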

Future Trends: Bridging Environmental Understanding and Scene Reconstruction

Future trends in augmented reality point toward bridging environmental understanding and scene reconstruction to create more immersive and interactive experiences. Advances in machine learning models and sensor fusion techniques are making real-time semantic mapping and accurate 3D reconstruction of complex environments increasingly practical. Integrating AI-driven context awareness with high-fidelity spatial models is expected to drive the next generation of AR applications in industries such as gaming, healthcare, and urban planning.
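One common way to combine the two is to run a semantic segmentation model on each frame, project the per-pixel labels onto voxels of the reconstruction, and fuse the labels over time. The sketch below shows only the fusion step, using simple majority voting; many published systems fuse class probabilities instead. `SemanticVoxelMap` and the voxel indices are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Index = Tuple[int, int, int]


class SemanticVoxelMap:
    """Accumulates per-frame semantic labels on voxels of a reconstruction and
    reports the most frequent label for each voxel (majority voting)."""

    def __init__(self) -> None:
        self.votes: Dict[Index, Counter] = defaultdict(Counter)

    def add_observations(self, labelled_voxels: Iterable[Tuple[Index, str]]) -> None:
        """Record one frame's labels, given as (voxel index, label) pairs."""
        for idx, label in labelled_voxels:
            self.votes[idx][label] += 1

    def label(self, idx: Index) -> str:
        counts = self.votes.get(idx)  # .get does not create an entry
        if not counts:
            return "unknown"          # voxel never observed by the segmenter
        return counts.most_common(1)[0][0]


semantic_map = SemanticVoxelMap()
semantic_map.add_observations([((2, 0, 5), "table"), ((3, 0, 5), "table")])  # frame 1
semantic_map.add_observations([((2, 0, 5), "chair"), ((3, 0, 5), "table")])  # frame 2 (noisy)
semantic_map.add_observations([((2, 0, 5), "table")])                        # frame 3
print(semantic_map.label((2, 0, 5)), semantic_map.label((3, 0, 5)),
      semantic_map.label((9, 9, 9)))  # table table unknown
```

Voting over many frames lets a single misclassification (the "chair" in frame 2) be outvoted, so the geometry ends up carrying stable semantic labels.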

Environmental Understanding vs Scene Reconstruction Infographic

techiny.com
