Visual Inertial Odometry (VIO) fuses camera data with inertial measurements to estimate device motion, offering real-time tracking with low latency. SLAM (Simultaneous Localization and Mapping) tracks movement and also builds a map of the environment, which lets it correct accumulated drift and localize more robustly in large or unknown spaces. While VIO suits fast, lightweight applications, SLAM provides the spatial awareness that complex augmented reality experiences depend on.

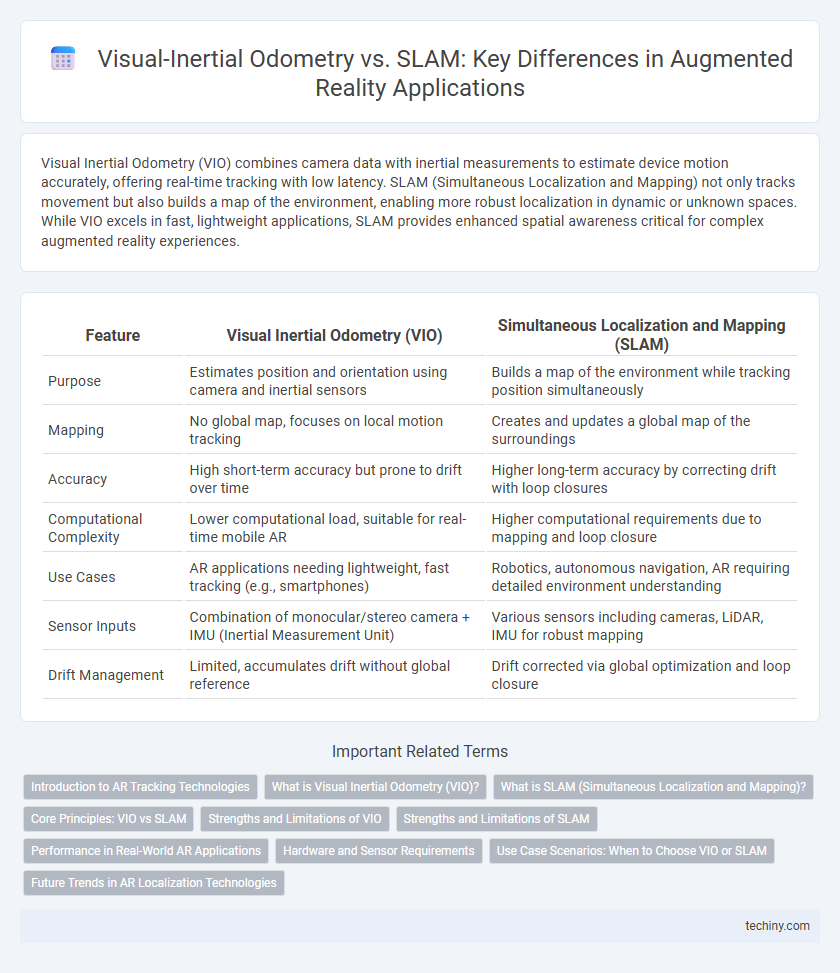

Table of Comparison

| Feature | Visual Inertial Odometry (VIO) | Simultaneous Localization and Mapping (SLAM) |
|---|---|---|
| Purpose | Estimates position and orientation using camera and inertial sensors | Builds a map of the environment while tracking position simultaneously |
| Mapping | No global map, focuses on local motion tracking | Creates and updates a global map of the surroundings |
| Accuracy | High short-term accuracy but prone to drift over time | Higher long-term accuracy by correcting drift with loop closures |
| Computational Complexity | Lower computational load, suitable for real-time mobile AR | Higher computational requirements due to mapping and loop closure |
| Use Cases | AR applications needing lightweight, fast tracking (e.g., smartphones) | Robotics, autonomous navigation, AR requiring detailed environment understanding |
| Sensor Inputs | Combination of monocular/stereo camera + IMU (Inertial Measurement Unit) | Various sensors including cameras, LiDAR, IMU for robust mapping |
| Drift Management | Limited, accumulates drift without global reference | Drift corrected via global optimization and loop closure |

Introduction to AR Tracking Technologies

Visual Inertial Odometry (VIO) combines camera data with inertial measurements to estimate a device's position and orientation from frame to frame, which makes it a common basis for real-time AR tracking. Simultaneous Localization and Mapping (SLAM) builds a map of an unknown environment while tracking the user's location within it, enabling robust AR experiences in complex spaces. Both technologies give AR the spatial awareness it needs, but VIO excels in short-term motion tracking, whereas SLAM provides long-term environmental understanding.

What is Visual Inertial Odometry (VIO)?

Visual Inertial Odometry (VIO) is a technique that combines visual information from cameras with inertial data from IMUs (Inertial Measurement Units) to estimate the position and orientation of a device in real time. VIO tracks motion by fusing image features with the linear acceleration and angular velocity measured by the accelerometer and gyroscope, which makes it useful for AR applications where GPS signals are unreliable or unavailable, such as indoors. Unlike traditional SLAM, VIO primarily focuses on continuous pose estimation rather than building a detailed environmental map.
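The predict-and-correct idea behind this fusion can be sketched in one dimension. This is a minimal illustration, not how any particular AR framework implements VIO: the names `State1D`, `imu_predict`, `visual_correct`, and `run_vio` are hypothetical, and the fixed `gain` stands in for the weighting a real filter would derive from sensor noise models.

```python
from dataclasses import dataclass


@dataclass
class State1D:
    position: float = 0.0  # metres
    velocity: float = 0.0  # metres per second


def imu_predict(state: State1D, accel: float, dt: float) -> State1D:
    """Propagate the state with one accelerometer sample held constant over dt."""
    position = state.position + state.velocity * dt + 0.5 * accel * dt * dt
    velocity = state.velocity + accel * dt
    return State1D(position, velocity)


def visual_correct(state: State1D, visual_position: float, gain: float = 0.5) -> State1D:
    """Pull the predicted position toward a camera-derived position.

    gain is an assumed fixed blend factor in [0, 1]; it is not taken from
    the article or from any library.
    """
    corrected = state.position + gain * (visual_position - state.position)
    return State1D(corrected, state.velocity)


def run_vio(accels, dt, visual_fixes, gain=0.5):
    """accels: IMU samples; visual_fixes: {sample_index: position} from the camera."""
    state = State1D()
    for i, accel in enumerate(accels):
        state = imu_predict(state, accel, dt)  # high-rate inertial step
        if i in visual_fixes:  # lower-rate visual step
            state = visual_correct(state, visual_fixes[i], gain)
    return state
```

The IMU step runs on every sample, while the visual step only runs when a camera-derived position is available, which mirrors the different update rates of the two sensors.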

What is SLAM (Simultaneous Localization and Mapping)?

SLAM (Simultaneous Localization and Mapping) is a computational technique used in augmented reality and robotics to build a map of an unknown environment while concurrently tracking the device's position within it. It can integrate sensor data from cameras, LiDAR, and inertial measurement units to create 3D maps and enable real-time localization. SLAM enhances AR experiences by providing robust spatial awareness and navigation in large or previously uncharted settings.
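A toy one-dimensional version shows how mapping and localization feed each other. It is a deliberately simplified sketch: the function name `toy_slam_1d`, the input layout, and the plain averaging step are assumptions made for illustration, whereas real SLAM systems use probabilistic filters or graph optimization.

```python
def toy_slam_1d(odometry, observations):
    """Track a device along a line while building a landmark map.

    odometry[k]: measured displacement for step k.
    observations[k]: {landmark_id: measured offset (landmark - device)} at step k.
    Returns (poses, landmark_map).
    """
    pose = 0.0
    landmark_map = {}
    poses = []
    for step, seen in zip(odometry, observations):
        pose += step  # prediction from odometry alone

        # Localization: every landmark already in the map implies a device pose.
        implied = [landmark_map[lid] - offset
                   for lid, offset in seen.items() if lid in landmark_map]
        if implied:
            # Assumed equal trust in the prediction and in each landmark.
            pose = (pose + sum(implied)) / (1 + len(implied))

        # Mapping: place landmarks seen for the first time.
        for lid, offset in seen.items():
            if lid not in landmark_map:
                landmark_map[lid] = pose + offset

        poses.append(pose)
    return poses, landmark_map
```

For example, with `odometry = [1.0, 1.2, 1.0]` and a single landmark observed at offsets 4.0, 3.0, and 2.0, the landmark is mapped on the first step and then pulls the later pose estimates back toward the true positions, despite the over-reported second step.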

Core Principles: VIO vs SLAM

Visual Inertial Odometry (VIO) combines camera images with inertial sensor data to estimate device position and orientation in real time, emphasizing accurate motion tracking through sensor fusion. Simultaneous Localization and Mapping (SLAM) builds a map of the environment while localizing the device within it, creating a detailed spatial understanding by continuously updating the map as new data arrives. VIO excels in fast-motion scenarios because the IMU is sampled much more often than the camera and bridges the gaps between frames, whereas SLAM provides robust environmental context essential for complex AR applications.

Strengths and Limitations of VIO

Visual Inertial Odometry (VIO) excels at real-time pose estimation by fusing camera data with inertial measurement units (IMUs), making it highly effective during rapid motion. However, VIO suffers from long-term drift and lacks the environmental mapping capabilities of Simultaneous Localization and Mapping (SLAM). Despite its strengths in lightweight, low-latency tracking, VIO loses accuracy in low-texture or visually repetitive areas, where few reliable features can be tracked, and it cannot correct accumulated error the way SLAM does through loop closure and map optimization.
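To see why drift grows over time, consider the inertial side on its own. The sketch below integrates a small constant accelerometer bias twice, which is the standard dead-reckoning calculation; the function name `drift_from_bias` and the bias value used in the note are illustrative assumptions, not measurements from any device. In a full VIO system the camera corrects most of this error, but whatever remains still accumulates without a global reference.

```python
def drift_from_bias(bias: float, dt: float, steps: int) -> float:
    """Position error (metres) caused by an uncorrected accelerometer bias.

    bias: constant acceleration error in m/s^2 (assumed value, for illustration).
    dt: IMU sample period in seconds.
    steps: number of IMU samples integrated.
    """
    velocity_error = 0.0
    position_error = 0.0
    for _ in range(steps):
        # Same constant-acceleration update a dead-reckoning integrator would use.
        position_error += velocity_error * dt + 0.5 * bias * dt * dt
        velocity_error += bias * dt
    return position_error
```

With an assumed bias of 0.05 m/s² sampled at 100 Hz, the error is about 2.5 m after 10 s and about 90 m after 60 s, matching the closed form 0.5 · bias · t². The quadratic growth is why inertial data needs regular visual correction.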

Strengths and Limitations of SLAM

Simultaneous Localization and Mapping (SLAM) excels in creating comprehensive environmental maps while tracking device position in real time, enabling robust navigation and interaction in complex Augmented Reality (AR) scenarios. SLAM's strength lies in handling large-scale and changing environments through continuous map updates, but it often requires significant computational resources and can struggle with rapid motion or textureless spaces. Despite these limitations, SLAM remains essential for precise spatial understanding and persistent AR experiences beyond the capabilities of Visual Inertial Odometry (VIO), which primarily focuses on motion estimation without mapping the surroundings.
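The drift correction via loop closure listed in the comparison table can be illustrated with a one-dimensional trajectory. In this sketch the device is assumed to recognize that it has returned to a known place, and the mismatch between the summed odometry and that known displacement is spread evenly over the steps. That is the least-squares answer when every step is trusted equally and the loop constraint is treated as exact. The names `integrate` and `close_loop` are hypothetical, and production systems solve a much larger pose-graph optimization.

```python
def integrate(steps, start=0.0):
    """Chain relative displacements into absolute poses."""
    poses = [start]
    for step in steps:
        poses.append(poses[-1] + step)
    return poses


def close_loop(odometry, loop_displacement=0.0):
    """Adjust odometry so it sums to the displacement reported by a loop closure.

    loop_displacement is 0.0 when the device recognizes its starting point.
    The residual is divided equally among all steps (assumed equal weights).
    """
    if not odometry:
        return []
    residual = sum(odometry) - loop_displacement
    correction = residual / len(odometry)
    return [step - correction for step in odometry]
```

For instance, `odometry = [1.0, 1.0, -1.0, -0.8]` ends 0.2 m away from the start even though the device actually returned there. After `close_loop`, each step is shifted by 0.05 m and the integrated trajectory ends back at the origin.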

Performance in Real-World AR Applications

Visual Inertial Odometry (VIO) offers robust and efficient pose estimation by fusing camera data with inertial measurements, enabling smooth tracking in real-world AR scenarios with limited computational resources. Simultaneous Localization and Mapping (SLAM) excels in creating detailed environment maps while localizing the device, providing enhanced spatial awareness but often requiring higher processing power and more complex algorithms. In practical AR applications, VIO is preferred for fast and stable motion tracking, whereas SLAM delivers superior environmental context crucial for advanced interactions and persistent AR experiences.

Hardware and Sensor Requirements

Visual Inertial Odometry (VIO) requires a combination of cameras and inertial measurement units (IMUs) to estimate motion by fusing visual data with accelerometer and gyroscope readings, enabling real-time pose tracking with relatively low computational overhead. SLAM systems often use more complex hardware setups that integrate multiple sensors such as LiDAR, depth cameras, and IMUs to construct and update detailed maps while localizing the device, which increases processing demands and sensor calibration complexity. Both approaches depend heavily on sensor accuracy and synchronization, but SLAM's sensor suite typically involves higher costs and more demanding hardware requirements for robust environmental mapping and localization.
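Synchronization matters because camera frames and IMU samples rarely share timestamps. One common way to line them up is to interpolate the IMU stream at each frame time. The sketch below does this with linear interpolation; the function name `interpolate_imu` and the sample values in the note are illustrative assumptions, and it presumes both sensors already report time on the same clock.

```python
from bisect import bisect_left


def interpolate_imu(imu_times, imu_values, query_time):
    """Linearly interpolate a scalar IMU reading at a camera frame timestamp.

    imu_times: sorted sample timestamps in seconds.
    imu_values: one reading per timestamp (e.g. angular rate about one axis).
    query_time: camera frame timestamp on the same clock.
    """
    if not imu_times or not (imu_times[0] <= query_time <= imu_times[-1]):
        raise ValueError("query_time is outside the IMU sample range")

    i = bisect_left(imu_times, query_time)  # first sample at or after the query
    if imu_times[i] == query_time:
        return imu_values[i]

    t0, t1 = imu_times[i - 1], imu_times[i]
    weight = (query_time - t0) / (t1 - t0)
    return imu_values[i - 1] + weight * (imu_values[i] - imu_values[i - 1])
```

With samples at 0.00 s, 0.01 s, and 0.02 s reading 0.0, 1.0, and 3.0, a frame stamped 0.015 s falls halfway between the last two samples and gets an interpolated value of about 2.0.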

Use Case Scenarios: When to Choose VIO or SLAM

Visual Inertial Odometry (VIO) excels in lightweight mobile applications requiring fast, real-time tracking with limited computational resources, such as augmented reality glasses or smartphones navigating indoor environments without pre-mapped landmarks. SLAM (Simultaneous Localization and Mapping) is preferable for complex or large-scale AR scenarios involving dynamic mapping and long-term environment understanding, including robotic navigation and AR in unfamiliar or expansive spaces. Selecting VIO or SLAM depends on factors like environmental complexity, processing power availability, and the necessity for map-building versus pure localization.
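The selection factors above can be written down as a small rule of thumb. This is only a sketch of the reasoning in this section: the function `choose_tracking` and its three boolean inputs are hypothetical simplifications, and a real project would weigh these factors against measured performance on the target device.

```python
def choose_tracking(needs_persistent_map: bool,
                    large_or_complex_space: bool,
                    compute_constrained: bool) -> str:
    """Suggest a tracking approach from the three factors named in the text."""
    if needs_persistent_map or large_or_complex_space:
        if compute_constrained:
            # Mapping is needed but the budget is tight: flag the trade-off.
            return "SLAM (verify compute budget)"
        return "SLAM"
    # Pure localization with no map requirement: the lighter option suffices.
    return "VIO"
```

A phone app that places a short-lived object on a tabletop would map to `"VIO"`, while a robot navigating a warehouse it has to remember would map to `"SLAM"`.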

Future Trends in AR Localization Technologies

Visual Inertial Odometry (VIO) and Simultaneous Localization and Mapping (SLAM) are evolving rapidly, leveraging advancements in machine learning and sensor fusion to enhance AR localization accuracy and efficiency. Future trends indicate a shift towards tightly coupled VIO-SLAM hybrid systems that balance real-time performance with robust environmental mapping, enabling seamless AR experiences in complex and dynamic settings. The integration of 5G networks and edge computing is expected to further reduce latency and improve scalability, making localization technologies more adaptive and precise across various AR applications.

Visual Inertial Odometry vs SLAM (Simultaneous Localization and Mapping) Infographic

techiny.com
