Occlusion handling in augmented reality (AR) ensures that virtual objects are hidden when real-world objects stand in front of them, which makes the experience look physically plausible. Depth sensing captures how far surfaces are from the device, so virtual content can be positioned and scaled correctly within the environment. Combined, the two let digital content interact convincingly with the physical world, improving user immersion and spatial awareness.

Comparison Table

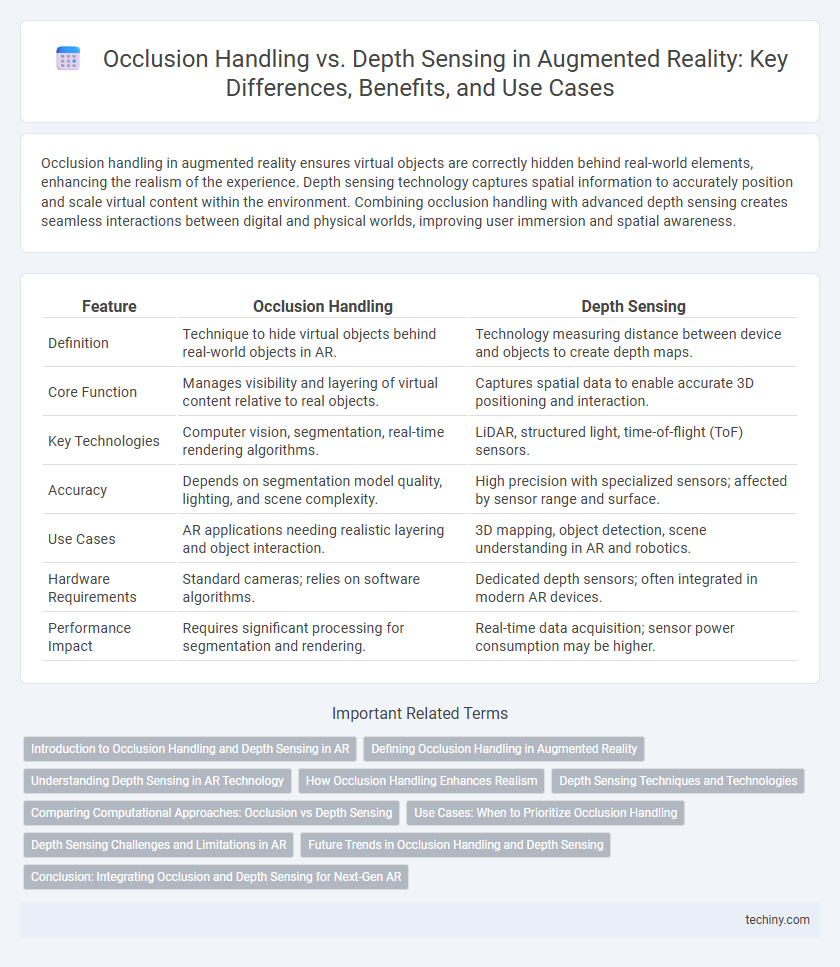

| Feature | Occlusion Handling | Depth Sensing |
|---|---|---|
| Definition | Technique to hide virtual objects behind real-world objects in AR. | Technology measuring distance between device and objects to create depth maps. |
| Core Function | Manages visibility and layering of virtual content relative to real objects. | Captures spatial data to enable accurate 3D positioning and interaction. |
| Key Technologies | Computer vision, segmentation, real-time rendering algorithms. | LiDAR, structured light, time-of-flight (ToF) sensors. |
| Accuracy | Depends on segmentation model quality, lighting, and scene complexity. | High precision with specialized sensors; affected by sensor range and surface. |
| Use Cases | AR applications needing realistic layering and object interaction. | 3D mapping, object detection, scene understanding in AR and robotics. |
| Hardware Requirements | Standard cameras; relies on software algorithms. | Dedicated depth sensors; often integrated in modern AR devices. |
| Performance Impact | Requires significant processing for segmentation and rendering. | Real-time data acquisition; sensor power consumption may be higher. |

Introduction to Occlusion Handling and Depth Sensing in AR

Occlusion handling in augmented reality ensures that virtual objects are correctly layered behind or in front of real-world elements. As a result, the rendered scene respects the same spatial relationships the user sees. Depth sensing measures the distance to surrounding surfaces using LiDAR, time-of-flight, or structured-light sensors, which enables precise placement of digital content and interaction with it. Combining the two produces more immersive experiences: depth data tells the renderer where real surfaces are, and occlusion handling uses that data to decide which virtual pixels should be hidden.

Defining Occlusion Handling in Augmented Reality

Occlusion handling in augmented reality is the technique of hiding the parts of a virtual object that lie behind real-world objects, so that the scene conveys correct depth. It relies on knowing the spatial relationship between the physical environment and the digital content. Typically this takes the form of a per-pixel comparison of distances, in which a virtual pixel is masked wherever a real surface is closer to the camera. Effective occlusion handling enhances immersion by preventing virtual objects from unrealistically appearing in front of real objects that should cover them.
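A minimal sketch of the per-pixel test described above follows. It assumes that the rendered virtual content and the real scene are both available as depth maps in metres, aligned pixel-for-pixel, with `None` marking "no virtual surface" or "no real reading". The function name `occlusion_mask` is hypothetical. The choice to show virtual content where no real depth is known is also an assumption, not a standard rule.

```python
from typing import List, Optional

# Hypothetical representation: row-major depth maps in metres, None = no value.
DepthMap = List[List[Optional[float]]]


def occlusion_mask(virtual_depth: DepthMap, real_depth: DepthMap) -> List[List[bool]]:
    """Return True where the virtual pixel should be drawn, False where it is hidden."""
    mask: List[List[bool]] = []
    for v_row, r_row in zip(virtual_depth, real_depth):
        row: List[bool] = []
        for v, r in zip(v_row, r_row):
            if v is None:
                row.append(False)  # no virtual content at this pixel
            elif r is None:
                row.append(True)  # assumption: unknown real depth never occludes
            else:
                row.append(v < r)  # visible only if nearer than the real surface
        mask.append(row)
    return mask


virtual = [[1.0, 1.0], [None, 2.5]]
real = [[0.8, 1.5], [1.0, None]]
print(occlusion_mask(virtual, real))  # [[False, True], [False, True]]
```

This is the same comparison a GPU depth test performs once real-world depth has been written into the depth buffer. A production renderer would do it in a shader rather than in Python loops.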

Understanding Depth Sensing in AR Technology

Depth sensing in AR enables devices to measure the distance between the camera and real-world objects using sensors such as LiDAR scanners, time-of-flight cameras, or structured-light projectors. With these measurements, virtual objects can be placed precisely within a physical environment and scaled correctly for their distance, so that their size and interactions match the surrounding space. Effective depth sensing also improves occlusion handling by supplying the real-world distances needed to hide virtual elements behind real objects, creating a seamless augmented experience.
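To place content using depth, a pixel and its measured depth can be lifted into a 3D point with the standard pinhole camera model. The same model predicts how large an object should appear at a given distance. The sketch assumes known camera intrinsics (`fx`, `fy`, `cx`, `cy`, with hypothetical values below). It also assumes that depth is measured along the camera's optical axis (z-depth) rather than along the viewing ray. The helper names are illustrative.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (assumed known from calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def unproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Map pixel (u, v) with z-depth in metres to a camera-space point (X, Y, Z)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def projected_size_px(size_m: float, depth_m: float, focal_px: float) -> float:
    """On-screen size in pixels of an object of real size size_m at depth_m."""
    return focal_px * size_m / depth_m


k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(unproject(420.0, 240.0, 2.0, k))     # (0.4, 0.0, 2.0)
print(projected_size_px(0.5, 2.0, 500.0))  # 125.0
```

Doubling the distance halves the on-screen size, which is why accurate depth is what keeps virtual objects correctly scaled.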

How Occlusion Handling Enhances Realism

Occlusion handling enhances augmented reality realism by hiding virtual content wherever a real-world element is in front of it, so that the two layers blend into one consistent scene. In practice, the system compares the distance of the virtual surface with the distance of the real surface at each pixel, then masks or blends the virtual pixel accordingly. Depth sensing supplies the real-world distances, and occlusion handling turns them into visibility decisions. That conversion is what makes AR experiences immersive and believable.
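The masking-and-blending step can be sketched as a soft depth test. Instead of a hard visible/hidden decision, opacity ramps linearly over a small distance band around the real surface, which can reduce edge flicker when sensor depth is noisy. This is one way to soften occlusion edges, not the only one. The 5 cm `ramp_m` width and the function names are hypothetical.

```python
from typing import Optional, Tuple

RGB = Tuple[int, int, int]


def soft_alpha(virtual_d: Optional[float], real_d: Optional[float], ramp_m: float = 0.05) -> float:
    """Opacity of a virtual pixel: 1 when clearly in front, 0 when clearly behind.

    Within +/- ramp_m of the real surface the opacity ramps linearly
    (ramp_m is a tuning assumption, not a standard value).
    """
    if virtual_d is None:
        return 0.0  # nothing virtual to draw
    if real_d is None:
        return 1.0  # assumption: no real reading means no occluder
    t = 0.5 + (real_d - virtual_d) / (2.0 * ramp_m)
    return max(0.0, min(1.0, t))


def blend(camera_px: RGB, virtual_px: RGB, alpha: float) -> RGB:
    """Alpha-composite a virtual pixel over the camera pixel, channel by channel."""
    r, g, b = (round(alpha * v + (1.0 - alpha) * c) for c, v in zip(camera_px, virtual_px))
    return (r, g, b)


print(soft_alpha(1.0, 2.0))                       # 1.0 (virtual well in front)
print(soft_alpha(1.0, 1.0))                       # 0.5 (surfaces coincide)
print(blend((0, 0, 0), (200, 100, 50), 0.5))      # (100, 50, 25)
```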

Depth Sensing Techniques and Technologies

Depth sensing techniques in augmented reality leverage technologies such as LiDAR, structured light, and time-of-flight cameras to capture precise spatial information by measuring the distance between the sensor and objects in the environment. These methods enable accurate 3D mapping and real-time depth data acquisition, which are critical for rendering realistic occlusion effects and improving scene understanding. Advanced depth sensors enhance AR applications by providing detailed depth maps that facilitate seamless integration of virtual objects with physical surroundings.
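The distance measurements behind these sensors reduce to simple formulas, and the sketch below covers three of them. The first is direct time-of-flight, which derives distance from a pulse's round-trip time and is the principle used by many LiDAR units. The second is continuous-wave time-of-flight, which derives distance from the phase shift of a modulated signal. The third is triangulation, as used by stereo systems and, in a simplified rectified form, by structured-light systems. Real sensors add calibration and noise handling on top of these formulas. The function names and example values are illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def direct_tof_distance(round_trip_s: float) -> float:
    """Pulse travels to the surface and back, so distance is half the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def phase_tof_distance(phase_rad: float, modulation_hz: float) -> float:
    """Continuous-wave ToF: d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)


def phase_tof_max_range(modulation_hz: float) -> float:
    """Phase wraps at 2*pi, so distances beyond c / (2 f) alias."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


def triangulated_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Rectified stereo or structured light: Z = f * B / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


print(direct_tof_distance(10e-9))             # about 1.499 m
print(phase_tof_distance(math.pi, 20e6))      # about 3.747 m
print(phase_tof_max_range(20e6))              # about 7.495 m
print(triangulated_depth(600.0, 0.05, 15.0))  # about 2.0 m
```

The triangulation formula also shows why precision falls with distance: far surfaces produce small disparities, so a one-pixel error changes the depth estimate much more.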

Comparing Computational Approaches: Occlusion vs Depth Sensing

Occlusion handling in augmented reality relies on identifying and masking objects so that virtual elements appear correctly behind real-world objects, often through image segmentation and depth-buffer techniques. Depth sensing captures per-pixel distance data using sensors like LiDAR or structured light, providing precise spatial information for placing and occluding virtual content accurately. Computationally, occlusion handling without a depth sensor typically demands complex real-time image processing to infer which real objects are in front. Depth sensing, by contrast, offers more direct and reliable spatial measurements but requires specialized hardware.
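To make the contrast concrete, the sketch below drives occlusion with a segmentation mask instead of a depth map, as a camera-only system might. It assumes a per-pixel foreground mask produced by some segmentation model (not shown). Optionally, it also takes a single distance estimate for the whole segmented object. The mask source and the function name are hypothetical. Without per-pixel depth, the result is only as good as that one assumption about where the foreground sits.

```python
from typing import List, Optional


def hidden_by_segmentation(
    virtual_depth: List[List[Optional[float]]],
    foreground_mask: List[List[bool]],
    foreground_depth: Optional[float] = None,
) -> List[List[bool]]:
    """Return True where a virtual pixel should be hidden by a segmented real object.

    foreground_depth=None: pure 2D masking, the foreground is assumed to be in
    front of all virtual content. Otherwise the whole segmented object is
    treated as a flat occluder at foreground_depth metres.
    """
    hidden: List[List[bool]] = []
    for v_row, m_row in zip(virtual_depth, foreground_mask):
        hidden.append([
            v is not None and m and (foreground_depth is None or v > foreground_depth)
            for v, m in zip(v_row, m_row)
        ])
    return hidden


virtual = [[1.0, 3.0], [None, 2.0]]
mask = [[True, True], [True, False]]
print(hidden_by_segmentation(virtual, mask))       # [[True, True], [False, False]]
print(hidden_by_segmentation(virtual, mask, 2.0))  # [[False, True], [False, False]]
```

In the first call, the virtual pixel at 1.0 m is hidden even though it may really be in front of the person. A per-pixel depth map avoids exactly this kind of error.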

Use Cases: When to Prioritize Occlusion Handling

Occlusion handling is critical in augmented reality applications requiring realistic interactions between virtual objects and the real environment, such as interior design visualization and medical training simulations. Prioritize occlusion handling when accurate layering of virtual objects behind real-world elements enhances user immersion and spatial awareness. Depth sensing complements occlusion but may be less effective alone in complex scenes with overlapping objects or transparent surfaces.

Depth Sensing Challenges and Limitations in AR

Depth sensing in augmented reality faces several challenges. Accuracy can drop under changing or intense lighting; bright sunlight, for example, can swamp the infrared signal of active sensors. Transparent or reflective surfaces are also difficult to detect. Limited sensor range and resolution produce coarse or incomplete depth maps, which degrades the realism of occlusion handling, especially along object edges. Finally, the computational cost of processing depth in real time further limits the effectiveness of depth sensing on mobile AR devices.
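One common mitigation is to discard depth readings that are out of range or low-confidence, then fill small holes from neighbouring pixels before the map is used for occlusion. The sketch assumes one confidence value per pixel, normalized to [0, 1]. Some platforms, such as ARKit, expose a per-pixel confidence map alongside their depth data. The thresholds here are placeholders rather than recommended values, and the function names are hypothetical. Filtering cannot fix a glass surface that the sensor reports at the depth of whatever lies behind it, because that error looks like valid data.

```python
import math
from statistics import median
from typing import List, Optional

DepthMap = List[List[Optional[float]]]


def clean_depth(
    depth: DepthMap,
    confidence: List[List[float]],
    min_range_m: float = 0.2,  # placeholder sensor limits
    max_range_m: float = 5.0,
    min_confidence: float = 0.5,
) -> DepthMap:
    """Replace out-of-range, non-finite, or low-confidence readings with None."""
    cleaned: DepthMap = []
    for d_row, c_row in zip(depth, confidence):
        row: List[Optional[float]] = []
        for d, c in zip(d_row, c_row):
            ok = (
                d is not None
                and math.isfinite(d)
                and min_range_m <= d <= max_range_m
                and c >= min_confidence
            )
            row.append(d if ok else None)
        cleaned.append(row)
    return cleaned


def fill_holes(depth: DepthMap, min_neighbours: int = 3) -> DepthMap:
    """Fill each missing pixel with the median of its valid 3x3 neighbours.

    Neighbours are read from the input map, so filled values never cascade.
    """
    h = len(depth)
    w = len(depth[0]) if h else 0
    filled = [row[:] for row in depth]
    for y in range(h):
        for x in range(w):
            if depth[y][x] is not None:
                continue
            neighbours = [
                depth[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
                if depth[j][i] is not None
            ]
            if len(neighbours) >= min_neighbours:
                filled[y][x] = median(neighbours)
    return filled
```

Larger holes stay empty after filling, and the occlusion step can then treat them as "unknown" rather than as a surface.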

Future Trends in Occlusion Handling and Depth Sensing

Future trends in occlusion handling and depth sensing in augmented reality focus on enhancing spatial awareness and realism through AI-driven algorithms and neural rendering techniques. Wider integration of LiDAR sensors and time-of-flight cameras is expected to improve depth accuracy, enabling more seamless interaction between virtual and real objects. Machine learning models that run in real time are also expected to enable adaptive occlusion, improving both user immersion and device efficiency.

Conclusion: Integrating Occlusion and Depth Sensing for Next-Gen AR

Integrating occlusion handling with advanced depth sensing technologies enhances spatial awareness and realism in augmented reality experiences by accurately layering virtual objects within real-world environments. Utilizing depth data for precise occlusion allows AR systems to render scenes where digital content convincingly interacts with physical surroundings, improving immersion and user engagement. This synergy between occlusion techniques and depth sensing represents a critical step toward fully integrated, next-generation AR applications.

Occlusion Handling vs Depth Sensing Infographic

techiny.com