Remote rendering in augmented reality offloads complex graphics processing to powerful cloud servers, enabling high-quality visuals without taxing the device's limited hardware. On-device rendering processes graphics locally, providing lower latency and offline functionality but often sacrificing detail and performance due to hardware constraints. Choosing between remote and on-device rendering depends on factors like available bandwidth, device capability, and application requirements for responsiveness and visual fidelity.

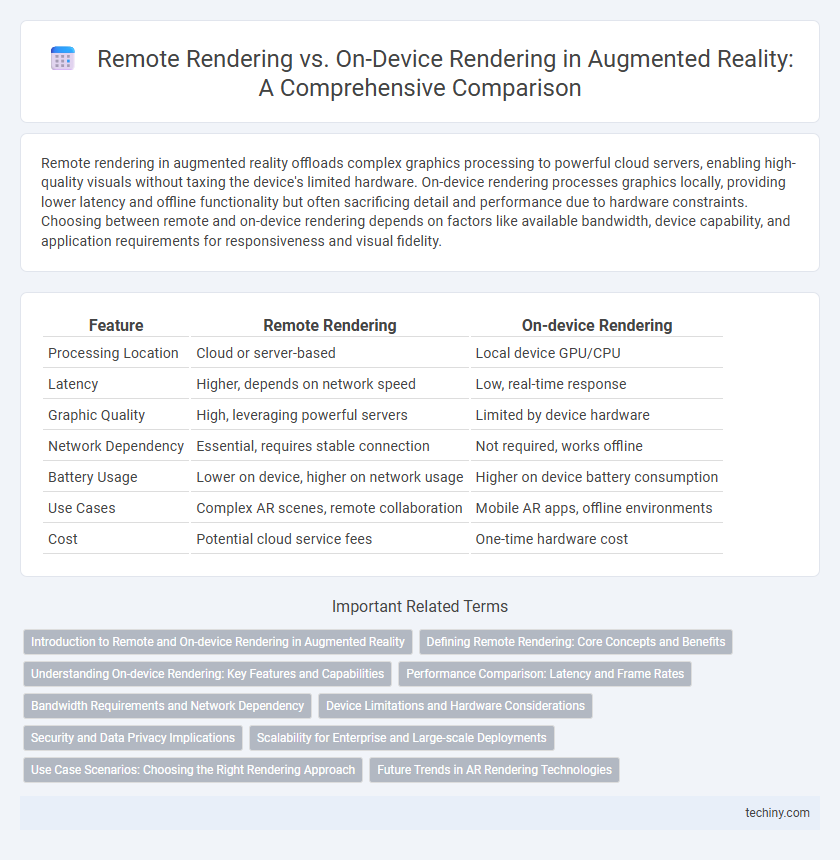

Table of Comparison

| Feature | Remote Rendering | On-device Rendering |
|---|---|---|
| Processing Location | Cloud or server-based | Local device GPU/CPU |
| Latency | Higher; depends on network round-trip time | Low; no network hop |
| Graphic Quality | High, leveraging powerful servers | Limited by device hardware |
| Network Dependency | Essential; requires a stable connection | Not required; works offline |
| Battery Usage | Lower compute drain on the device, but continuous network use adds its own | Higher, since the device does all rendering |
| Use Cases | Complex AR scenes, remote collaboration | Mobile AR apps, offline environments |
| Cost | Potential cloud service fees | One-time hardware cost |

Introduction to Remote and On-device Rendering in Augmented Reality

The two approaches differ in where the graphics work happens. Remote rendering computes 3D visuals on cloud servers and streams them to the device, enabling complex scenes without taxing local resources. On-device rendering performs all graphics computation on the AR hardware itself, offering lower latency and offline functionality within the limits of the device's processing power. The choice between them hinges on network reliability, computational demands, and the visual fidelity the AR application requires.

Defining Remote Rendering: Core Concepts and Benefits

Remote rendering in augmented reality processes complex 3D graphics on powerful cloud servers instead of the local device and streams the resulting high-fidelity frames to the AR headset in real time. Because every frame crosses the network, latency depends on connection quality and is typically higher than with local rendering. The approach works around the hardware limitations of AR devices, allowing richer, more detailed environments while moving the heaviest graphics work, and the battery drain that comes with it, off the device. Key benefits include scalability, centralized updates, and enhanced user experiences through offloading intense computational tasks from on-device resources.

Understanding On-device Rendering: Key Features and Capabilities

On-device rendering processes augmented reality graphics directly on the user's device, using its GPU and CPU to deliver real-time, low-latency experiences without relying on network connectivity. Key features include stronger privacy, since data stays on the device, along with offline functionality and reduced bandwidth consumption, which together keep interactions smooth in dynamic environments. Hardware acceleration and optimized software frameworks improve performance and battery efficiency, though the complexity of 3D models and the resolution of textures remain bounded by what the device can handle.

Performance Comparison: Latency and Frame Rates

Remote rendering uses powerful cloud servers to deliver high-quality visuals with minimal local processing, so it can sustain consistently high frame rates even on devices with limited hardware. The trade-off is latency: every frame involves a network round trip plus encoding and decoding, so end-to-end delay is higher and varies with network conditions. On-device rendering processes graphics directly on the AR headset or smartphone, which avoids the network hop and keeps latency low, but frame rates can drop or fluctuate depending on the device's GPU capability and thermal constraints. Optimizing performance means balancing the visual quality and stable frame rates of remote rendering against the independence and responsiveness of on-device rendering, with network stability and hardware power often deciding the outcome.
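To make the distinction between latency and frame rate concrete, here is a minimal Python sketch of a per-frame delay budget. The stage names, the `RemoteFrameDelays` class, and every millisecond value are illustrative assumptions, not measurements from any real system. It also assumes the remote stages are fully pipelined and that bandwidth is sufficient, so network transit adds delay without capping throughput.

```python
from dataclasses import dataclass, fields


@dataclass
class RemoteFrameDelays:
    """Hypothetical per-frame delays (milliseconds) for a streamed AR frame."""
    pose_uplink_ms: float     # head pose / input travelling to the server
    server_render_ms: float   # rendering on the server GPU
    encode_ms: float          # video encoding on the server
    frame_downlink_ms: float  # encoded frame travelling back to the device
    decode_ms: float          # video decoding on the device
    display_ms: float         # scan-out to the display

    def motion_to_photon_ms(self) -> float:
        # Latency is the sum of every stage a single frame passes through.
        return sum(getattr(self, f.name) for f in fields(self))

    def pipelined_fps_ceiling(self) -> float:
        # Assuming stages overlap across frames, throughput is bounded by the
        # slowest processing stage rather than by the total delay.
        slowest = max(self.server_render_ms, self.encode_ms, self.decode_ms)
        return 1000.0 / slowest


def local_motion_to_photon_ms(render_ms: float, display_ms: float) -> float:
    """On-device latency: no network, encode, or decode stages."""
    return render_ms + display_ms


# Illustrative numbers only.
remote = RemoteFrameDelays(10.0, 5.0, 4.0, 10.0, 3.0, 8.0)
remote_latency = remote.motion_to_photon_ms()
remote_fps_ceiling = remote.pipelined_fps_ceiling()
local_latency = local_motion_to_photon_ms(render_ms=14.0, display_ms=8.0)
```

With these made-up values the remote path has the higher latency (40 ms versus 22 ms) even though its fast server-side render stage would allow a higher frame rate, which is the trade-off this section describes.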

Bandwidth Requirements and Network Dependency

Remote rendering in augmented reality relies heavily on high bandwidth and a stable network connection to stream rendered frames from cloud servers to AR devices, enabling detailed visuals without taxing local hardware. On-device rendering processes graphics locally, which reduces bandwidth requirements, network dependency, and latency, but it is limited by the device's processing power. Choosing between remote and on-device rendering involves balancing network infrastructure capabilities with the desired AR experience quality and responsiveness.
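A rough way to size the bandwidth a streamed AR session needs is to start from the uncompressed video bitrate and divide by an assumed compression ratio. The sketch below is back-of-the-envelope arithmetic only; the function names, the 24 bits per pixel default, and the compression ratio of 200 are assumptions chosen for illustration, and real codecs vary widely with content and settings.

```python
def raw_bitrate_mbps(width: int, height: int, fps: int,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate in megabits per second."""
    return width * height * fps * bits_per_pixel / 1_000_000


def streamed_bitrate_mbps(width: int, height: int, fps: int,
                          compression_ratio: float,
                          bits_per_pixel: int = 24) -> float:
    """Estimated bitrate after compression by an assumed ratio."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return raw_bitrate_mbps(width, height, fps, bits_per_pixel) / compression_ratio


# 1080p at 60 frames per second, with a purely illustrative 200:1 ratio.
raw = raw_bitrate_mbps(1920, 1080, 60)
streamed = streamed_bitrate_mbps(1920, 1080, 60, compression_ratio=200)
print(f"raw: {raw:.1f} Mbps, streamed: {streamed:.1f} Mbps")
```

Under these assumptions the raw stream is about 2,986 Mbps and the compressed stream about 15 Mbps. Higher resolutions, stereo rendering for headsets, or higher frame rates scale the requirement proportionally.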

Device Limitations and Hardware Considerations

Remote rendering in augmented reality reduces the processing load on local devices by offloading complex graphics computations to powerful cloud servers, addressing device limitations such as limited CPU, GPU, and battery capacity. On-device rendering demands advanced hardware specifications including high-performance GPUs, ample RAM, and efficient thermal management to maintain real-time performance and low latency. Hardware considerations are critical for balancing quality and responsiveness, with remote rendering enabling richer experiences on lightweight, less capable AR devices.

Security and Data Privacy Implications

Remote rendering in augmented reality can improve security by keeping sensitive data on access-controlled cloud servers rather than on every device, reducing the impact of device-level breaches and unauthorized access. In exchange, data must travel over the network, which makes transmission security part of the risk picture. On-device rendering keeps data local, minimizing exposure to network vulnerabilities but requiring robust device security to prevent physical and software attacks. Balancing these approaches involves assessing encryption standards, data transmission risks, and compliance with privacy regulations such as GDPR and HIPAA.

Scalability for Enterprise and Large-scale Deployments

Remote rendering enhances scalability for enterprise and large-scale augmented reality deployments by offloading processing to powerful cloud servers, enabling consistent high-quality visuals across numerous devices without hardware limitations. On-device rendering struggles with scalability due to varying device capabilities and limited computational resources, impacting performance and user experience at scale. Enterprises benefit from remote rendering by seamlessly managing extensive AR workloads, reducing maintenance overhead, and ensuring uniform AR experiences across global deployments.

Use Case Scenarios: Choosing the Right Rendering Approach

Remote rendering excels in complex AR use cases like industrial design and medical imaging, where high-fidelity visuals and real-time interaction with detailed 3D models are critical. On-device rendering suits mobile AR applications such as gaming and retail, offering low latency and offline capabilities by leveraging local device hardware. Selecting the appropriate rendering approach depends on factors like network stability, device processing power, and the required visual quality for the specific AR experience.
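The selection factors above can be expressed as a small decision helper. This is a sketch under stated assumptions rather than a recommended policy: the `Conditions` fields, the `choose_rendering` function, and the default thresholds (20 Mbps, 50 ms round trip) are hypothetical and would need tuning for a real application.

```python
from dataclasses import dataclass


@dataclass
class Conditions:
    """Hypothetical inputs describing the device, network, and app needs."""
    bandwidth_mbps: float       # measured downlink bandwidth
    round_trip_ms: float        # measured network round-trip time
    scene_fits_on_device: bool  # can local hardware render the scene in real time?
    must_work_offline: bool     # does the app need to run without a connection?


def choose_rendering(c: Conditions,
                     min_bandwidth_mbps: float = 20.0,
                     max_round_trip_ms: float = 50.0) -> str:
    """Pick a rendering approach from the factors named in the text."""
    # Offline requirements rule out remote rendering entirely.
    if c.must_work_offline:
        return "on-device"
    # If the device can handle the scene, local rendering gives lower latency.
    if c.scene_fits_on_device:
        return "on-device"
    # The scene is too heavy for the device: stream it if the network allows.
    network_ok = (c.bandwidth_mbps >= min_bandwidth_mbps
                  and c.round_trip_ms <= max_round_trip_ms)
    if network_ok:
        return "remote"
    # Neither option is ideal; fall back to a simplified local scene.
    return "on-device (reduced detail)"


# Example: a detailed industrial model on a good connection.
design_review = choose_rendering(Conditions(80.0, 25.0, False, False))
# Example: a retail app that has to keep working in a store with poor coverage.
retail_app = choose_rendering(Conditions(2.0, 180.0, True, True))
```

Here `design_review` resolves to `"remote"` and `retail_app` to `"on-device"`, mirroring the industrial design and retail examples in the paragraph above.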

Future Trends in AR Rendering Technologies

Remote rendering leverages powerful cloud servers to deliver high-quality AR visuals and complex scenes beyond the limitations of on-device hardware, though its latency remains tied to the network. On-device rendering advances with more efficient GPUs and AI-driven optimization, allowing for real-time, low-latency interaction without constant internet dependency. Future trends indicate a hybrid approach combining edge computing and 5G networks to balance performance, power consumption, and seamless user experiences in augmented reality applications.

Remote Rendering vs On-device Rendering Infographic

techiny.com
